This is the multi-page printable view of this section. Click here to print.

Continuous data export

1 - .create or alter continuous-export

Creates or alters a continuous export job.

Permissions

You must have at least Database Admin permissions to run this command.

Syntax

.create-or-alter continuous-export continuousExportName [over (T1, T2 )] to table externalTableName [with (propertyName = propertyValue [, …])] <| query

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| continuousExportName | string | ✔️ | The name of the continuous export. Must be unique within the database. |

| externalTableName | string | ✔️ | The name of the external table export target. |

| query | string | ✔️ | The query to export. |

| T1, T2 | string | A comma-separated list of fact tables in the query. If not specified, all tables referenced in the query are assumed to be fact tables. If specified, tables not in this list are treated as dimension tables and aren’t scoped, so all records participate in all exports. See continuous data export overview for details. | |

| propertyName, propertyValue | string | A comma-separated list of optional properties. |

Supported properties

| Property | Type | Description |

|---|---|---|

intervalBetweenRuns | Timespan | The time span between continuous export executions. Must be greater than 1 minute. |

forcedLatency | Timespan | An optional period of time to limit the query to records ingested before a specified period relative to the current time. This property is useful if, for example, the query performs some aggregations or joins, and you want to make sure all relevant records have been ingested before running the export. |

sizeLimit | long | The size limit in bytes of a single storage artifact written before compression. Valid range: 100 MB (default) to 1 GB. |

distributed | bool | Disable or enable distributed export. Setting to false is equivalent to single distribution hint. Default is true. |

parquetRowGroupSize | int | Relevant only when data format is Parquet. Controls the row group size in the exported files. Default row group size is 100,000 records. |

managedIdentity | string | The managed identity for which the continuous export job runs. The managed identity can be an object ID, or the system reserved word. For more information, see Use a managed identity to run a continuous export job. |

isDisabled | bool | Disable or enable the continuous export. Default is false. |

Example

The following example creates or alters a continuous export MyExport that exports data from the T table to ExternalBlob. The data exports occur every hour, and have a defined forced latency and size limit per storage artifact.

.create-or-alter continuous-export MyExport

over (T)

to table ExternalBlob

with

(intervalBetweenRuns=1h,

forcedLatency=10m,

sizeLimit=104857600)

<| T

| Name | ExternalTableName | Query | ForcedLatency | IntervalBetweenRuns | CursorScopedTables | ExportProperties |

|---|---|---|---|---|---|---|

| MyExport | ExternalBlob | S | 00:10:00 | 01:00:00 | [ “[‘DB’].[‘S’]" ] | { “SizeLimit”: 104857600 } |

Related content

2 - .drop continuous-export

Drops a continuous-export job.

Permissions

You must have at least Database Admin permissions to run this command.

Syntax

.drop continuous-export ContinuousExportName

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| ContinuousExportName | string | ✔️ | The name of the continuous export. |

Returns

The remaining continuous exports in the database (post deletion). Output schema as in the show continuous export command.

Related content

3 - .show continuous data-export failures

Returns all failures logged as part of the continuous export within the past 14 days. To view only a specific time range, filter the results by the Timestamp column.

The command doesn’t return any results if executed on a follower database, it must be executed against the leader database.

The command doesn’t return any results if executed on a database shortcut, it must be executed against the leader database.

Permissions

You must have at least Database Monitor or Database Admin permissions to run this command. For more information, see role-based access control.

Syntax

.show continuous-export ContinuousExportName failures

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| ContinuousExportName | string | ✔️ | The name of the continuous export. |

Returns

| Output parameter | Type | Description |

|---|---|---|

| Timestamp | datetime | Timestamp of the failure. |

| OperationId | string | Operation ID of the failure. |

| Name | string | Continuous export name. |

| LastSuccessRun | Timestamp | The last successful run of the continuous export. |

| FailureKind | string | Failure/PartialFailure. PartialFailure indicates some artifacts were exported successfully before the failure occurred. |

| Details | string | Failure error details. |

Example

The following example shows failures from the continuous export MyExport.

.show continuous-export MyExport failures

Output

| Timestamp | OperationId | Name | LastSuccessRun | FailureKind | Details |

|---|---|---|---|---|---|

| 2019-01-01 11:07:41.1887304 | ec641435-2505-4532-ba19-d6ab88c96a9d | MyExport | 2019-01-01 11:06:35.6308140 | Failure | Details… |

Related content

4 - .show continuous-export

Returns the properties of a specified continuous export or all continuous exports in the database.

Permissions

You must have at least Database User, Database Viewer, or Database Monitor permissions to run this command. For more information, see role-based access control.

Syntax

.show continuous-export ContinuousExportName

.show continuous-exports

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| ContinuousExportName | string | ✔️ | The name of the continuous export. |

Returns

| Output parameter | Type | Description |

|---|---|---|

| CursorScopedTables | string | The list of explicitly scoped (fact) tables (JSON serialized). |

| ExportProperties | string | The export properties (JSON serialized). |

| ExportedTo | datetime | The last datetime (ingestion time) that was exported successfully. |

| ExternalTableName | string | The external table name. |

| ForcedLatency | timeSpan | The forced latency timespan, if defined. Returns Null if no timespan is defined. |

| IntervalBetweenRuns | timeSpan | The interval between runs. |

| IsDisabled | bool | A boolean value indicating whether the continuous export is disabled. |

| IsRunning | bool | A boolean value indicating whether the continuous export is currently running. |

| LastRunResult | string | The results of the last continuous-export run (Completed or Failed). |

| LastRunTime | datetime | The last time the continuous export was executed (start time) |

| Name | string | The name of the continuous export. |

| Query | string | The export query. |

| StartCursor | string | The starting point of the first execution of this continuous export. |

Related content

5 - .show continuous-export exported-artifacts

Returns all artifacts exported by the continuous-export in all runs. Filter the results by the Timestamp column in the command to view only records of interest. The history of exported artifacts is retained for 14 days.

The command doesn’t return any results if executed on a follower database, it must be executed against the leader database.

The command doesn’t return any results if executed on a database shortcut, it must be executed against the leader database.

Permissions

You must have at least Database Monitor or Database Admin permissions to run this command. For more information, see role-based access control.

Syntax

.show continuous-export ContinuousExportName exported-artifacts

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| ContinuousExportName | string | ✔️ | The name of the continuous export. |

Returns

| Output parameter | Type | Description |

|---|---|---|

| Timestamp | datetime | THe tTimestamp of the continuous export run |

| ExternalTableName | string | Name of the external table |

| Path | string | Output path |

| NumRecords | long | Number of records exported to path |

Example

The following example shows retrieved artifacts from the continuous export MyExport that were exported within the last hour.

.show continuous-export MyExport exported-artifacts | where Timestamp > ago(1h)

Output

| Timestamp | ExternalTableName | Path | NumRecords | SizeInBytes |

|---|---|---|---|---|

| 2018-12-20 07:31:30.2634216 | ExternalBlob | http://storageaccount.blob.core.windows.net/container1/1_6ca073fd4c8740ec9a2f574eaa98f579.csv | 10 | 1024 |

Related content

6 - Continuous data export

This article describes continuous export of data from Kusto to an external table with a periodically run query. The results are stored in the external table, which defines the destination, such as Azure Blob Storage, and the schema of the exported data. This process guarantees that all records are exported “exactly once”, with some exceptions.

By default, continuous export runs in a distributed mode, where all nodes export concurrently, so the number of artifacts depends on the number of nodes. Continuous export isn’t designed for low-latency streaming data.

To enable continuous data export, create an external table and then create a continuous export definition pointing to the external table.

In some cases, you must use a managed identity to successfully configure a continuous export job. For more information, see Use a managed identity to run a continuous export job.

Permissions

All continuous export commands require at least Database Admin permissions.

Continuous export guidelines

Output schema:

- The output schema of the export query must match the schema of the external table to which you export.

Frequency:

Continuous export runs according to the time period configured for it in the

intervalBetweenRunsproperty. The recommended value for this interval is at least several minutes, depending on the latencies you’re willing to accept. The time interval can be as low as one minute, if the ingestion rate is high.[!NOTE] The

intervalBetweenRunsserves as a recommendation only, and isn’t guaranteed to be precise. Continuous export isn’t suitable for exporting periodic aggregations. For example, a configuration ofintervalBetweenRuns=1hwith an hourly aggregation (T | summarize by bin(Timestamp, 1h)) won’t work as expected, since the continuous export won’t run exactly on-the-hour. Therefore, each hourly bin will receive multiple entries in the exported data.

Number of files:

- The number of files exported in each continuous export iteration depends on how the external table is partitioned. For more information, see export to external table command. Each continuous export iteration always writes to new files, and never appends to existing ones. As a result, the number of exported files also depends on the frequency in which the continuous export runs. The frequency parameter is

intervalBetweenRuns.

- The number of files exported in each continuous export iteration depends on how the external table is partitioned. For more information, see export to external table command. Each continuous export iteration always writes to new files, and never appends to existing ones. As a result, the number of exported files also depends on the frequency in which the continuous export runs. The frequency parameter is

External table storage accounts:

- For best performance, the database and the storage accounts should be colocated in the same Azure region.

- Continuous export works in a distributed manner, such that all nodes are exporting concurrently. On large databases, and if the exported data volume is large, this might lead to storage throttling. The recommendation is to configure multiple storage accounts for the external table. For more information, see storage failures during export commands.

Exactly once export

To guarantee “exactly once” export, continuous export uses database cursors. The continuous export query shouldn’t include a timestamp filter - the database cursors mechanism ensures that records aren’t processed more than once. Adding a timestamp filter in the query can lead to missing data in exported data.

IngestionTime policy must be enabled on all tables referenced in the query that should be processed “exactly once” in the export. The policy is enabled by default on all newly created tables.

The guarantee for “exactly once” export is only for files reported in the show exported artifacts command. Continuous export doesn’t guarantee that each record is written only once to the external table. If a failure occurs after export begins and some of the artifacts were already written to the external table, the external table might contain duplicates. If a write operation was aborted before completion, the external table might contain corrupted files. In such cases, artifacts aren’t deleted from the external table, but they aren’t reported in the show exported artifacts command. Consuming the exported files using the show exported artifacts command guarantees no duplications and no corruptions.

Export from fact and dimension tables

By default, all tables referenced in the export query are assumed to be fact tables. As such, they’re scoped to the database cursor. The syntax explicitly declares which tables are scoped (fact) and which aren’t scoped (dimension). See the over parameter in the create command for details.

The export query includes only the records that joined since the previous export execution. The export query might contain dimension tables in which all records of the dimension table are included in all export queries. When using joins between fact and dimension tables in continuous-export, keep in mind that records in the fact table are only processed once. If the export runs while records in the dimension tables are missing for some keys, records for the respective keys are either missed or include null values for the dimension columns in the exported files. Returning missed or null records depends on whether the query uses inner or outer join. The forcedLatency property in the continuous-export definition can be useful in such cases, where the fact and dimensions tables are ingested during the same time for matching records.

Monitor continuous export

Monitor the health of your continuous export jobs using the following export metrics:

Continuous export max lateness- Max lateness (in minutes) of continuous exports in the database. This is the time between now and the minExportedTotime of all continuous export jobs in database. For more information, see.show continuous exportcommand.Continuous export result- Success/failure result of each continuous export execution. This metric can be split by the continuous export name.

Use the .show continuous export failures command to see the specific failures of a continuous export job.

Resource consumption

- The impact of the continuous export on the database depends on the query the continuous export is running. Most resources, such as CPU and memory, are consumed by the query execution.

- The number of export operations that can run concurrently is limited by the database’s data export capacity. For more information, see Management commands throttling. If the database doesn’t have sufficient capacity to handle all continuous exports, some start lagging behind.

- The show commands-and-queries command can be used to estimate the resources consumption.

- Filter on

| where ClientActivityId startswith "RunContinuousExports"to view the commands and queries associated with continuous export.

- Filter on

Export historical data

Continuous export starts exporting data only from the point of its creation. Records ingested before that time should be exported separately using the non-continuous export command. Historical data might be too large to be exported in a single export command. If needed, partition the query into several smaller batches.

To avoid duplicates with data exported by continuous export, use StartCursor returned by the show continuous export command and export only records where cursor_before_or_at the cursor value. For example:

.show continuous-export MyExport | project StartCursor

| StartCursor |

|---|

| 636751928823156645 |

Followed by:

.export async to table ExternalBlob

<| T | where cursor_before_or_at("636751928823156645")

Continuous export from a table with Row Level Security

To create a continuous export job with a query that references a table with Row Level Security policy, you must:

- Provide a managed identity as part of the continuous export configuration. For more information, see Use a managed identity to run a continuous export job.

- Use impersonation authentication for the external table to which the data is exported.

Continuous export to delta table - Preview

Continuous export to a delta table is currently in preview.

To define continuous export to a delta table, do the following steps:

Create an external delta table, as described in Create and alter delta external tables on Azure Storage.

[!NOTE] If the schema isn’t provided, Kusto will try infer it automatically if there is already a delta table defined in the target storage container.

Delta table partitioning isn’t supported.Define continuous export to this table using the commands described in Create or alter continuous export.

[!IMPORTANT] The schema of the delta table must be in sync with the continuous export query. If the underlying delta table changes, the export might start failing with unexpected behavior.

Limitations

General:

- The following formats are allowed on target tables:

CSV,TSV,JSON, andParquet. - Continuous export isn’t designed to work over materialized views, since a materialized view might be updated, while data exported to storage is always appended and never updated.

- Continuous export can’t be created on follower databases since follower databases are read-only and continuous export requires write operations.

- Records in source table must be ingested to the table directly, using an update policy, or ingest from query commands. If records are moved into the table using .move extents or using .rename table, continuous export might not process these records. See the limitations described in the Database Cursors page.

- If the artifacts used by continuous export are intended to trigger Event Grid notifications, see the known issues section in the Event Grid documentation.

Cross-database and cross-cluster:

- Continuous export doesn’t support cross-cluster calls.

- Continuous export supports cross-database calls only for dimension tables. All fact tables must reside in the local database. See more details in Export from fact and dimension tables.

- If the continuous export includes cross-database calls, it must be configured with a managed identity.

Cross-database and cross-Eventhouse:

- Continuous export doesn’t support cross-Eventhouse calls.

- Continuous export supports cross-database calls only for dimension tables. All fact tables must reside in the local database. See more details in Export from fact and dimension tables.

Policies:

Continuous export can’t be enabled on a table with Row Level Security policy unless specific conditions are met. For more information, see Continuous export from a table with Row Level Security.

Continuous export can’t be configured on a table with restricted view access policy.

Continuous export can’t be configured on a table with restricted view access policy.

Related content

7 - Enable or disable continuous data export

Disables or enables the continuous-export job. A disabled continuous export isn’t executed, but its current state is persisted and can be resumed when the continuous export is enabled.

When enabling a continuous export that was disabled for a long time, exporting continues from where it last stopped when the exporting was disabled. This continuation might result in a long running export, blocking other exports from running, if there isn’t sufficient database capacity to serve all processes. Continuous exports are executed by last run time in ascending order so the oldest export runs first, until catch up is complete.

Permissions

You must have at least Database Admin permissions to run these commands.

Syntax

.enable continuous-export ContinuousExportName

.disable continuous-export ContinuousExportName

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| ContinuousExportName | string | ✔️ | The name of the continuous export. |

Returns

The result of the show continuous export command of the altered continuous export.

Related content

8 - Use a managed identity to run a continuous export job

- sfi-image-nochange

- sfi-ropc-nochange

Use a managed identity to run a continuous export job

A continuous export job exports data to an external table with a periodically run query.

The continuous export job should be configured with a managed identity in the following scenarios:

- When the external table uses impersonation authentication

- When the query references tables in other databases

- When the query references tables with an enabled row level security policy

A continuous export job configured with a managed identity is performed on behalf of the managed identity.

In this article, you learn how to configure a system-assigned or user-assigned managed identity and create a continuous export job using that identity.

Prerequisites

- A cluster and database. Create a cluster and database.

- All Databases Admin permissions on the database.

Configure a managed identity

There are two types of managed identities:

System-assigned: A system-assigned identity is connected to your cluster and is removed when the cluster is removed. Only one system-assigned identity is allowed per cluster.

User-assigned: A user-assigned managed identity is a standalone Azure resource. Multiple user-assigned identities can be assigned to your cluster.

Select one of the following tabs to set up your preferred managed identity type.

User-assigned

Follow the steps to Add a user-assigned identity.

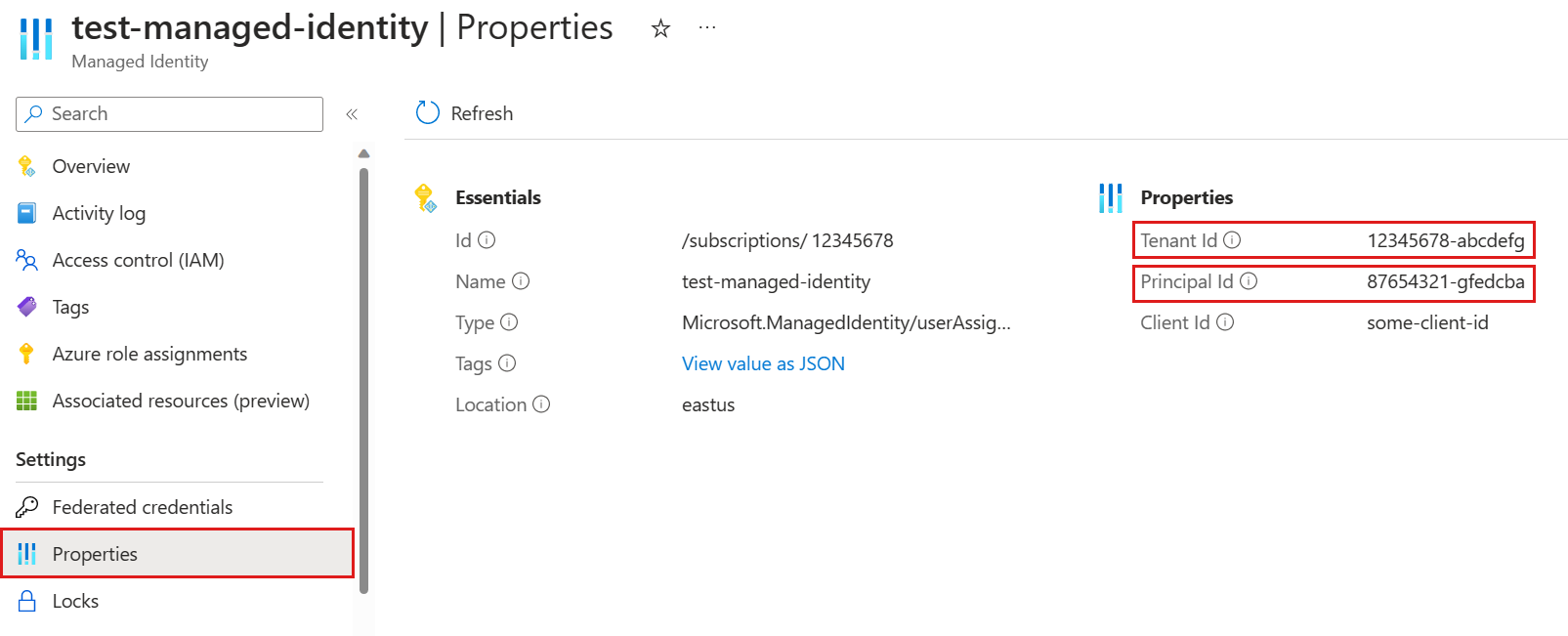

In the Azure portal, in the left menu of your managed identity resource, select Properties. Copy and save the Tenant Id and Principal Id for use in the following steps.

Run the following .alter-merge policy managed_identity command, replacing

<objectId>with the managed identity object ID from the previous step. This command sets a managed identity policy on the cluster that allows the managed identity to be used with continuous export..alter-merge cluster policy managed_identity ```[ { "ObjectId": "<objectId>", "AllowedUsages": "AutomatedFlows" } ]```[!NOTE] To set the policy on a specific database, use

database <DatabaseName>instead ofcluster.Run the following command to grant the managed identity Database Viewer permissions over all databases used for the continuous export, such as the database that contains the external table.

.add database <DatabaseName> viewers ('aadapp=<objectId>;<tenantId>')Replace

<DatabaseName>with the relevant database,<objectId>with the managed identity Principal Id from step 2, and<tenantId>with the Microsoft Entra ID Tenant Id from step 2.

System-assigned

Follow the steps to Add a system-assigned identity.

Copy and save the Object (principal) ID for use in a later step.

Run the following .alter-merge policy managed_identity command. This command sets a managed identity policy on the cluster that allows the managed identity to be used with continuous export.

.alter-merge cluster policy managed_identity ```[ { "ObjectId": "system", "AllowedUsages": "AutomatedFlows" } ]```[!NOTE] To set the policy on a specific database, use

database <DatabaseName>instead ofcluster.Run the following command to grant the managed identity Database Viewer permissions over all databases used for the continuous export, such as the database that contains the external table.

.add database <DatabaseName> viewers ('aadapp=<objectId>')Replace

<DatabaseName>with the relevant database and<objectId>with the managed identity Object (principal) ID from step 2.

Set up an external table

External tables refer to data located in Azure Storage, such as Azure Blob Storage, Azure Data Lake Storage Gen1, Azure Data Lake Storage Gen2, or SQL Server.

Select one of the following tabs to set up an Azure Storage or SQL Server external table.

Azure Storage

Create a connection string based on the storage connection string templates. This string indicates the resource to access and its authentication information. For continuous export flows, we recommend impersonation authentication.

Run the .create or .alter external table command to create the table. Use the connection string from the previous step as the storageConnectionString argument.

For example, the following command creates

MyExternalTablethat refers to CSV-formatted data inmycontainerofmystorageaccountin Azure Blob Storage. The table has two columns, one for an integerxand one for a strings. The connection string ends with;impersonate, which indicates to use impersonation authentication to access the data store..create external table MyExternalTable (x:int, s:string) kind=storage dataformat=csv ( h@'https://mystorageaccount.blob.core.windows.net/mycontainer;impersonate' )Grant the managed identity write permissions over the relevant external data store. The managed identity needs write permissions because the continuous export job exports data to the data store on behalf of the managed identity.

External data store Required permissions Grant the permissions Azure Blob Storage Storage Blob Data Contributor Assign an Azure role Data Lake Storage Gen2 Storage Blob Data Contributor Assign an Azure role Data Lake Storage Gen1 Contributor Assign an Azure role

SQL Server

Create a SQL Server connection string. This string indicates the resource to access and its authentication information. For continuous export flows, we recommend Microsoft Entra integrated authentication, which is impersonation authentication.

Run the .create or .alter external table command to create the table. Use the connection string from the previous step as the sqlServerConnectionString argument.

For example, the following command creates

MySqlExternalTablethat refers toMySqlTabletable inMyDatabaseof SQL Server. The table has two columns, one for an integerxand one for a strings. The connection string contains;Authentication=Active Directory Integrated, which indicates to use impersonation authentication to access the table..create external table MySqlExternalTable (x:int, s:string) kind=sql table=MySqlTable ( h@'Server=tcp:myserver.database.windows.net,1433;Authentication=Active Directory Integrated;Initial Catalog=MyDatabase;' )Grant the managed identity CREATE, UPDATE, and INSERT permissions over the SQL Server database. The managed identity needs write permissions because the continuous export job exports data to the database on behalf of the managed identity. To learn more, see Permissions.

Create a continuous export job

Select one of the following tabs to create a continuous export job that runs on behalf of a user-assigned or system-assigned managed identity.

User-assigned

Run the .create-or-alter continuous-export command with the managedIdentity property set to the managed identity object ID.

For example, the following command creates a continuous export job named MyExport to export the data in MyTable to MyExternalTable on behalf of a user-assigned managed identity. <objectId> should be a managed identity object ID.

.create-or-alter continuous-export MyExport over (MyTable) to table MyExternalTable with (managedIdentity=<objectId>, intervalBetweenRuns=5m) <| MyTable

System-assigned

Run the .create-or-alter continuous-export command with the managedIdentity property set to system.

For example, the following command creates a continuous export job named MyExport to export the data in MyTable to MyExternalTable on behalf of your system-assigned managed identity.

.create-or-alter continuous-export MyExport over (MyTable) to table MyExternalTable with (managedIdentity="system", intervalBetweenRuns=5m) <| MyTable