This is the multi-page printable view of this section. Click here to print.

Policies

- 1: Policies overview

- 2: Auto delete

- 2.1: Auto delete policy

- 3: Caching

- 4: Callout

- 4.1: Callout policy

- 5: Capacity

- 5.1: Capacity policy

- 6: Encoding policy

- 6.1: Encoding policy

- 7: Extent tags policy

- 8: Ingestion batching

- 9: Ingestion time

- 9.1: IngestionTime policy

- 10: Managed identity

- 11: Merge policy

- 11.1: Extents merge policy

- 12: Mirroring policy

- 12.1: Mirroring policy

- 13: Partitioning policy

- 13.1: Partitioning policy

- 14: Query acceleration policy

- 15: Query week consistency policy

- 16: Restricted view access

- 17: Retention policy

- 17.1: Retention policy

- 18: Row level security policy

- 19: Row order policy

- 19.1: Row order policy

- 20: Sandbox policy

- 20.1: Sandbox policy

- 20.2: Sandboxes

- 21: Sharding policy

- 21.1: Data sharding policy

- 22: Streaming ingestion policy

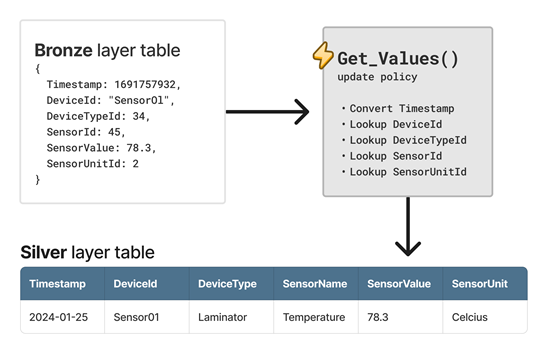

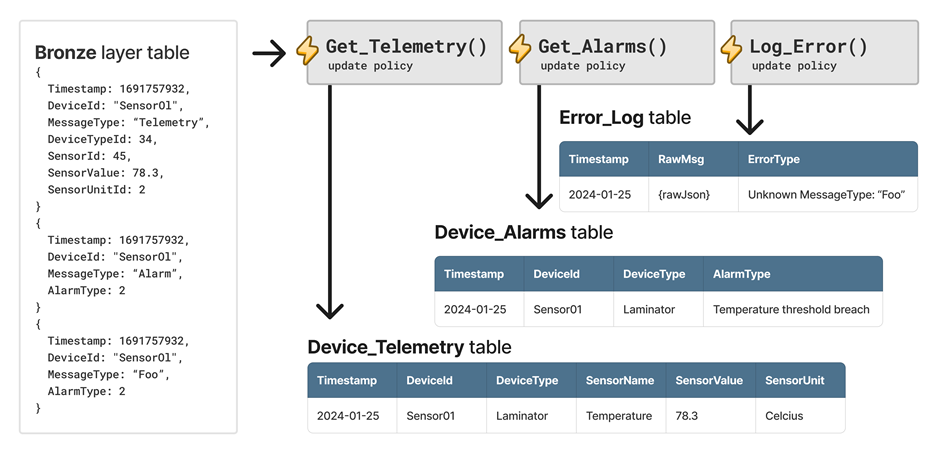

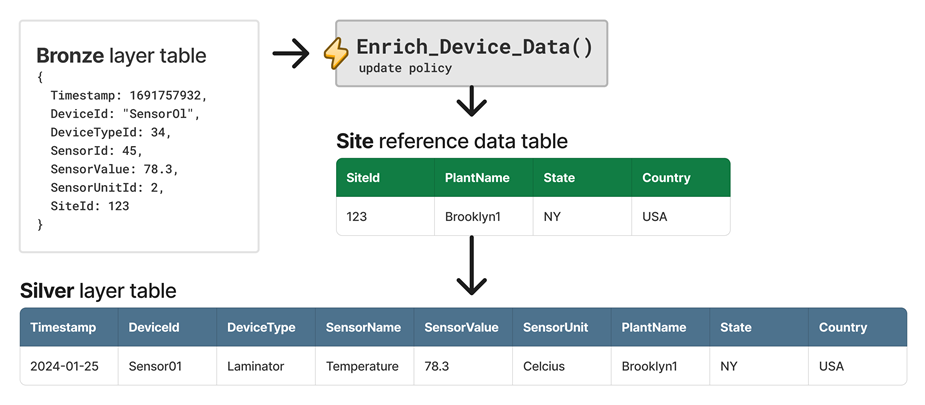

- 23: Update policy

1 - Policies overview

The following table provides an overview of the policies for managing your environment:

| Policy | Description |

|---|---|

| Auto delete policy | Sets an expiry date for the table. The table is automatically deleted at this expiry time. |

| Cache policy | Defines how to prioritize resources. Allows customers to differentiate between hot data cache and cold data cache. |

| Callout policy | Manages the authorized domains for external calls. |

| Capacity policy | Controls the compute resources of data management operations. |

| Encoding policy | Defines how data is encoded, compressed, and indexed. |

| Extent tags retention policy | Controls the mechanism that automatically removes extent tags from tables. |

| Ingestion batching policy | Groups multiple data ingestion requests into batches for more efficient processing. |

| Ingestion time policy | Adds a hidden datetime column to the table that records the time of ingestion. |

| ManagedIdentity policy | Controls which managed identities can be used for what purposes. |

| Merge policy | Defines rules for merging data from different extents into a single extent. |

| Mirroring policy | Allows you to manage your mirroring policy and mirroring policy operations. |

| Partitioning policy | Defines rules for partitioning extents for a specific table or a materialized view. |

| Retention policy | Controls the mechanism that automatically removes data from tables or materialized views. |

| Restricted view access policy | Adds an extra layer of permission requirements for principals to access and view the table. |

| Row level security policy | Defines rules for access to rows in a table based on group membership or execution context. |

| Row order policy | Maintains a specific order for rows within an extent. |

| Sandbox policy | Controls the usage and behavior of sandboxes, which are isolated environments for query execution. |

| Sharding policy | Defines rules for how extents are created. |

| Streaming ingestion policy | Configurations for streaming data ingestion. |

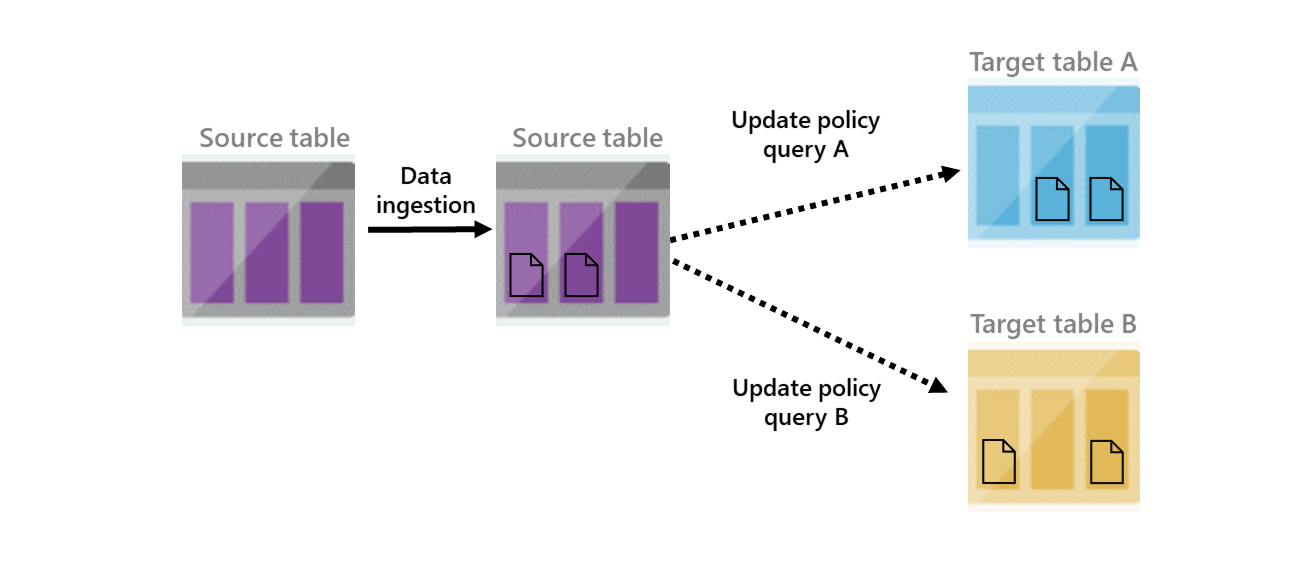

| Update policy | Allows for data to be appended to a target table upon adding data to a source table. |

| Query weak consistency policy | Controls the level of consistency for query results. |

2 - Auto delete

2.1 - Auto delete policy

An auto delete policy on a table sets an expiry date for the table. The table is automatically deleted at this expiry time. Unlike the retention policy, which determines when data (extents) are removed from a table, the auto delete policy drops the entire table.

The auto delete policy can be useful for temporary staging tables. Temporary staging tables are used for data preparation, until the data is moved to its permanent location. We recommend explicitly dropping temporary tables when they’re no longer needed. Only use the auto delete policy as a fallback mechanism in case the explicit deletion doesn’t occur.

Policy object

An auto delete policy includes the following properties:

ExpiryDate:

- Date and time value indicating when the table should be deleted.

- The deletion time is imprecise, and could occur few hours later than the time specified in the ExpiryDate property.

- The value specified can’t be null and it must be greater than current time.

DeleteIfNotEmpty:

- Boolean value indicating whether table should be dropped even if there are still extents in it.

- Defaults to

false.

Related content

For more information, see auto delete policy commands.

3 - Caching

3.1 - Caching policy (hot and cold cache)

To ensure fast query performance, a multi-tiered data cache system is used. Data is stored in reliable storage but parts of it are cached on processing nodes, SSD, or even in RAM for faster access.

The caching policy allows you to choose which data should be cached. You can differentiate between hot data cache and cold data cache by setting a caching policy on hot data. Hot data is kept in local SSD storage for faster query performance, while cold data is stored in reliable storage, which is cheaper but slower to access.

The cache uses 95% of the local SSD disk for hot data. If there isn’t enough space, the most recent data is preferentially kept in the cache. The remaining 5% is used for data that isn’t categorized as hot. This design ensures that queries loading lots of cold data won’t evict hot data from the cache.

The best query performance is achieved when all ingested data is cached. However, certain data might not warrant the expense of being kept in the hot cache. For instance, infrequently accessed old log records might be considered less crucial. In such cases, teams often opt for lower querying performance over paying to keep the data warm.

Use management commands to alter the caching policy at the database, table, or materialized view level.

Use management commands to alter the caching policy at the cluster, database, table, or materialized view level.

How caching policy is applied

When data is ingested, the system keeps track of the date and time of the ingestion, and of the extent that was created. The extent’s ingestion date and time value (or maximum value, if an extent was built from multiple preexisting extents), is used to evaluate the caching policy.

By default, the effective policy is null, which means that all the data is considered hot. A null policy at the table level means that the policy is inherited from the database. A non-null table-level policy overrides a database-level policy.

Scoping queries to hot cache

When running queries, you can limit the scope to only query data in hot cache.

There are several query possibilities:

- Add a client request property called

query_datascopeto the query. Possible values:default,all, andhotcache. - Use a

setstatement in the query text:set query_datascope='...'. Possible values are the same as for the client request property. - Add a

datascope=...text immediately after a table reference in the query body. Possible values areallandhotcache.

The default value indicates use of the default settings, which determine that the query should cover all data.

If there’s a discrepancy between the different methods, then set takes precedence over the client request property. Specifying a value for a table reference takes precedence over both.

For example, in the following query, all table references use hot cache data only, except for the second reference to “T” that is scoped to all the data:

set query_datascope="hotcache";

T | union U | join (T datascope=all | where Timestamp < ago(365d)) on X

Caching policy vs retention policy

Caching policy is independent of retention policy:

- Caching policy defines how to prioritize resources. Queries for important data are faster.

- Retention policy defines the extent of the queryable data in a table/database (specifically,

SoftDeletePeriod).

Configure this policy to achieve the optimal balance between cost and performance, based on the expected query pattern.

Example:

SoftDeletePeriod= 56dhot cache policy= 28d

In the example, the last 28 days of data is stored on the SSD and the additional 28 days of data is stored in Azure blob storage. You can run queries on the full 56 days of data.

Related content

4 - Callout

4.1 - Callout policy

Your cluster can communicate with external services in many different scenarios. Cluster administrators can manage the authorized domains for external calls by updating the cluster’s callout policy.

Supported properties of a callout

A callout policy is composed of the following properties:

| Name | Type | Description |

|---|---|---|

| CalloutType | string | Defines the type of callout, and can be one of types listed in callout types. |

| CalloutUriRegex | string | Specifies the regular expression whose matches represent the domain of resources of the callout domain. |

| CanCall | bool | Whether the callout is permitted or denied external calls. |

Types of callout

Callout policies are managed at cluster-level and are classified into the following types:

| Callout policy type | Description |

|---|---|

| kusto | Controls cross-cluster queries. |

| sql | Controls the SQL plugin. |

| mysql | Controls the MySQL plugin. |

| postgresql | Controls the PostgreSql plugin. |

| azure_digital_twins | Controls the Azure Digital Twins plugin. |

| cosmosdb | Controls the Cosmos DB plugin. |

| sandbox_artifacts | Controls sandboxed plugins (python and R). |

| external_data | Controls access to external data through external tables or externaldata operator. |

| webapi | Controls access to http endpoints. |

| azure_openai | Controls calls to Azure OpenAI plugins such as the embedding plugin ai_embeddings plugin. |

Predefined callout policies

The following table shows a set of predefined callout policies that are preconfigured on your cluster to enable callouts to selected services:

| Service | Designation | Permitted domains |

|---|---|---|

| Kusto | Cross cluster queries | [a-z0-9]{3,22}\\.(\\w+\\.)?kusto(mfa)?\\.windows\\.net/?$ |

| Kusto | Cross cluster queries | `^https://[a-z0-9]{3,22}\.[a-z0-9-]{1,50}\.(kusto\.azuresynapse |

| Kusto | Cross cluster queries | `^https://([A-Za-z0-9]+\.)?(ade |

| Azure DB | SQL requests | [a-z0-9][a-z0-9\\-]{0,61}[a-z0-9]?\\.database\\.windows\\.net/?$ |

| Synapse Analytics | SQL requests | [a-z0-9-]{0,61}?(-ondemand)?\\.sql\\.azuresynapse(-dogfood)?\\.net/?$ |

| External Data | External data | .* |

| Azure Digital Twins | Azure Digital Twins | [A-Za-z0-9\\-]{3,63}\\.api\\.[A-Za-z0-9]+\\.digitaltwins\\.azure\\.net/?$ |

More predefined policies on your cluster may be observed with next query:

.show cluster policy callout

| where EntityType == 'Cluster immutable policy'

| project Policy

Remarks

If an external resource of a given type matches more than one policy defined for such type, and at least one of the matched policies has their CanCall property set to false, access to the resource is denied.

Related content

5 - Capacity

5.1 - Capacity policy

A capacity policy is used for controlling the compute resources of data management operations on the cluster.

The capacity policy object

The capacity policy is made of the following components:

- IngestionCapacity

- ExtentsMergeCapacity

- ExtentsPurgeRebuildCapacity

- ExportCapacity

- ExtentsPartitionCapacity

- MaterializedViewsCapacity

- StoredQueryResultsCapacity

- StreamingIngestionPostProcessingCapacity

- PurgeStorageArtifactsCleanupCapacity

- PeriodicStorageArtifactsCleanupCapacity

- QueryAccelerationCapacity

- GraphSnapshotsCapacity

To view the capacity of your cluster, use the .show capacity command.

Ingestion capacity

| Property | Type | Description |

|---|---|---|

ClusterMaximumConcurrentOperations | long | The maximum number of concurrent ingestion operations allowed in a cluster. This value caps the total ingestion capacity, as shown in the following formula. |

CoreUtilizationCoefficient | real | Determines the percentage of cores to use in the ingestion capacity calculation. |

Formula

The .show capacity command returns the cluster’s ingestion capacity based on the following formula:

Minimum(ClusterMaximumConcurrentOperations , Number of nodes in cluster * Maximum(1, Core count per node * CoreUtilizationCoefficient))

Extents merge capacity

| Property | Type | Description |

|---|---|---|

MinimumConcurrentOperationsPerNode | long | The minimal number of concurrent extents merge/rebuild operations on a single node. Default is 1. |

MaximumConcurrentOperationsPerNode | long | The maximum number of concurrent extents merge/rebuild operations on a single node. Default is 5. |

ClusterMaximumConcurrentOperations | long | The maximum number of concurrent extents merge/rebuild operations allowed in a cluster. This value caps the total merge capacity. |

Formula

The .show capacity command returns the cluster’s extents merge capacity based on the following formula:

Minimum(Number of nodes in cluster * Concurrent operations per node, ClusterMaximumConcurrentOperations)

The effective value for Concurrent operations per node is automatically adjusted by the system in the range [MinimumConcurrentOperationsPerNode,MaximumConcurrentOperationsPerNode], as long as the success rate of the merge operations is 90% or higher.

Extents purge rebuild capacity

| Property | Type | Description |

|---|---|---|

MaximumConcurrentOperationsPerNode | long | The maximum number of concurrent rebuild extents for purge operations on a single node. |

Formula

The .show capacity command returns the cluster’s extents purge rebuild capacity based on the following formula:

Number of nodes in cluster x MaximumConcurrentOperationsPerNode

Export capacity

| Property | Type | Description |

|---|---|---|

ClusterMaximumConcurrentOperations | long | The maximum number of concurrent export operations in a cluster. This value caps the total export capacity, as shown in the following formula. |

CoreUtilizationCoefficient | long | Determines the percentage of cores to use in the export capacity calculation. |

Formula

The .show capacity command returns the cluster’s export capacity based on the following formula:

Minimum(ClusterMaximumConcurrentOperations , Number of nodes in cluster * Maximum(1, Core count per node * CoreUtilizationCoefficient))

Extents partition capacity

| Property | Type | Description |

|---|---|---|

ClusterMinimumConcurrentOperations | long | The minimal number of concurrent extents partition operations in a cluster. Default is 1. |

ClusterMaximumConcurrentOperations | long | The maximum number of concurrent extents partition operations in a cluster. Default is 32. |

The effective value for Concurrent operations is automatically adjusted by the system in the range

[ClusterMinimumConcurrentOperations,ClusterMaximumConcurrentOperations], as long as the success rate of the

partitioning operations is 90% or higher.

Materialized views capacity policy

The policy can be used to change concurrency settings for materialized views. Changing the materialized views capacity policy can be useful when there’s more than a single materialized view defined on a cluster.

| Property | Type | Description |

|---|---|---|

ClusterMinimumConcurrentOperations | long | The minimal number of concurrent materialization operations in a cluster. Default is 1. |

ClusterMaximumConcurrentOperations | long | The maximum number of concurrent materialization operations in a cluster. Default is 10. |

By default, only a single materialization runs concurrently (see how materialized views work). The system adjusts the current concurrency in the range [ClusterMinimumConcurrentOperations,ClusterMaximumConcurrentOperations], based on the number of materialized views in the cluster and the cluster’s CPU. You can increase/decrease concurrency by altering this policy. For example, if the cluster has 10 materialized views, setting the ClusterMinimumConcurrentOperations to five ensures that at least five of them can materialize concurrently.

You can view the effective value for the current concurrency using the .show capacity command

Stored query results capacity

| Property | Type | Description |

|---|---|---|

MaximumConcurrentOperationsPerDbAdmin | long | The maximum number of concurrent ingestion operations in a cluster admin node. |

CoreUtilizationCoefficient | real | Determines the percentage of cores to use in the stored query results creation calculation. |

Formula

The .show capacity command returns the cluster’s stored query results creation capacity based on the following formula:

Minimum(MaximumConcurrentOperationsPerDbAdmin , Number of nodes in cluster * Maximum(1, Core count per node * CoreUtilizationCoefficient))

Streaming ingestion post processing capacity

| Property | Type | Description |

|---|---|---|

MaximumConcurrentOperationsPerNode | long | The maximum number of concurrent streaming ingestion post processing operations on each cluster node. |

Formula

The .show capacity command returns the cluster’s streaming ingestion post processing capacity based on the following formula:

Number of nodes in cluster x MaximumConcurrentOperationsPerNode

Purge storage artifacts cleanup capacity

| Property | Type | Description |

|---|---|---|

MaximumConcurrentOperationsPerCluster | long | The maximum number of concurrent purge storage artifacts cleanup operations on cluster. |

Formula

The .show capacity command returns the cluster’s purge storage artifacts cleanup capacity based on the following formula:

MaximumConcurrentOperationsPerCluster

Periodic storage artifacts cleanup capacity

| Property | Type | Description |

|---|---|---|

MaximumConcurrentOperationsPerCluster | long | The maximum number of concurrent periodic storage artifacts cleanup operations on cluster. |

Formula

The .show capacity command returns the cluster’s periodic storage artifacts cleanup capacity based on the following formula:

MaximumConcurrentOperationsPerCluster

Query Acceleration capacity

| Property | Type | Description |

|---|---|---|

ClusterMaximumConcurrentOperations | long | The maximum number of concurrent query acceleration caching operations in a cluster. This value caps the total query acceleration caching capacity, as shown in the following formula. |

CoreUtilizationCoefficient | long | Determines the percentage of cores to use in the query acceleration caching capacity calculation. |

Formula

The .show capacity command returns the cluster’s query acceleration caching capacity based on the following formula:

Minimum(ClusterMaximumConcurrentOperations , Number of nodes in cluster * Maximum(1, Core count per node * CoreUtilizationCoefficient))

Graph Snapshots capacity

| Property | Type | Description |

|---|---|---|

ClusterMaximumConcurrentOperations | long | The maximum number of concurrent snapshot creation operations on cluster. |

Formula

The .show capacity command returns the cluster’s periodic storage artifacts cleanup capacity based on the following formula:

ClusterMaximumConcurrentOperations

Defaults

The default capacity policy has the following JSON representation:

{

"IngestionCapacity": {

"ClusterMaximumConcurrentOperations": 512,

"CoreUtilizationCoefficient": 0.75

},

"ExtentsMergeCapacity": {

"MinimumConcurrentOperationsPerNode": 1,

"MaximumConcurrentOperationsPerNode": 3

},

"ExtentsPurgeRebuildCapacity": {

"MaximumConcurrentOperationsPerNode": 1

},

"ExportCapacity": {

"ClusterMaximumConcurrentOperations": 100,

"CoreUtilizationCoefficient": 0.25

},

"ExtentsPartitionCapacity": {

"ClusterMinimumConcurrentOperations": 1,

"ClusterMaximumConcurrentOperations": 32

},

"MaterializedViewsCapacity": {

"ClusterMaximumConcurrentOperations": 1,

"ExtentsRebuildCapacity": {

"ClusterMaximumConcurrentOperations": 50,

"MaximumConcurrentOperationsPerNode": 5

}

},

"StoredQueryResultsCapacity": {

"MaximumConcurrentOperationsPerDbAdmin": 250,

"CoreUtilizationCoefficient": 0.75

},

"StreamingIngestionPostProcessingCapacity": {

"MaximumConcurrentOperationsPerNode": 4

},

"PurgeStorageArtifactsCleanupCapacity": {

"MaximumConcurrentOperationsPerCluster": 2

},

"PeriodicStorageArtifactsCleanupCapacity": {

"MaximumConcurrentOperationsPerCluster": 2

},

"QueryAccelerationCapacity": {

"ClusterMaximumConcurrentOperations": 100,

"CoreUtilizationCoefficient": 0.5

},

"GraphSnapshotsCapacity": {

"ClusterMaximumConcurrentOperations": 5

}

}

Management commands

- Use

.show cluster policy capacityto show the current capacity policy of the cluster. - Use

.alter-merge cluster policy capacityto alter the capacity policy of the cluster.

Management commands throttling

Kusto limits the number of concurrent requests for the following user-initiated commands:

- Ingestions

- This category includes commands that ingest from storage, ingest from a query, and ingest inline.

- The ingestion capacity defines the limit.

- Purges

- The global limit is currently fixed at one per cluster.

- The purge rebuild capacity is used internally to determine the number of concurrent rebuild operations during purge commands. Purge commands aren’t blocked or throttled because of this process, but completes faster or slower depending on the purge rebuild capacity.

- Exports

- The limit is as defined in the export capacity.

- Query Acceleration

- The limit is as defined in the query acceleration capacity.

When the cluster detects that an operation exceeded the limit on concurrent requests:

- The command’s state, as presented by System information commands, is

Throttled. - The error message includes the command type, the origin of the throttling and the capacity that exceeded. For example:

- For example:

The management command was aborted due to throttling. Retrying after some backoff might succeed. CommandType: 'TableSetOrAppend', Capacity: 18, Origin: 'CapacityPolicy/Ingestion'.

- For example:

- The HTTP response code is

429. The subcode isTooManyRequests. - The exception type is

ControlCommandThrottledException.

Related content

6 - Encoding policy

6.1 - Encoding policy

The encoding policy defines how data is encoded, compressed, and indexed. This policy applies to all columns of stored data. A default encoding policy is applied based on the column’s data type, and a background process adjusts the encoding policy automatically if necessary.

Scenarios

We recommend the default policy be maintained except for specific scenarios. It can be useful to modify the default column’s encoding policy to fine tune control over the performance/COGS trade-off. For example:

- The default indexing applied to

stringcolumns is built for term searches. If you only query for specific values in the column, COGS might be reduced if the index is simplified using the encoding profileIdentifier. For more information, see the string data type. - Fields that are never queried on or don’t need fast searches can disable indexing. You can use profile

BigObjectto turn off the indexes and increase maximal value size in dynamic or string columns. For example, use this profile to store HLL values returned by hll() function.

How it works

Encoding policy changes do not affect data that has already been ingested. Only new ingestion operations will be performed according to the new policy. The encoding policy applies to individual columns in a table, but can be set at the column level, table level (affecting all columns of the table), or database level.

Related content

- To view the encoding policy, see .show encoding policy.

- To alter the encoding policy, see .alter encoding policy.

7 - Extent tags policy

7.1 - Extent tags retention policy

The extent tags retention policy controls the mechanism that automatically removes extent tags from tables, based on the age of the extents.

It’s recommended to remove any tags that are no longer helpful, or were used temporarily as part of an ingestion pipeline, and may limit the system from reaching optimal performance. For example: old drop-by: tags, which prevent merging extents together.

The policy can be set at the table-level, or at the database-level. A database-level policy applies to all tables in the database that don’t override the policy.

The policy object

The extent tags retention policy is an array of policy objects. Each object includes the following properties:

| Property name | Type | Description | Example |

|---|---|---|---|

| TagPrefix | string | The prefix of the tags to be automatically deleted, once RetentionPeriod is exceeded. The prefix must include a colon (:) as its final character, and may only include one colon. | drop-by:, ingest-by:, custom_prefix: |

| RetentionPeriod | timespan | The duration for which it’s guaranteed that the tags aren’t dropped. This period is measured starting from the extent’s creation time. | 1.00:00:00 |

Example

The following policy will have any drop-by: tags older than three days and any ingest-by: tags older than two hours automatically dropped:

[

{

"TagPrefix": "drop-by:",

"RetentionPeriod": "3.00:00:00"

},

{

"TagPrefix": "ingest-by:",

"RetentionPeriod": "02:00:00"

}

]

Defaults

By default, when the policy isn’t defined, extent tags of any kind are retained as long as the extent isn’t dropped.

Management commands

The following management commands can be used to manage the extent tags retention policy:

8 - Ingestion batching

8.1 - IngestionBatching policy

Search Keywords: IngestionBatching, ingestion batching, batching policy, ingestion optimization, data ingestion settings, Azure Data Explorer ingestion, Kusto ingestion, data batching, ingestion performance

Ingestion batching policy

During the queued ingestion process, the service optimizes for throughput by batching small ingress data chunks together before ingestion. Batching reduces the resources consumed by the queued ingestion process and doesn’t require post-ingestion resources to optimize the small data shards produced by non-batched ingestion.

The downside to doing batching before ingestion is the forced delay. Therefore, the end-to-end time from requesting the data ingestion until the data ready for query is larger.

When you define the IngestionBatching policy, you’ll need to find a balance between optimizing for throughput and time delay. This policy applies to queued ingestion. It defines the maximum forced delay allowed when batching small blobs together. To learn more about using batching policy commands, and optimizing for throughput, see:

Sealing a batch

There’s an optimal size of about 1 GB of uncompressed data for bulk ingestion. Ingestion of blobs with much less data is suboptimal, so in queued ingestion the service will batch small blobs together.

The following list shows the basic batching policy triggers to seal a batch. A batch is sealed and ingested when the first condition is met:

Size: Batch size limit reached or exceededCount: Batch file number limit reachedTime: Batching time has expiredFlushImmediatly: A blob with ‘FlushImmediately’ indication caused a batch to be sealed

The IngestionBatching policy can be set on databases or tables. Default values are as follows: 5 minutes maximum delay time, 500 items, total size of 1 GB.

The following list shows conditions to seal batches related to single blob ingestion. A batch is sealed and ingested when the conditions are met:

SingleBlob_FlushImmediately: Deprecated! Ingest a single blob because ‘FlushImmediately’ was setSingleBlob_IngestIfNotExists: Ingest a single blob because ‘IngestIfNotExists’ was setSingleBlob_IngestByTag: Ingest a single blob because ‘ingest-by’ was setSingleBlob_SizeUnknown: Ingest a single blob because blob size is unknown

If the SystemFlush condition is set, a batch will be sealed when a system flush is triggered. With the SystemFlush parameter set, the system flushes the data, for example due to database scaling or internal reset of system components.

Defaults and limits

| Type | Property | Default | Low latency setting | Minimum value | Maximum value |

|---|---|---|---|---|---|

| Number of items | MaximumNumberOfItems | 500 | 500 | 1 | 25,000 |

| Data size (MB) | MaximumRawDataSizeMB | 1024 | 1024 | 100 | 4096 |

| Time (TimeSpan) | MaximumBatchingTimeSpan | 00:05:00 | 00:00:20 - 00:00:30 | 00:00:10 | 00:30:00 |

The most effective way of controlling the end-to-end latency using ingestion batching policy is to alter its time boundary at table or database level, according to the higher bound of latency requirements. A database level policy affects all tables in that database that don’t have the table-level policy defined, and any newly created table.

Batch data size

The batching policy data size is set for uncompressed data. For Parquet, AVRO, and ORC files, an estimation is calculated based on file size. For compressed data, the uncompressed data size is evaluated as follows in descending order of accuracy:

- If the uncompressed size is provided in the ingestion source options, that value is used.

- When ingesting local files using SDKs, zip archives and gzip streams are inspected to assess their raw size.

- If previous options don’t provide a data size, a factor is applied to the compressed data size to estimate the uncompressed data size.

Batching latencies

Latencies can result from many causes that can be addressed using batching policy settings.

| Cause | Solution |

|---|---|

Data latency matches the time setting, with too little data to reach the size or count limit | Reduce the time limit |

| Inefficient batching due to a large number of very small files | Increase the size of the source files. If using Kafka Sink, configure it to send data in ~100 KB chunks or higher. If you have many small files, increase the count (up to 2000) in the database or table ingestion policy. |

| Batching a large amount of uncompressed data | This is common when ingesting Parquet files. Incrementally decrease size for the table or database batching policy towards 250 MB and check for improvement. |

| Backlog because the database is under scaled | Accept any Azure advisor suggestions to scale aside or scale up your database. Alternatively, manually scale your database to see if the backlog is closed. If these options don’t work, contact support for assistance. |

9 - Ingestion time

9.1 - IngestionTime policy

The IngestionTime policy is an optional policy that can be set (enabled) on tables.

When enabled, Kusto adds a hidden datetime column to the table, called $IngestionTime.

Now, whenever new data is ingested, the time of ingestion is recorded in the hidden column.

That time is measured just before the data is committed.

Since the ingestion time column is hidden, you can’t directly query for its value.

Instead, a special function called

ingestion_time()

retrieves that value. If there’s no datetime column in the table,

or the IngestionTime policy wasn’t enabled when a record was ingested, a null

value is returned.

The IngestionTime policy is designed for two main scenarios:

To allow users to estimate the latency in ingesting data. Many tables with log data have a timestamp column. The timestamp value gets filled by the source and indicates the time when the record was produced. By comparing that column’s value with the ingestion time column, you can estimate the latency for getting the data in.

[!NOTE] The calculated value is only an estimate, because the source and Kusto don’t necessarily have their clocks synchronized.

To support Database Cursors that let users issue consecutive queries, the query is limited to the data that was ingested since the previous query.

For more information. see the management commands for managing the IngestionTime policy.

For more information. see the management commands for managing the IngestionTime policy.

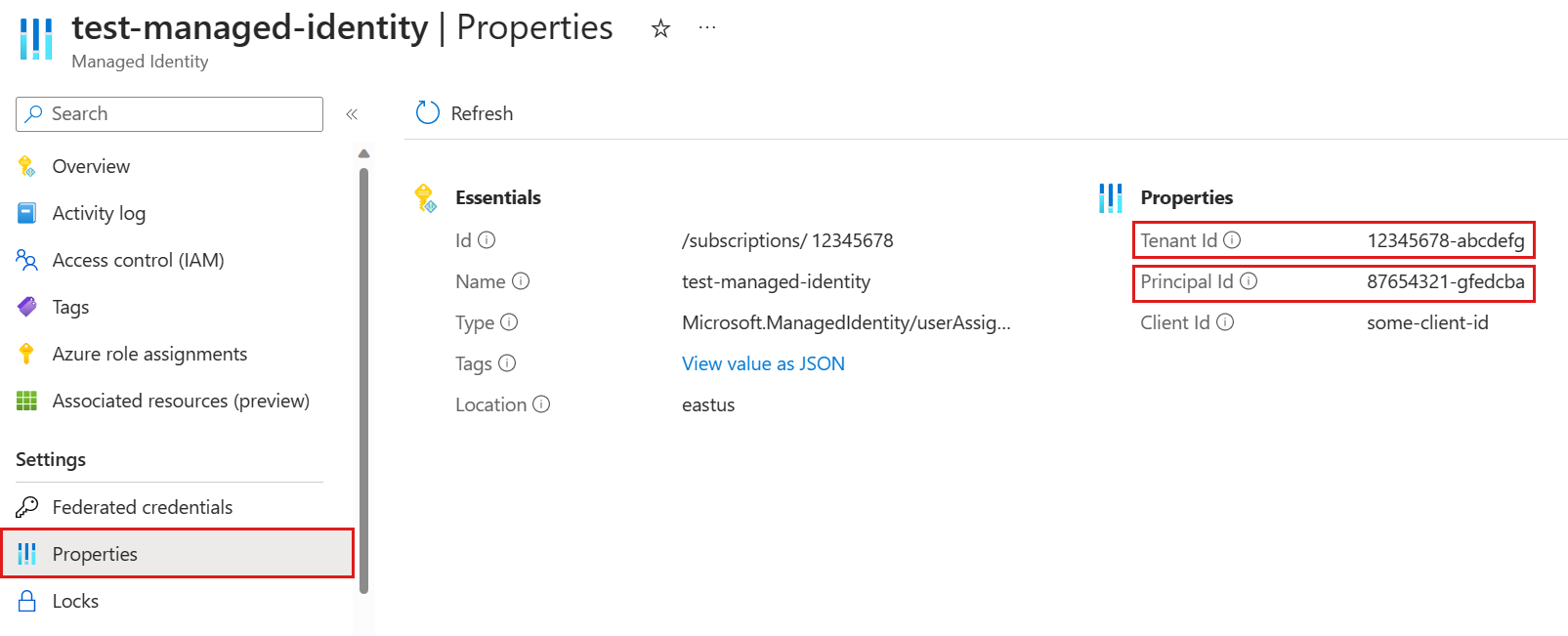

10 - Managed identity

10.1 - Kusto ManagedIdentity policy

ManagedIdentity is a policy that controls which managed identities can be used for what purposes. For example, you can configure a policy that allows a specific managed identity to be used for accessing a storage account for ingestion purposes.

This policy can be enabled at the cluster and database levels. The policy is additive, meaning that for every operation that involves a managed identity, the operation will be permitted if the usage is allowed at either the cluster or database level.

Permissions

Creating or altering a managed identity policy requires AllDatabasesAdmin permissions.

The ManagedIdentity policy object

A cluster or database may have zero or more ManagedIdentity policy objects associated with it. Each ManagedIdentity policy object has the following user-definable properties: DisplayName and AllowedUsages. Other properties are automatically populated from the managed identity associated with the specified ObjectId and displayed for convenience.

The following table describes the properties of the ManagedIdentity policy object:

| Property | Type | Required | Description |

|---|---|---|---|

| ObjectId | string | ✔️ | Either the actual object ID of the managed identity or the reserved keyword system to reference the System Managed Identity of the cluster on which the command is run. |

| ClientId | string | Not applicable | The client ID of the managed identity. |

| TenantId | string | Not applicable | The tenant ID of the managed identity. |

| DisplayName | string | Not applicable | The display name of the managed identity. |

| IsSystem | bool | Not applicable | A Boolean value indicating true if the identity is a System Managed Identity; false if otherwise. |

| AllowedUsages | string | ✔️ | A list of comma-separated allowed usage values for the managed identity. See managed identity usages. |

The following is an example of a ManagedIdentity policy object:

{

"ObjectId": "<objectID>",

"ClientId": "<clientID>",

"TenantId": "<tenantID",

"DisplayName": "myManagedIdentity",

"IsSystem": false,

"AllowedUsages": "NativeIngestion, ExternalTable"

}

Managed identity usages

The following values specify authentication to a usage using the configured managed identity:

| Value | Description |

|---|---|

All | All current and future usages are allowed. |

AutomatedFlows | Run a Continuous Export or Update Policy automated flow on behalf of a managed identity. |

AzureAI | Authenticate to an Azure OpenAI service using the ai_embeddings plugin with a managed identity. |

DataConnection | Authenticate to data connections to an Event Hub or an Event Grid. |

ExportRequest | Authenticate to an external storage export destination. |

ExternalDataRequest | Authenticate to an external data source using the externaldata operator / infer storage schema plugin. |

ExternalTable | Authenticate to external tables using connection strings configured with a managed identity. |

NativeIngestion | Authenticate to an SDK for native ingestion from an external source. |

SandboxArtifacts | Authenticate to external artifacts referenced in sandboxed plugins (e.g., Python) with a managed identity. This usage needs to be defined on the cluster level managed identity policy. |

SqlRequest | Authenticate to an external database using the sql_request or cosmosdb_request plugin with a managed identity. |

11 - Merge policy

11.1 - Extents merge policy

The merge policy defines if and how Extents (data shards) should get merged.

There are two types of merge operations: Merge, which rebuilds indexes, and Rebuild, which completely reingests the data.

Both operation types result in a single extent that replaces the source extents.

By default, Rebuild operations are preferred. If there are extents that don’t fit the criteria for being rebuilt, then an attempt will be made to merge them.

Merge policy properties

The merge policy contains the following properties:

- RowCountUpperBoundForMerge:

- Defaults to 16,000,000.

- Maximum allowed row count of the merged extent.

- Applies to Merge operations, not Rebuild.

- OriginalSizeMBUpperBoundForMerge:

- Defaults to 30,000.

- Maximum allowed original size (in MBs) of the merged extent.

- Applies to Merge operations, not Rebuild.

- MaxExtentsToMerge:

- Defaults to 100.

- Maximum allowed number of extents to be merged in a single operation.

- Applies to Merge operations.

- This value shouldn’t be changed.

- AllowRebuild:

- Defaults to ’true'.

- Defines whether

Rebuildoperations are enabled (in which case, they’re preferred overMergeoperations).

- AllowMerge:

- Defaults to ’true'.

- Defines whether

Mergeoperations are enabled, in which case, they’re less preferred thanRebuildoperations.

- MaxRangeInHours:

- Defaults to 24.

- The maximum allowed difference, in hours, between any two different extents’ creation times, so that they can still be merged.

- Timestamps are of extent creation, and don’t relate to the actual data contained in the extents.

- Applies to both Merge and Rebuild operations.

- In materialized views: defaults to 336 (14 days), unless recoverability is disabled in the materialized view’s effective retention policy.

- This value should be set according to the effective retention policy SoftDeletePeriod, or cache policy DataHotSpan values. Take the lower value of SoftDeletePeriod and DataHotSpan. Set the MaxRangeInHours value to between 2-3% of it. See the examples .

- Lookback:

- Defines the timespan during which extents are considered for rebuild/merge.

- Supported values:

Default- The system-managed default. This is the recommended and default value, whose period is currently set to 14 days.All- All extents, hot and cold, are included.HotCache- Only hot extents are included.Custom- Only extents whose age is under the providedCustomPeriodare included.CustomPeriodis a timespan value in the formatdd.hh:mm.

Default policy example

The following example shows the default policy:

{

"RowCountUpperBoundForMerge": 16000000,

"OriginalSizeMBUpperBoundForMerge": 30000,

"MaxExtentsToMerge": 100,,

"MaxRangeInHours": 24,

"AllowRebuild": true,

"AllowMerge": true,

"Lookback": {

"Kind": "Default",

"CustomPeriod": null

}

}

MaxRangeInHours examples

| min(SoftDeletePeriod (Retention Policy), DataHotSpan (Cache Policy)) | Max Range in hours (Merge Policy) |

|---|---|

| 7 days (168 hours) | 4 |

| 14 days (336 hours) | 8 |

| 30 days (720 hours) | 18 |

| 60 days (1,440 hours) | 36 |

| 90 days (2,160 hours) | 60 |

| 180 days (4,320 hours) | 120 |

| 365 days (8,760 hours) | 250 |

When a database is created, it’s set with the default merge policy values mentioned above. The policy is by default inherited by all tables created in the database, unless their policies are explicitly overridden at table-level.

For more information, see management commands that allow you to manage merge policies for databases or tables.

12 - Mirroring policy

12.1 - Mirroring policy

The mirroring policy commands allow you to view, change, partition, and delete your table mirroring policy. They also provide a way to check the mirroring latency by reviewing the operations mirroring status.

Management commands

- Use .show table policy mirroring command to show the current mirroring policy of the table.

- Use .alter-merge table policy mirroring command to change the current mirroring policy.

- Use .delete table policy mirroring command to soft-delete the current mirroring policy.

- Use .show database operations mirroring-statistics to check database operations mirroring statistics.

- Use .show table operations mirroring-statistics to check table operations mirroring statistics.

- Use .show table mirroring operations exported artifacts command to check operations exported artifacts status.

- Use .show table mirroring operations failures to check operations mirroring failure status.

The policy object

The mirroring policy includes the following properties:

| Property | Description | Values | Default |

|---|---|---|---|

| Format | The format of your mirrored files. | Valid value is parquet. | parquet |

| ConnectionStrings | An array of connection strings that help configure and establish connections. This value is autopopulated. | ||

| IsEnabled | Determines whether the mirroring policy is enabled. When the mirroring policy is disabled and set to false, the underlying mirroring data is retained in the database. | true, false, null. | null |

| Partitions | A comma-separated list of columns used to divide the data into smaller partitions. | See Partitions formatting. |

Data types mapping

To ensure compatibility and optimize queries, ensure that your data types are properly mapped to the parquet data types.

Event house to Delta parquet data types mapping

Event house data types are mapped to Delta Parquet data types using the following rules:

| Event house data type | Delta data type |

|---|---|

bool | boolean |

datetime | timestamp OR date (for date-bound partition definitions) |

dynamic | string |

guid | string |

int | integer |

long | long |

real | double |

string | string |

timespan | long |

decimal | decimal(38,18) |

For more information on Event house data types, see Scalar data types.

Example policy

{

"Format": "parquet",

"IsEnabled": true,

"Partitions": null,

}

13 - Partitioning policy

13.1 - Partitioning policy

The partitioning policy defines if and how extents (data shards) should be partitioned for a specific table or a materialized view.

The policy triggers an additional background process that takes place after the creation of extents, following data ingestion. This process includes reingesting data from the source extents and producing homogeneous extents, in which all values of the column designated as the partition key reside within a single partition.

The primary objective of the partitioning policy is to enhance query performance in specific supported scenarios.

Supported scenarios

The following are the only scenarios in which setting a data partitioning policy is recommended. In all other scenarios, setting the policy isn’t advised.

- Frequent filters on a medium or high cardinality

stringorguidcolumn:- For example: multitenant solutions, or a metrics table where most or all queries filter on a column of type

stringorguid, such as theTenantIdor theMetricId. - Medium cardinality is at least 10,000 distinct values.

- Set the hash partition key to be the

stringorguidcolumn, and set thePartitionAssignmentModeproperty touniform.

- For example: multitenant solutions, or a metrics table where most or all queries filter on a column of type

- Frequent aggregations or joins on a high cardinality

stringorguidcolumn:- For example, IoT information from many different sensors, or academic records of many different students.

- High cardinality is at least 1,000,000 distinct values, where the distribution of values in the column is approximately even.

- In this case, set the hash partition key to be the column frequently grouped-by or joined-on, and set the

PartitionAssignmentModeproperty toByPartition.

- Out-of-order data ingestion:

- Data ingested into a table might not be ordered and partitioned into extents (shards) according to a specific

datetimecolumn that represents the data creation time and is commonly used to filter data. This could be due to a backfill from heterogeneous source files that include datetime values over a large time span. - In this case, set the uniform range datetime partition key to be the

datetimecolumn. - If you need retention and caching policies to align with the datetime values in the column, instead of aligning with the time of ingestion, set the

OverrideCreationTimeproperty totrue.

- Data ingested into a table might not be ordered and partitioned into extents (shards) according to a specific

Partition keys

The following kinds of partition keys are supported.

| Kind | Column Type | Partition properties | Partition value |

|---|---|---|---|

| Hash | string or guid | Function, MaxPartitionCount, Seed, PartitionAssignmentMode | Function(ColumnName, MaxPartitionCount, Seed) |

| Uniform range | datetime | RangeSize, Reference, OverrideCreationTime | bin_at(ColumnName, RangeSize, Reference) |

Hash partition key

If the policy includes a hash partition key, all homogeneous extents that belong to the same partition will be assigned to the same data node.

- A hash-modulo function is used to partition the data.

- Data in homogeneous (partitioned) extents is ordered by the hash partition key.

- You don’t need to include the hash partition key in the row order policy, if one is defined on the table.

- Queries that use the shuffle strategy, and in which the

shuffle keyused injoin,summarizeormake-seriesis the table’s hash partition key, are expected to perform better because the amount of data required to move across nodes is reduced.

Partition properties

| Property | Description | Supported value(s) | Recommended value |

|---|---|---|---|

Function | The name of a hash-modulo function to use. | XxHash64 | |

MaxPartitionCount | The maximum number of partitions to create (the modulo argument to the hash-modulo function) per time period. | In the range (1,2048]. | Higher values lead to greater overhead of the data partitioning process, and a higher number of extents for each time period. The recommended value is 128. Higher values will significantly increase the overhead of partitioning the data post-ingestion, and the size of metadata - and are therefore not recommended. |

Seed | Use for randomizing the hash value. | A positive integer. | 1, which is also the default value. |

PartitionAssignmentMode | The mode used for assigning partitions to nodes. | ByPartition: All homogeneous (partitioned) extents that belong to the same partition are assigned to the same node.Uniform: An extents’ partition values are disregarded. Extents are assigned uniformly to the nodes. | If queries don’t join or aggregate on the hash partition key, use Uniform. Otherwise, use ByPartition. |

Hash partition key example

A hash partition key over a string-typed column named tenant_id.

It uses the XxHash64 hash function, with MaxPartitionCount set to the recommended value 128, and the default Seed of 1.

{

"ColumnName": "tenant_id",

"Kind": "Hash",

"Properties": {

"Function": "XxHash64",

"MaxPartitionCount": 128,

"Seed": 1,

"PartitionAssignmentMode": "Uniform"

}

}

Uniform range datetime partition key

In these cases, you can reshuffle the data between extents so that each extent includes records from a limited time range. This process results in filters on the datetime column being more effective at query time.

The partition function used is bin_at() and isn’t customizable.

Partition properties

| Property | Description | Recommended value |

|---|---|---|

RangeSize | A timespan scalar constant that indicates the size of each datetime partition. | Start with the value 1.00:00:00 (one day). Don’t set a shorter value, because it may result in the table having a large number of small extents that can’t be merged. |

Reference | A datetime scalar constant that indicates a fixed point in time, according to which datetime partitions are aligned. | Start with 1970-01-01 00:00:00. If there are records in which the datetime partition key has null values, their partition value is set to the value of Reference. |

OverrideCreationTime | A bool indicating whether or not the result extent’s minimum and maximum creation times should be overridden by the range of the values in the partition key. | Defaults to false. Set to true if data isn’t ingested in-order of time of arrival. For example, a single source file may include datetime values that are distant, and/or you may want to enforce retention or caching based on the datetime values rather than the time of ingestion.When OverrideCreationTime is set to true, extents may be missed in the merge process. Extents are missed if their creation time is older than the Lookback period of the table’s Extents merge policy. To make sure that the extents are discoverable, set the Lookback property to HotCache. |

Uniform range datetime partition example

The snippet shows a uniform datetime range partition key over a datetime typed column named timestamp.

It uses datetime(2021-01-01) as its reference point, with a size of 7d for each partition, and doesn’t

override the extents’ creation times.

{

"ColumnName": "timestamp",

"Kind": "UniformRange",

"Properties": {

"Reference": "2021-01-01T00:00:00",

"RangeSize": "7.00:00:00",

"OverrideCreationTime": false

}

}

The policy object

By default, a table’s data partitioning policy is null, in which case data in the table won’t be repartitioned after it’s ingested.

The data partitioning policy has the following main properties:

PartitionKeys:

- A collection of partition keys that define how to partition the data in the table.

- A table can have up to

2partition keys, with one of the following options: - Each partition key has the following properties:

ColumnName:string- The name of the column according to which the data will be partitioned.Kind:string- The data partitioning kind to apply (HashorUniformRange).Properties:property bag- Defines parameters according to which partitioning is done.

EffectiveDateTime:

- The UTC datetime from which the policy is effective.

- This property is optional. If it isn’t specified, the policy will take effect for data ingested after the policy was applied.

Data partitioning example

Data partitioning policy object with two partition keys.

- A hash partition key over a

string-typed column namedtenant_id.- It uses the

XxHash64hash function, withMaxPartitionCountset to the recommended value128, and the defaultSeedof1.

- It uses the

- A uniform datetime range partition key over a

datetimetype column namedtimestamp.- It uses

datetime(2021-01-01)as its reference point, with a size of7dfor each partition.

- It uses

{

"PartitionKeys": [

{

"ColumnName": "tenant_id",

"Kind": "Hash",

"Properties": {

"Function": "XxHash64",

"MaxPartitionCount": 128,

"Seed": 1,

"PartitionAssignmentMode": "Uniform"

}

},

{

"ColumnName": "timestamp",

"Kind": "UniformRange",

"Properties": {

"Reference": "2021-01-01T00:00:00",

"RangeSize": "7.00:00:00",

"OverrideCreationTime": false

}

}

]

}

Additional properties

The following properties can be defined as part of the policy. These properties are optional and we recommend not changing them.

| Property | Description | Recommended value | Default value |

|---|---|---|---|

| MinRowCountPerOperation | Minimum target for the sum of row count of the source extents of a single data partitioning operation. | 0 | |

| MaxRowCountPerOperation | Maximum target for the sum of the row count of the source extents of a single data partitioning operation. | Set a value lower than 5M if you see that the partitioning operations consume a large amount of memory or CPU per operation. | 0, with a default target of 5,000,000 records. |

| MaxOriginalSizePerOperation | Maximum target for the sum of the original size (in bytes) of the source extents of a single data partitioning operation. | If the partitioning operations consume a large amount of memory or CPU per operation, set a value lower than 5 GB. | 0, with a default target of 5,368,709,120 bytes (5 GB). |

The data partitioning process

- Data partitioning runs as a post-ingestion background process.

- A table that is continuously ingested into is expected to always have a “tail” of data that is yet to be partitioned (nonhomogeneous extents).

- Data partitioning runs only on hot extents, regardless of the value of the

EffectiveDateTimeproperty in the policy.- If partitioning cold extents is required, you need to temporarily adjust the caching policy.

You can monitor the partitioning status of tables with defined policies in a database by using the .show database extents partitioning statistics command and partitioning metrics.

Partitioning capacity

The data partitioning process results in the creation of more extents. The extents merge capacity may gradually increase, so that the process of merging extents can keep up.

If there’s a high ingestion throughput, or a large enough number of tables that have a partitioning policy defined, then the Extents partition capacity may gradually increase, so that the process of partitioning extents can keep up.

To avoid consuming too many resources, these dynamic increases are capped. You may be required to gradually and linearly increase them beyond the cap, if they’re used up entirely.

Limitations

- Attempts to partition data in a database that already has more than 5,000,000 extents will be throttled.

- In such cases, the

EffectiveDateTimeproperty of partitioning policies of tables in the database will be automatically delayed by several hours, so that you can reevaluate your configuration and policies.

- In such cases, the

Outliers in partitioned columns

- The following situations can contribute to imbalanced distribution of data across nodes, and degrade query performance:

- If a hash partition key includes values that are much more prevalent than others, for example, an empty string, or a generic value (such as

nullorN/A). - The values represent an entity (such as

tenant_id) that is more prevalent in the dataset.

- If a hash partition key includes values that are much more prevalent than others, for example, an empty string, or a generic value (such as

- If a uniform range datetime partition key has a large enough percentage of values that are “far” from the majority of the values in the column, the overhead of the data partitioning process is increased and may lead to many small extents to keep track of. An example of such a situation is datetime values from the distant past or future.

In both of these cases, either “fix” the data, or filter out any irrelevant records in the data before or at ingestion time, to reduce the overhead of the data partitioning. For example, use an update policy.

Related content

14 - Query acceleration policy

14.1 - Query acceleration policy (preview)

An external table is a schema entity that references data stored external to a Kusto database. Queries run over external tables can be less performant than on data that is ingested due to various factors such as network calls to fetch data from storage, the absence of indexes, and more. Query acceleration allows specifying a policy on top of external delta tables. This policy defines the number of days to accelerate data for high-performance queries.

Query acceleration is supported in Azure Data Explorer over Azure Data Lake Store Gen2 or Azure blob storage external tables.

Query acceleration is supported in Eventhouse over OneLake, Azure Data Lake Store Gen2, or Azure blob storage external tables.

To enable query acceleration in the Fabric UI, see Query acceleration over OneLake shortcuts.

Limitations

- The number of columns in the external table can’t exceed 900.

- Delta tables with checkpoint V2 aren’t supported.

- Query performance over accelerated external delta tables that have more than 2.5 million data files may not be optimal.

- The feature assumes delta tables with static advanced features, for example column mapping doesn’t change, partitions don’t change, and so on. To change advanced features, first disable the policy, and once the change is made, re-enable the policy.

- Schema changes on the delta table must also be followed with the respective

.alterexternal delta table schema, which might result in acceleration starting from scratch if there was breaking schema change. - Parquet files larger than 1 GB won’t be cached.

- Manual edits to the delta table are not allowed and can lead to unexpected results.

Known issues

- Data in the external delta table that’s optimized with the OPTIMIZE function will need to be reaccelerated.

- Frequent MERGE/UPDATE/DELETE operations in delta may result in reacceleration of the updated files. If you run these operations frequently where most of the files in the delta table are touched, this can be equivalent of reacceleration of the entire table.

- The system assumes that all artifacts under the delta table directory have the same access level to the selected users. Different files having different access permissions under the delta table directory might result with unexpected behavior.

Commands for query acceleration

15 - Query week consistency policy

15.1 - Query weak consistency policy

The query weak consistency policy is a cluster-level policy object that configures the weak consistency service.

Management commands

- Use

.show cluster policy query_weak_consistencyto show the current query weak consistency policy of the cluster. - Use

.alter cluster policy query_weak_consistencyto change the current query weak consistency policy of the cluster.

The policy object

The query weak consistency policy includes the following properties:

| Property | Description | Values | Default |

|---|---|---|---|

| PercentageOfNodes | The percentage of nodes in the cluster that execute the query weak consistency service (the selected nodes will execute the weakly consistent queries). | An integer between 1 to 100, or -1 for default value (which is currently 20%). | -1 |

| MinimumNumberOfNodes | Minimum number of nodes that execute the query weak consistency service (will determine the number of nodes in case PercentageOfNodes*#NodesInCluster is smaller). | A positive integer, or -1 for default value (which is currently 2). Smaller or equal to MaximumNumberOfNodes. | -1 |

| MaximumNumberOfNodes | Maximum number of nodes that execute the query weak consistency service (will determine the number of nodes in case PercentageOfNodes*#NodesInCluster is greater). | A positive integer, or -1 for default value (which is currently 30). Greater or equal to MinimumNumberOfNodes. | -1 |

| SuperSlackerNumberOfNodesThreshold | If the total number of nodes in the cluster exceeds this number, nodes that execute the weak consistency service will become ‘super slacker’, meaning they won’t have data on them (in order to reduce load). See Warning below. | A positive integer that is greater than or equal to 4, or -1 for default value (currently no threshold - weak consistency nodes won’t become ‘super slacker’). | -1 |

| EnableMetadataPrefetch | When set to true, database metadata will be pre-loaded when the cluster comes up, and reloaded every few minutes, on all weak consistency nodes. When set to false, database metadata load will be triggered by queries (on demand), so some queries might be delayed (until the database metadata is pulled from storage). Database metadata must be reloaded from storage to query the database, when its age is greater than MaximumLagAllowedInMinutes. See Warning and Important below. | true or false | false |

| MaximumLagAllowedInMinutes | The maximum duration (in minutes) that weakly consistent metadata is allowed to lag behind. If metadata is older than this value, the most up-to-date metadata will be pulled from storage (when the database is queried, or periodically if EnableMetadataPrefech is enabled). See Warning below. | An integer between 1 to 60, or -1 for default value (currently 5 minutes). | -1 |

| RefreshPeriodInSeconds | The refresh period (in seconds) to update a database metadata on each weak consistency node. See Warning below. | An integer between 30 to 1800, or -1 for default value (currently 120 seconds). | -1 |

Default policy

The default policy is:

{

"PercentageOfNodes": -1,

"MinimumNumberOfNodes": -1,

"MaximumNumberOfNodes": -1,

"SuperSlackerNumberOfNodesThreshold": -1,

"EnableMetadataPrefetch": false,

"MaximumLagAllowedInMinutes": -1,

"RefreshPeriodInSeconds": -1

}

16 - Restricted view access

16.1 - Restricted view access policy

The restricted view access policy is an optional security feature that governs view permissions on a table. By default, the policy is disabled. When enabled, the policy adds an extra layer of permission requirements for principals to access and view the table.

For a table with an enabled restricted view access policy, only principals assigned the UnrestrictedViewer role have the necessary permissions to view the table. Even principals with roles like Table Admin or Database Admin are restricted unless granted the UnrestrictedViewer role.

While the restricted view access policy is specific to individual tables, the UnrestrictedViewer role operates at the database level. Thereby, a principal with the UnrestrictedViewer role has view permissions for all tables within the database. For more detailed information on managing table view access, see Manage view access to tables.

Limitations

- The restricted view access policy can’t be configured on a table on which a Row Level Security policy is enabled.

- A table with the restricted view access policy enabled can’t be used as the source of a materialized view. For more information, see materialized views limitations and known issues.

Related content

17 - Retention policy

17.1 - Retention policy

The retention policy controls the mechanism that automatically removes data from tables or materialized views. It’s useful to remove data that continuously flows into a table, and whose relevance is age-based. For example, the policy can be used for a table that holds diagnostics events that may become uninteresting after two weeks.

The retention policy can be configured for a specific table or materialized view, or for an entire database. The policy then applies to all tables in the database that don’t override it. When the policy is configured both at the database and table level, the retention policy in the table takes precedence over the database policy.

Setting up a retention policy is important when continuously ingesting data, which will limit costs.

Data that is “outside” the retention policy is eligible for removal. There’s no specific guarantee when removal occurs. Data may “linger” even if the retention policy is triggered.

The retention policy is most commonly set to limit the age of the data since ingestion. For more information, see SoftDeletePeriod.

deleted before the limit is exceeded, but deletion isn’t immediate following that point.

The policy object

A retention policy includes the following properties:

- SoftDeletePeriod:

- Time span for which it’s guaranteed that the data is kept available to query. The period is measured starting from the time the data was ingested.

- Defaults to

1,000 years. - When altering the soft-delete period of a table or database, the new value applies to both existing and new data.

- Recoverability:

- Data recoverability (Enabled/Disabled) after the data was deleted.

- Defaults to

Enabled. - If set to

Enabled, data deleted due to retention policy will be recoverable for 14 days after it’s been soft-deleted. - It is not possible to configure the recoverability period.

Management commands

- Use

.show policy retentionto show the current retention policy for a database, table, or materialized view. - Use

.alter policy retentionto change current retention policy of a database, table, or materialized view.

Defaults

By default, when a database or a table is created, it doesn’t have a retention policy defined. Normally, the database is created and then immediately has its retention policy set by its creator according to known requirements.

When you run a .show command for the retention policy of a database or table that hasn’t had its policy set, Policy appears as null.

The default retention policy, with the default values mentioned above, can be applied using the following command.

.alter database DatabaseName policy retention "{}"

.alter table TableName policy retention "{}"

.alter materialized-view ViewName policy retention "{}"

The command results in the following policy object applied to the database or table.

{

"SoftDeletePeriod": "365000.00:00:00", "Recoverability":"Enabled"

}

Clearing the retention policy of a database or table can be done using the following command.

.delete database DatabaseName policy retention

.delete table TableName policy retention

Examples

For an environment that has a database named MyDatabase, with tables MyTable1, MyTable2, and MySpecialTable.

Soft-delete period of seven days and recoverability disabled

Set all tables in the database to have a soft-delete period of seven days and disabled recoverability.

Option 1 (Recommended): Set a database-level retention policy, and verify there are no table-level policies set.

.delete table MyTable1 policy retention // optional, only if the table previously had its policy set .delete table MyTable2 policy retention // optional, only if the table previously had its policy set .delete table MySpecialTable policy retention // optional, only if the table previously had its policy set .alter-merge database MyDatabase policy retention softdelete = 7d recoverability = disabled .alter-merge materialized-view ViewName policy retention softdelete = 7dOption 2: For each table, set a table-level retention policy, with a soft-delete period of seven days and recoverability disabled.

.alter-merge table MyTable1 policy retention softdelete = 7d recoverability = disabled .alter-merge table MyTable2 policy retention softdelete = 7d recoverability = disabled .alter-merge table MySpecialTable policy retention softdelete = 7d recoverability = disabled

Soft-delete period of seven days and recoverability enabled

Set tables

MyTable1andMyTable2to have a soft-delete period of seven days and recoverability disabled.Set

MySpecialTableto have a soft-delete period of 14 days and recoverability enabled.Option 1 (Recommended): Set a database-level retention policy, and set a table-level retention policy.

.delete table MyTable1 policy retention // optional, only if the table previously had its policy set .delete table MyTable2 policy retention // optional, only if the table previously had its policy set .alter-merge database MyDatabase policy retention softdelete = 7d recoverability = disabled .alter-merge table MySpecialTable policy retention softdelete = 14d recoverability = enabledOption 2: For each table, set a table-level retention policy, with the relevant soft-delete period and recoverability.

.alter-merge table MyTable1 policy retention softdelete = 7d recoverability = disabled .alter-merge table MyTable2 policy retention softdelete = 7d recoverability = disabled .alter-merge table MySpecialTable policy retention softdelete = 14d recoverability = enabled

Soft-delete period of seven days, and MySpecialTable keeps its data indefinitely

Set tables MyTable1 and MyTable2 to have a soft-delete period of seven days, and have MySpecialTable keep its data indefinitely.

Option 1: Set a database-level retention policy, and set a table-level retention policy, with a soft-delete period of 1,000 years, the default retention policy, for

MySpecialTable..delete table MyTable1 policy retention // optional, only if the table previously had its policy set .delete table MyTable2 policy retention // optional, only if the table previously had its policy set .alter-merge database MyDatabase policy retention softdelete = 7d .alter table MySpecialTable policy retention "{}" // this sets the default retention policyOption 2: For tables

MyTable1andMyTable2, set a table-level retention policy, and verify that the database-level and table-level policy forMySpecialTablearen’t set..delete database MyDatabase policy retention // optional, only if the database previously had its policy set .delete table MySpecialTable policy retention // optional, only if the table previously had its policy set .alter-merge table MyTable1 policy retention softdelete = 7d .alter-merge table MyTable2 policy retention softdelete = 7dOption 3: For tables

MyTable1andMyTable2, set a table-level retention policy. For tableMySpecialTable, set a table-level retention policy with a soft-delete period of 1,000 years, the default retention policy..alter-merge table MyTable1 policy retention softdelete = 7d .alter-merge table MyTable2 policy retention softdelete = 7d .alter table MySpecialTable policy retention "{}"

18 - Row level security policy

18.1 - Row level security policy

Use group membership or execution context to control access to rows in a database table.

Row Level Security (RLS) simplifies the design and coding of security. It lets you apply restrictions on data row access in your application. For example, limit user access to rows relevant to their department, or restrict customer access to only the data relevant to their company.

The access restriction logic is located in the database tier, rather than away from the data in another application tier. The database system applies the access restrictions every time data access is attempted from any tier. This logic makes your security system more reliable and robust by reducing the surface area of your security system.

RLS lets you provide access to other applications and users, only to a certain portion of a table. For example, you might want to:

- Grant access only to rows that meet some criteria

- Anonymize data in some of the columns

- All of the above

For more information, see management commands for managing the Row Level Security policy.

Limitations

- There’s no limit on the number of tables on which Row Level Security policy can be configured.

- Row Level Security policy cannot be configured on External Tables.

- The RLS policy can’t be enabled on a table under the following circumstances:

- The table is referenced by an update policy that doesn’t use a managed identity. A managed identity is required so the engine can securely determine which rows from the referenced tables are allowed to be queried.

- The table is referenced by a continuous export that doesn’t use the

impersonateauthentication method. Impersonation ensures that only users who have access to the target storage account can read the exported (and potentially sensitive) data. - The table has restricted view access policy configured.

- The RLS query can’t reference other tables that have Row Level Security policy enabled.

- The RLS query can’t reference tables located in other databases.

Examples

Limit access to Sales table

In a table named Sales, each row contains details about a sale. One of the columns contains the name of the salesperson. Instead of giving your salespeople access to all records in Sales, enable a Row Level Security policy on this table to only return records where the salesperson is the current user:

Sales | where SalesPersonAadUser == current_principal()

You can also mask the email address:

Sales | where SalesPersonAadUser == current_principal() | extend EmailAddress = "****"

If you want every sales person to see all the sales of a specific country/region, you can define a query similar to:

let UserToCountryMapping = datatable(User:string, Country:string)

[

"john@domain.com", "USA",

"anna@domain.com", "France"

];

Sales

| where Country in ((UserToCountryMapping | where User == current_principal_details()["UserPrincipalName"] | project Country))

If you have a group that contains the managers, you might want to give them access to all rows. Here’s the query for the Row Level Security policy.

let IsManager = current_principal_is_member_of('aadgroup=sales_managers@domain.com');

let AllData = Sales | where IsManager;

let PartialData = Sales | where not(IsManager) and (SalesPersonAadUser == current_principal()) | extend EmailAddress = "****";

union AllData, PartialData

Expose different data to members of different Microsoft Entra groups

If you have multiple Microsoft Entra groups, and you want the members of each group to see a different subset of data, use this structure for an RLS query.

Customers

| where (current_principal_is_member_of('aadgroup=group1@domain.com') and <filtering specific for group1>) or

(current_principal_is_member_of('aadgroup=group2@domain.com') and <filtering specific for group2>) or

(current_principal_is_member_of('aadgroup=group3@domain.com') and <filtering specific for group3>)

Apply the same RLS function on multiple tables

First, define a function that receives the table name as a string parameter, and references the table using the table() operator.

For example:

.create-or-alter function RLSForCustomersTables(TableName: string) {

table(TableName)

| ...

}

Then configure RLS on multiple tables this way:

.alter table Customers1 policy row_level_security enable "RLSForCustomersTables('Customers1')"

.alter table Customers2 policy row_level_security enable "RLSForCustomersTables('Customers2')"

.alter table Customers3 policy row_level_security enable "RLSForCustomersTables('Customers3')"

Produce an error upon unauthorized access

If you want nonauthorized table users to receive an error instead of returning an empty table, use the assert() function. The following example shows you how to produce this error in an RLS function:

.create-or-alter function RLSForCustomersTables() {

MyTable

| where assert(current_principal_is_member_of('aadgroup=mygroup@mycompany.com') == true, "You don't have access")

}

You can combine this approach with other examples. For example, you can display different results to users in different Microsoft Entra groups, and produce an error for everyone else.

Control permissions on follower databases

The RLS policy that you configure on the production database will also take effect in the follower databases. You can’t configure different RLS policies on the production and follower databases. However, you can use the current_cluster_endpoint() function in your RLS query to achieve the same effect, as having different RLS queries in follower tables.

For example:

.create-or-alter function RLSForCustomersTables() {

let IsProductionCluster = current_cluster_endpoint() == "mycluster.eastus.kusto.windows.net";

let DataForProductionCluster = TempTable | where IsProductionCluster;

let DataForFollowerClusters = TempTable | where not(IsProductionCluster) | extend EmailAddress = "****";

union DataForProductionCluster, DataForFollowerClusters

}

Control permissions on shortcut databases

The RLS policy that you configure on the production database will also take effect in the shortcut databases. You can’t configure different RLS policies on the production and shortcut databases. However, you can use the current_cluster_endpoint() function in your RLS query to achieve the same effect, as having different RLS queries in shortcut tables.

For example:

.create-or-alter function RLSForCustomersTables() {

let IsProductionCluster = current_cluster_endpoint() == "mycluster.eastus.kusto.windows.net";

let DataForProductionCluster = TempTable | where IsProductionCluster;

let DataForFollowerClusters = TempTable | where not(IsProductionCluster) | extend EmailAddress = "****";

union DataForProductionCluster, DataForFollowerClusters

}

More use cases

- A call center support person may identify callers by several digits of their social security number. This number shouldn’t be fully exposed to the support person. An RLS policy can be applied on the table to mask all but the last four digits of the social security number in the result set of any query.