Queries

- 1: Aggregation functions

- 1.1: covariance() (aggregation function)

- 1.2: covarianceif() (aggregation function)

- 1.3: covariancep() (aggregation function)

- 1.4: covariancepif() (aggregation function)

- 1.5: variancepif() (aggregation function)

- 1.6: Aggregation Functions

- 1.7: arg_max() (aggregation function)

- 1.8: arg_min() (aggregation function)

- 1.9: avg() (aggregation function)

- 1.10: avgif() (aggregation function)

- 1.11: binary_all_and() (aggregation function)

- 1.12: binary_all_or() (aggregation function)

- 1.13: binary_all_xor() (aggregation function)

- 1.14: buildschema() (aggregation function)

- 1.15: count_distinct() (aggregation function) - (preview)

- 1.16: count_distinctif() (aggregation function) - (preview)

- 1.17: count() (aggregation function)

- 1.18: countif() (aggregation function)

- 1.19: dcount() (aggregation function)

- 1.20: dcountif() (aggregation function)

- 1.21: hll_if() (aggregation function)

- 1.22: hll_merge() (aggregation function)

- 1.23: hll() (aggregation function)

- 1.24: make_bag_if() (aggregation function)

- 1.25: make_bag() (aggregation function)

- 1.26: make_list_if() (aggregation function)

- 1.27: make_list_with_nulls() (aggregation function)

- 1.28: make_list() (aggregation function)

- 1.29: make_set_if() (aggregation function)

- 1.30: make_set() (aggregation function)

- 1.31: max() (aggregation function)

- 1.32: maxif() (aggregation function)

- 1.33: min() (aggregation function)

- 1.34: minif() (aggregation function)

- 1.35: percentile(), percentiles()

- 1.36: percentilew(), percentilesw()

- 1.37: stdev() (aggregation function)

- 1.38: stdevif() (aggregation function)

- 1.39: stdevp() (aggregation function)

- 1.40: sum() (aggregation function)

- 1.41: sumif() (aggregation function)

- 1.42: take_any() (aggregation function)

- 1.43: take_anyif() (aggregation function)

- 1.44: tdigest_merge() (aggregation functions)

- 1.45: tdigest() (aggregation function)

- 1.46: variance() (aggregation function)

- 1.47: varianceif() (aggregation function)

- 1.48: variancep() (aggregation function)

- 2: Best practices for KQL queries

- 3: Data types

- 3.1: Null values

- 3.2: Scalar data types

- 3.3: The bool data type

- 3.4: The datetime data type

- 3.5: The decimal data type

- 3.6: The dynamic data type

- 3.7: The guid data type

- 3.8: The int data type

- 3.9: The long data type

- 3.10: The real data type

- 3.11: The string data type

- 3.12: The timespan data type

- 4: Entities

- 4.1: Columns

- 4.2: Databases

- 4.3: Entities

- 4.4: Entity names

- 4.5: Entity references

- 4.6: External tables

- 4.7: Fact and dimension tables

- 4.8: Stored functions

- 4.9: Tables

- 4.10: Views

- 5: Functions

- 5.1: detect_anomalous_access_cf_fl()

- 5.2: detect_anomalous_spike_fl()

- 5.3: graph_blast_radius_fl()

- 5.4: graph_exposure_perimeter_fl()

- 5.5: graph_node_centrality_fl()

- 5.6: graph_path_discovery_fl()

- 5.7: bartlett_test_fl()

- 5.8: binomial_test_fl()

- 5.9: comb_fl()

- 5.10: dbscan_dynamic_fl()

- 5.11: dbscan_fl()

- 5.12: detect_anomalous_new_entity_fl()

- 5.13: factorial_fl()

- 5.14: Functions

- 5.15: Functions library

- 5.16: geoip_fl()

- 5.17: get_packages_version_fl()

- 5.18: kmeans_dynamic_fl()

- 5.19: kmeans_fl()

- 5.20: ks_test_fl()

- 5.21: levene_test_fl()

- 5.22: log_reduce_fl()

- 5.23: log_reduce_full_fl()

- 5.24: log_reduce_predict_fl()

- 5.25: log_reduce_predict_full_fl()

- 5.26: log_reduce_train_fl()

- 5.27: mann_whitney_u_test_fl()

- 5.28: normality_test_fl()

- 5.29: pair_probabilities_fl()

- 5.30: pairwise_dist_fl()

- 5.31: percentiles_linear_fl()

- 5.32: perm_fl()

- 5.33: plotly_anomaly_fl()

- 5.34: plotly_gauge_fl()

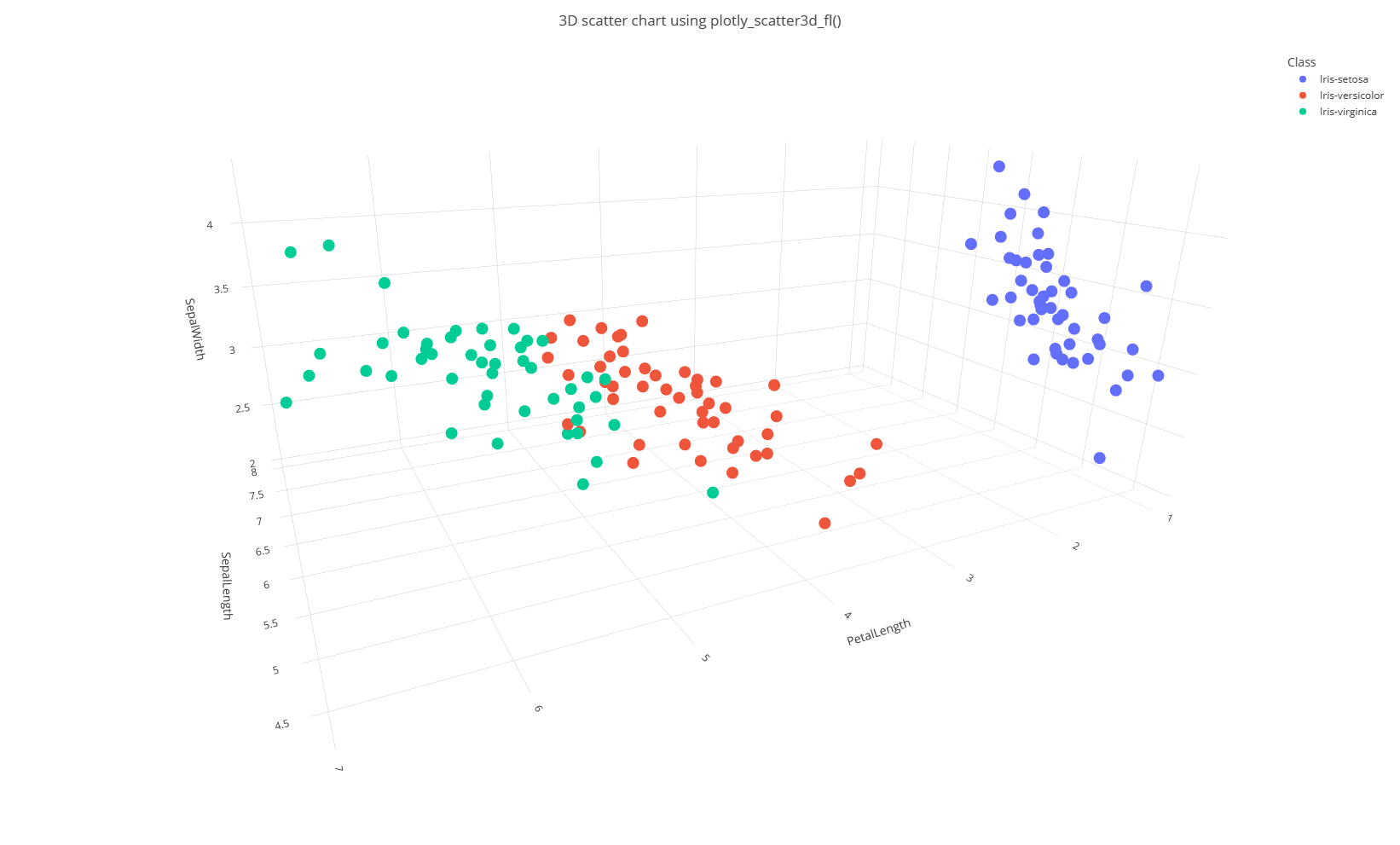

- 5.35: plotly_scatter3d_fl()

- 5.36: predict_fl()

- 5.37: predict_onnx_fl()

- 5.38: quantize_fl()

- 5.39: series_clean_anomalies_fl()

- 5.40: series_cosine_similarity_fl()

- 5.41: series_dbl_exp_smoothing_fl()

- 5.42: series_dot_product_fl()

- 5.43: series_downsample_fl()

- 5.44: series_exp_smoothing_fl()

- 5.45: series_fbprophet_forecast_fl()

- 5.46: series_fit_lowess_fl()

- 5.47: series_fit_poly_fl()

- 5.48: series_lag_fl()

- 5.49: series_metric_fl()

- 5.50: series_monthly_decompose_anomalies_fl()

- 5.51: series_moving_avg_fl()

- 5.52: series_moving_var_fl()

- 5.53: series_mv_ee_anomalies_fl()

- 5.54: series_mv_if_anomalies_fl()

- 5.55: series_mv_oc_anomalies_fl()

- 5.56: series_rate_fl()

- 5.57: series_rolling_fl()

- 5.58: series_shapes_fl()

- 5.59: series_uv_anomalies_fl()

- 5.60: series_uv_change_points_fl()

- 5.61: time_weighted_avg_fl()

- 5.62: time_weighted_avg2_fl()

- 5.63: time_weighted_val_fl()

- 5.64: time_window_rolling_avg_fl()

- 5.65: two_sample_t_test_fl()

- 5.66: User-defined functions

- 5.67: wilcoxon_test_fl()

- 6: Geospatial

- 6.1: geo_closest_point_on_line()

- 6.2: geo_closest_point_on_polygon()

- 6.3: geo_from_wkt()

- 6.4: geo_line_interpolate_point()

- 6.5: geo_line_locate_point()

- 6.6: Geospatial grid system

- 6.7: Geospatial joins

- 6.8: geo_angle()

- 6.9: geo_azimuth()

- 6.10: geo_distance_2points()

- 6.11: geo_distance_point_to_line()

- 6.12: geo_distance_point_to_polygon()

- 6.13: geo_geohash_neighbors()

- 6.14: geo_geohash_to_central_point()

- 6.15: geo_geohash_to_polygon()

- 6.16: geo_h3cell_children()

- 6.17: geo_h3cell_level()

- 6.18: geo_h3cell_neighbors()

- 6.19: geo_h3cell_parent()

- 6.20: geo_h3cell_rings()

- 6.21: geo_h3cell_to_central_point()

- 6.22: geo_h3cell_to_polygon()

- 6.23: geo_intersection_2lines()

- 6.24: geo_intersection_2polygons()

- 6.25: geo_intersection_line_with_polygon()

- 6.26: geo_intersects_2lines()

- 6.27: geo_intersects_2polygons()

- 6.28: geo_intersects_line_with_polygon()

- 6.29: geo_line_buffer()

- 6.30: geo_line_centroid()

- 6.31: geo_line_densify()

- 6.32: geo_line_length()

- 6.33: geo_line_simplify()

- 6.34: geo_line_to_s2cells()

- 6.35: geo_point_buffer()

- 6.36: geo_point_in_circle()

- 6.37: geo_point_in_polygon()

- 6.38: geo_point_to_geohash()

- 6.39: geo_point_to_h3cell()

- 6.40: geo_point_to_s2cell()

- 6.41: geo_polygon_area()

- 6.42: geo_polygon_buffer()

- 6.43: geo_polygon_centroid()

- 6.44: geo_polygon_densify()

- 6.45: geo_polygon_perimeter()

- 6.46: geo_polygon_simplify()

- 6.47: geo_polygon_to_h3cells()

- 6.48: geo_polygon_to_s2cells()

- 6.49: geo_s2cell_neighbors()

- 6.50: geo_s2cell_to_central_point()

- 6.51: geo_s2cell_to_polygon()

- 6.52: geo_simplify_polygons_array()

- 6.53: geo_union_lines_array()

- 6.54: geo_union_polygons_array()

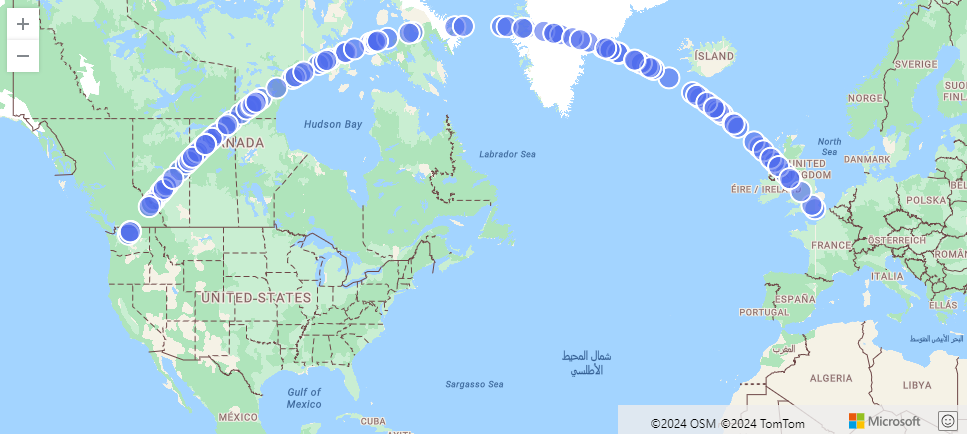

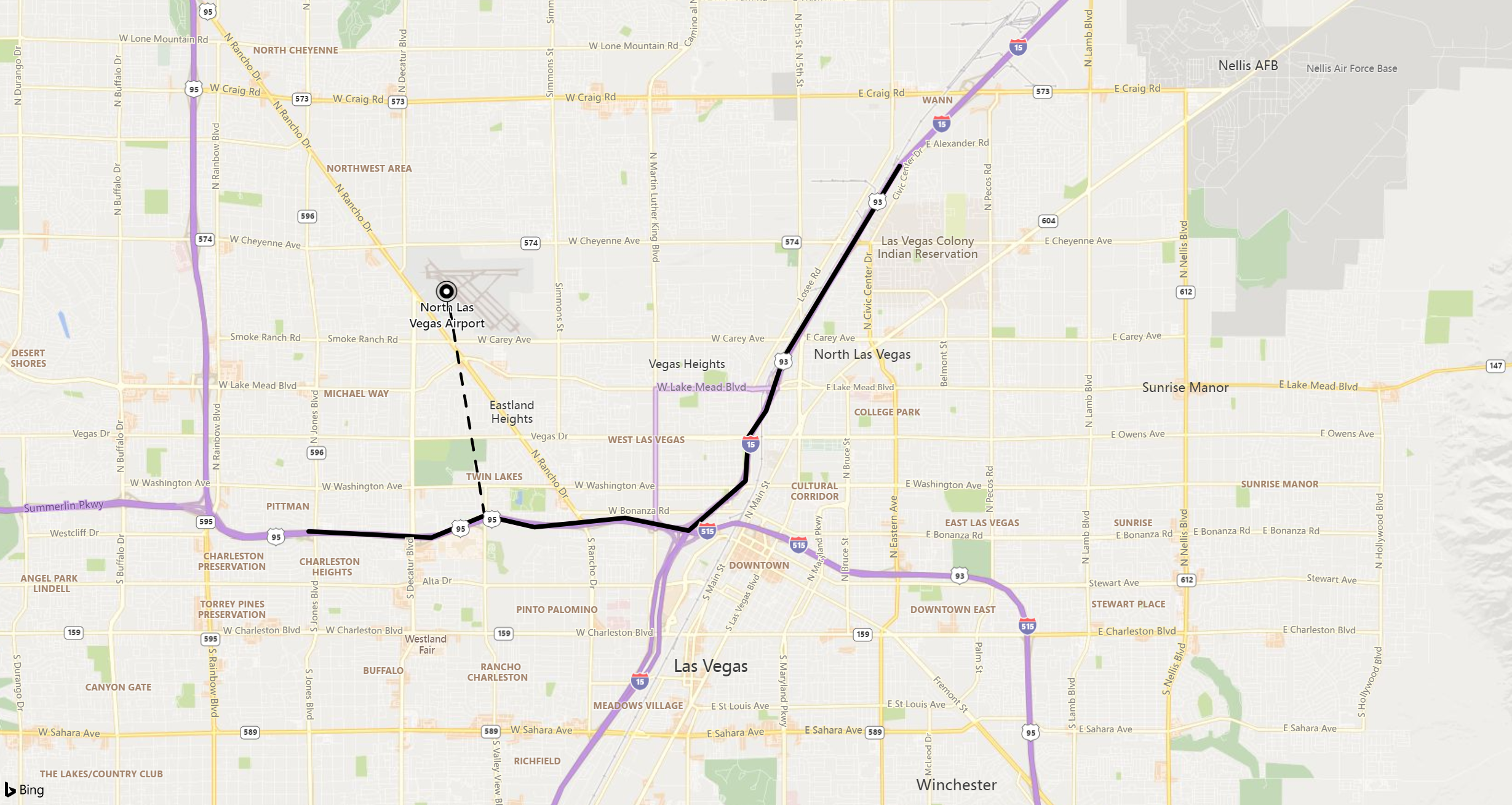

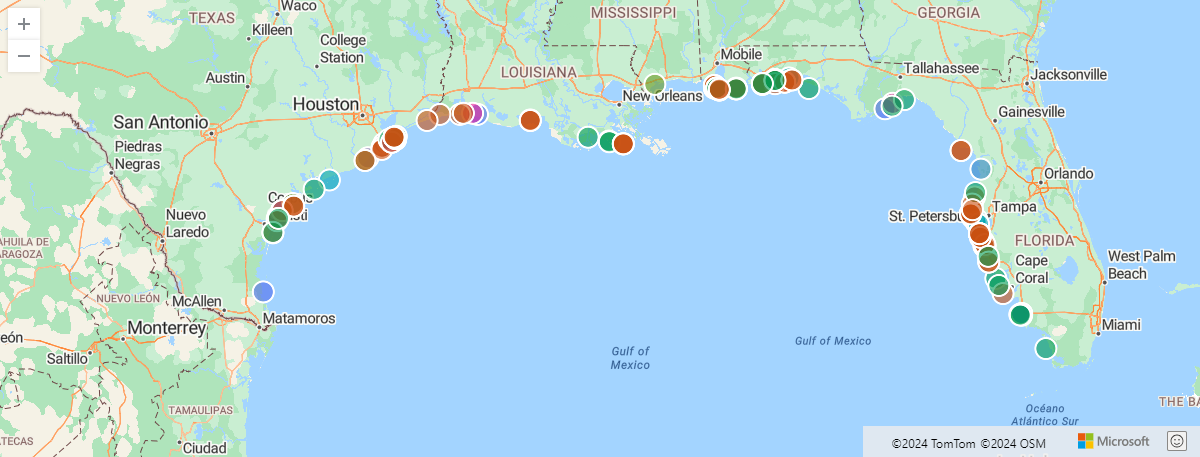

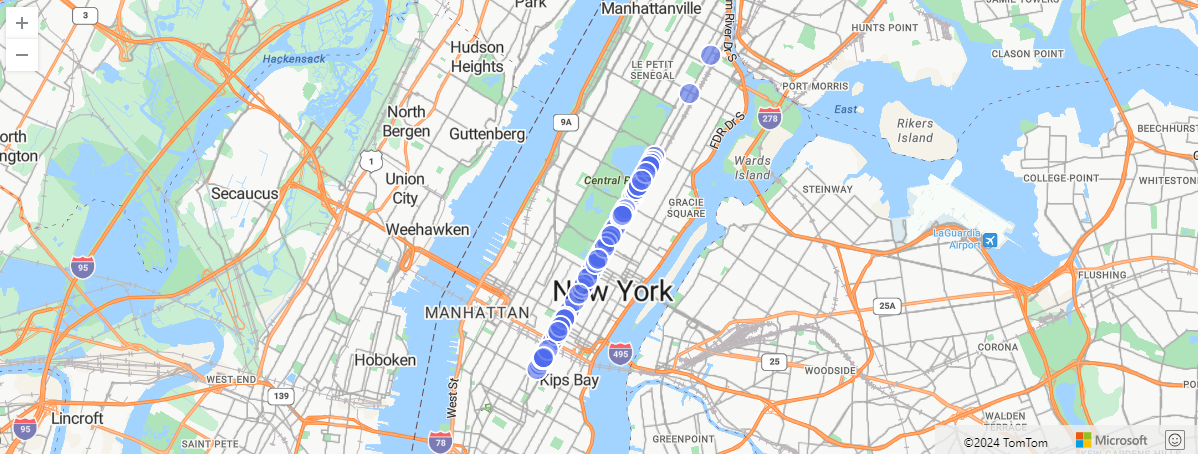

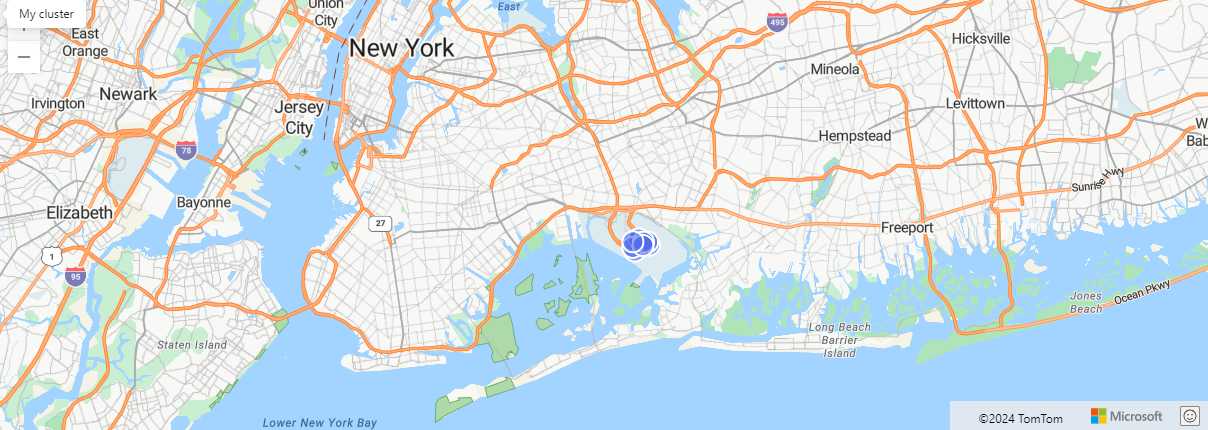

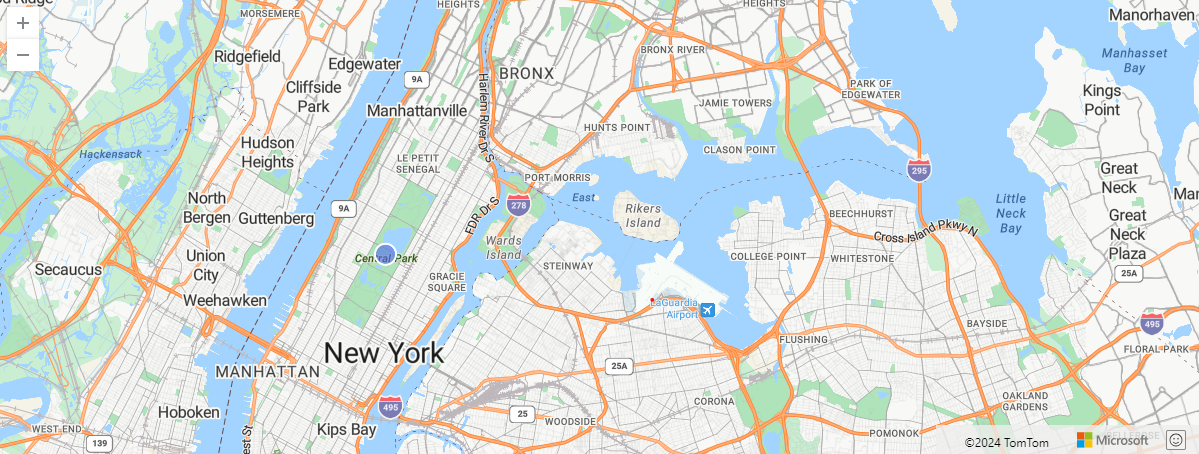

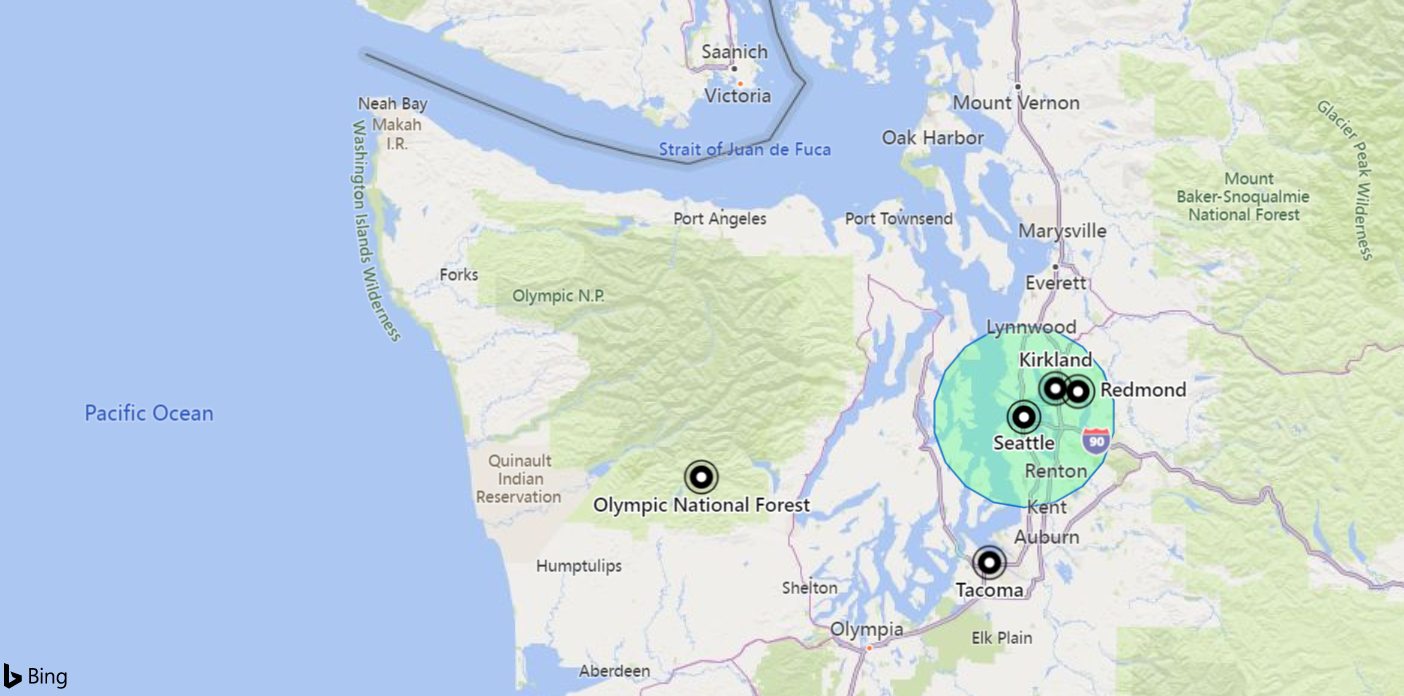

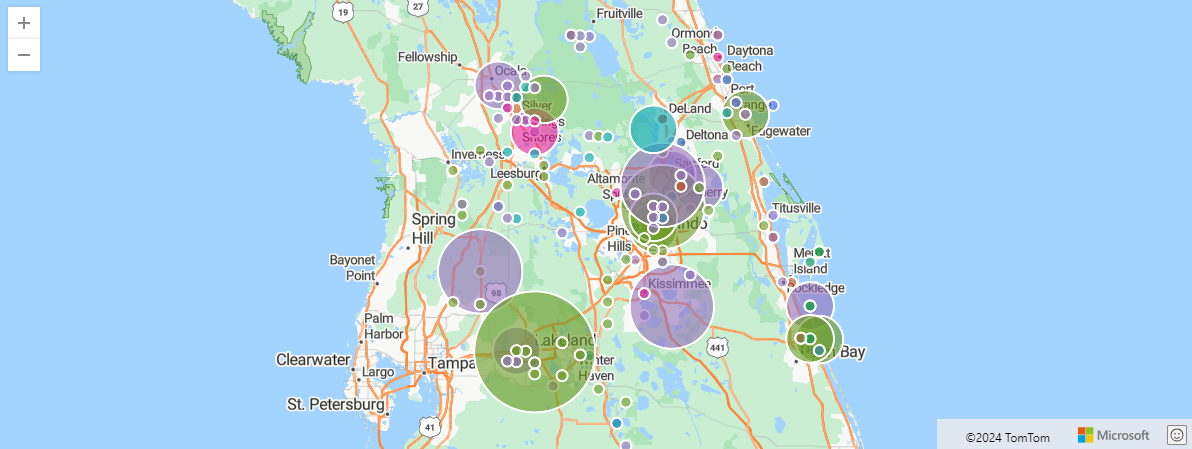

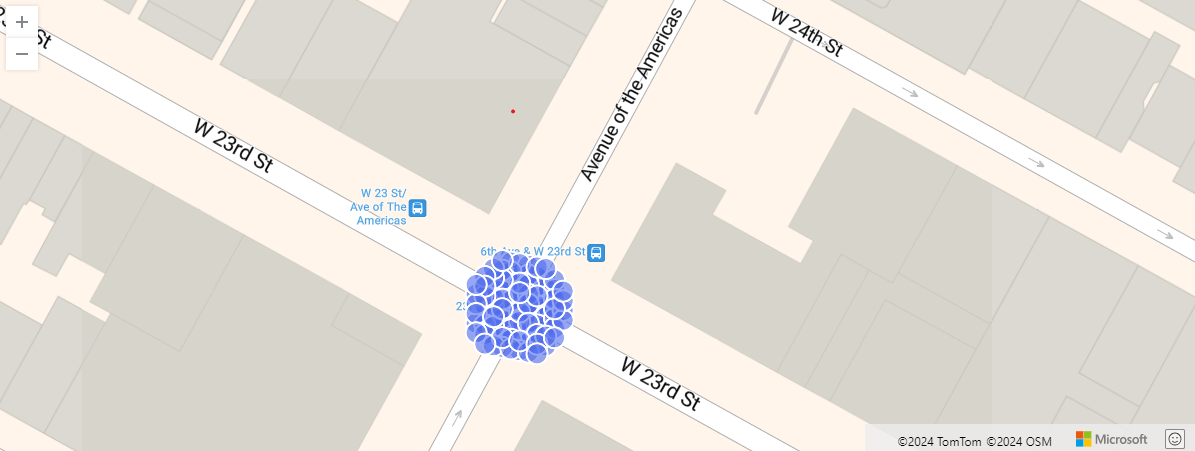

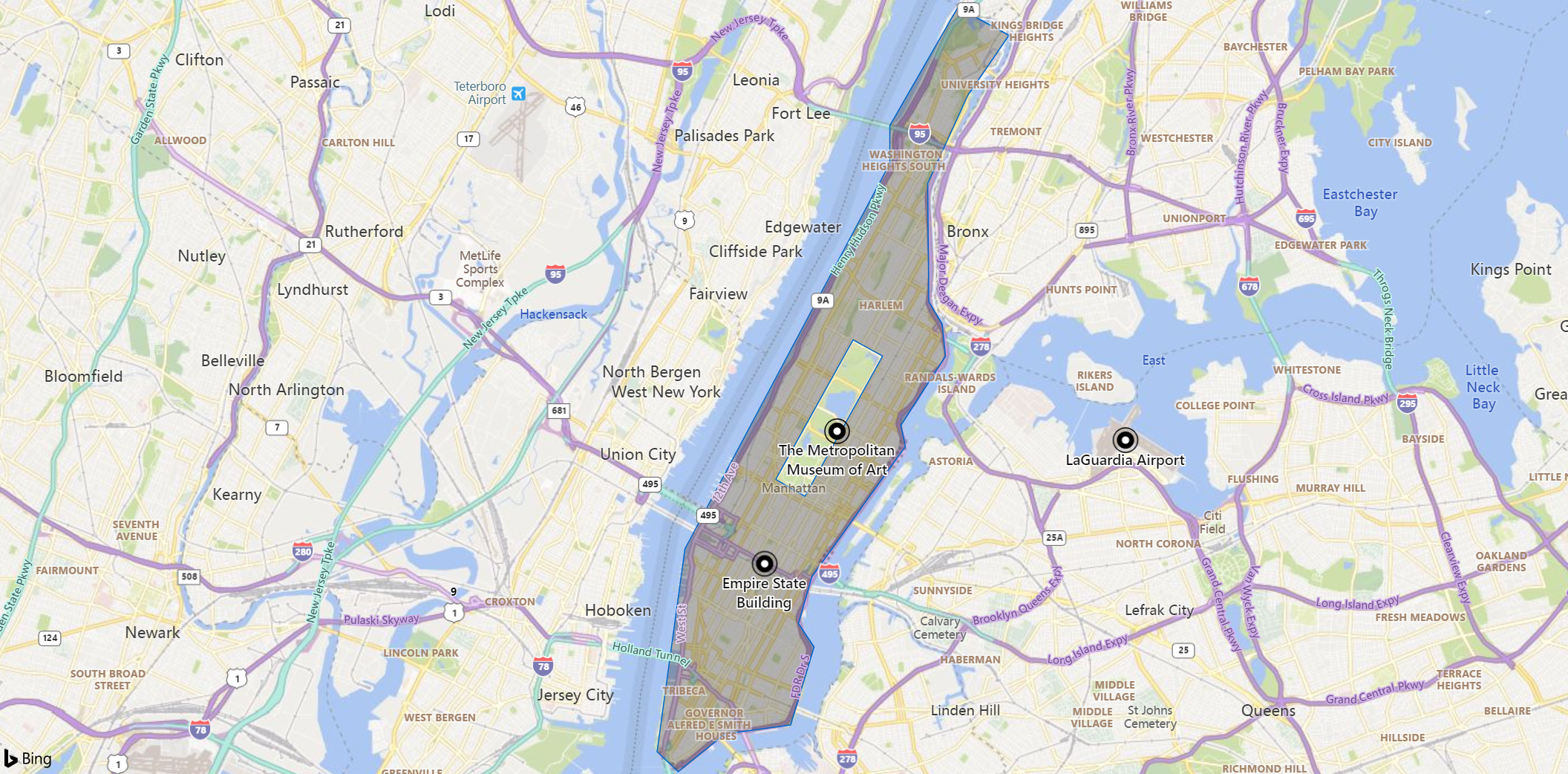

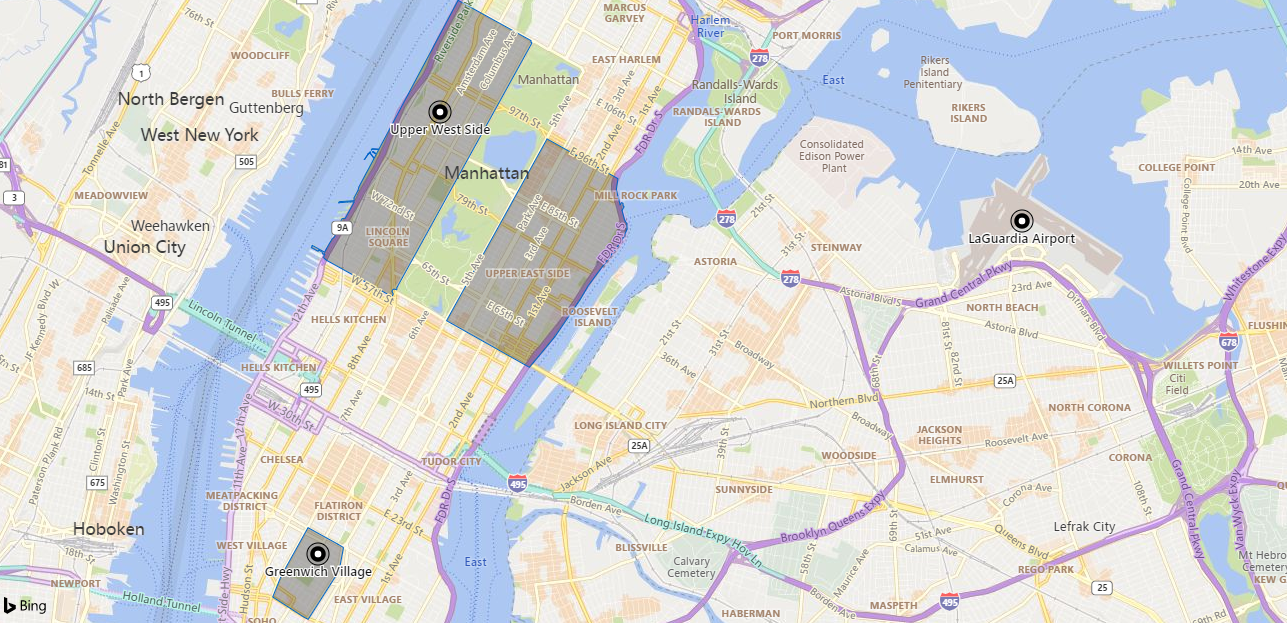

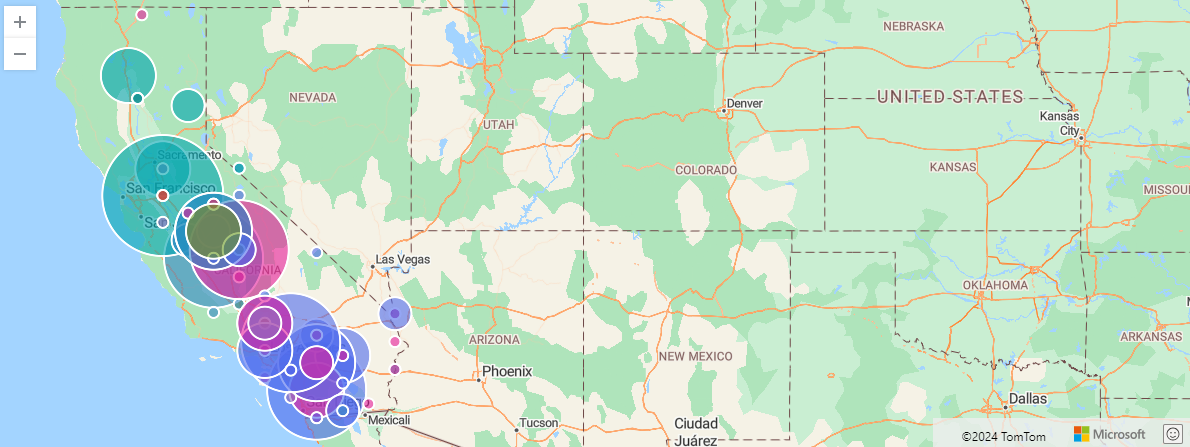

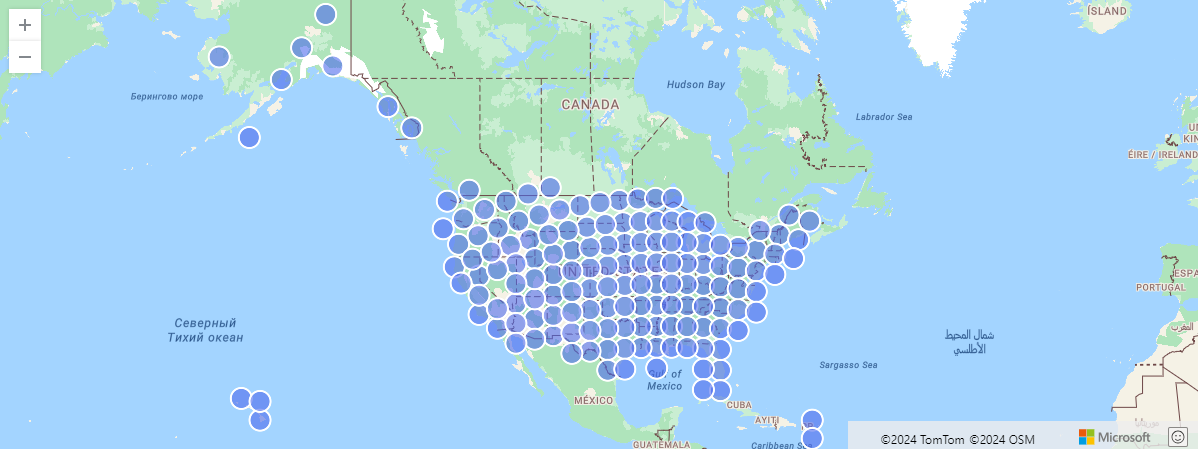

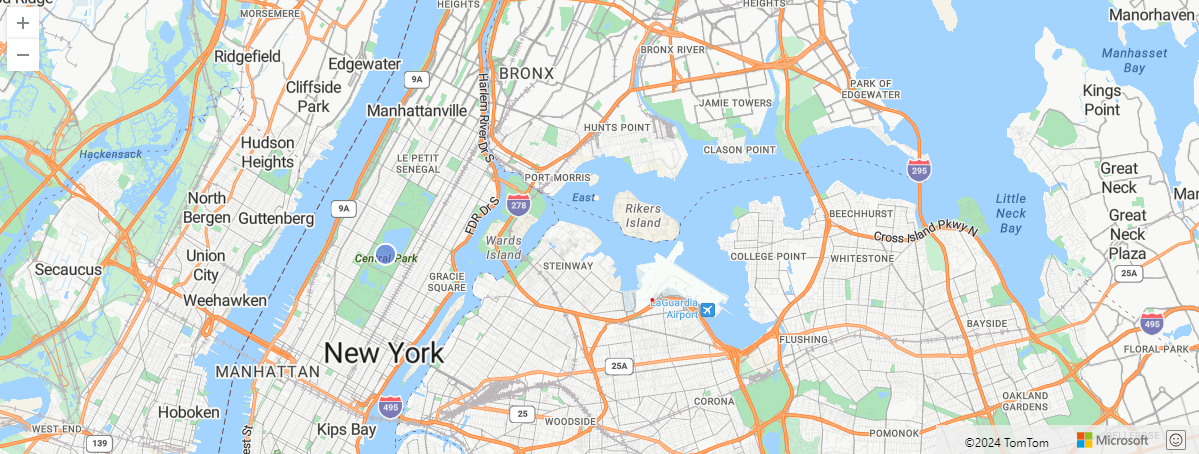

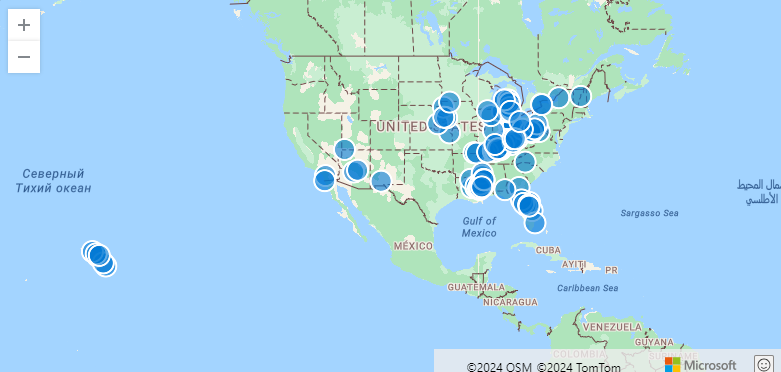

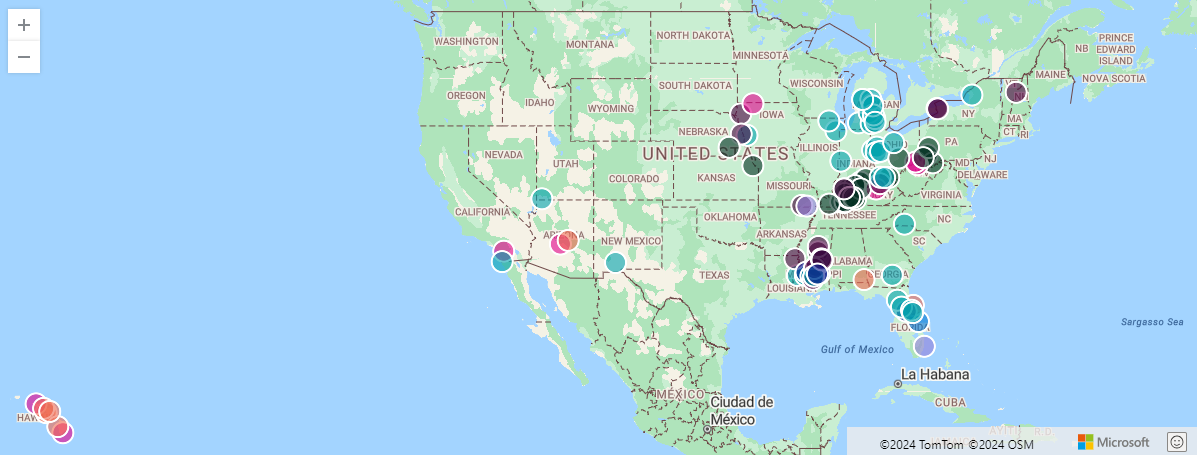

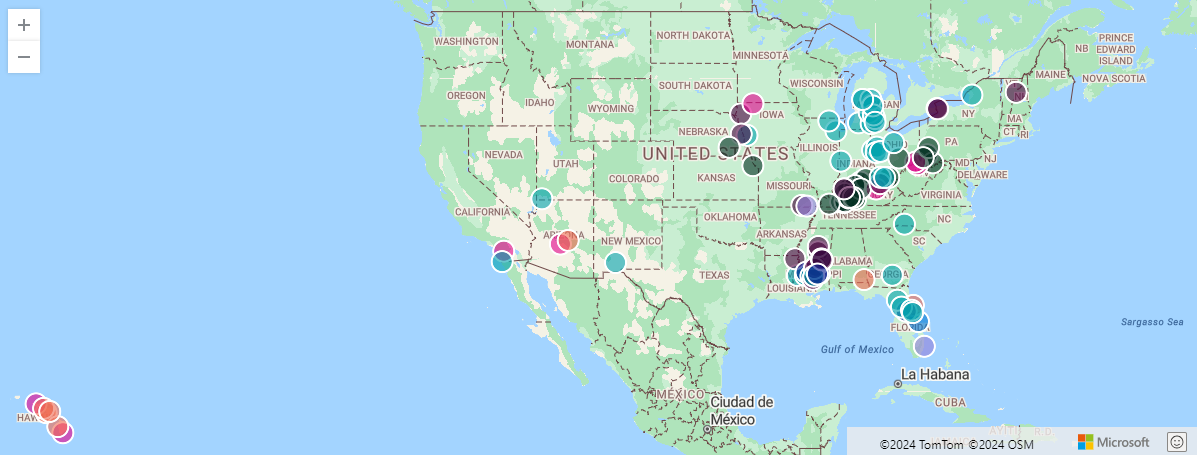

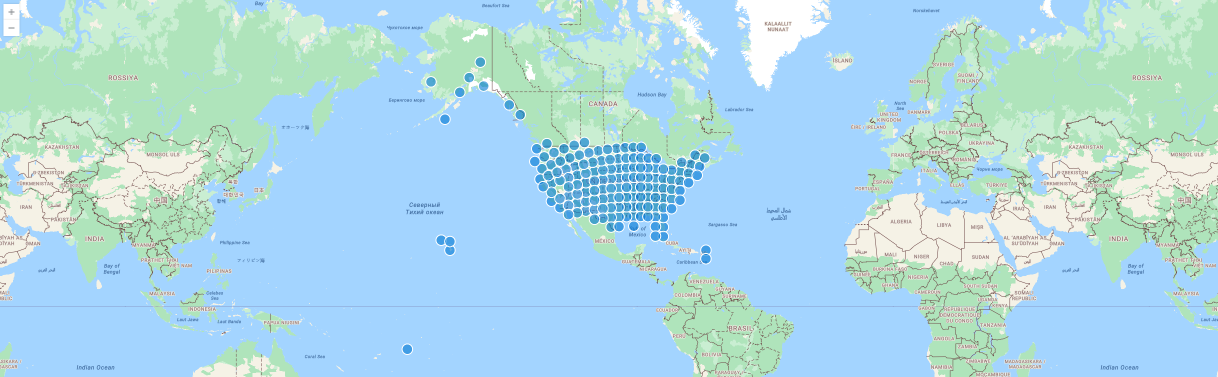

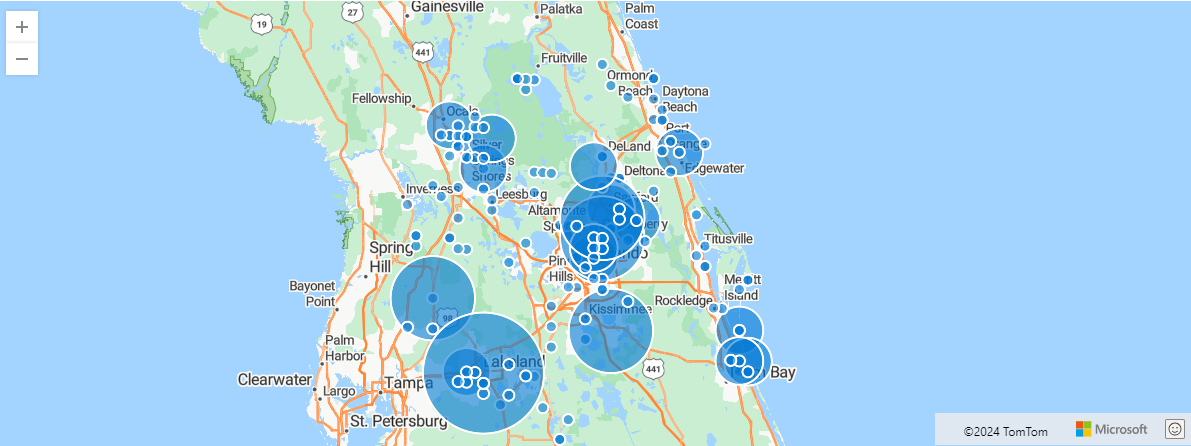

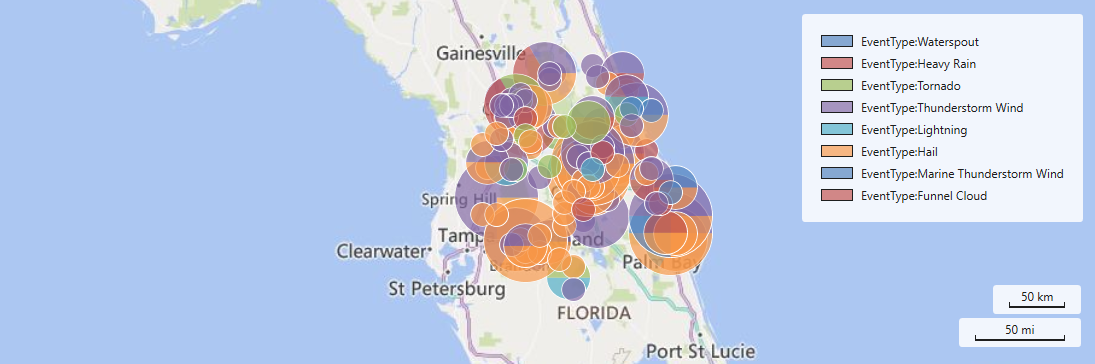

- 6.55: Geospatial data visualizations

- 6.56: Geospatial grid system

- 7: Graph operators

- 7.1: Best practices for Kusto Query Language (KQL) graph semantics

- 7.2: Graph operators

- 7.3: graph-mark-components operator (Preview)

- 7.4: graph-match operator

- 7.5: graph-shortest-paths Operator (Preview)

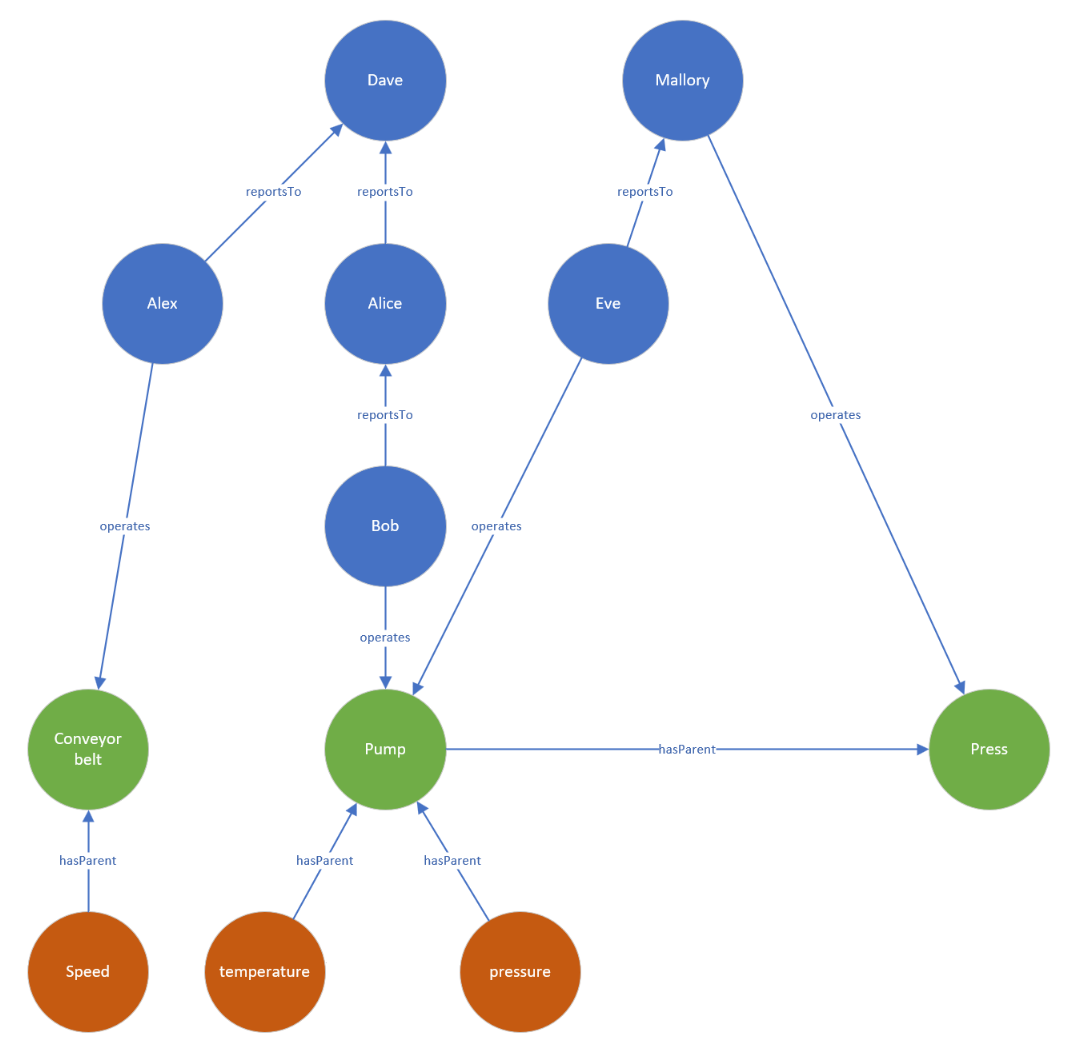

- 7.6: graph-to-table operator

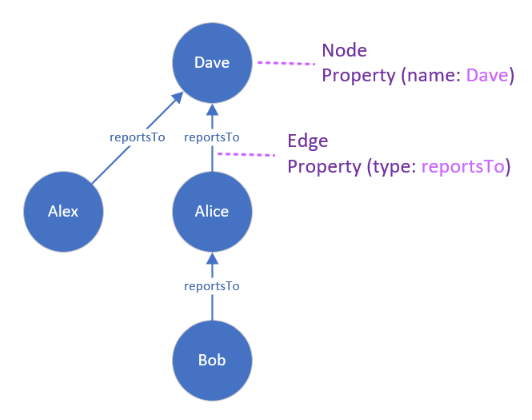

- 7.7: Kusto Query Language (KQL) graph semantics overview

- 7.8: make-graph operator

- 7.9: Scenarios for using Kusto Query Language (KQL) graph semantics

- 8: Limits and Errors

- 8.1: Query Resource Consumption

- 8.2: Query consistency

- 8.3: Query limits

- 8.4: Partial query failures

- 8.4.1: Kusto query result set exceeds internal limit

- 8.4.2: Overflows

- 8.4.3: Runaway queries

- 9: Plugins

- 9.1: geo_line_lookup plugin

- 9.2: geo_polygon_lookup plugin

- 9.3: Data reshaping plugins

- 9.3.1: bag_unpack plugin

- 9.3.2: narrow plugin

- 9.3.3: pivot plugin

- 9.4: General plugins

- 9.4.1: dcount_intersect plugin

- 9.4.2: infer_storage_schema plugin

- 9.4.3: infer_storage_schema_with_suggestions plugin

- 9.4.4: ipv4_lookup plugin

- 9.4.5: ipv6_lookup plugin

- 9.4.6: preview plugin

- 9.4.7: schema_merge plugin

- 9.5: Language plugins

- 9.5.1: Python plugin

- 9.5.2: Python plugin packages

- 9.5.3: R plugin (Preview)

- 9.6: Machine learning plugins

- 9.6.1: autocluster plugin

- 9.6.2: basket plugin

- 9.6.3: diffpatterns plugin

- 9.6.4: diffpatterns_text plugin

- 9.7: Query connectivity plugins

- 9.7.1: ai_chat_completion plugin (preview)

- 9.7.2: ai_chat_completion_prompt plugin (preview)

- 9.7.3: ai_embeddings plugin (preview)

- 9.7.4: ai_embed_text plugin (Preview)

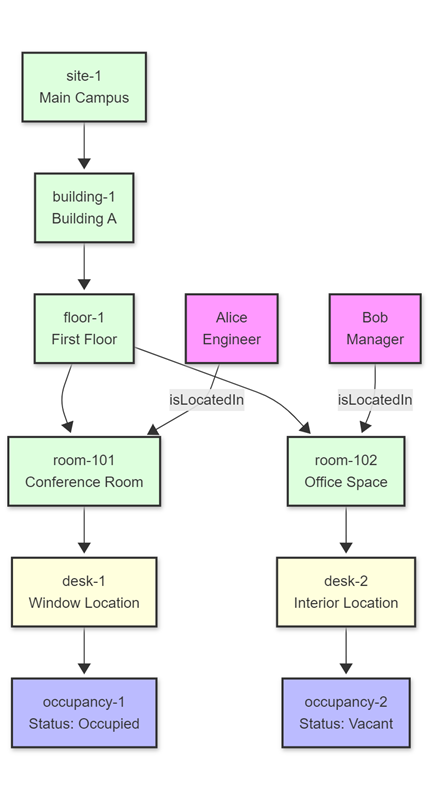

- 9.7.5: azure_digital_twins_query_request plugin

- 9.7.6: cosmosdb_sql_request plugin

- 9.7.7: http_request plugin

- 9.7.8: http_request_post plugin

- 9.7.9: mysql_request plugin

- 9.7.10: postgresql_request plugin

- 9.7.11: sql_request plugin

- 9.8: User and sequence analytics plugins

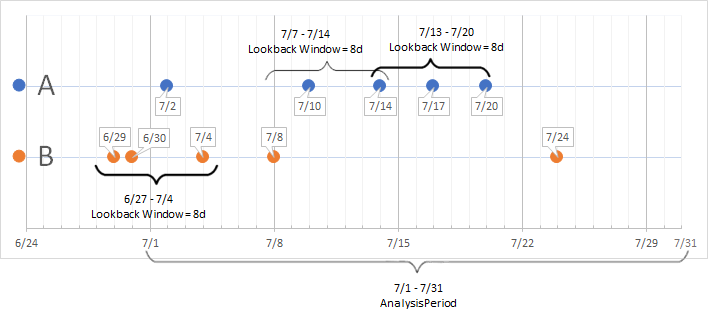

- 9.8.1: active_users_count plugin

- 9.8.2: activity_counts_metrics plugin

- 9.8.3: activity_engagement plugin

- 9.8.4: activity_metrics plugin

- 9.8.5: funnel_sequence plugin

- 9.8.6: funnel_sequence_completion plugin

- 9.8.7: new_activity_metrics plugin

- 9.8.8: rolling_percentile plugin

- 9.8.9: rows_near plugin

- 9.8.10: sequence_detect plugin

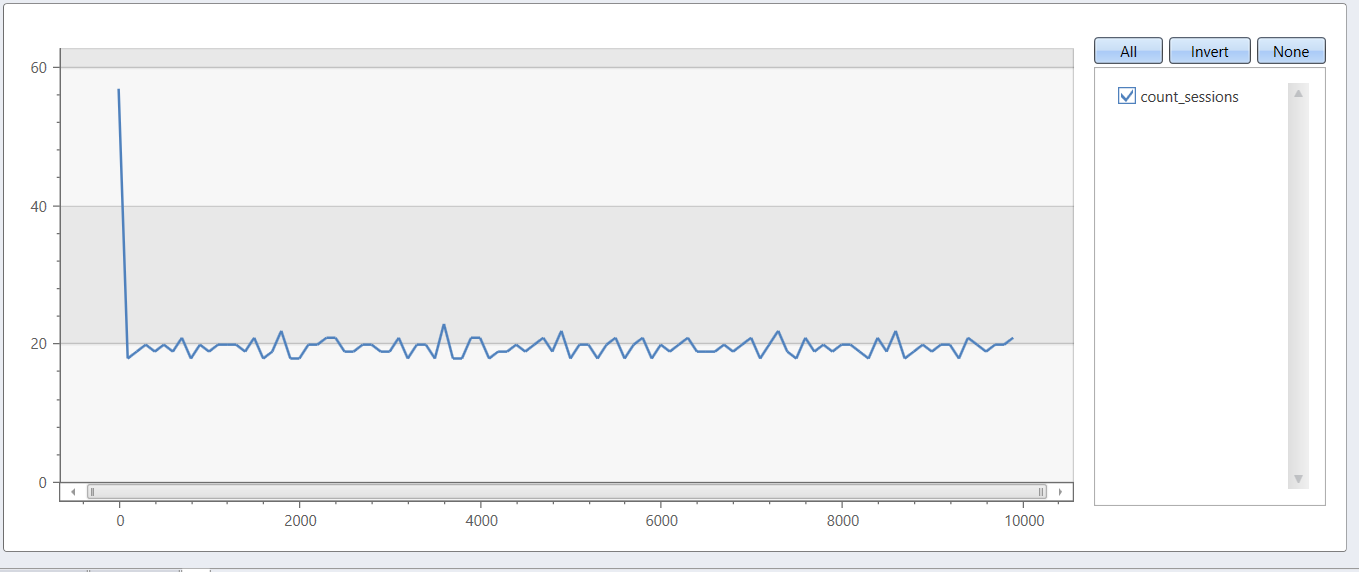

- 9.8.11: session_count plugin

- 9.8.12: sliding_window_counts plugin

- 9.8.13: User Analytics

- 10: Query statements

- 10.1: Alias statement

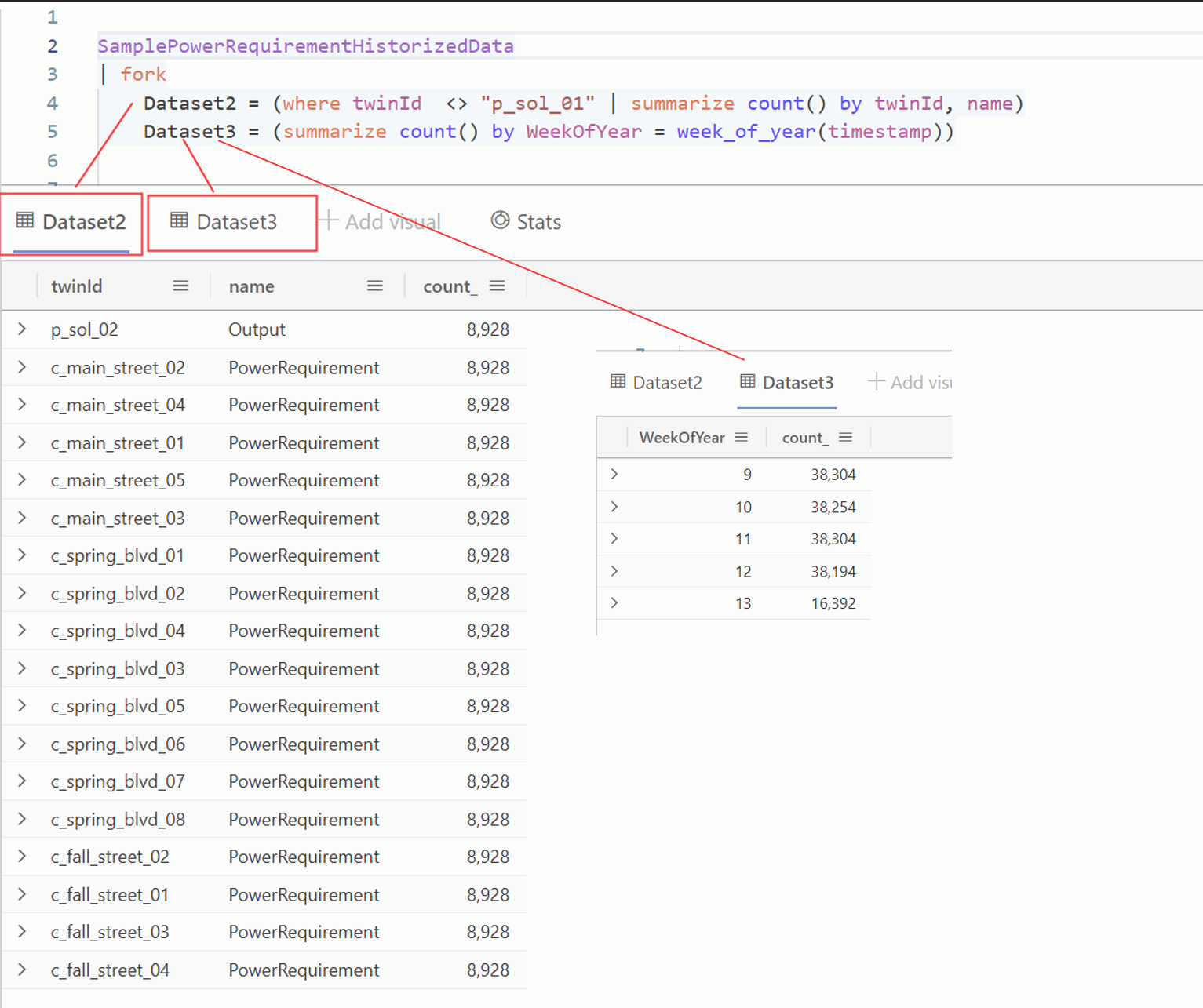

- 10.2: Batches

- 10.3: Let statement

- 10.4: Pattern statement

- 10.5: Query parameters declaration statement

- 10.6: Query statements

- 10.7: Restrict statement

- 10.8: Set statement

- 10.9: Tabular expression statements

- 11: Reference

- 11.1: T-SQL

- 11.2: JSONPath syntax

- 11.3: KQL docs navigation guide

- 11.4: Regex syntax

- 11.5: Splunk to Kusto map

- 11.6: SQL to Kusto query translation

- 11.7: Timezone

- 12: Scalar functions

- 12.1: column_names_of()

- 12.2: abs()

- 12.3: acos()

- 12.4: ago()

- 12.5: around() function

- 12.6: array_concat()

- 12.7: array_iff()

- 12.8: array_index_of()

- 12.9: array_length()

- 12.10: array_reverse()

- 12.11: array_rotate_left()

- 12.12: array_rotate_right()

- 12.13: array_shift_left()

- 12.14: array_shift_right()

- 12.15: array_slice()

- 12.16: array_sort_asc()

- 12.17: array_sort_desc()

- 12.18: array_split()

- 12.19: array_sum()

- 12.20: asin()

- 12.21: assert()

- 12.22: atan()

- 12.23: atan2()

- 12.24: bag_has_key()

- 12.25: bag_keys()

- 12.26: bag_merge()

- 12.27: bag_pack_columns()

- 12.28: bag_pack()

- 12.29: bag_remove_keys()

- 12.30: bag_set_key()

- 12.31: bag_zip()

- 12.32: base64_decode_toarray()

- 12.33: base64_decode_toguid()

- 12.34: base64_decode_tostring()

- 12.35: base64_encode_fromarray()

- 12.36: base64_encode_fromguid()

- 12.37: base64_encode_tostring()

- 12.38: beta_cdf()

- 12.39: beta_inv()

- 12.40: beta_pdf()

- 12.41: bin_at()

- 12.42: bin_auto()

- 12.43: bin()

- 12.44: binary_and()

- 12.45: binary_not()

- 12.46: binary_or()

- 12.47: binary_shift_left()

- 12.48: binary_shift_right()

- 12.49: binary_xor()

- 12.50: bitset_count_ones()

- 12.51: case()

- 12.52: ceiling()

- 12.53: coalesce()

- 12.54: column_ifexists()

- 12.55: convert_angle()

- 12.56: convert_energy()

- 12.57: convert_force()

- 12.58: convert_length()

- 12.59: convert_mass()

- 12.60: convert_speed()

- 12.61: convert_temperature()

- 12.62: convert_volume()

- 12.63: cos()

- 12.64: cot()

- 12.65: countof()

- 12.66: current_cluster_endpoint()

- 12.67: current_database()

- 12.68: current_principal_details()

- 12.69: current_principal_is_member_of()

- 12.70: current_principal()

- 12.71: cursor_after()

- 12.72: cursor_before_or_at()

- 12.73: cursor_current()

- 12.74: datetime_add()

- 12.75: datetime_diff()

- 12.76: datetime_list_timezones()

- 12.77: datetime_local_to_utc()

- 12.78: datetime_part()

- 12.79: datetime_utc_to_local()

- 12.80: dayofmonth()

- 12.81: dayofweek()

- 12.82: dayofyear()

- 12.83: dcount_hll()

- 12.84: degrees()

- 12.85: dynamic_to_json()

- 12.86: endofday()

- 12.87: endofmonth()

- 12.88: endofweek()

- 12.89: endofyear()

- 12.90: erf()

- 12.91: erfc()

- 12.92: estimate_data_size()

- 12.93: exp()

- 12.94: exp10()

- 12.95: exp2()

- 12.96: extent_id()

- 12.97: extent_tags()

- 12.98: extract_all()

- 12.99: extract_json()

- 12.100: extract()

- 12.101: format_bytes()

- 12.102: format_datetime()

- 12.103: format_ipv4_mask()

- 12.104: format_ipv4()

- 12.105: format_timespan()

- 12.106: gamma()

- 12.107: geo_info_from_ip_address()

- 12.108: gettype()

- 12.109: getyear()

- 12.110: gzip_compress_to_base64_string

- 12.111: gzip_decompress_from_base64_string()

- 12.112: has_any_ipv4_prefix()

- 12.113: has_any_ipv4()

- 12.114: has_ipv4_prefix()

- 12.115: has_ipv4()

- 12.116: hash_combine()

- 12.117: hash_many()

- 12.118: hash_md5()

- 12.119: hash_sha1()

- 12.120: hash_sha256()

- 12.121: hash_xxhash64()

- 12.122: hash()

- 12.123: hll_merge()

- 12.124: hourofday()

- 12.125: iff()

- 12.126: indexof_regex()

- 12.127: indexof()

- 12.128: ingestion_time()

- 12.129: ipv4_compare()

- 12.130: ipv4_is_in_any_range()

- 12.131: ipv4_is_in_range()

- 12.132: ipv4_is_match()

- 12.133: ipv4_is_private()

- 12.134: ipv4_netmask_suffix()

- 12.135: ipv4_range_to_cidr_list()

- 12.136: ipv6_compare()

- 12.137: ipv6_is_in_any_range()

- 12.138: ipv6_is_in_range()

- 12.139: ipv6_is_match()

- 12.140: isascii()

- 12.141: isempty()

- 12.142: isfinite()

- 12.143: isinf()

- 12.144: isnan()

- 12.145: isnotempty()

- 12.146: isnotnull()

- 12.147: isnull()

- 12.148: isutf8()

- 12.149: jaccard_index()

- 12.150: log()

- 12.151: log10()

- 12.152: log2()

- 12.153: loggamma()

- 12.154: make_datetime()

- 12.155: make_timespan()

- 12.156: max_of()

- 12.157: merge_tdigest()

- 12.158: min_of()

- 12.159: monthofyear()

- 12.160: new_guid()

- 12.161: not()

- 12.162: now()

- 12.163: pack_all()

- 12.164: pack_array()

- 12.165: parse_command_line()

- 12.166: parse_csv()

- 12.167: parse_ipv4_mask()

- 12.168: parse_ipv4()

- 12.169: parse_ipv6_mask()

- 12.170: parse_ipv6()

- 12.171: parse_json() function

- 12.172: parse_path()

- 12.173: parse_url()

- 12.174: parse_urlquery()

- 12.175: parse_user_agent()

- 12.176: parse_version()

- 12.177: parse_xml()

- 12.178: percentile_array_tdigest()

- 12.179: percentile_tdigest()

- 12.180: percentrank_tdigest()

- 12.181: pi()

- 12.182: pow()

- 12.183: punycode_domain_from_string

- 12.184: punycode_domain_to_string

- 12.185: punycode_from_string

- 12.186: punycode_to_string

- 12.187: radians()

- 12.188: rand()

- 12.189: range()

- 12.190: rank_tdigest()

- 12.191: regex_quote()

- 12.192: repeat()

- 12.193: replace_regex()

- 12.194: replace_string()

- 12.195: replace_strings()

- 12.196: reverse()

- 12.197: round()

- 12.198: Scalar Functions

- 12.199: set_difference()

- 12.200: set_has_element()

- 12.201: set_intersect()

- 12.202: set_union()

- 12.203: sign()

- 12.204: sin()

- 12.205: split()

- 12.206: sqrt()

- 12.207: startofday()

- 12.208: startofmonth()

- 12.209: startofweek()

- 12.210: startofyear()

- 12.211: strcat_array()

- 12.212: strcat_delim()

- 12.213: strcat()

- 12.214: strcmp()

- 12.215: string_size()

- 12.216: strlen()

- 12.217: strrep()

- 12.218: substring()

- 12.219: tan()

- 12.220: The has_any_index operator

- 12.221: tobool()

- 12.222: todatetime()

- 12.223: todecimal()

- 12.224: toguid()

- 12.225: tohex()

- 12.226: toint()

- 12.227: tolong()

- 12.228: tolower()

- 12.229: toreal()

- 12.230: tostring()

- 12.231: totimespan()

- 12.232: toupper()

- 12.233: translate()

- 12.234: treepath()

- 12.235: trim_end()

- 12.236: trim_start()

- 12.237: trim()

- 12.238: unicode_codepoints_from_string()

- 12.239: unicode_codepoints_to_string()

- 12.240: unixtime_microseconds_todatetime()

- 12.241: unixtime_milliseconds_todatetime()

- 12.242: unixtime_nanoseconds_todatetime()

- 12.243: unixtime_seconds_todatetime()

- 12.244: url_decode()

- 12.245: url_encode_component()

- 12.246: url_encode()

- 12.247: week_of_year()

- 12.248: welch_test()

- 12.249: zip()

- 12.250: zlib_compress_to_base64_string

- 12.251: zlib_decompress_from_base64_string()

- 13: Scalar operators

- 13.1: Bitwise (binary) operators

- 13.2: Datetime / timespan arithmetic

- 13.3: Logical (binary) operators

- 13.4: Numerical operators

- 13.5: Between operators

- 13.5.1: The !between operator

- 13.5.2: The between operator

- 13.6: in operators

- 13.6.1: The case-insensitive !in~ string operator

- 13.6.2: The case-insensitive in~ string operator

- 13.6.3: The case-sensitive !in string operator

- 13.6.4: The case-sensitive in string operator

- 13.7: String operators

- 13.7.1: matches regex operator

- 13.7.2: String operators

- 13.7.3: The case-insensitive !~ (not equals) string operator

- 13.7.4: The case-insensitive !contains string operator

- 13.7.5: The case-insensitive !endswith string operator

- 13.7.6: The case-insensitive !has string operators

- 13.7.7: The case-insensitive !hasprefix string operator

- 13.7.8: The case-insensitive !hassuffix string operator

- 13.7.9: The case-insensitive !in~ string operator

- 13.7.10: The case-insensitive !startswith string operators

- 13.7.11: The case-insensitive =~ (equals) string operator

- 13.7.12: The case-insensitive contains string operator

- 13.7.13: The case-insensitive endswith string operator

- 13.7.14: The case-insensitive has string operator

- 13.7.15: The case-insensitive has_all string operator

- 13.7.16: The case-insensitive has_any string operator

- 13.7.17: The case-insensitive hasprefix string operator

- 13.7.18: The case-insensitive hassuffix string operator

- 13.7.19: The case-insensitive in~ string operator

- 13.7.20: The case-insensitive startswith string operator

- 13.7.21: The case-sensitive != (not equals) string operator

- 13.7.22: The case-sensitive !contains_cs string operator

- 13.7.23: The case-sensitive !endswith_cs string operator

- 13.7.24: The case-sensitive !has_cs string operator

- 13.7.25: The case-sensitive !hasprefix_cs string operator

- 13.7.26: The case-sensitive !hassuffix_cs string operator

- 13.7.27: The case-sensitive !in string operator

- 13.7.28: The case-sensitive !startswith_cs string operator

- 13.7.29: The case-sensitive == (equals) string operator

- 13.7.30: The case-sensitive contains_cs string operator

- 13.7.31: The case-sensitive endswith_cs string operator

- 13.7.32: The case-sensitive has_cs string operator

- 13.7.33: The case-sensitive hasprefix_cs string operator

- 13.7.34: The case-sensitive hassuffix_cs string operator

- 13.7.35: The case-sensitive in string operator

- 13.7.36: The case-sensitive startswith string operator

- 14: Special functions

- 14.1: graph function

- 14.2: cluster()

- 14.3: Cross-cluster and cross-database queries

- 14.4: database()

- 14.5: external_table()

- 14.6: materialize()

- 14.7: materialized_view()

- 14.8: Query results cache

- 14.9: stored_query_result()

- 14.10: table()

- 14.11: toscalar()

- 15: Tabular operators

- 15.1: Join operator

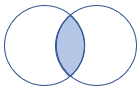

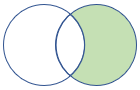

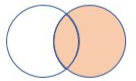

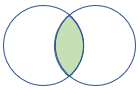

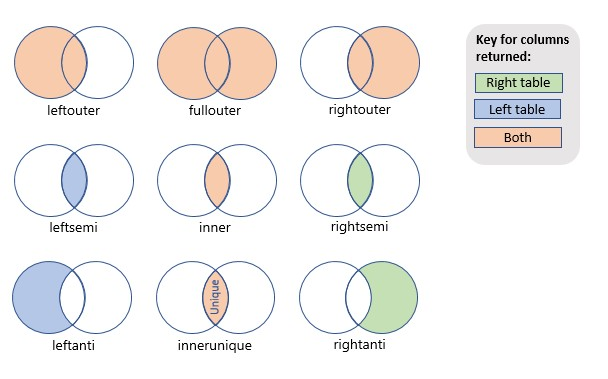

- 15.1.1: join flavors

- 15.1.1.1: fullouter join

- 15.1.1.2: inner join

- 15.1.1.3: innerunique join

- 15.1.1.4: leftanti join

- 15.1.1.5: leftouter join

- 15.1.1.6: leftsemi join

- 15.1.1.7: rightanti join

- 15.1.1.8: rightouter join

- 15.1.1.9: rightsemi join

- 15.1.2: Broadcast join

- 15.1.3: Cross-cluster join

- 15.1.4: join operator

- 15.1.5: Joining within time window

- 15.2: Render operator

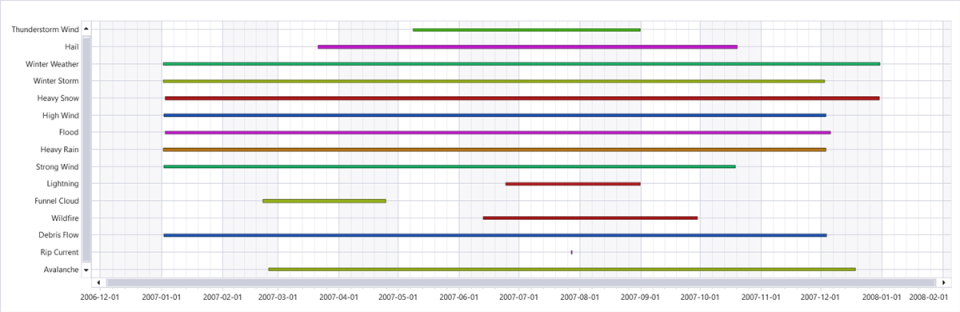

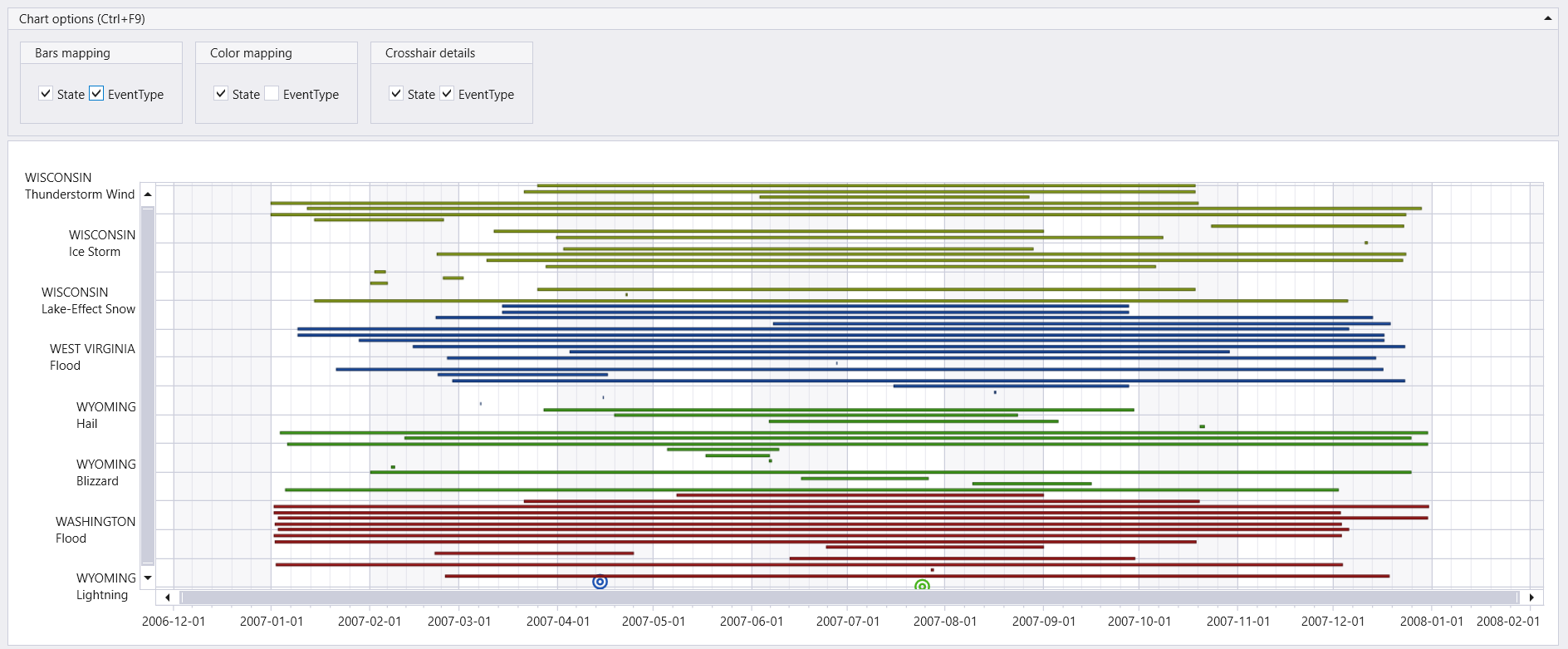

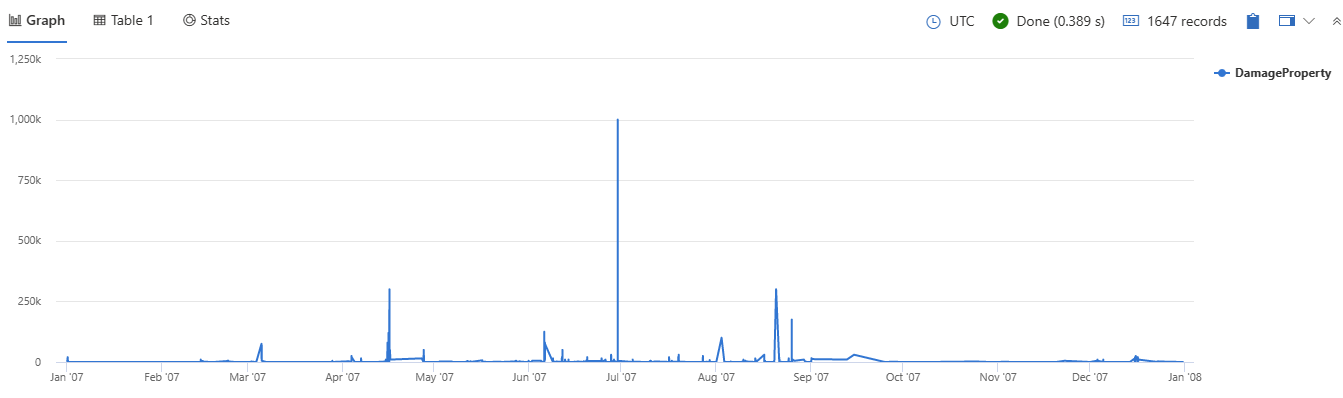

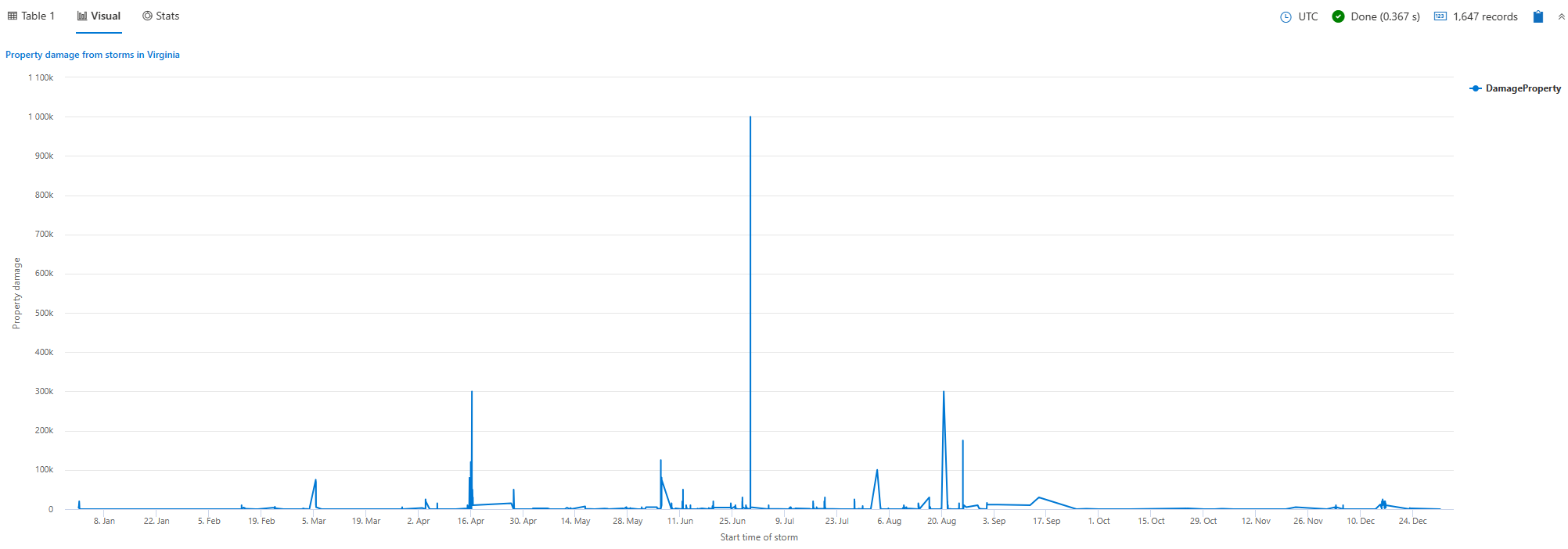

- 15.2.1: visualizations

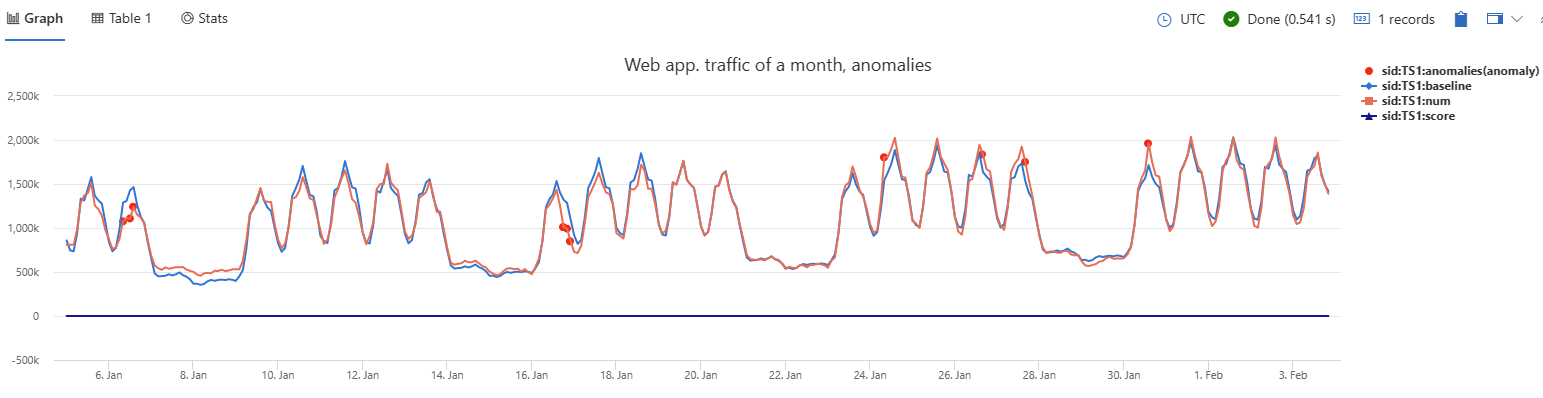

- 15.2.1.1: Anomaly chart visualization

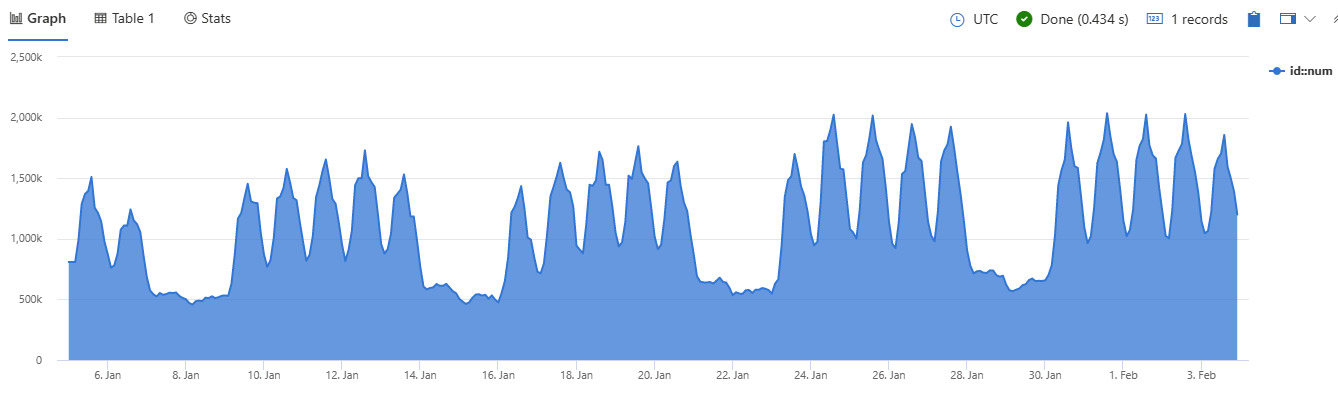

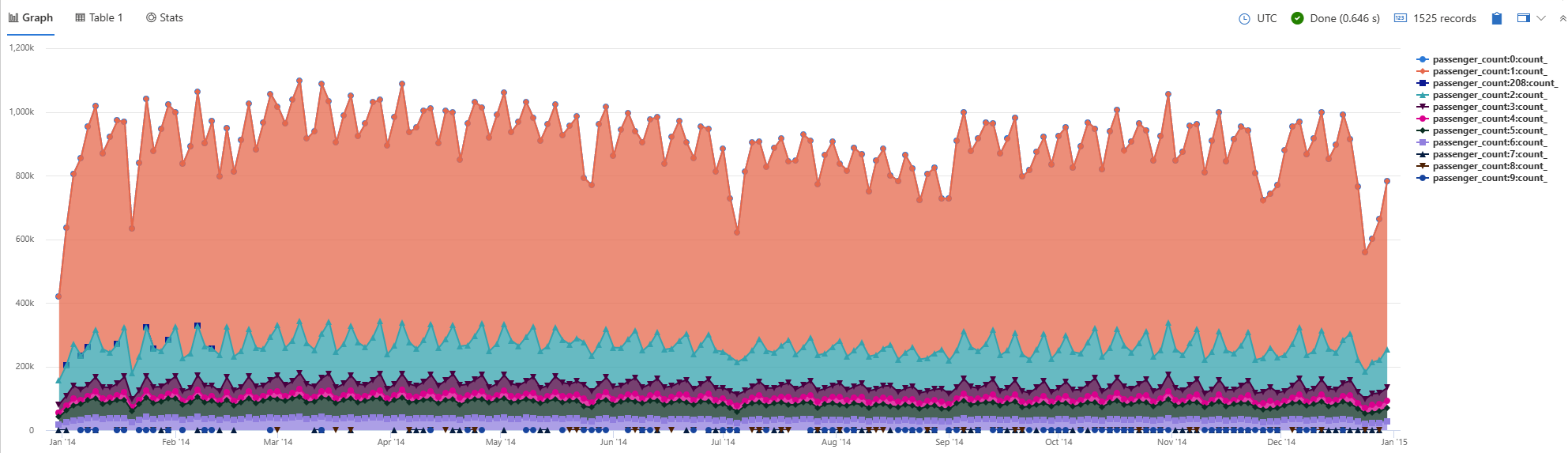

- 15.2.1.2: Area chart visualization

- 15.2.1.3: Bar chart visualization

- 15.2.1.4: Card visualization

- 15.2.1.5: Column chart visualization

- 15.2.1.6: Ladder chart visualization

- 15.2.1.7: Line chart visualization

- 15.2.1.8: Pie chart visualization

- 15.2.1.9: Pivot chart visualization

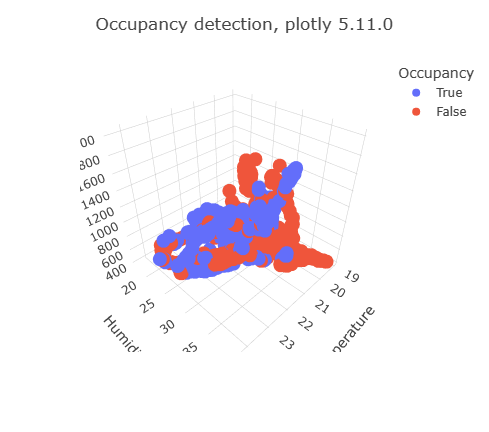

- 15.2.1.10: Plotly visualization

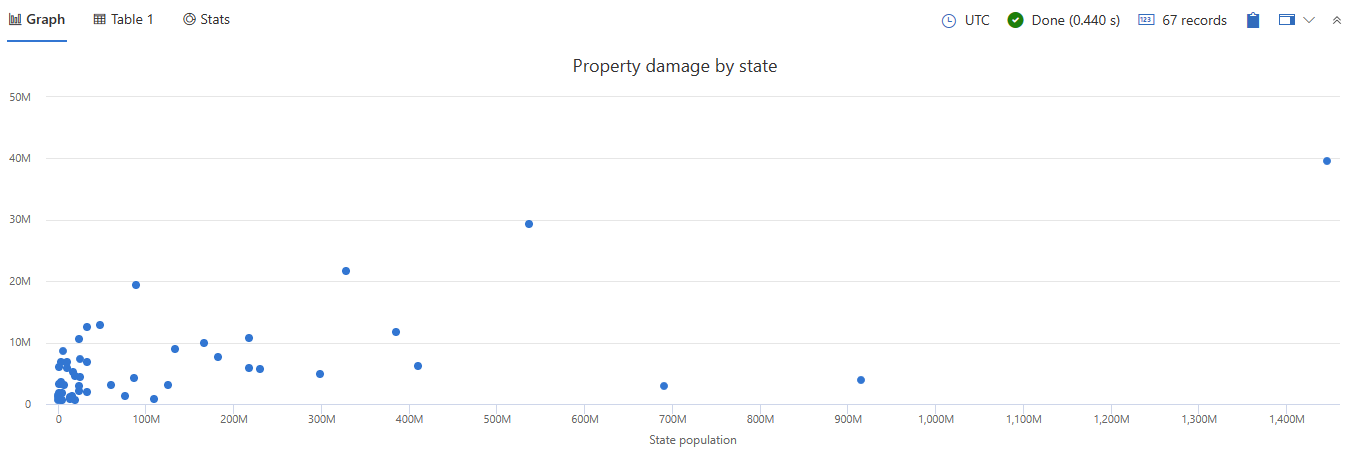

- 15.2.1.11: Scatter chart visualization

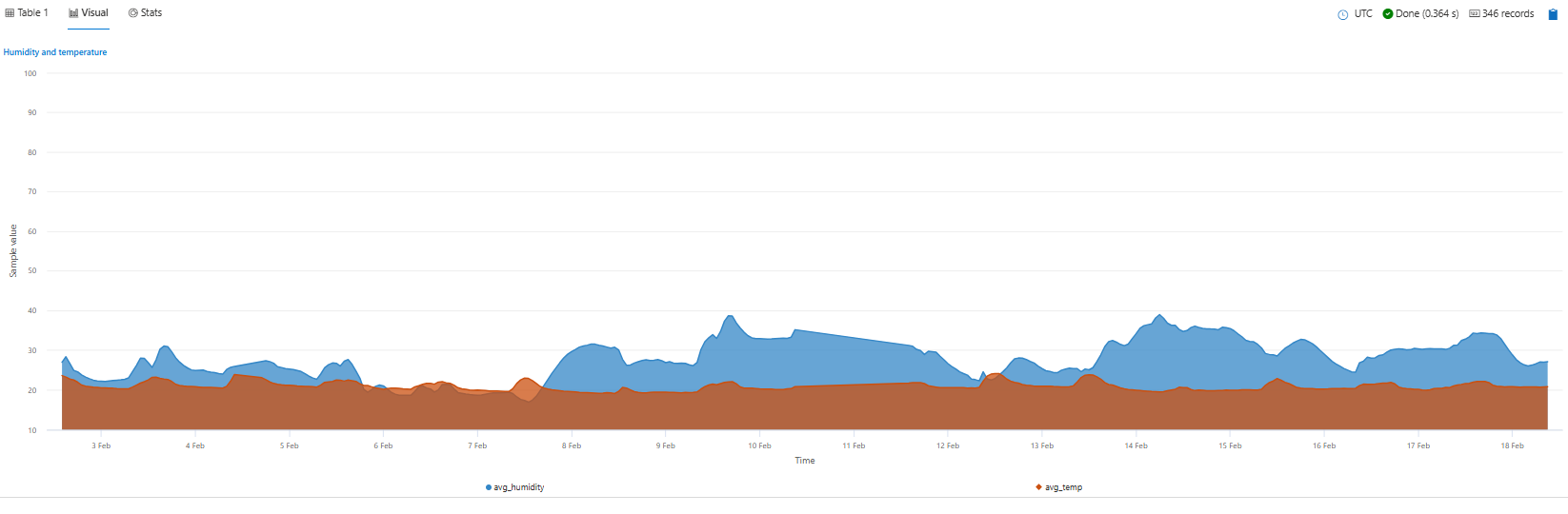

- 15.2.1.12: Stacked area chart visualization

- 15.2.1.13: Table visualization

- 15.2.1.14: Time chart visualization

- 15.2.1.15: Time pivot visualization

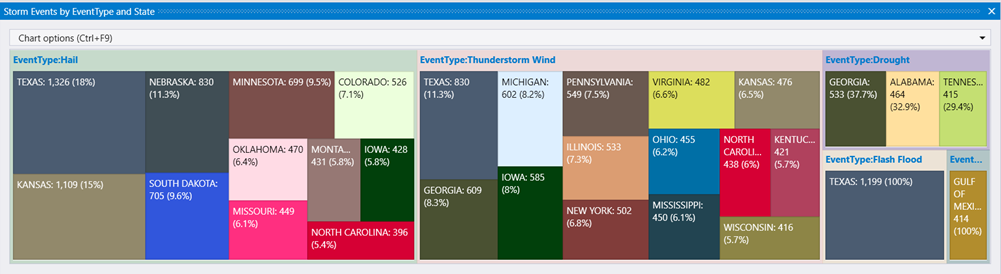

- 15.2.1.16: Treemap visualization

- 15.2.2: render operator

- 15.3: Summarize operator

- 15.4: macro-expand operator

- 15.5: project-by-names operator

- 15.6: as operator

- 15.7: consume operator

- 15.8: count operator

- 15.9: datatable operator

- 15.10: distinct operator

- 15.11: evaluate plugin operator

- 15.12: extend operator

- 15.13: externaldata operator

- 15.14: facet operator

- 15.15: find operator

- 15.16: fork operator

- 15.17: getschema operator

- 15.18: invoke operator

- 15.19: lookup operator

- 15.20: mv-apply operator

- 15.21: mv-expand operator

- 15.22: parse operator

- 15.23: parse-kv operator

- 15.24: parse-where operator

- 15.25: partition operator

- 15.26: print operator

- 15.27: Project operator

- 15.28: project-away operator

- 15.29: project-keep operator

- 15.30: project-rename operator

- 15.31: project-reorder operator

- 15.32: Queries

- 15.33: range operator

- 15.34: reduce operator

- 15.35: sample operator

- 15.36: sample-distinct operator

- 15.37: scan operator

- 15.38: search operator

- 15.39: serialize operator

- 15.40: Shuffle query

- 15.41: sort operator

- 15.42: take operator

- 15.43: top operator

- 15.44: top-hitters operator

- 15.45: top-nested operator

- 15.46: union operator

- 15.47: where operator

- 16: Time series analysis

- 16.1: Example use cases

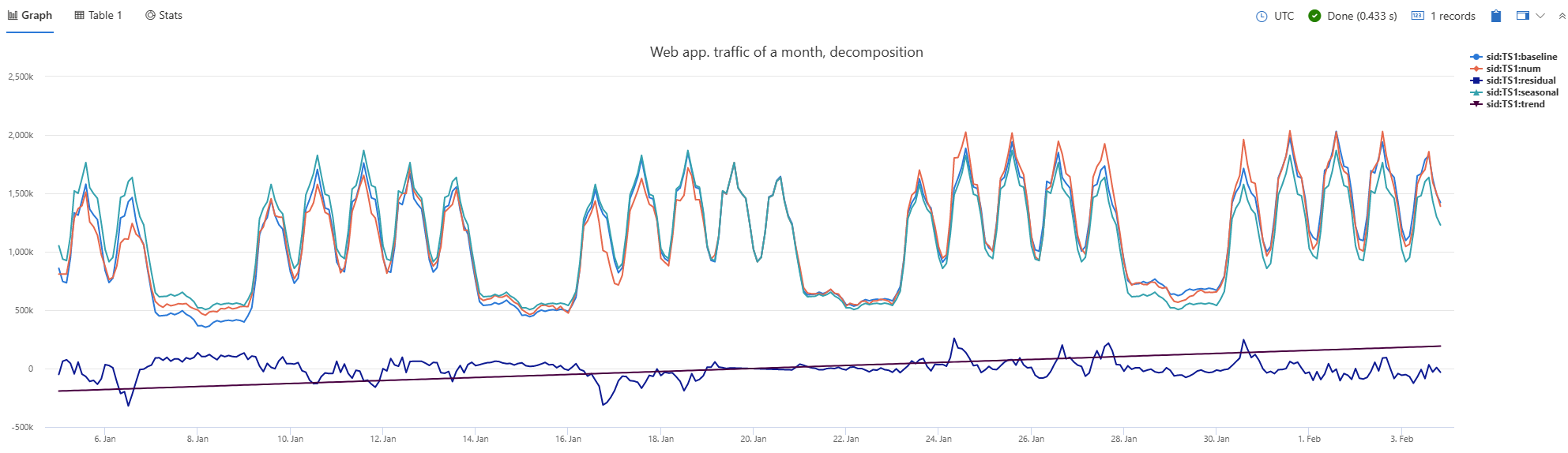

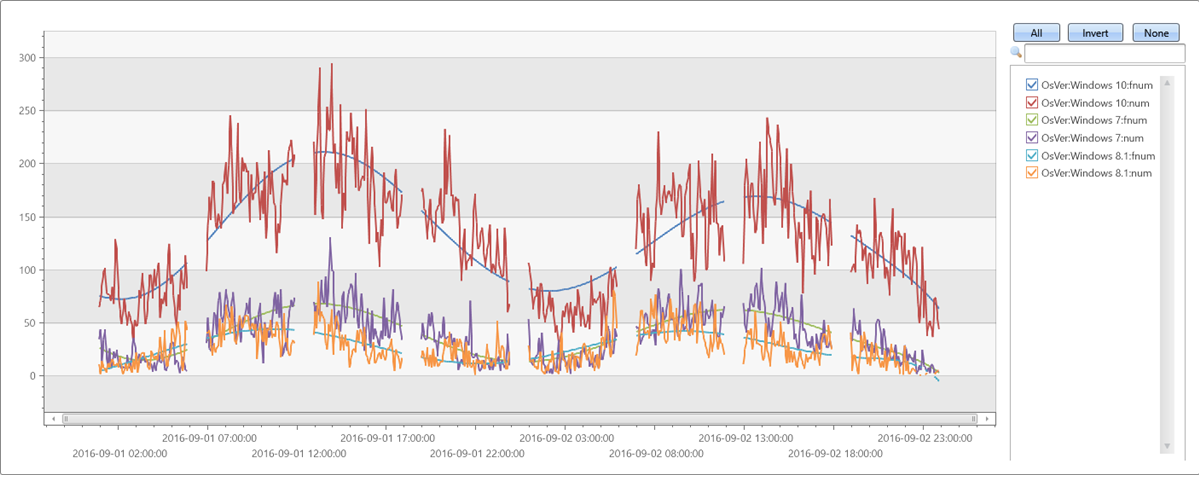

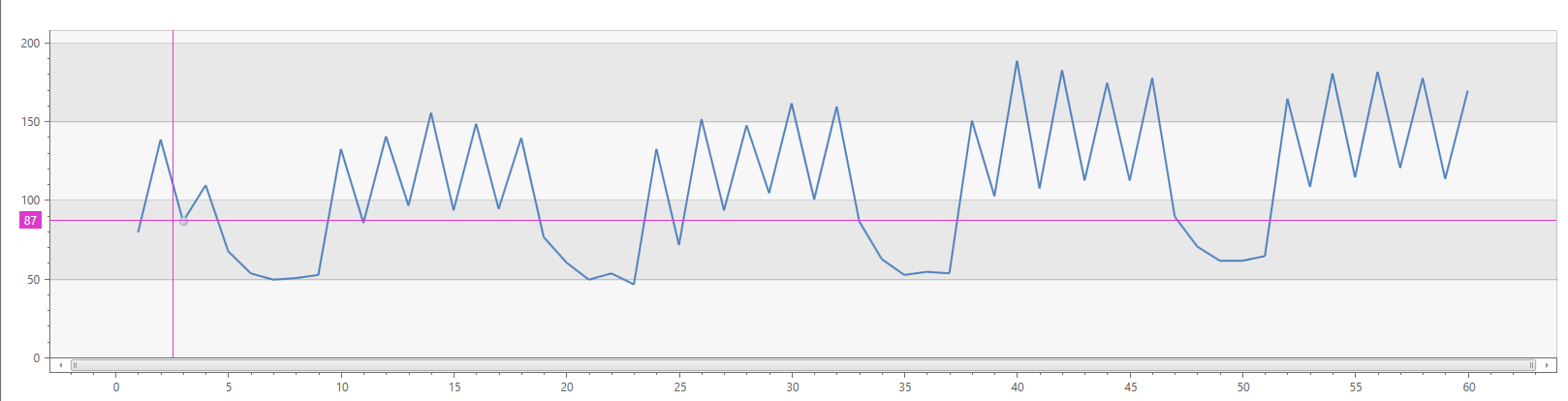

- 16.1.1: Analyze time series data

- 16.1.2: Anomaly diagnosis for root cause analysis

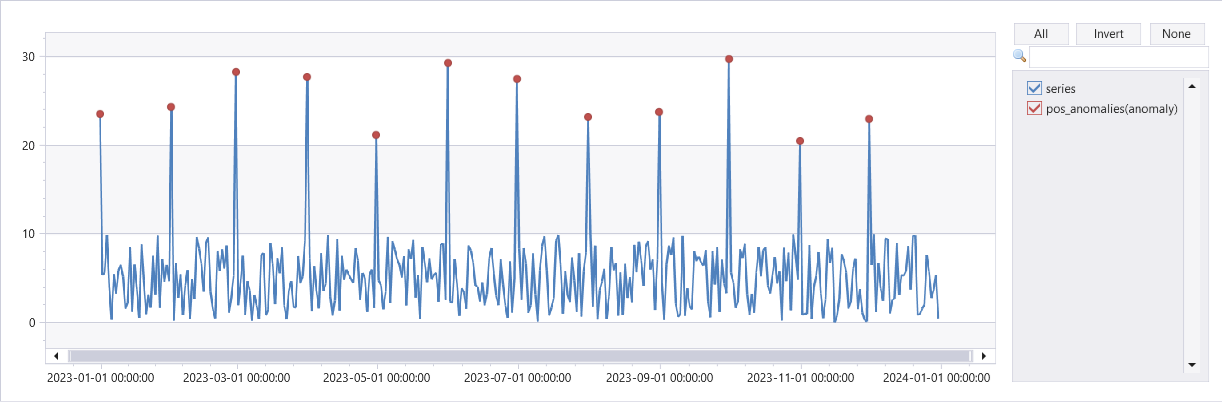

- 16.1.3: Time series anomaly detection & forecasting

- 16.2: series_product()

- 16.3: make-series operator

- 16.4: series_abs()

- 16.5: series_acos()

- 16.6: series_add()

- 16.7: series_atan()

- 16.8: series_cos()

- 16.9: series_cosine_similarity()

- 16.10: series_decompose_anomalies()

- 16.11: series_decompose_forecast()

- 16.12: series_decompose()

- 16.13: series_divide()

- 16.14: series_dot_product()

- 16.15: series_equals()

- 16.16: series_exp()

- 16.17: series_fft()

- 16.18: series_fill_backward()

- 16.19: series_fill_const()

- 16.20: series_fill_forward()

- 16.21: series_fill_linear()

- 16.22: series_fir()

- 16.23: series_fit_2lines_dynamic()

- 16.24: series_fit_2lines()

- 16.25: series_fit_line_dynamic()

- 16.26: series_fit_line()

- 16.27: series_fit_poly()

- 16.28: series_floor()

- 16.29: series_greater_equals()

- 16.30: series_greater()

- 16.31: series_ifft()

- 16.32: series_iir()

- 16.33: series_less_equals()

- 16.34: series_less()

- 16.35: series_log()

- 16.36: series_magnitude()

- 16.37: series_multiply()

- 16.38: series_not_equals()

- 16.39: series_outliers()

- 16.40: series_pearson_correlation()

- 16.41: series_periods_detect()

- 16.42: series_periods_validate()

- 16.43: series_seasonal()

- 16.44: series_sign()

- 16.45: series_sin()

- 16.46: series_stats_dynamic()

- 16.47: series_stats()

- 16.48: series_subtract()

- 16.49: series_sum()

- 16.50: series_tan()

- 16.51: series_asin()

- 16.52: series_ceiling()

- 16.53: series_pow()

- 17: Window functions

- 17.1: next()

- 17.2: prev()

- 17.3: row_cumsum()

- 17.4: row_number()

- 17.5: row_rank_dense()

- 17.6: row_rank_min()

- 17.7: row_window_session()

- 17.8: Window functions

- 18: Add a comment in KQL

- 19: Database cursors

- 20: Graph

- 20.1: Best practices for Kusto Query Language (KQL) graph semantics

- 20.2: Functions

- 20.2.1: all() (graph function)

- 20.2.2: any() (graph function)

- 20.2.3: inner_nodes() (graph function)

- 20.2.4: labels() (graph function)

- 20.2.5: map() (graph function)

- 20.2.6: node_degree_in (graph function)

- 20.2.7: node_degree_out (graph function)

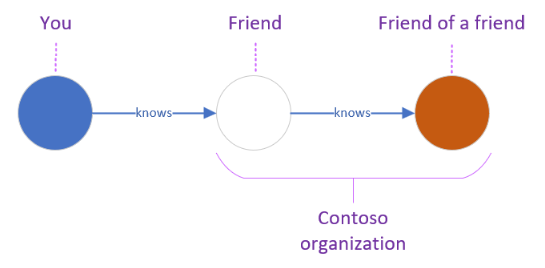

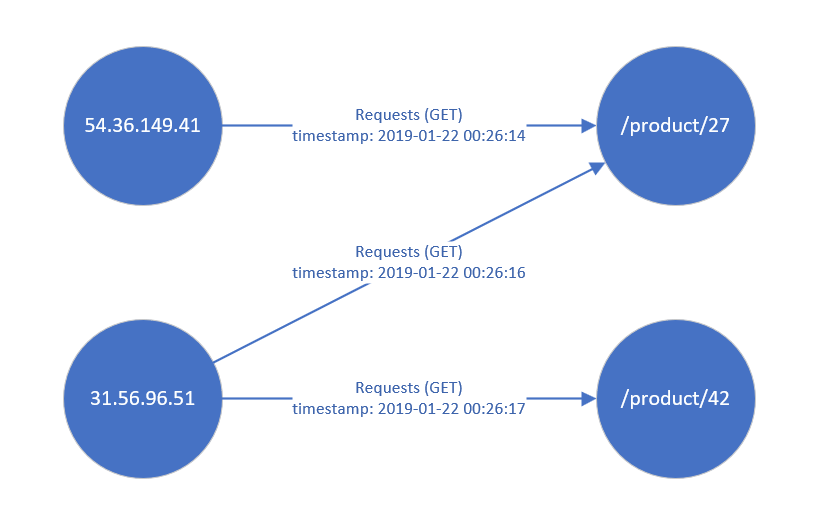

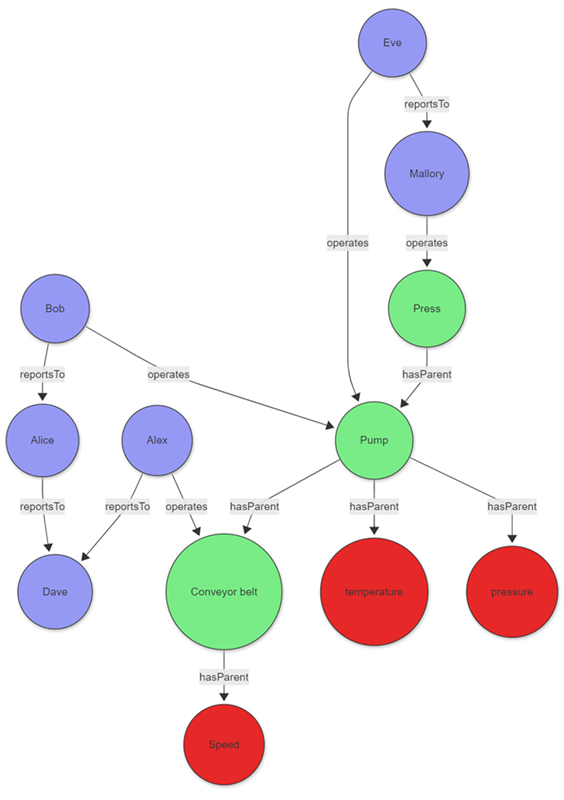

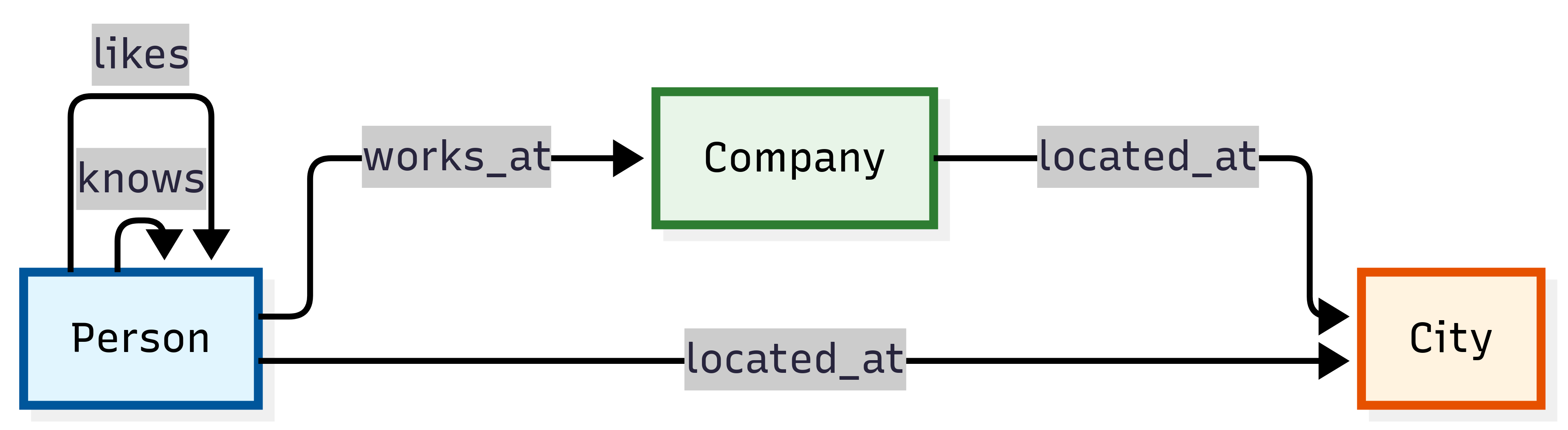

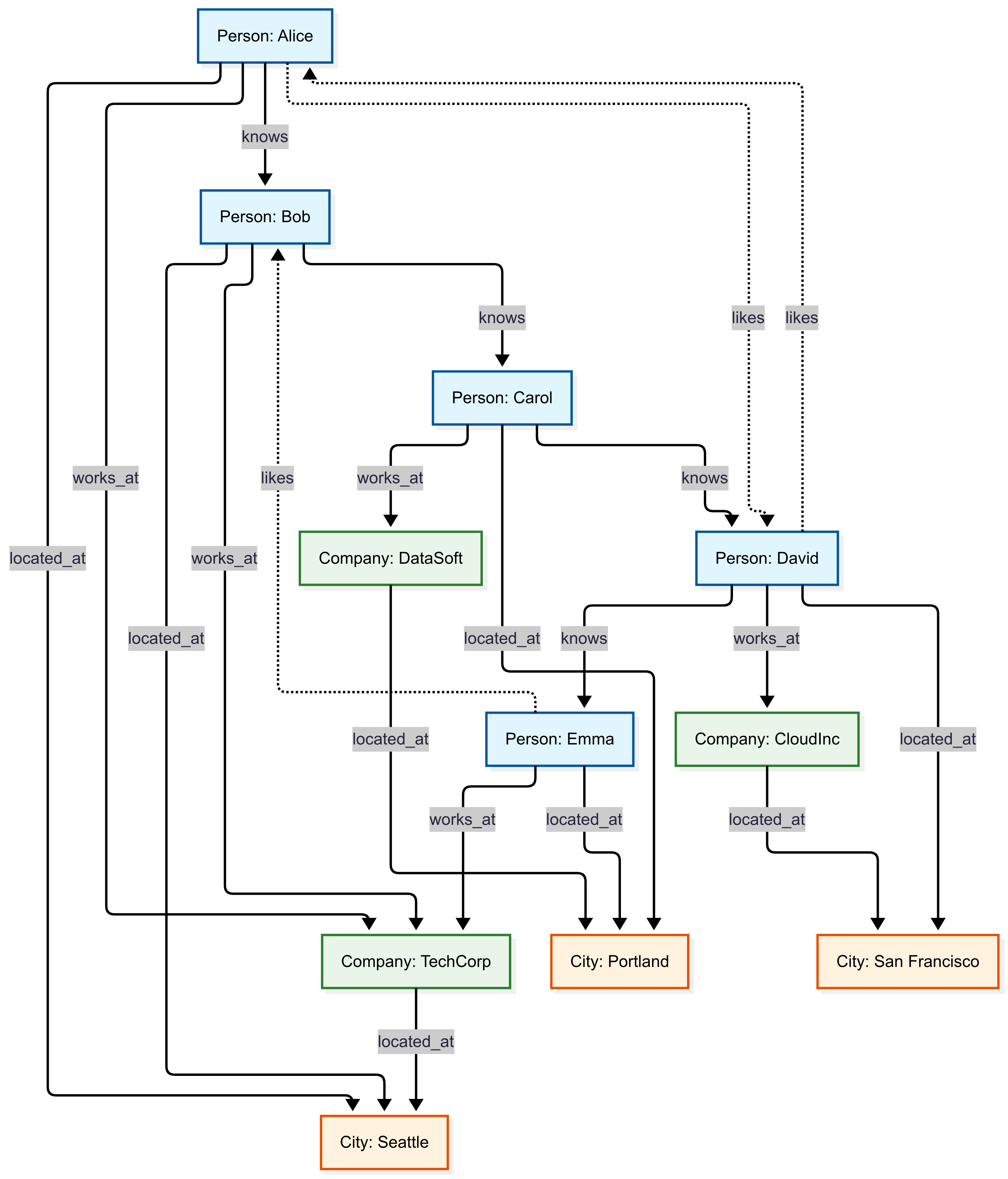

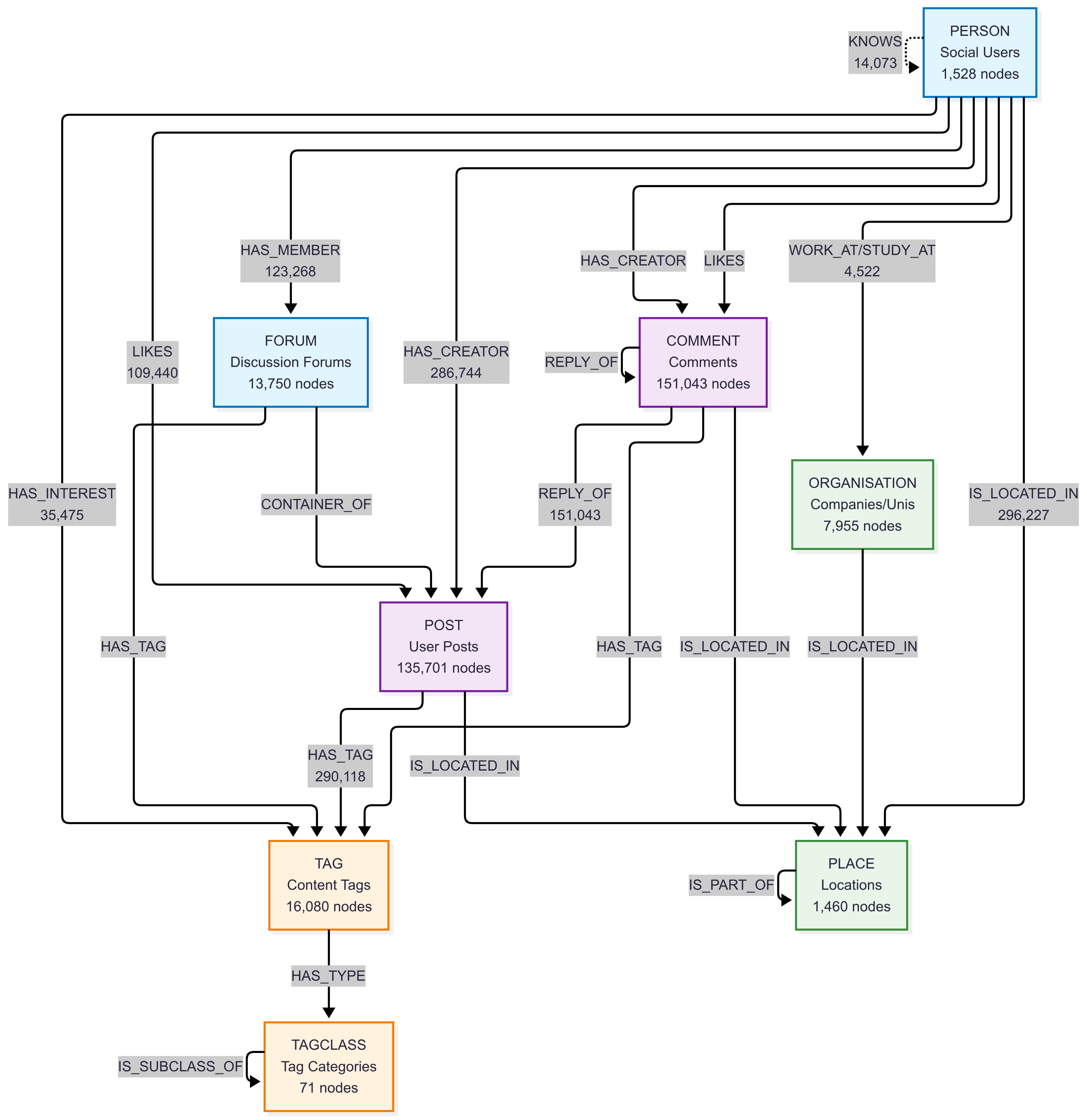

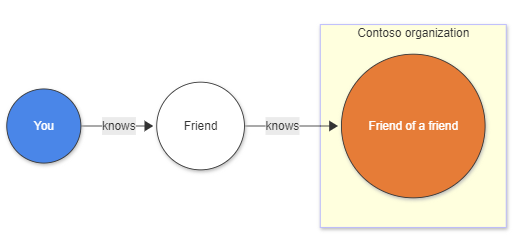

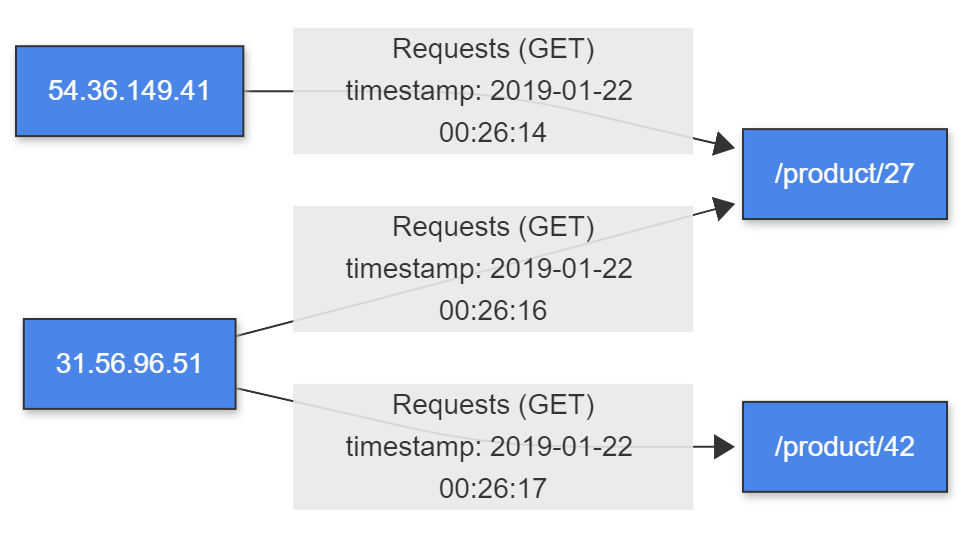

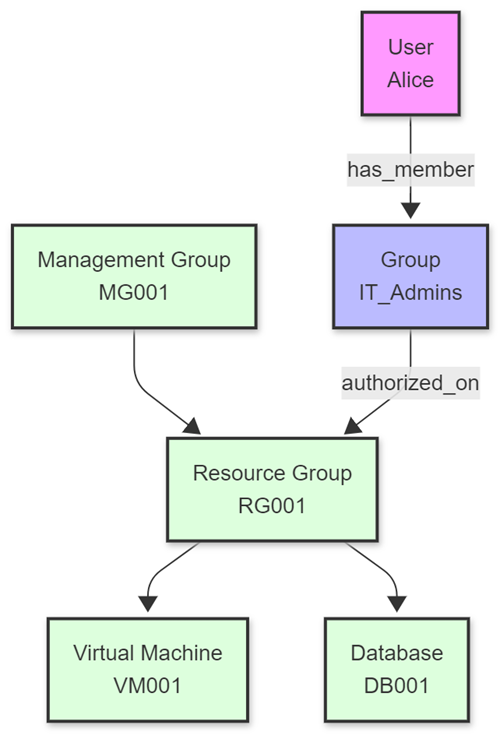

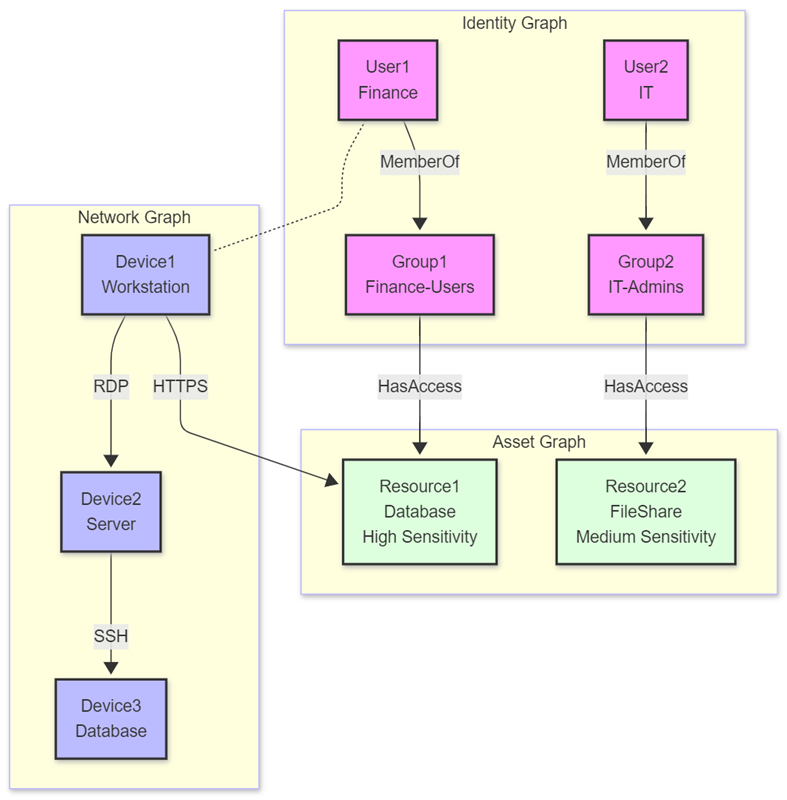

- 20.3: Graph exploration basics

- 20.4: Graph sample datasets and examples

- 20.5: Graph semantics overview

- 20.6: Operators

- 20.6.1: Graph operators

- 20.6.2: graph-mark-components operator (preview)

- 20.6.3: graph-match operator

- 20.6.4: graph-shortest-paths Operator (preview)

- 20.6.5: graph-to-table operator

- 20.6.6: make-graph operator

- 20.7: Scenarios for using Kusto Query Language (KQL) graph semantics

- 21: Add a comment in KQL

- 22: Debug Kusto Query Language inline Python using Visual Studio Code

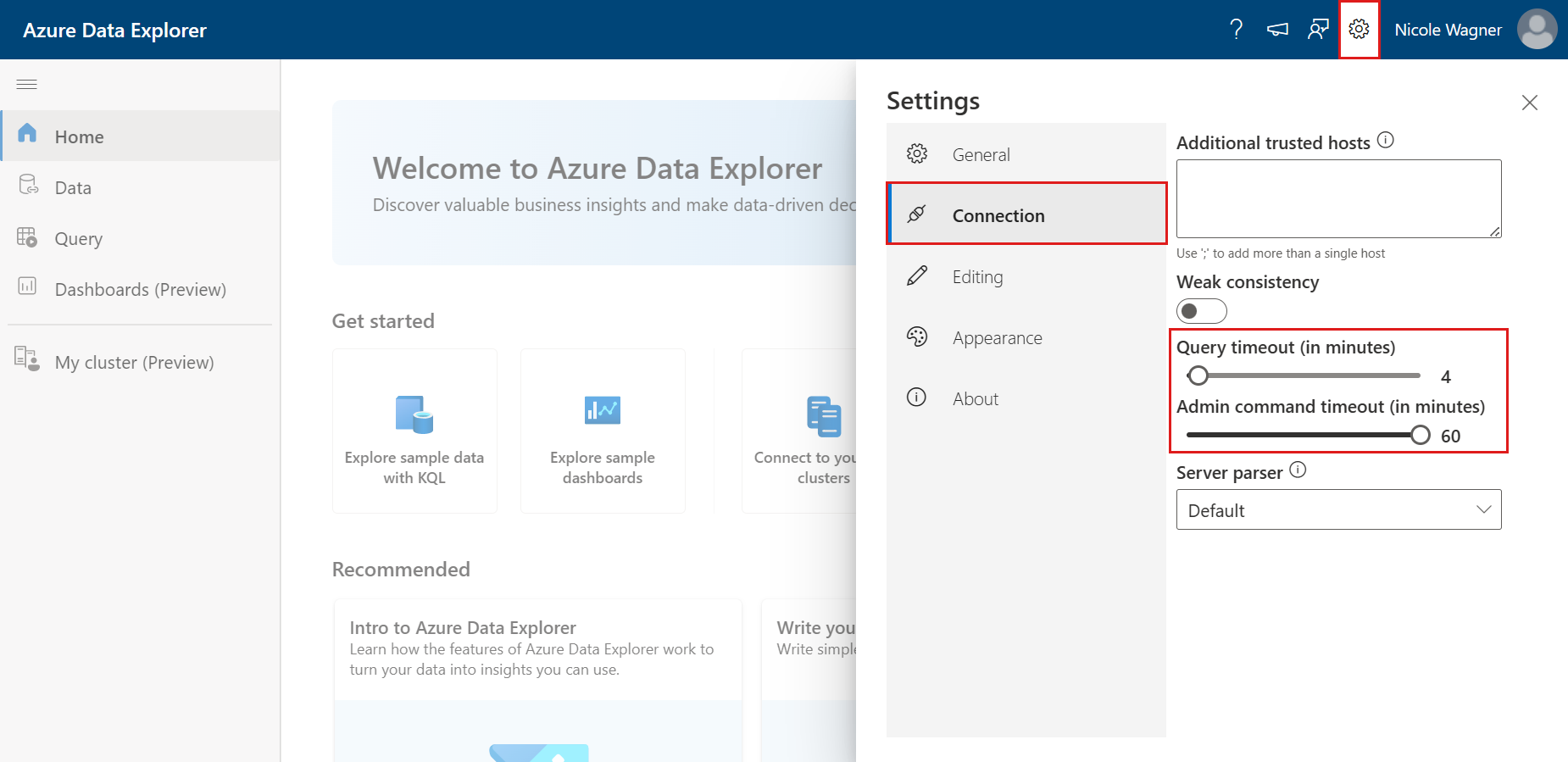

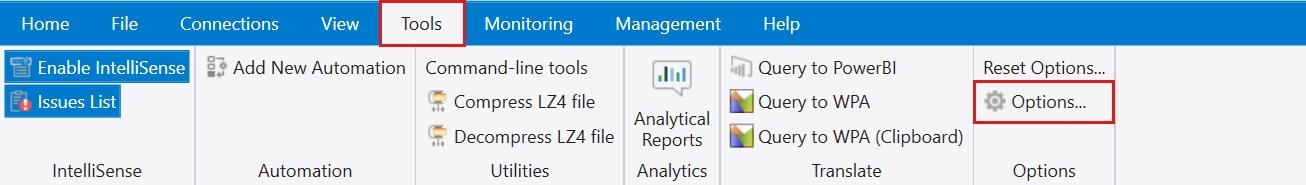

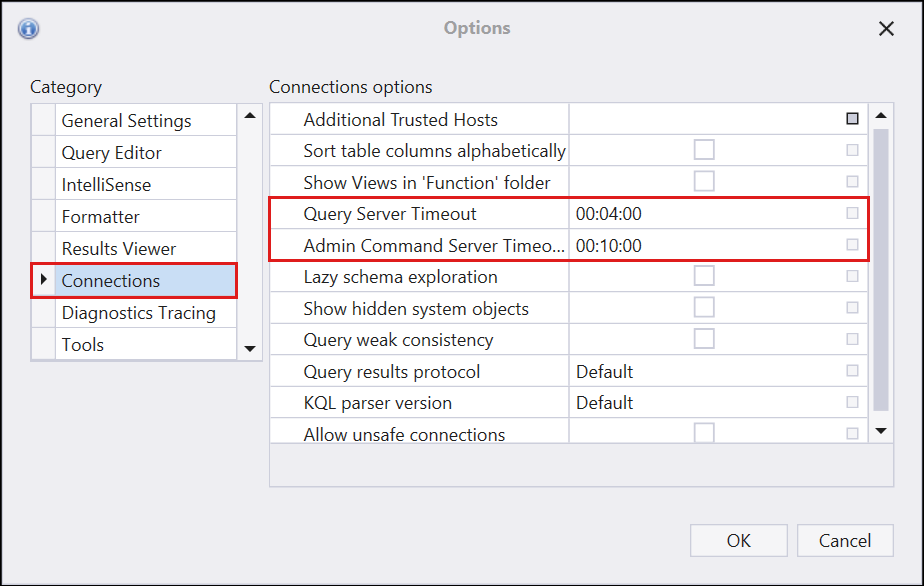

- 23: Set timeouts

- 24: Syntax conventions for reference documentation

- 25: T-SQL

1 - Aggregation functions

1.1 - covariance() (aggregation function)

Calculates the sample covariance of two random variables expr1 and expr2.

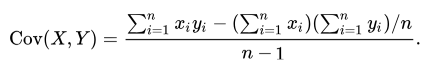

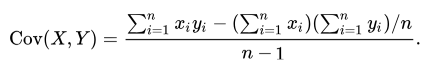

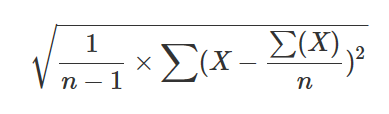

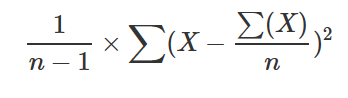

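The following formula is used:

$$\operatorname{cov}(x, y) = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{n - 1}$$

where $x_i$ and $y_i$ are the values of expr1 and expr2 in the $i$-th record, $\bar{x}$ and $\bar{y}$ are their means, and $n$ is the number of records.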

Syntax

covariance(expr1, expr2)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr1 | real | ✔️ | First random variable expression. |

| expr2 | real | ✔️ | Second random variable expression. |

Returns

Returns the sample covariance of expr1 and expr2.

Examples

The example in this section shows how to use the syntax to help you get started.

datatable(x:real, y:real) [

1.0, 14.0,

2.0, 10.0,

3.0, 17.0,

4.0, 20.0,

5.0, 50.0,

]

| summarize covariance(x, y)

Output

| covariance_x_y |

|---|

| 20.5 |
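To make the arithmetic behind this result concrete, the following Python sketch recomputes the sample covariance of the example data by hand. It is an illustration only and does not describe how the query engine evaluates the aggregate. The helper name `sample_covariance` is hypothetical, and the sketch assumes the usual n - 1 denominator, which is consistent with the 20.5 shown in the output.

```python
def sample_covariance(xs, ys):
    """Sample covariance: sum of products of deviations, divided by n - 1."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long sequences with at least 2 values")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    total = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return total / (n - 1)


# Same five (x, y) rows as the datatable in the example above.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [14.0, 10.0, 17.0, 20.0, 50.0]
print(round(sample_covariance(xs, ys), 6))  # 20.5
```

The means are 3 and 22.2, the products of deviations sum to 82, and 82 / 4 gives 20.5.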

1.2 - covarianceif() (aggregation function)

Calculates the sample covariance of two random variables expr1 and expr2 in records for which predicate evaluates to true.

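The following formula is used, with the sums taken over the $n$ records for which predicate evaluates to true:

$$\operatorname{cov}(x, y) = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{n - 1}$$

where $x_i$ and $y_i$ are the values of expr1 and expr2 in the $i$-th matching record, and $\bar{x}$ and $\bar{y}$ are their means.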

Syntax

covarianceif(expr1, expr2, predicate)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr1 | real | ✔️ | First random variable expression. |

| expr2 | real | ✔️ | Second random variable expression. |

| predicate | string | ✔️ | If predicate evaluates to true, the values of expr1 and expr2 are included in the covariance calculation. |

Returns

Returns the covariance value of expr1 and expr2 in records for which predicate evaluates to true.

Example

The example in this section shows how to use the syntax to help you get started.

This query calculates the covariance of x and y for the subset of numbers where x is divisible by 3.

range x from 1 to 100 step 1

| extend y = iff(x % 2 == 0, x * 2, x * 3)

| summarize covarianceif(x, y, x % 3 == 0)

Output

| covarianceif_x_y |

|---|

| 2142 |
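The effect of the predicate can be sketched in Python as "filter first, then compute the sample covariance of what is left". The function name `covariance_if` and the choice to return `None` when fewer than two rows match are assumptions made for this illustration, not documented engine behavior.

```python
def covariance_if(rows, predicate):
    """Sample covariance of (x, y) pairs, using only rows where predicate(x, y) is true."""
    kept = [(x, y) for x, y in rows if predicate(x, y)]
    n = len(kept)
    if n < 2:
        return None  # assumption: too few matching rows means no result
    mean_x = sum(x for x, _ in kept) / n
    mean_y = sum(y for _, y in kept) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in kept) / (n - 1)


# Same data as the query: x = 1..100, y = 2x for even x and 3x for odd x.
rows = [(x, x * 2 if x % 2 == 0 else x * 3) for x in range(1, 101)]
result = covariance_if(rows, lambda x, y: x % 3 == 0)
print(round(result, 6))  # 2142.0
```

Only the 33 rows where x is a multiple of 3 contribute, so the divisor is 32.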

1.3 - covariancep() (aggregation function)

Calculates the population covariance of two random variables expr1 and expr2.

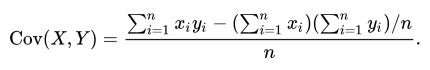

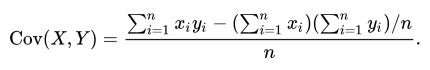

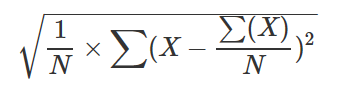

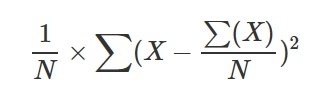

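The following formula is used:

$$\operatorname{cov}_p(x, y) = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{n}$$

where $x_i$ and $y_i$ are the values of expr1 and expr2 in the $i$-th record, $\bar{x}$ and $\bar{y}$ are their means, and $n$ is the number of records.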

Syntax

covariancep(expr1, expr2)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr1 | real | ✔️ | First random variable expression. |

| expr2 | real | ✔️ | Second random variable expression. |

Returns

Returns the population covariance of expr1 and expr2.

Examples

The example in this section shows how to use the syntax to help you get started.

datatable(x:real, y:real) [

1.0, 14.0,

2.0, 10.0,

3.0, 17.0,

4.0, 20.0,

5.0, 50.0,

]

| summarize covariancep(x, y)

Output

| covariancep_x_y |

|---|

| 16.4 |
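The population variant differs from the sample variant only in its divisor. The Python sketch below, with the hypothetical helper `population_covariance`, divides by n and then rescales by n / (n - 1) to show how the two values relate for the same data. It is a hand calculation for illustration, not the engine's implementation.

```python
def population_covariance(xs, ys):
    """Population covariance: sum of products of deviations, divided by n."""
    if len(xs) != len(ys) or not xs:
        raise ValueError("need two equally long, non-empty sequences")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    return sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / n


# Same five (x, y) rows as the datatable in the example above.
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [14.0, 10.0, 17.0, 20.0, 50.0]
n = len(xs)
pop = population_covariance(xs, ys)
# Rescaling by n / (n - 1) recovers the sample covariance of the same data.
sample = pop * n / (n - 1)
print(round(pop, 6), round(sample, 6))  # 16.4 20.5
```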

1.4 - covariancepif() (aggregation function)

Calculates the population covariance of two random variables expr1 and expr2 in records for which predicate evaluates to true.

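The following formula is used, with the sums taken over the $n$ records for which predicate evaluates to true:

$$\operatorname{cov}_p(x, y) = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{n}$$

where $x_i$ and $y_i$ are the values of expr1 and expr2 in the $i$-th matching record, and $\bar{x}$ and $\bar{y}$ are their means.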

Syntax

covariancepif(expr1, expr2, predicate)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr1 | real | ✔️ | First random variable expression. |

| expr2 | real | ✔️ | Second random variable expression. |

| predicate | string | ✔️ | If predicate evaluates to true, the values of expr1 and expr2 are included in the covariance calculation. |

Returns

Returns the population covariance of expr1 and expr2 in records for which predicate evaluates to true.

Example

The example in this section shows how to use the syntax to help you get started.

This query creates a new variable y based on whether x is even or odd, and then calculates the population covariance of x and y for the subset of numbers where x is divisible by 3.

range x from 1 to 100 step 1

| extend y = iff(x % 2 == 0, x * 2, x * 3)

| summarize covariancepif(x, y, x % 3 == 0)

Output

| covariancepif_x_y |

|---|

| 2077.09090909091 |
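As a cross-check of this value, the Python sketch below computes the population covariance of the filtered rows with the shortcut identity E[xy] - E[x]E[y], using exact rational arithmetic. The function name `population_covariance_if` and the `None` result for an empty selection are assumptions for illustration; the engine itself works with floating-point values.

```python
from fractions import Fraction


def population_covariance_if(rows, predicate):
    """Population covariance via E[xy] - E[x]E[y], over rows passing the predicate."""
    kept = [(x, y) for x, y in rows if predicate(x, y)]
    n = len(kept)
    if n == 0:
        return None  # assumption: no matching rows means no result
    sum_x = sum(x for x, _ in kept)
    sum_y = sum(y for _, y in kept)
    sum_xy = sum(x * y for x, y in kept)
    # Exact fractions avoid the floating-point cancellation this shortcut is prone to.
    return Fraction(sum_xy, n) - Fraction(sum_x, n) * Fraction(sum_y, n)


# Same data as the query: x = 1..100, y = 2x for even x and 3x for odd x.
rows = [(x, x * 2 if x % 2 == 0 else x * 3) for x in range(1, 101)]
result = population_covariance_if(rows, lambda x, y: x % 3 == 0)
print(result)  # 22848/11, which is about 2077.0909
```

Because 33 rows match, this value equals the sample covariance of the same rows multiplied by 32 / 33.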

1.5 - variancepif() (aggregation function)

Calculates the population variance of expr in records for which predicate evaluates to true.

Syntax

variancepif(expr, predicate)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | string | ✔️ | The expression to use for the variance calculation. |

| predicate | string | ✔️ | If predicate evaluates to true, the value of expr is included in the variance calculation. |

Returns

Returns the population variance of expr in records for which predicate evaluates to true.

Example

This query generates a sequence of numbers from 1 to 100 and then calculates the population variance of the even numbers within that sequence.

range x from 1 to 100 step 1

| summarize variancepif(x, x%2 == 0)

Output

| variancepif_x |

|---|

| 833 |
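The difference between the population and sample variants for this data can be checked by hand. The Python sketch below uses a hypothetical helper, `variance`, that divides the sum of squared deviations either by n (population) or by n - 1 (sample). It is an illustration of the arithmetic, not the engine's implementation.

```python
def variance(values, population):
    """Variance of values: divide by n for a population, by n - 1 for a sample."""
    n = len(values)
    if n == 0 or (not population and n < 2):
        raise ValueError("not enough values")
    mean = sum(values) / n
    squared_deviations = sum((v - mean) ** 2 for v in values)
    return squared_deviations / (n if population else n - 1)


# The even numbers from 1 to 100, matching the predicate x % 2 == 0.
evens = [x for x in range(1, 101) if x % 2 == 0]
print(variance(evens, population=True))   # 833.0
print(variance(evens, population=False))  # 850.0
```

The 50 even numbers have mean 51 and a sum of squared deviations of 41650, so dividing by 50 gives 833 and dividing by 49 gives 850.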

1.6 - Aggregation Functions

An aggregation function performs a calculation on a set of values, and returns a single value. These functions are used in conjunction with the summarize operator. This article lists all available aggregation functions grouped by type. For scalar functions, see Scalar function types.
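As a rough mental model, an aggregation collapses each group of rows into one value per function. The Python sketch below imitates a query of the form `summarize count(), avg(Damage), max(Damage) by State`. The `summarize` helper, the column names, and the sample rows are all hypothetical and exist only for this illustration; it does not reflect how the engine executes the operator.

```python
from collections import defaultdict


def summarize(rows, key, value):
    """Group rows by `key` and reduce each group's `value` column to single values."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[key]].append(row[value])
    return {
        k: {"count": len(vs), "avg": sum(vs) / len(vs), "max": max(vs)}
        for k, vs in groups.items()
    }


# Hypothetical input rows, made up for this sketch.
rows = [
    {"State": "TX", "Damage": 10},
    {"State": "TX", "Damage": 30},
    {"State": "FL", "Damage": 5},
]
result = summarize(rows, "State", "Damage")
print(result["TX"])  # {'count': 2, 'avg': 20.0, 'max': 30}
```

Each group yields exactly one value per aggregate, regardless of how many rows the group contains.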

Binary functions

| Function Name | Description |

|---|---|

| binary_all_and() | Returns aggregated value using the binary AND of the group. |

| binary_all_or() | Returns aggregated value using the binary OR of the group. |

| binary_all_xor() | Returns aggregated value using the binary XOR of the group. |

Dynamic functions

| Function Name | Description |

|---|---|

| buildschema() | Returns the minimal schema that admits all values of the dynamic input. |

| make_bag(), make_bag_if() | Returns a property bag of dynamic values within the group without/with a predicate. |

| make_list(), make_list_if() | Returns a list of all the values within the group without/with a predicate. |

| make_list_with_nulls() | Returns a list of all the values within the group, including null values. |

| make_set(), make_set_if() | Returns a set of distinct values within the group without/with a predicate. |

Row selector functions

| Function Name | Description |

|---|---|

| arg_max() | Returns one or more expressions when the argument is maximized. |

| arg_min() | Returns one or more expressions when the argument is minimized. |

| take_any(), take_anyif() | Returns a random non-empty value for the group without/with a predicate. |

Statistical functions

| Function Name | Description |

|---|---|

| avg() | Returns an average value across the group. |

| avgif() | Returns an average value across the group (with predicate). |

| count(), countif() | Returns a count of the group without/with a predicate. |

| count_distinct(), count_distinctif() | Returns a count of unique elements in the group without/with a predicate. |

| dcount(), dcountif() | Returns an approximate distinct count of the group elements without/with a predicate. |

| hll() | Returns the HyperLogLog (HLL) results of the group elements, an intermediate value of the dcount approximation. |

| hll_if() | Returns the HyperLogLog (HLL) results of the group elements, an intermediate value of the dcount approximation (with predicate). |

| hll_merge() | Returns a value for merged HLL results. |

| max(), maxif() | Returns the maximum value across the group without/with a predicate. |

| min(), minif() | Returns the minimum value across the group without/with a predicate. |

| percentile() | Returns a percentile estimation of the group. |

| percentiles() | Returns percentile estimations of the group. |

| percentiles_array() | Returns the percentile approximates of the array. |

| percentilesw() | Returns the weighted percentile approximate of the group. |

| percentilesw_array() | Returns the weighted percentile approximate of the array. |

| stdev(), stdevif() | Returns the standard deviation across the group, treating the group as a sample of a larger population, without/with a predicate. |

| stdevp() | Returns the standard deviation across the group, treating the group as the entire population. |

| sum(), sumif() | Returns the sum of the elements within the group without/with a predicate. |

| tdigest() | Returns an intermediate result for the percentiles approximation, the weighted percentile approximate of the group. |

| tdigest_merge() | Returns the merged tdigest value across the group. |

| variance(), varianceif() | Returns the variance across the group without/with a predicate. |

| variancep(), variancepif() | Returns the variance across the group, treating the group as the entire population, without/with a predicate. |

1.7 - arg_max() (aggregation function)

Finds a row in the table that maximizes the specified expression. It returns all columns of the input table or specified columns.

Syntax

arg_max (ExprToMaximize, * | ExprToReturn [, …])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| ExprToMaximize | string | ✔️ | The expression for which the maximum value is determined. |

| ExprToReturn | string | ✔️ | The expression determines which columns’ values are returned, from the row that has the maximum value for ExprToMaximize. Use a wildcard * to return all columns. |

Returns

Returns a row in the table that maximizes the specified expression ExprToMaximize, and the values of columns specified in ExprToReturn.

Examples

General examples

The following example finds the maximum latitude of a storm event in each state.

StormEvents

| summarize arg_max(BeginLat, BeginLocation) by State

Output

The results table displays only the first 10 rows.

| State | BeginLat | BeginLocation |

|---|---|---|

| MISSISSIPPI | 34.97 | BARTON |

| VERMONT | 45 | NORTH TROY |

| AMERICAN SAMOA | -14.2 | OFU |

| HAWAII | 22.2113 | PRINCEVILLE |

| MINNESOTA | 49.35 | ARNESEN |

| RHODE ISLAND | 42 | WOONSOCKET |

| INDIANA | 41.73 | FREMONT |

| WEST VIRGINIA | 40.62 | CHESTER |

| SOUTH CAROLINA | 35.18 | LANDRUM |

| TEXAS | 36.4607 | DARROUZETT |

| … | … | … |

The following example finds the last time an event with a direct death happened in each state, showing all the columns.

The query first filters the events to include only those events where there was at least one direct death. Then the query returns the entire row with the most recent StartTime.

StormEvents

| where DeathsDirect > 0

| summarize arg_max(StartTime, *) by State

Output

The results table displays only the first 10 rows and first three columns.

| State | StartTime | EndTime | … |

|---|---|---|---|

| GUAM | 2007-01-27T11:15:00Z | 2007-01-27T11:30:00Z | … |

| MASSACHUSETTS | 2007-02-03T22:00:00Z | 2007-02-04T10:00:00Z | … |

| AMERICAN SAMOA | 2007-02-17T13:00:00Z | 2007-02-18T11:00:00Z | … |

| IDAHO | 2007-02-17T13:00:00Z | 2007-02-17T15:00:00Z | … |

| DELAWARE | 2007-02-25T13:00:00Z | 2007-02-26T01:00:00Z | … |

| WYOMING | 2007-03-10T17:00:00Z | 2007-03-10T17:00:00Z | … |

| NEW MEXICO | 2007-03-23T18:42:00Z | 2007-03-23T19:06:00Z | … |

| INDIANA | 2007-05-15T14:14:00Z | 2007-05-15T14:14:00Z | … |

| MONTANA | 2007-05-18T14:20:00Z | 2007-05-18T14:20:00Z | … |

| LAKE MICHIGAN | 2007-06-07T13:00:00Z | 2007-06-07T13:00:00Z | … |

| … | … | … | … |

The following example demonstrates null handling.

datatable(Fruit: string, Color: string, Version: int) [

"Apple", "Red", 1,

"Apple", "Green", int(null),

"Banana", "Yellow", int(null),

"Banana", "Green", int(null),

"Pear", "Brown", 1,

"Pear", "Green", 2,

]

| summarize arg_max(Version, *) by Fruit

Output

| Fruit | Version | Color |

|---|---|---|

| Apple | 1 | Red |

| Banana | | Yellow |

| Pear | 2 | Green |

Examples comparing arg_max() and max()

The arg_max() function differs from the max() function. The arg_max() function allows you to return other columns along with the maximum value, and max() only returns the maximum value itself.

The following example uses arg_max() to find the last time an event with a direct death happened across all states, showing all the columns. The query first filters the events to only include events where there was at least one direct death. Then the query returns the entire row with the most recent (maximum) StartTime.

StormEvents

| where DeathsDirect > 0

| summarize arg_max(StartTime, *)

The results table returns all the columns for the row containing the highest value in the expression specified.

| StartTime | EndTime | EpisodeId | EventId | State | EventType | … |

|---|---|---|---|---|---|---|

| 2007-12-31T15:00:00Z | 2007-12-31T15:00:00 | 12688 | 69700 | UTAH | Avalanche | … |

The following example uses the max() function to find the last time an event with a direct death happened across all states, but only returns the maximum value of StartTime.

StormEvents

| where DeathsDirect > 0

| summarize max(StartTime)

The results table returns the maximum value of StartTime, without returning other columns for this record.

| max_StartTime |

|---|

| 2007-12-31T15:00:00Z |


1.8 - arg_min() (aggregation function)

Finds a row in the table that minimizes the specified expression. It returns all columns of the input table or specified columns.

Syntax

arg_min (ExprToMinimize, * | ExprToReturn [, …])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| ExprToMinimize | string | ✔️ | The expression for which the minimum value is determined. |

| ExprToReturn | string | ✔️ | The expression determines which columns’ values are returned, from the row that has the minimum value for ExprToMinimize. Use a wildcard * to return all columns. |

Null handling

When ExprToMinimize is null for all rows in a table, one row in the table is picked. Otherwise, rows where ExprToMinimize is null are ignored.

Returns

Returns a row in the table that minimizes ExprToMinimize, and the values of columns specified in ExprToReturn. Use * to return the entire row.

Examples

The following example finds the minimum latitude of a storm event in each state.

StormEvents

| summarize arg_min(BeginLat, BeginLocation) by State

Output

The results table shown includes only the first 10 rows.

| State | BeginLat | BeginLocation |

|---|---|---|

| AMERICAN SAMOA | -14.3 | PAGO PAGO |

| CALIFORNIA | 32.5709 | NESTOR |

| MINNESOTA | 43.5 | BIGELOW |

| WASHINGTON | 45.58 | WASHOUGAL |

| GEORGIA | 30.67 | FARGO |

| ILLINOIS | 37 | CAIRO |

| FLORIDA | 24.6611 | SUGARLOAF KEY |

| KENTUCKY | 36.5 | HAZEL |

| TEXAS | 25.92 | BROWNSVILLE |

| OHIO | 38.42 | SOUTH PT |

| … | … | … |

Find the first time an event with a direct death happened in each state, showing all of the columns.

The query first filters the events to only include those where there was at least one direct death. Then the query returns the entire row with the lowest value for StartTime.

StormEvents

| where DeathsDirect > 0

| summarize arg_min(StartTime, *) by State

Output

The results table shown includes only the first 10 rows and first 3 columns.

| State | StartTime | EndTime | … |

|---|---|---|---|

| INDIANA | 2007-01-01T00:00:00Z | 2007-01-22T18:49:00Z | … |

| FLORIDA | 2007-01-03T10:55:00Z | 2007-01-03T10:55:00Z | … |

| NEVADA | 2007-01-04T09:00:00Z | 2007-01-05T14:00:00Z | … |

| LOUISIANA | 2007-01-04T15:45:00Z | 2007-01-04T15:52:00Z | … |

| WASHINGTON | 2007-01-09T17:00:00Z | 2007-01-09T18:00:00Z | … |

| CALIFORNIA | 2007-01-11T22:00:00Z | 2007-01-24T10:00:00Z | … |

| OKLAHOMA | 2007-01-12T00:00:00Z | 2007-01-18T23:59:00Z | … |

| MISSOURI | 2007-01-13T03:00:00Z | 2007-01-13T08:30:00Z | … |

| TEXAS | 2007-01-13T10:30:00Z | 2007-01-13T14:30:00Z | … |

| ARKANSAS | 2007-01-14T03:00:00Z | 2007-01-14T03:00:00Z | … |

| … | … | … | … |

The following example demonstrates null handling.

datatable(Fruit: string, Color: string, Version: int) [

"Apple", "Red", 1,

"Apple", "Green", int(null),

"Banana", "Yellow", int(null),

"Banana", "Green", int(null),

"Pear", "Brown", 1,

"Pear", "Green", 2,

]

| summarize arg_min(Version, *) by Fruit

Output

| Fruit | Version | Color |

|---|---|---|

| Apple | 1 | Red |

| Banana | | Yellow |

| Pear | 1 | Brown |
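The null-handling rule described for arg_min() can be mimicked outside Kusto with a short Python sketch. This is only an illustration of the rule, not how the engine is implemented: `arg_min_by_fruit` is a hypothetical helper, `None` stands in for `int(null)`, and picking the first row of an all-null group is an assumption (the documentation only says that one row is picked).

```python
# Rows mirror the datatable above: (Fruit, Color, Version); None stands in for int(null).
rows = [
    ("Apple", "Red", 1),
    ("Apple", "Green", None),
    ("Banana", "Yellow", None),
    ("Banana", "Green", None),
    ("Pear", "Brown", 1),
    ("Pear", "Green", 2),
]


def arg_min_by_fruit(rows):
    """Hypothetical helper: per Fruit, keep the row with the smallest non-null Version."""
    best = {}
    for fruit, color, version in rows:
        current = best.get(fruit)
        if current is None:
            # First row seen for the group (assumed pick when every Version is null).
            best[fruit] = (version, color)
        elif version is not None and (current[0] is None or version < current[0]):
            # A non-null Version replaces a null one or a larger one.
            best[fruit] = (version, color)
    return best


result = arg_min_by_fruit(rows)
for fruit, (version, color) in result.items():
    print(fruit, version, color)
```

Rows with a null Version never displace a row with a non-null Version, so a null only survives in a group where every Version is null.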

Comparison to min()

The arg_min() function differs from the min() function. The arg_min() function allows you to return additional columns along with the minimum value, and min() only returns the minimum value itself.

Examples

The following example uses arg_min() to find the first time an event with a direct death happened across all states, showing all the columns.

StormEvents

| where DeathsDirect > 0

| summarize arg_min(StartTime, *)

The results table returns all the columns for the row containing the lowest value in the expression specified.

| StartTime | EndTime | EpisodeId | EventId | State | EventType | … |

|---|---|---|---|---|---|---|

| 2007-01-01T00:00:00Z | 2007-01-22T18:49:00Z | 2408 | 11929 | INDIANA | Flood | … |

The following example uses the min() function to find the first time an event with a direct death happened across all states, but only returns the minimum value of StartTime.

StormEvents

| where DeathsDirect > 0

| summarize min(StartTime)

The results table returns the lowest value in the specific column only.

| min_StartTime |

|---|

| 2007-01-01T00:00:00Z |


1.9 - avg() (aggregation function)

Calculates the average (arithmetic mean) of expr across the group.

Syntax

avg(expr)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | string | ✔️ | The expression used for aggregation calculation. Records with null values are ignored and not included in the calculation. |

Returns

Returns the average value of expr across the group.

Examples

The following example returns the average crop damage per state.

StormEvents

| summarize AvgDamageToCrops = avg(DamageCrops) by State

The results table shown includes only the first 10 rows.

| State | AvgDamageToCrops |

|---|---|

| TEXAS | 7524.569241 |

| KANSAS | 15366.86671 |

| IOWA | 4332.477535 |

| ILLINOIS | 44568.00198 |

| MISSOURI | 340719.2212 |

| GEORGIA | 490702.5214 |

| MINNESOTA | 2835.991494 |

| WISCONSIN | 17764.37838 |

| NEBRASKA | 21366.36467 |

| NEW YORK | 5.714285714 |

| … | … |


1.10 - avgif() (aggregation function)

Calculates the average of expr in records for which predicate evaluates to true.

Syntax

avgif (expr, predicate)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | string | ✔️ | The expression used for aggregation calculation. Records with null values are ignored and not included in the calculation. |

| predicate | string | ✔️ | The predicate that must evaluate to true for the expr value to be included in the average. |

Returns

Returns the average value of expr in records where predicate evaluates to true.

Examples

The following example calculates the average damage by state in cases where there was any damage.

StormEvents

| summarize Averagedamage=tolong(avg(DamageCrops)), AverageWhenDamage=tolong(avgif(DamageCrops, DamageCrops > 0)) by State

Output

The results table shown includes only the first 10 rows.

| State | Averagedamage | AverageWhenDamage |

|---|---|---|

| TEXAS | 7524 | 491291 |

| KANSAS | 15366 | 695021 |

| IOWA | 4332 | 28203 |

| ILLINOIS | 44568 | 2574757 |

| MISSOURI | 340719 | 8806281 |

| GEORGIA | 490702 | 57239005 |

| MINNESOTA | 2835 | 144175 |

| WISCONSIN | 17764 | 438188 |

| NEBRASKA | 21366 | 187726 |

| NEW YORK | 5 | 10000 |

| … | … | … |
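The difference between the two columns above comes down to which records enter the average. A minimal Python sketch of that rule follows. The `avgif` helper and the sample values are hypothetical (they are not taken from StormEvents), and returning `None` when no record qualifies is an assumption about the empty case.

```python
def avgif(values, predicate):
    """Average of the non-null values for which predicate is true.

    Returns None when nothing qualifies (an assumption for the empty case).
    """
    kept = [v for v in values if v is not None and predicate(v)]
    return sum(kept) / len(kept) if kept else None


# Hypothetical crop-damage values; None stands in for a null record.
damage_crops = [0, 0, 5000, None, 15000]

plain_avg = avgif(damage_crops, lambda v: True)         # like avg(DamageCrops)
avg_when_damage = avgif(damage_crops, lambda v: v > 0)  # like avgif(DamageCrops, DamageCrops > 0)
print(plain_avg, avg_when_damage)
```

Zero-damage records pull the plain average down, while the predicate removes them from both the sum and the count.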


1.11 - binary_all_and() (aggregation function)

Accumulates values using the binary AND operation for each summarization group, or in total if a group isn’t specified.

Syntax

binary_all_and (expr)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | long | ✔️ | The value used for the binary AND calculation. |

Returns

Returns an aggregated value using the binary AND operation over records for each summarization group, or in total if a group isn’t specified.

Examples

The following example produces CAFEF00D using binary AND operations.

datatable(num:long)

[

0xFFFFFFFF,

0xFFFFF00F,

0xCFFFFFFD,

0xFAFEFFFF,

]

| summarize result = toupper(tohex(binary_all_and(num)))

Output

| result |

|---|

| CAFEF00D |
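The same result can be checked by hand or with a few lines of Python. The sketch below folds the four input values with a bitwise AND; it only reproduces the arithmetic and says nothing about how the engine evaluates the aggregation.

```python
from functools import reduce
from operator import and_

# The four values from the datatable above.
nums = [0xFFFFFFFF, 0xFFFFF00F, 0xCFFFFFFD, 0xFAFEFFFF]

# Fold the list with bitwise AND, then format as uppercase hex.
result = format(reduce(and_, nums), "X")
print(result)
```

A bit stays set in the result only if it is set in every input value.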


1.12 - binary_all_or() (aggregation function)

Accumulates values using the binary OR operation for each summarization group, or in total if a group isn’t specified.

Syntax

binary_all_or (expr)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | long | ✔️ | The value used for the binary OR calculation. |

Returns

Returns an aggregated value using the binary OR operation over records for each summarization group, or in total if a group isn’t specified.

Examples

The following example produces CAFEF00D using binary OR operations.

datatable(num:long)

[

0x88888008,

0x42000000,

0x00767000,

0x00000005,

]

| summarize result = toupper(tohex(binary_all_or(num)))

Output

| result |

|---|

| CAFEF00D |
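As a cross-check of the arithmetic only, the following Python sketch folds the same four values with a bitwise OR.

```python
from functools import reduce
from operator import or_

# The four values from the datatable above.
nums = [0x88888008, 0x42000000, 0x00767000, 0x00000005]

# Fold the list with bitwise OR, then format as uppercase hex.
result = format(reduce(or_, nums), "X")
print(result)
```

A bit is set in the result if it is set in at least one input value.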


1.13 - binary_all_xor() (aggregation function)

Accumulates values using the binary XOR operation for each summarization group, or in total if a group is not specified.

Syntax

binary_all_xor (expr)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | long | ✔️ | The value used for the binary XOR calculation. |

Returns

Returns a value that is aggregated using the binary XOR operation over records for each summarization group, or in total if a group isn’t specified.

Examples

The following example produces CAFEF00D using binary XOR operations.

datatable(num:long)

[

0x44404440,

0x1E1E1E1E,

0x90ABBA09,

0x000B105A,

]

| summarize result = toupper(tohex(binary_all_xor(num)))

Output

| result |

|---|

| CAFEF00D |
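The XOR case is less obvious to verify by eye, so a small Python sketch of the arithmetic may help. It also checks that the order of the rows does not matter, which follows from XOR being commutative and associative.

```python
from functools import reduce
from operator import xor

# The four values from the datatable above.
nums = [0x44404440, 0x1E1E1E1E, 0x90ABBA09, 0x000B105A]

# Fold the list with bitwise XOR, then format as uppercase hex.
result = format(reduce(xor, nums), "X")

# Folding the rows in reverse order gives the same value.
reversed_result = format(reduce(xor, reversed(nums)), "X")
print(result, reversed_result)
```

A bit is set in the result if it is set in an odd number of input values.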


1.14 - buildschema() (aggregation function)

Builds the minimal schema that admits all values of DynamicExpr.

Syntax

buildschema (DynamicExpr)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| DynamicExpr | dynamic | ✔️ | Expression used for the aggregation calculation. |

Returns

Returns the minimal schema that admits all values of DynamicExpr.

Examples

The following example builds a schema based on:

{"x":1, "y":3.5}

{"x":"somevalue", "z":[1, 2, 3]}

{"y":{"w":"zzz"}, "t":["aa", "bb"], "z":["foo"]}

datatable(value: dynamic) [

dynamic({"x":1, "y":3.5}),

dynamic({"x":"somevalue", "z":[1, 2, 3]}),

dynamic({"y":{"w":"zzz"}, "t":["aa", "bb"], "z":["foo"]})

]

| summarize buildschema(value)

Output

| schema_value |

|---|

| {"x":["long","string"],"y":["double",{"w":"string"}],"z":{"indexer":["long","string"]},"t":{"indexer":"string"}} |

Schema breakdown

In the resulting schema:

- The root object is a container with four properties named x, y, z, and t.

- Property x is either type long or type string.

- Property y is either type double or another container with a property w of type string.

- Property z is an array, indicated by the indexer keyword, where each item can be either type long or type string.

- Property t is an array, indicated by the indexer keyword, where each item is a string.

- Every property is implicitly optional, and any array might be empty.


1.15 - count_distinct() (aggregation function) - (preview)

Counts unique values specified by the scalar expression per summary group, or the total number of unique values if the summary group is omitted.

If you only need an estimation of unique values count, we recommend using the less resource-consuming dcount aggregation function.

To count only records for which a predicate returns true, use the count_distinctif aggregation function.

Syntax

count_distinct (expr)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | scalar | ✔️ | The expression whose unique values are to be counted. |

Returns

Long integer value indicating the number of unique values of expr per summary group.

Examples

The following example shows how many types of storm events happened in each state.

Function performance can be degraded when operating on multiple data sources from different clusters.

StormEvents

| summarize UniqueEvents=count_distinct(EventType) by State

| top 5 by UniqueEvents

Output

| State | UniqueEvents |

|---|---|

| TEXAS | 27 |

| CALIFORNIA | 26 |

| PENNSYLVANIA | 25 |

| GEORGIA | 24 |

| NORTH CAROLINA | 23 |


1.16 - count_distinctif() (aggregation function) - (preview)

Conditionally counts unique values specified by the scalar expression per summary group, or the total number of unique values if the summary group is omitted. Only records for which predicate evaluates to true are counted.

If you only need an estimation of unique values count, we recommend using the less resource-consuming dcountif aggregation function.

Syntax

count_distinctif (expr, predicate)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | scalar | ✔️ | The expression whose unique values are to be counted. |

| predicate | string | ✔️ | The expression used to filter records to be aggregated. |

Returns

Integer value indicating the number of unique values of expr per summary group, for all records for which the predicate evaluates to true.

Examples

The following example shows how many types of death-causing storm events happened in each state. Only storm events with a nonzero count of deaths are counted.

StormEvents

| summarize UniqueFatalEvents=count_distinctif(EventType,(DeathsDirect + DeathsIndirect)>0) by State

| where UniqueFatalEvents > 0

| top 5 by UniqueFatalEvents

Output

| State | UniqueFatalEvents |

|---|---|

| TEXAS | 12 |

| CALIFORNIA | 12 |

| OKLAHOMA | 10 |

| NEW YORK | 9 |

| KANSAS | 9 |
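The counting rule can be pictured as building one set of values per group and adding a value only when the predicate holds. The Python sketch below illustrates this with hypothetical rows (they are not taken from StormEvents). It shows the semantics only and makes no claim about the engine's implementation.

```python
from collections import defaultdict

# Hypothetical (State, EventType, total deaths) rows.
events = [
    ("TEXAS", "Flood", 1),
    ("TEXAS", "Flood", 2),      # same type again: counted once
    ("TEXAS", "Tornado", 0),    # predicate false: not counted
    ("KANSAS", "Hail", 0),
    ("KANSAS", "Tornado", 3),
    ("KANSAS", "Flood", 1),
]

distinct_fatal = defaultdict(set)
for state, event_type, deaths in events:
    if deaths > 0:  # plays the role of (DeathsDirect + DeathsIndirect) > 0
        distinct_fatal[state].add(event_type)

unique_fatal_events = {state: len(types) for state, types in distinct_fatal.items()}
print(unique_fatal_events)
```

Because every distinct value is kept in memory, this exact approach costs more than an estimate, which is why dcountif is recommended when an approximation is enough.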


1.17 - count() (aggregation function)

Counts the number of records per summarization group, or total if summarization is done without grouping.

To only count records for which a predicate returns true, use countif().

Syntax

count()

Returns

Returns a count of the records per summarization group, or in total if summarization is done without grouping.

Examples

The following example returns a count of events in states:

StormEvents

| summarize Count=count() by State

Output

| State | Count |

|---|---|

| TEXAS | 4701 |

| KANSAS | 3166 |

| IOWA | 2337 |

| ILLINOIS | 2022 |

| MISSOURI | 2016 |

| GEORGIA | 1983 |

| MINNESOTA | 1881 |

| WISCONSIN | 1850 |

| NEBRASKA | 1766 |

| NEW YORK | 1750 |

| … | … |


1.18 - countif() (aggregation function)

Counts the rows in which predicate evaluates to true.

Syntax

countif (predicate)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| predicate | string | ✔️ | The expression used for aggregation calculation. The value can be any scalar expression with a return type of bool. |

Returns

Returns a count of rows in which predicate evaluates to true.

Examples

Count storms by state

This example shows the number of storms with damage to crops by state.

StormEvents

| summarize TotalCount=count(),TotalWithDamage=countif(DamageCrops >0) by State

The results table shown includes only the first 10 rows.

| State | TotalCount | TotalWithDamage |

|---|---|---|

| TEXAS | 4701 | 72 |

| KANSAS | 3166 | 70 |

| IOWA | 2337 | 359 |

| ILLINOIS | 2022 | 35 |

| MISSOURI | 2016 | 78 |

| GEORGIA | 1983 | 17 |

| MINNESOTA | 1881 | 37 |

| WISCONSIN | 1850 | 75 |

| NEBRASKA | 1766 | 201 |

| NEW YORK | 1750 | 1 |

| … | … | … |

Count based on string length

This example shows the number of names with more than four letters.

let T = datatable(name:string, day_of_birth:long)

[

"John", 9,

"Paul", 18,

"George", 25,

"Ringo", 7

];

T

| summarize countif(strlen(name) > 4)

Output

| countif_ |

|---|

| 2 |
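For readers who want to check the result without a cluster, the same count can be reproduced in plain Python. This is only a sketch of the semantics: each row contributes 1 when the predicate is true and nothing otherwise.

```python
# The names from the datatable above.
names = ["John", "Paul", "George", "Ringo"]

# Count the rows whose name is longer than four characters.
count = sum(1 for name in names if len(name) > 4)
print(count)
```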


1.19 - dcount() (aggregation function)

Calculates an estimate of the number of distinct values that are taken by a scalar expression in the summary group.

Syntax

dcount (expr[, accuracy])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | string | ✔️ | The input whose distinct values are to be counted. |

| accuracy | int | | The value that defines the requested estimation accuracy. The default value is 1. See Estimation accuracy for supported values. |

Returns

Returns an estimate of the number of distinct values of expr in the group.

Examples

The following example shows how many types of storm events happened in each state.

StormEvents

| summarize DifferentEvents=dcount(EventType) by State

| order by DifferentEvents

The results table shown includes only the first 10 rows.

| State | DifferentEvents |

|---|---|

| TEXAS | 27 |

| CALIFORNIA | 26 |

| PENNSYLVANIA | 25 |

| GEORGIA | 24 |

| ILLINOIS | 23 |

| MARYLAND | 23 |

| NORTH CAROLINA | 23 |

| MICHIGAN | 22 |

| FLORIDA | 22 |

| OREGON | 21 |

| KANSAS | 21 |

| … | … |

Estimation accuracy


1.20 - dcountif() (aggregation function)

Estimates the number of distinct values of expr for rows in which predicate evaluates to true.

Syntax

dcountif (expr, predicate [, accuracy])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | string | ✔️ | The expression used for the aggregation calculation. |

| predicate | string | ✔️ | The expression used to filter rows. |

| accuracy | int | | The value that controls the balance between speed and accuracy. If unspecified, the default value is 1. See Estimation accuracy for supported values. |

Returns

Returns an estimate of the number of distinct values of expr for rows in which predicate evaluates to true.

Examples

The following example shows how many types of fatal storm events happened in each state.

StormEvents

| summarize DifferentFatalEvents=dcountif(EventType,(DeathsDirect + DeathsIndirect)>0) by State

| where DifferentFatalEvents > 0

| order by DifferentFatalEvents

The results table shown includes only the first 10 rows.

| State | DifferentFatalEvents |

|---|---|

| CALIFORNIA | 12 |

| TEXAS | 12 |

| OKLAHOMA | 10 |

| ILLINOIS | 9 |

| KANSAS | 9 |

| NEW YORK | 9 |

| NEW JERSEY | 7 |

| WASHINGTON | 7 |

| MICHIGAN | 7 |

| MISSOURI | 7 |

| … | … |

Estimation accuracy


1.21 - hll_if() (aggregation function)

Calculates the intermediate results of dcount in records for which the predicate evaluates to true.

Read about the underlying algorithm (HyperLogLog) and the estimation accuracy.

Syntax

hll_if (expr, predicate [, accuracy])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | string | ✔️ | The expression used for the aggregation calculation. |

| predicate | string | ✔️ | The expression used to filter the records that are added to the intermediate result of dcount. |

| accuracy | int | | The value that controls the balance between speed and accuracy. If unspecified, the default value is 1. For supported values, see Estimation accuracy. |

Returns

Returns the intermediate results of the distinct count of expr in records for which predicate evaluates to true.

Examples

The following query results in the number of unique flood event sources in Iowa and Kansas. It uses the hll_if() function to show only flood events.

StormEvents

| where State in ("IOWA", "KANSAS")

| summarize hll_flood = hll_if(Source, EventType == "Flood") by State

| project State, SourcesOfFloodEvents = dcount_hll(hll_flood)

Output

| State | SourcesOfFloodEvents |

|---|---|

| KANSAS | 11 |

| IOWA | 7 |

Estimation accuracy

| Accuracy | Speed | Error (%) |

|---|---|---|

| 0 | Fastest | 1.6 |

| 1 | Balanced | 0.8 |

| 2 | Slow | 0.4 |

| 3 | Slow | 0.28 |

| 4 | Slowest | 0.2 |
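The error figures in this table are close to the standard HyperLogLog relative error of roughly 1.04 / sqrt(m), where m is the number of registers. The Python sketch below compares the two. The register counts per accuracy level are an assumption made for this illustration and are not stated in the table.

```python
import math

# Assumed register counts per accuracy level (not stated in the table above).
assumed_registers = {0: 2**12, 1: 2**14, 2: 2**16, 3: 2**17, 4: 2**18}

# Error (%) values as listed in the table above.
documented_error_pct = {0: 1.6, 1: 0.8, 2: 0.4, 3: 0.28, 4: 0.2}

# Standard HyperLogLog relative error, expressed as a percentage.
estimated_error_pct = {
    level: 100 * 1.04 / math.sqrt(m) for level, m in assumed_registers.items()
}

for level in sorted(assumed_registers):
    print(level, documented_error_pct[level], estimated_error_pct[level])
```

Under these assumed sizes, each step that halves the error needs about four times as many registers, which is consistent with higher accuracy levels being slower.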


1.22 - hll_merge() (aggregation function)

Merges HLL results across the group into a single HLL value.

For more information, see the underlying algorithm (HyperLogLog) and estimation accuracy.

Syntax

hll_merge (hll)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| hll | string | ✔️ | The column name containing HLL values to merge. |

Returns

The function returns the merged HLL values of hll across the group.

Example

The following example shows HLL results across a group merged into a single HLL value.

StormEvents

| summarize hllRes = hll(DamageProperty) by bin(StartTime,10m)

| summarize hllMerged = hll_merge(hllRes)

Output

The results show only the first five results in the array.

| hllMerged |

|---|

| [[1024,14],["-6903255281122589438","-7413697181929588220","-2396604341988936699","5824198135224880646","-6257421034880415225", …],[]] |
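To show why separately computed HLL values can be merged without losing information, here is a minimal, generic HyperLogLog sketch in Python. It is not Kusto's implementation or serialization format: the register count, the SHA-256 based hash, and the function names `hll_sketch`, `hll_merge`, and `hll_estimate` are all choices made for this illustration.

```python
import hashlib
import math

P = 10          # index bits; 2**P registers (an illustrative choice)
M = 1 << P


def _hash64(value) -> int:
    """Derive a 64-bit hash from the value's string form."""
    digest = hashlib.sha256(str(value).encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def hll_sketch(values):
    """Build the register array: each register keeps the largest rank seen."""
    registers = [0] * M
    for value in values:
        h = _hash64(value)
        index = h >> (64 - P)                     # top P bits pick the register
        rest = h & ((1 << (64 - P)) - 1)          # remaining 54 bits
        rank = (64 - P) - rest.bit_length() + 1   # position of the first 1-bit
        registers[index] = max(registers[index], rank)
    return registers


def hll_merge(sketches):
    """Merge sketches by taking the register-wise maximum."""
    return [max(column) for column in zip(*sketches)]


def hll_estimate(registers):
    """Raw HyperLogLog estimate with the small-range correction."""
    alpha = 0.7213 / (1 + 1.079 / M)
    raw = alpha * M * M / sum(2.0 ** -r for r in registers)
    zeros = registers.count(0)
    if raw <= 2.5 * M and zeros:
        return M * math.log(M / zeros)
    return raw


# Two overlapping partitions of the integers 0..9999.
part_a = hll_sketch(range(0, 6000))
part_b = hll_sketch(range(4000, 10000))
merged = hll_merge([part_a, part_b])
estimate = hll_estimate(merged)
print(round(estimate))
```

Merging by register-wise maximum yields exactly the registers that a single pass over the union would have produced, so values that appear in both partitions are not counted twice.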

Estimation accuracy


1.23 - hll() (aggregation function)

The hll() function is a way to estimate the number of unique values in a set of values. It does so by calculating intermediate results for aggregation within the summarize operator for a group of data using the dcount function.

Read about the underlying algorithm (HyperLogLog) and the estimation accuracy.

Syntax

hll (expr [, accuracy])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | string | ✔️ | The expression used for the aggregation calculation. |

| accuracy | int | | The value that controls the balance between speed and accuracy. If unspecified, the default value is 1. For supported values, see Estimation accuracy. |

Returns

Returns the intermediate results of distinct count of expr across the group.

Example

In the following example, the hll() function is used to estimate the number of unique values of the DamageProperty column within each 10-minute time bin of the StartTime column.

StormEvents

| summarize hll(DamageProperty) by bin(StartTime,10m)

Output

The results table shown includes only the first 10 rows.

| StartTime | hll_DamageProperty |

|---|---|

| 2007-01-01T00:20:00Z | [[1024,14],["3803688792395291579"],[]] |

| 2007-01-01T01:00:00Z | [[1024,14],["7755241107725382121","-5665157283053373866","3803688792395291579","-1003235211361077779"],[]] |

| 2007-01-01T02:00:00Z | [[1024,14],["-1003235211361077779","-5665157283053373866","7755241107725382121"],[]] |

| 2007-01-01T02:20:00Z | [[1024,14],["7755241107725382121"],[]] |

| 2007-01-01T03:30:00Z | [[1024,14],["3803688792395291579"],[]] |

| 2007-01-01T03:40:00Z | [[1024,14],["-5665157283053373866"],[]] |

| 2007-01-01T04:30:00Z | [[1024,14],["3803688792395291579"],[]] |

| 2007-01-01T05:30:00Z | [[1024,14],["3803688792395291579"],[]] |

| 2007-01-01T06:30:00Z | [[1024,14],["1589522558235929902"],[]] |

Estimation accuracy


1.24 - make_bag_if() (aggregation function)

Creates a dynamic JSON property bag (dictionary) of expr values in records for which predicate evaluates to true.

Syntax

make_bag_if(expr, predicate [, maxSize])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | dynamic | ✔️ | The expression used for the aggregation calculation. |

| predicate | bool | ✔️ | The predicate that evaluates to true, in order for expr to be added to the result. |

| maxSize | int | | The limit on the maximum number of elements returned. The default and max value is 1048576. |

Returns

Returns a dynamic JSON property bag (dictionary) of expr values in records for which predicate evaluates to true. Nondictionary values are skipped.

If a key appears in more than one row, an arbitrary value, out of the possible values for this key, is selected.

Example

The following example shows a packed JSON property bag.

let T = datatable(prop:string, value:string, predicate:bool)

[

"prop01", "val_a", true,

"prop02", "val_b", false,

"prop03", "val_c", true

];

T

| extend p = bag_pack(prop, value)

| summarize dict=make_bag_if(p, predicate)

Output

| dict |

|---|

| { "prop01": "val_a", "prop03": "val_c" } |
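The aggregation can be thought of as merging one small dictionary per qualifying row into a single result. The Python sketch below reproduces the example under that reading. It is an illustration only, and it differs from the documented behavior in one respect: when a key repeats, `dict.update` keeps the last value seen, whereas the function selects an arbitrary one.

```python
# Rows mirror the datatable above: (prop, value, predicate).
rows = [
    ("prop01", "val_a", True),
    ("prop02", "val_b", False),
    ("prop03", "val_c", True),
]

bag = {}
for prop, value, predicate in rows:
    packed = {prop: value}      # plays the role of bag_pack(prop, value)
    if predicate:               # only rows whose predicate is true are merged
        bag.update(packed)

print(bag)
```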

Use the bag_unpack() plugin to transform the bag keys in the make_bag_if() output into columns.

let T = datatable(prop:string, value:string, predicate:bool)

[

"prop01", "val_a", true,

"prop02", "val_b", false,

"prop03", "val_c", true

];

T

| extend p = bag_pack(prop, value)

| summarize bag=make_bag_if(p, predicate)

| evaluate bag_unpack(bag)

Output

| prop01 | prop03 |

|---|---|

| val_a | val_c |


1.25 - make_bag() (aggregation function)

Creates a dynamic JSON property bag (dictionary) of all the values of expr in the group.

Syntax

make_bag (expr [, maxSize])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | dynamic | ✔️ | The expression used for the aggregation calculation. |

| maxSize | int | | The limit on the maximum number of elements returned. The default and max value is 1048576. |

Returns

Returns a dynamic JSON property bag (dictionary) of all the values of expr in the group that are property bags. Nondictionary values are skipped.

If a key appears in more than one row, an arbitrary value, out of the possible values for this key, is selected.

Example

The following example shows a packed JSON property bag.

let T = datatable(prop:string, value:string)

[

"prop01", "val_a",

"prop02", "val_b",

"prop03", "val_c",

];

T

| extend p = bag_pack(prop, value)

| summarize dict=make_bag(p)

Output

| dict |

|---|

| { "prop01": "val_a", "prop02": "val_b", "prop03": "val_c" } |

Use the bag_unpack() plugin for transforming the bag keys in the make_bag() output into columns.

let T = datatable(prop:string, value:string)

[

"prop01", "val_a",

"prop02", "val_b",

"prop03", "val_c",

];

T

| extend p = bag_pack(prop, value)

| summarize bag=make_bag(p)

| evaluate bag_unpack(bag)

Output

| prop01 | prop02 | prop03 |

|---|---|---|

| val_a | val_b | val_c |


1.26 - make_list_if() (aggregation function)

Creates a dynamic array of expr values in the group for which predicate evaluates to true.

Syntax

make_list_if(expr, predicate [, maxSize])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | string | ✔️ | The expression used for the aggregation calculation. |

| predicate | string | ✔️ | A predicate that has to evaluate to true in order for expr to be added to the result. |

| maxSize | int | | The maximum number of elements returned. The default and max value is 1048576. |

Returns

Returns a dynamic array of expr values in the group for which predicate evaluates to true.

If the input to the summarize operator isn’t sorted, the order of elements in the resulting array is undefined.

If the input to the summarize operator is sorted, the order of elements in the resulting array tracks that of the input.

Example

The following example shows a list of names with more than 4 letters.

let T = datatable(name:string, day_of_birth:long)

[

"John", 9,

"Paul", 18,

"George", 25,

"Ringo", 7

];

T

| summarize make_list_if(name, strlen(name) > 4)

Output

| list_name |

|---|

| ["George", "Ringo"] |
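
The ordering rule and the optional maxSize argument can be sketched together. The query below reuses the same data, sorts it before summarizing, and passes 10 as an arbitrary illustrative limit; the column name long_names is likewise chosen only for this sketch.

```kusto
let T = datatable(name:string, day_of_birth:long)
[
    "John", 9,
    "Paul", 18,
    "George", 25,
    "Ringo", 7
];
T
// Sort first so that the array order tracks day_of_birth ascending.
| sort by day_of_birth asc
// The third argument caps the array at 10 elements (illustrative value).
| summarize long_names = make_list_if(name, strlen(name) > 4, 10)
```

Because the input is sorted, Ringo (day 7) should come before George (day 25) in the resulting array.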


1.27 - make_list_with_nulls() (aggregation function)

Creates a dynamic array of all the values of expr in the group, including null values.

Syntax

make_list_with_nulls(expr)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | string | ✔️ | The expression to use to create the array. |

Returns

Returns a dynamic JSON object (array) of all the values of expr in the group, including null values.

If the input to the summarize operator isn’t sorted, the order of elements in the resulting array is undefined.

If the input to the summarize operator is sorted, the order of elements in the resulting array tracks that of the input.

Example

The following example shows null values in the results.

let shapes = datatable (name:string , sideCount: int)

[

"triangle", int(null),

"square", 4,

"rectangle", 4,

"pentagon", 5,

"hexagon", 6,

"heptagon", 7,

"octagon", 8,

"nonagon", 9,

"decagon", 10

];

shapes

| summarize mylist = make_list_with_nulls(sideCount)

Output

| mylist |

|---|

| [null, 4, 4, 5, 6, 7, 8, 9, 10] |
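
To see what including null values changes, it can help to run make_list_with_nulls() next to make_list() on the same column. This is a sketch on a shortened, hypothetical version of the shapes table, and it assumes that make_list() skips null values.

```kusto
let shapes = datatable (name:string, sideCount:int)
[
    "triangle", int(null),
    "square", 4,
    "pentagon", 5
];
shapes
| summarize with_nulls = make_list_with_nulls(sideCount),
            without_nulls = make_list(sideCount)
// Compare the lengths of the two arrays.
| extend with_nulls_count = array_length(with_nulls),
         without_nulls_count = array_length(without_nulls)
```

Under that assumption, with_nulls keeps one element per row, while without_nulls has one element fewer.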

1.28 - make_list() (aggregation function)

Creates a dynamic array of all the values of expr in the group.

Syntax

make_list(expr [, maxSize])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | dynamic | ✔️ | The expression used for the aggregation calculation. |

| maxSize | int | | The maximum number of elements returned. The default and max value is 1048576. |

Returns

Returns a dynamic array of all the values of expr in the group.

If the input to the summarize operator isn’t sorted, the order of elements in the resulting array is undefined.

If the input to the summarize operator is sorted, the order of elements in the resulting array tracks that of the input.

Examples

The examples in this section show how to use the syntax to help you get started.

One column

The following example uses the datatable, shapes, to return a list of shapes in a single column.

let shapes = datatable (name: string, sideCount: int)

[

"triangle", 3,

"square", 4,

"rectangle", 4,

"pentagon", 5,

"hexagon", 6,

"heptagon", 7,

"octagon", 8,

"nonagon", 9,

"decagon", 10

];

shapes

| summarize mylist = make_list(name)

Output

| mylist |

|---|

| ["triangle","square","rectangle","pentagon","hexagon","heptagon","octagon","nonagon","decagon"] |

Using the 'by' clause

The following example uses the make_list function and the by clause to create two lists of objects grouped by whether they have an even or odd number of sides.

let shapes = datatable (name: string, sideCount: int)

[

"triangle", 3,

"square", 4,

"rectangle", 4,

"pentagon", 5,

"hexagon", 6,

"heptagon", 7,

"octagon", 8,

"nonagon", 9,

"decagon", 10

];

shapes

| summarize mylist = make_list(name) by isEvenSideCount = sideCount % 2 == 0

Output

| isEvenSideCount | mylist |

|---|---|

| false | ["triangle","pentagon","heptagon","nonagon"] |

| true | ["square","rectangle","hexagon","octagon","decagon"] |

Packing a dynamic object

The following example shows how to pack a dynamic object in a column before making it a list. It returns a boolean column, isEvenSideCount, indicating whether the side count is even or odd, and a mylist column that contains the list of packed bags in each category.

let shapes = datatable (name: string, sideCount: int)

[

"triangle", 3,

"square", 4,

"rectangle", 4,

"pentagon", 5,

"hexagon", 6,

"heptagon", 7,

"octagon", 8,

"nonagon", 9,

"decagon", 10

];

shapes

| extend d = bag_pack("name", name, "sideCount", sideCount)

| summarize mylist = make_list(d) by isEvenSideCount = sideCount % 2 == 0

Output

| isEvenSideCount | mylist |

|---|---|

| false | [{"name":"triangle","sideCount":3},{"name":"pentagon","sideCount":5},{"name":"heptagon","sideCount":7},{"name":"nonagon","sideCount":9}] |

| true | [{"name":"square","sideCount":4},{"name":"rectangle","sideCount":4},{"name":"hexagon","sideCount":6},{"name":"octagon","sideCount":8},{"name":"decagon","sideCount":10}] |
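
The sort-order rule from the Returns section and the optional maxSize argument can be combined. This is a minimal sketch on a shortened, hypothetical version of the shapes table; the limit of 3 and the column name largest are chosen only for illustration.

```kusto
let shapes = datatable (name: string, sideCount: int)
[
    "triangle", 3,
    "square", 4,
    "octagon", 8,
    "nonagon", 9,
    "decagon", 10
];
shapes
// Sorting the input makes the element order in the array well defined.
| sort by sideCount desc, name asc
// The second argument limits the array to at most 3 elements.
| summarize largest = make_list(name, 3)
```

The result should hold at most three names, in the order of the sorted input.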


1.29 - make_set_if() (aggregation function)

Creates a dynamic array of the set of distinct values that expr takes in records for which predicate evaluates to true.

Syntax

make_set_if(expr, predicate [, maxSize])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | string | ✔️ | The expression used for the aggregation calculation. |

| predicate | string | ✔️ | A predicate that has to evaluate to true in order for expr to be added to the result. |

| maxSize | int | | The maximum number of elements returned. The default and max value is 1048576. |

Returns

Returns a dynamic array of the set of distinct values that expr takes in records for which predicate evaluates to true. The array’s sort order is undefined.

Example

The following example shows a list of names with more than four letters.

let T = datatable(name:string, day_of_birth:long)

[

"John", 9,

"Paul", 18,

"George", 25,

"Ringo", 7

];

T

| summarize make_set_if(name, strlen(name) > 4)

Output

| set_name |

|---|

| ["George", "Ringo"] |
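
The names in the example above are already unique, so the deduplication is not visible. The following sketch uses hypothetical data with a repeated city value to show that make_set_if() keeps each distinct value once among the rows that pass the predicate.

```kusto
// Hypothetical sample data: three of the four rows share the same city.
let T = datatable(name:string, city:string)
[
    "John", "Liverpool",
    "George", "Liverpool",
    "Ringo", "Liverpool",
    "Richard", "London"
];
T
// Only rows whose name has more than four letters contribute a city.
| summarize cities = make_set_if(city, strlen(name) > 4)
```

Three rows satisfy the predicate, but the set should contain only two values, Liverpool and London, in an undefined order.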


1.30 - make_set() (aggregation function)

Creates a dynamic array of the set of distinct values that expr takes in the group.

Syntax

make_set(expr [, maxSize])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| expr | string | ✔️ | The expression used for the aggregation calculation. |

| maxSize | int | | The maximum number of elements returned. The default and max value is 1048576. |

Returns

Returns a dynamic array of the set of distinct values that expr takes in the group.

The array’s sort order is undefined.

Examples

Set from a scalar column

The following example shows the set of states grouped with the same amount of crop damage.

StormEvents

| summarize states=make_set(State) by DamageCrops

The results table shown includes only the first 10 rows.

| DamageCrops | states |

|---|---|

| 0 | ["NORTH CAROLINA","WISCONSIN","NEW YORK","ALASKA","DELAWARE","OKLAHOMA","INDIANA","ILLINOIS","MINNESOTA","SOUTH DAKOTA","TEXAS","UTAH","COLORADO","VERMONT","NEW JERSEY","VIRGINIA","CALIFORNIA","PENNSYLVANIA","MONTANA","WASHINGTON","OREGON","HAWAII","IDAHO","PUERTO RICO","MICHIGAN","FLORIDA","WYOMING","GULF OF MEXICO","NEVADA","LOUISIANA","TENNESSEE","KENTUCKY","MISSISSIPPI","ALABAMA","GEORGIA","SOUTH CAROLINA","OHIO","NEW MEXICO","ATLANTIC SOUTH","NEW HAMPSHIRE","ATLANTIC NORTH","NORTH DAKOTA","IOWA","NEBRASKA","WEST VIRGINIA","MARYLAND","KANSAS","MISSOURI","ARKANSAS","ARIZONA","MASSACHUSETTS","MAINE","CONNECTICUT","GUAM","HAWAII WATERS","AMERICAN SAMOA","LAKE HURON","DISTRICT OF COLUMBIA","RHODE ISLAND","LAKE MICHIGAN","LAKE SUPERIOR","LAKE ST CLAIR","LAKE ERIE","LAKE ONTARIO","E PACIFIC","GULF OF ALASKA"] |

| 30000 | ["TEXAS","NEBRASKA","IOWA","MINNESOTA","WISCONSIN"] |

| 4000000 | ["CALIFORNIA","KENTUCKY","NORTH DAKOTA","WISCONSIN","VIRGINIA"] |

| 3000000 | ["CALIFORNIA","ILLINOIS","MISSOURI","SOUTH CAROLINA","NORTH CAROLINA","MISSISSIPPI","NORTH DAKOTA","OHIO"] |

| 14000000 | ["CALIFORNIA","NORTH DAKOTA"] |

| 400000 | ["CALIFORNIA","MISSOURI","MISSISSIPPI","NEBRASKA","WISCONSIN","NORTH DAKOTA"] |

| 50000 | ["CALIFORNIA","GEORGIA","NEBRASKA","TEXAS","WEST VIRGINIA","KANSAS","MISSOURI","MISSISSIPPI","NEW MEXICO","IOWA","NORTH DAKOTA","OHIO","WISCONSIN","ILLINOIS","MINNESOTA","KENTUCKY"] |

| 18000 | ["WASHINGTON","WISCONSIN"] |

| 107900000 | ["CALIFORNIA"] |

| 28900000 | ["CALIFORNIA"] |
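
For readers without access to the StormEvents table, the same grouping can be sketched on a small inline table. The rows below are hypothetical and only mimic the State and DamageCrops columns; make_list() is included alongside make_set() to make the deduplication visible.

```kusto
// Hypothetical sample rows that mimic the State and DamageCrops columns.
datatable(State:string, DamageCrops:long)
[
    "TEXAS", 0,
    "TEXAS", 0,
    "IOWA", 0,
    "IOWA", 30000
]
| summarize states = make_set(State), all_rows = make_list(State) by DamageCrops
// Compare the number of distinct states with the number of rows per group.
| extend distinct_states = array_length(states), row_count = array_length(all_rows)
```

For the group with DamageCrops equal to 0, the set should hold two states while the list holds three entries, because TEXAS appears twice in that group.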

Set from array column

The following example shows the set of elements in an array.

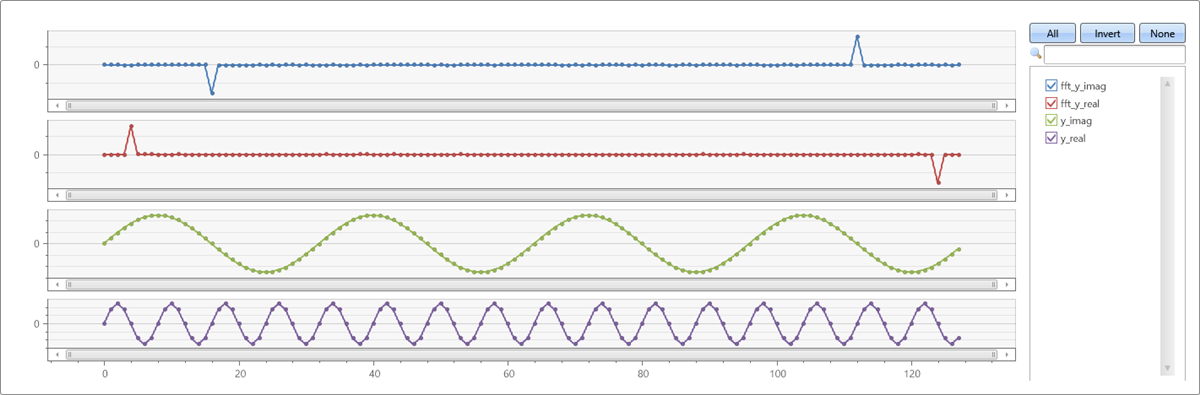

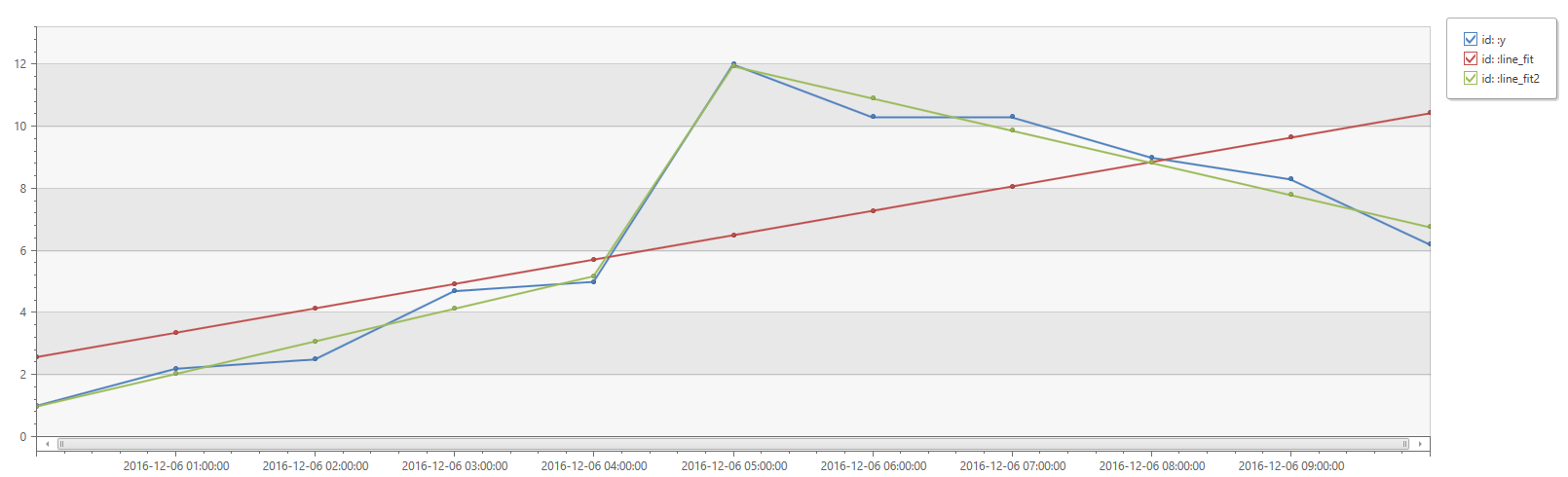

datatable (Val: int, Arr1: dynamic)