
Plugins

- 1: geo_line_lookup plugin

- 2: geo_polygon_lookup plugin

- 3: Data reshaping plugins

- 3.1: bag_unpack plugin

- 3.2: narrow plugin

- 3.3: pivot plugin

- 4: General plugins

- 4.1: dcount_intersect plugin

- 4.2: infer_storage_schema plugin

- 4.3: infer_storage_schema_with_suggestions plugin

- 4.4: ipv4_lookup plugin

- 4.5: ipv6_lookup plugin

- 4.6: preview plugin

- 4.7: schema_merge plugin

- 5: Language plugins

- 5.1: Python plugin

- 5.2: Python plugin packages

- 5.3: R plugin (Preview)

- 6: Machine learning plugins

- 6.1: autocluster plugin

- 6.2: basket plugin

- 6.3: diffpatterns plugin

- 6.4: diffpatterns_text plugin

- 7: Query connectivity plugins

- 7.1: ai_chat_completion plugin (preview)

- 7.2: ai_chat_completion_prompt plugin (preview)

- 7.3: ai_embeddings plugin (preview)

- 7.4: ai_embed_text plugin (Preview)

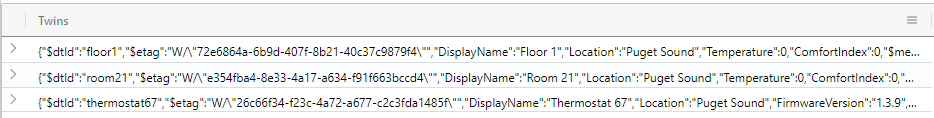

- 7.5: azure_digital_twins_query_request plugin

- 7.6: cosmosdb_sql_request plugin

- 7.7: http_request plugin

- 7.8: http_request_post plugin

- 7.9: mysql_request plugin

- 7.10: postgresql_request plugin

- 7.11: sql_request plugin

- 8: User and sequence analytics plugins

- 8.1: active_users_count plugin

- 8.2: activity_counts_metrics plugin

- 8.3: activity_engagement plugin

- 8.4: activity_metrics plugin

- 8.5: funnel_sequence plugin

- 8.6: funnel_sequence_completion plugin

- 8.7: new_activity_metrics plugin

- 8.8: rolling_percentile plugin

- 8.9: rows_near plugin

- 8.10: sequence_detect plugin

- 8.11: session_count plugin

- 8.12: sliding_window_counts plugin

- 8.13: User Analytics

1 - geo_line_lookup plugin

The geo_line_lookup plugin looks up a Line value in a lookup table and returns rows with matched values. The plugin is invoked with the evaluate operator.

Syntax

T | evaluate geo_line_lookup( LookupTable , LookupLineKey , SourceLongitude , SourceLatitude , Radius , [ return_unmatched ] , [ lookup_area_radius ] , [ return_lookup_key ] )

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The tabular input whose columns SourceLongitude and SourceLatitude are used for line matching. |

| LookupTable | string | ✔️ | Table or tabular expression with lines lookup data, whose column LookupLineKey is used for line matching. |

| LookupLineKey | dynamic | ✔️ | The column of LookupTable with line or multiline in the GeoJSON format and of dynamic type that is matched against each SourceLongitude, SourceLatitude value pair. |

| SourceLongitude | real | ✔️ | The column of T with longitude value to be looked up in LookupTable. Longitude value in degrees. Valid value is a real number and in the range [-180, +180]. |

| SourceLatitude | real | ✔️ | The column of T with latitude value to be looked up in LookupTable. Latitude value in degrees. Valid value is a real number and in the range [-90, +90]. |

| Radius | real | ✔️ | The distance from the line, in meters, within which a source location is considered a match. |

| return_unmatched | bool | | An optional boolean flag that defines whether the result includes all rows or only matching rows (default: false, only matching rows are returned). |

| lookup_area_radius | real | | An optional lookup area radius, in meters, that might help match locations to their respective lines. |

| return_lookup_key | bool | | An optional boolean flag that defines whether the result includes the LookupLineKey column (default: false). |

Returns

The geo_line_lookup plugin returns a result of join (lookup). The schema of the table is the union of the source table and the lookup table, similar to the result of the lookup operator.

Location distance from a line is tested via geo_distance_point_to_line().

If the return_unmatched argument is set to true, the resulting table includes both matched and unmatched rows, with the lookup table columns of unmatched rows filled with nulls.

If the return_unmatched argument is set to false, or omitted (the default value of false is used), the resulting table has as many records as matching results. This variant of lookup has better performance compared to return_unmatched=true execution.
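To make the matching and return_unmatched semantics concrete, here's a minimal Python sketch. It isn't the plugin's implementation. It assumes a flat equirectangular projection to approximate the point-to-line distance in meters, whereas geo_distance_point_to_line() works on the sphere. It also ignores antimeridian crossing. The names `line_lookup` and `point_to_line_m` are hypothetical.

```python
import math

# Mean Earth radius in meters; an assumption, the engine may use a different Earth model.
EARTH_RADIUS_M = 6371008.8
METERS_PER_DEGREE = math.pi / 180 * EARTH_RADIUS_M


def _to_xy(lon, lat, ref_lat):
    """Project (lon, lat) degrees to local planar meters around ref_lat (equirectangular)."""
    return lon * METERS_PER_DEGREE * math.cos(math.radians(ref_lat)), lat * METERS_PER_DEGREE


def point_to_segment_m(p, a, b):
    """Approximate distance in meters from point p to segment a-b; all are (lon, lat)."""
    ref_lat = (p[1] + a[1] + b[1]) / 3
    px, py = _to_xy(p[0], p[1], ref_lat)
    ax, ay = _to_xy(a[0], a[1], ref_lat)
    bx, by = _to_xy(b[0], b[1], ref_lat)
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    # Project p onto the segment and clamp the projection to the segment's endpoints.
    t = 0.0 if seg_len2 == 0 else max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def point_to_line_m(p, coords):
    """Minimum distance from p to any edge of a LineString coordinate list."""
    return min(point_to_segment_m(p, a, b) for a, b in zip(coords, coords[1:]))


def line_lookup(locations, lines, radius_m, return_unmatched=False):
    """Join each location to every line within radius_m; unmatched rows get None if requested."""
    result = []
    for name, lon, lat in locations:
        matches = [line_name for line_name, coords in lines
                   if point_to_line_m((lon, lat), coords) <= radius_m]
        if matches:
            result.extend((name, line_name) for line_name in matches)
        elif return_unmatched:
            result.append((name, None))
    return result


lines = [("Equator segment", [[0.0, 0.0], [1.0, 0.0]])]
locations = [("near", 0.5, 0.0005), ("far", 0.5, 0.01)]
print(line_lookup(locations, lines, 100))
# [('near', 'Equator segment')]
print(line_lookup(locations, lines, 100, return_unmatched=True))
# [('near', 'Equator segment'), ('far', None)]
```

The "near" point is roughly 56 meters from the segment and matches; the "far" point is roughly 1.1 km away, so it only appears, with a None lookup value, when return_unmatched is true.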

Setting the lookup_area_radius length overrides the internal matching mechanism and might improve or worsen run time and/or memory consumption. It doesn’t affect query correctness. Read more below on how to set this optional value.

LineString definition and constraints

dynamic({"type": "LineString","coordinates": [[lng_1,lat_1], [lng_2,lat_2],…, [lng_N,lat_N]]})

dynamic({"type": "MultiLineString","coordinates": [[line_1, line_2, …, line_N]]})

- LineString coordinates array must contain at least two entries.

- Coordinates [longitude, latitude] must be valid where longitude is a real number in the range [-180, +180] and latitude is a real number in the range [-90, +90].

- Edge length must be less than 180 degrees. The shortest edge between the two vertices is chosen.
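The following Python sketch checks the LineString constraints listed above before data is loaded into a lookup table. It's an illustration under assumptions: `validate_linestring` is a hypothetical helper, it covers LineString only (not MultiLineString), and it measures edge length as the great-circle angle computed with the haversine formula.

```python
import math


def central_angle_deg(a, b):
    """Great-circle angle in degrees between two (lon, lat) points, via the haversine formula."""
    lon1, lat1, lon2, lat2 = map(math.radians, (a[0], a[1], b[0], b[1]))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return math.degrees(2 * math.asin(min(1.0, math.sqrt(h))))


def validate_linestring(geo):
    """Return a list of LineString constraint violations (empty if none were found)."""
    if geo.get("type") != "LineString":
        return ["type must be LineString"]
    coords = geo.get("coordinates", [])
    errors = []
    if len(coords) < 2:
        errors.append("at least two coordinates are required")
    for lon, lat in coords:
        if not -180 <= lon <= 180:
            errors.append(f"longitude {lon} is out of range")
        if not -90 <= lat <= 90:
            errors.append(f"latitude {lat} is out of range")
    for a, b in zip(coords, coords[1:]):
        # The shortest arc is used, so only antipodal vertices reach 180 degrees.
        if central_angle_deg(a, b) >= 180:
            errors.append(f"edge {a} -> {b} is not shorter than 180 degrees")
    return errors


fifth_avenue = {"type": "LineString", "coordinates": [[-73.97291864770574, 40.76428551254824],
                                                      [-73.99708638113894, 40.73145135821781]]}
print(validate_linestring(fifth_avenue))  # []
print(validate_linestring({"type": "LineString", "coordinates": [[0, 0]]}))
# ['at least two coordinates are required']
```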

Setting lookup_area_radius (if needed)

Setting the lookup area radius overrides the internal mechanism for matching locations to their respective lines. The value is a distance in meters. Ideally, the lookup area radius represents a distance from a line's center such that a point within that distance matches exactly one line, and no more than one line lies within that distance. Because line data might be large, and lines can vary greatly in size, shape, and proximity to one another, finding the radius that performs best can be challenging. If needed, the following query might help.

LinesTable | project value = geo_line_length(line) | summarize min = min(value), avg = avg(value), max = max(value)

Try a lookup radius that starts at the average value and moves toward either the minimum (if the lines are close to each other) or the maximum, by factors of 2.
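The heuristic above can be expressed as a small Python helper that lists candidate radii to benchmark. It's a sketch only: `candidate_radii` is hypothetical, the min/avg/max inputs are assumed to come from the query above (in meters), and deciding whether the lines are close to each other is left to the caller.

```python
def candidate_radii(min_value, avg_value, max_value, lines_are_close):
    """List lookup_area_radius candidates: start at the average, then halve or double."""
    if min_value <= 0 or avg_value <= 0:
        raise ValueError("min_value and avg_value must be positive")
    candidates = [avg_value]
    radius = avg_value
    if lines_are_close:
        # Move toward the minimum by factors of 2.
        while radius / 2 >= min_value:
            radius /= 2
            candidates.append(radius)
    else:
        # Move toward the maximum by factors of 2.
        while radius * 2 <= max_value:
            radius *= 2
            candidates.append(radius)
    return candidates


print(candidate_radii(100, 1000, 5000, lines_are_close=False))  # [1000, 2000, 4000]
print(candidate_radii(100, 1000, 5000, lines_are_close=True))   # [1000, 500.0, 250.0, 125.0]
```

Each candidate can then be passed as lookup_area_radius and the run time and memory use compared; the query result stays the same either way.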

Examples

The following example returns only matching rows.

let roads = datatable(road_name:string, road:dynamic)

[

"5th Avenue NY", dynamic({"type":"LineString","coordinates":[[-73.97291864770574,40.76428551254824],[-73.99708638113894,40.73145135821781]]}),

"Palace Ave", dynamic({"type":"LineString","coordinates":[[-0.18756982045002246,51.50245944666557],[-0.18908519740253382,51.50544952706903]]}),

];

let locations = datatable(location_name:string, longitude:real, latitude:real)

[

"Empire State Building", -73.98567337898565, 40.74842629977377,

"Grand Central Terminal", -73.97713140725149, 40.752730320824895,

"Statue of Liberty", -74.04462223203123, 40.689195627512674,

"Kensington Palace", -0.1885272501232862, 51.504906159672316

];

locations

| evaluate geo_line_lookup(roads, road, longitude, latitude, 100, return_lookup_key = true)

Output

| location_name | longitude | latitude | road_name | road |

|---|---|---|---|---|

| Empire State Building | -73.9856733789857 | 40.7484262997738 | 5th Avenue NY | {“type”:“LineString”,“coordinates”:[[-73.97291864770574,40.76428551254824],[-73.99708638113894,40.73145135821781]]} |

| Kensington Palace | -0.188527250123286 | 51.5049061596723 | Palace Ave | {“type”:“LineString”,“coordinates”:[[-0.18756982045002247,51.50245944666557],[-0.18908519740253383,51.50544952706903]]} |

The following example returns both matching and nonmatching rows.

let roads = datatable(road_name:string, road:dynamic)

[

"5th Avenue NY", dynamic({"type":"LineString","coordinates":[[-73.97291864770574,40.76428551254824],[-73.99708638113894,40.73145135821781]]}),

"Palace Ave", dynamic({"type":"LineString","coordinates":[[-0.18756982045002246,51.50245944666557],[-0.18908519740253382,51.50544952706903]]}),

];

let locations = datatable(location_name:string, longitude:real, latitude:real)

[

"Empire State Building", -73.98567337898565, 40.74842629977377,

"Grand Central Terminal", -73.97713140725149, 40.752730320824895,

"Statue of Liberty", -74.04462223203123, 40.689195627512674,

"Kensington Palace", -0.1885272501232862, 51.504906159672316

];

locations

| evaluate geo_line_lookup(roads, road, longitude, latitude, 100, return_unmatched = true, return_lookup_key = true)

Output

| location_name | longitude | latitude | road_name | road |

|---|---|---|---|---|

| Empire State Building | -73.9856733789857 | 40.7484262997738 | 5th Avenue NY | {“type”:“LineString”,“coordinates”:[[-73.97291864770574,40.76428551254824],[-73.99708638113894,40.73145135821781]]} |

| Kensington Palace | -0.188527250123286 | 51.5049061596723 | Palace Ave | {“type”:“LineString”,“coordinates”:[[-0.18756982045002247,51.50245944666557],[-0.18908519740253383,51.50544952706903]]} |

| Statue of Liberty | -74.04462223203123 | 40.689195627512674 | | |

| Grand Central Terminal | -73.97713140725149 | 40.752730320824895 | | |

The following example returns both matching and nonmatching rows, with radius set to 350m.

let roads = datatable(road_name:string, road:dynamic)

[

"5th Avenue NY", dynamic({"type":"LineString","coordinates":[[-73.97291864770574,40.76428551254824],[-73.99708638113894,40.73145135821781]]}),

"Palace Ave", dynamic({"type":"LineString","coordinates":[[-0.18756982045002246,51.50245944666557],[-0.18908519740253382,51.50544952706903]]}),

];

let locations = datatable(location_name:string, longitude:real, latitude:real)

[

"Empire State Building", -73.98567337898565, 40.74842629977377,

"Grand Central Terminal", -73.97713140725149, 40.752730320824895,

"Statue of Liberty", -74.04462223203123, 40.689195627512674,

"Kensington Palace", -0.1885272501232862, 51.504906159672316

];

locations

| evaluate geo_line_lookup(roads, road, longitude, latitude, 350, return_unmatched = true, return_lookup_key = true)

Output

| location_name | longitude | latitude | road_name | road |

|---|---|---|---|---|

| Empire State Building | -73.9856733789857 | 40.7484262997738 | 5th Avenue NY | {“type”:“LineString”,“coordinates”:[[-73.97291864770574,40.76428551254824],[-73.99708638113894,40.73145135821781]]} |

| Kensington Palace | -0.188527250123286 | 51.5049061596723 | Palace Ave | {“type”:“LineString”,“coordinates”:[[-0.18756982045002247,51.50245944666557],[-0.18908519740253383,51.50544952706903]]} |

| Grand Central Terminal | -73.97713140725149 | 40.752730320824895 | 5th Avenue NY | {“type”:“LineString”,“coordinates”:[[-73.97291864770574,40.76428551254824],[-73.99708638113894,40.73145135821781]]} |

| Statue of Liberty | -74.04462223203123 | 40.689195627512674 | | |

The following example counts locations by proximity to road.

let roads = datatable(road_name:string, road:dynamic)

[

"5th Avenue NY", dynamic({"type":"LineString","coordinates":[[-73.97291864770574,40.76428551254824],[-73.99708638113894,40.73145135821781]]}),

"Palace Ave", dynamic({"type":"LineString","coordinates":[[-0.18756982045002246,51.50245944666557],[-0.18908519740253382,51.50544952706903]]}),

];

let locations = datatable(location_name:string, longitude:real, latitude:real)

[

"Empire State Building", -73.98567337898565, 40.74842629977377,

"Grand Central Terminal", -73.97713140725149, 40.752730320824895,

"Statue of Liberty", -74.04462223203123, 40.689195627512674,

"Kensington Palace", -0.1885272501232862, 51.504906159672316

];

locations

| evaluate geo_line_lookup(roads, road, longitude, latitude, 350)

| summarize count() by road_name

Output

| road_name | count_ |

|---|---|

| 5th Avenue NY | 2 |

| Palace Ave | 1 |

Related content

- Overview of geo_distance_point_to_line()

- Overview of geo_line_to_s2cells()

2 - geo_polygon_lookup plugin

The geo_polygon_lookup plugin looks up a Polygon value in a lookup table and returns rows with matched values. The plugin is invoked with the evaluate operator.

Syntax

T | evaluate geo_polygon_lookup( LookupTable , LookupPolygonKey , SourceLongitude , SourceLatitude , [ radius ] , [ return_unmatched ] , [ lookup_area_radius ] , [ return_lookup_key ] )

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The tabular input whose columns SourceLongitude and SourceLatitude are used for polygon matching. |

| LookupTable | string | ✔️ | Table or tabular expression with polygons lookup data, whose column LookupPolygonKey is used for polygon matching. |

| LookupPolygonKey | dynamic | ✔️ | The column of LookupTable with Polygon or multipolygon in the GeoJSON format and of dynamic type that is matched against each SourceLongitude, SourceLatitude value pair. |

| SourceLongitude | real | ✔️ | The column of T with longitude value to be looked up in LookupTable. Longitude value in degrees. Valid value is a real number and in the range [-180, +180]. |

| SourceLatitude | real | ✔️ | The column of T with latitude value to be looked up in LookupTable. Latitude value in degrees. Valid value is a real number and in the range [-90, +90]. |

| radius | real | | An optional distance, in meters, from the polygon borders within which a location is considered a match. |

| return_unmatched | bool | | An optional boolean flag that defines whether the result includes all rows or only matching rows (default: false, only matching rows are returned). |

| lookup_area_radius | real | | An optional lookup area radius, in meters, that might help match locations to their respective polygons. |

| return_lookup_key | bool | | An optional boolean flag that defines whether the result includes the LookupPolygonKey column (default: false). |

Returns

The geo_polygon_lookup plugin returns a result of join (lookup). The schema of the table is the union of the source table and the lookup table, similar to the result of the lookup operator.

Point containment in polygon is tested via geo_point_in_polygon(), or if radius is set, then geo_distance_point_to_polygon().
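As a rough illustration of the containment test, the following Python sketch uses planar ray casting in the longitude/latitude plane. That's a swapped-in technique, not what geo_point_in_polygon() does (it treats edges as geodesics on a sphere), so results can differ for large polygons or near the antimeridian. The name `point_in_polygon` is hypothetical, and the radius variant isn't modeled.

```python
def _in_ring(lon, lat, ring):
    """Even-odd ray casting against one closed ring of [lon, lat] pairs."""
    inside = False
    for (x1, y1), (x2, y2) in zip(ring, ring[1:]):
        # Count edges that straddle the point's latitude and cross east of the point.
        if (y1 > lat) != (y2 > lat):
            x_cross = x1 + (lat - y1) * (x2 - x1) / (y2 - y1)
            if lon < x_cross:
                inside = not inside
    return inside


def point_in_polygon(lon, lat, polygon):
    """True if the point is inside the shell and outside every hole of a GeoJSON-like Polygon."""
    shell, *holes = polygon["coordinates"]
    return _in_ring(lon, lat, shell) and not any(_in_ring(lon, lat, hole) for hole in holes)


new_york = {"type": "Polygon", "coordinates": [[[-73.97375470114766, 40.74300078124614],
                                                [-73.98653921014294, 40.75486501361894],
                                                [-73.99910622331991, 40.74112695466084],
                                                [-73.97375470114766, 40.74300078124614]]]}
print(point_in_polygon(-73.98567337898565, 40.74842629977377, new_york))   # Empire State Building: True
print(point_in_polygon(-74.04462223203123, 40.689195627512674, new_york))  # Statue of Liberty: False
```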

If the return_unmatched argument is set to true, the resulting table includes both matched and unmatched rows, with the lookup table columns of unmatched rows filled with nulls.

If the return_unmatched argument is set to false, or omitted (the default value of false is used), the resulting table has as many records as matching results. This variant of lookup has better performance compared to return_unmatched=true execution.

Setting the lookup_area_radius length overrides the internal matching mechanism and might improve or worsen run time and/or memory consumption. It doesn’t affect query correctness. Read more below on how to set this optional value.

Polygon definition and constraints

dynamic({"type": "Polygon","coordinates": [ LinearRingShell, LinearRingHole_1, …, LinearRingHole_N ]})

dynamic({"type": "MultiPolygon","coordinates": [[LinearRingShell, LinearRingHole_1, …, LinearRingHole_N ], …, [LinearRingShell, LinearRingHole_1, …, LinearRingHole_M]]})

- LinearRingShell is required and defined as a counterclockwise-ordered array of coordinates [[lng_1,lat_1],…,[lng_i,lat_i],…,[lng_j,lat_j],…,[lng_1,lat_1]]. There can be only one shell.

- LinearRingHole is optional and defined as a clockwise-ordered array of coordinates [[lng_1,lat_1],…,[lng_i,lat_i],…,[lng_j,lat_j],…,[lng_1,lat_1]]. There can be any number of interior rings and holes.

- LinearRing vertices must be distinct with at least three coordinates. The first coordinate must be equal to the last. At least four entries are required.

- Coordinates [longitude, latitude] must be valid. Longitude must be a real number in the range [-180, +180] and latitude must be a real number in the range [-90, +90].

- LinearRingShell encloses at most half of the sphere. A LinearRing divides the sphere into two regions, and the smaller of the two regions is chosen.

- LinearRing edge length must be less than 180 degrees. The shortest edge between the two vertices is chosen.

- LinearRings must not cross and must not share edges. LinearRings might share vertices.
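The ring rules above can be checked with a short Python sketch. It's hypothetical and approximate. `check_ring` computes orientation with the planar shoelace formula on raw longitude/latitude values, which stands in for spherical orientation. It doesn't test the 180-degree edge limit, the half-sphere limit, or ring crossings.

```python
def signed_area(ring):
    """Shoelace formula in the lon/lat plane: positive for counterclockwise rings."""
    return sum(x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in zip(ring, ring[1:])) / 2


def check_ring(ring, is_shell):
    """Return LinearRing constraint violations for a shell (is_shell=True) or a hole."""
    if not ring:
        return ["ring is empty"]
    problems = []
    if len(ring) < 4:
        problems.append("at least four entries are required")
    if ring[0] != ring[-1]:
        problems.append("first and last coordinates must be equal")
    if len({tuple(p) for p in ring[:-1]}) < 3:
        problems.append("at least three distinct vertices are required")
    area = signed_area(ring)
    if is_shell and area <= 0:
        problems.append("shell must be ordered counterclockwise")
    if not is_shell and area >= 0:
        problems.append("hole must be ordered clockwise")
    return problems


square = [[0, 0], [10, 0], [10, 10], [0, 10], [0, 0]]
print(check_ring(square, is_shell=True))        # []
print(check_ring(square[::-1], is_shell=True))  # ['shell must be ordered counterclockwise']
```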

Setting lookup_area_radius (if needed)

Setting the lookup area radius overrides the internal mechanism for matching locations to their respective polygons. The value is a distance in meters. Ideally, the lookup area radius represents a distance from a polygon's center such that a point within that distance matches exactly one polygon, and no more than one polygon lies within that distance. Because polygon data might be large, and polygons can vary greatly in size, shape, and proximity to one another, finding the radius that performs best can be challenging. If needed, the following query might help.

PolygonsTable | project value = sqrt(geo_polygon_area(polygon)) | summarize min = min(value), avg = avg(value), max = max(value)

Try a lookup radius that starts at the average value and moves toward either the minimum (if the polygons are close to each other) or the maximum, by factors of 2.

Examples

The following example returns only matching rows.

let polygons = datatable(polygon_name:string, polygon:dynamic)

[

"New York", dynamic({"type":"Polygon", "coordinates":[[[-73.97375470114766,40.74300078124614],[-73.98653921014294,40.75486501361894],[-73.99910622331991,40.74112695466084],[-73.97375470114766,40.74300078124614]]]}),

"Paris", dynamic({"type":"Polygon","coordinates":[[[2.57564669886321,48.769567764921334],[2.420098611499384,49.05163394896812],[2.1016783119165723,48.80113794475062],[2.57564669886321,48.769567764921334]]]}),

];

let locations = datatable(location_name:string, longitude:real, latitude:real)

[

"Empire State Building", -73.98567337898565, 40.74842629977377,

"NY National Museum of Mathematics", -73.98778501496217, 40.743565232771545,

"Eiffel Tower", 2.294489426068907, 48.858263476169185,

"London", -0.13245599272019604, 51.49879464208368,

"Statue of Liberty", -74.04462223203123, 40.689195627512674,

];

locations

| evaluate geo_polygon_lookup(polygons, polygon, longitude, latitude, return_lookup_key = true)

Output

| location_name | longitude | latitude | polygon_name | polygon |

|---|---|---|---|---|

| NY National Museum of Mathematics | -73.9877850149622 | 40.7435652327715 | New York | {“type”:“Polygon”,“coordinates”:[[[-73.97375470114766,40.74300078124614],[-73.98653921014294,40.75486501361894],[-73.99910622331992,40.74112695466084],[-73.97375470114766,40.74300078124614]]]} |

| Empire State Building | -73.9856733789857 | 40.7484262997738 | New York | {“type”:“Polygon”,“coordinates”:[[[-73.97375470114766,40.74300078124614],[-73.98653921014294,40.75486501361894],[-73.99910622331992,40.74112695466084],[-73.97375470114766,40.74300078124614]]]} |

| Eiffel Tower | 2.29448942606891 | 48.8582634761692 | Paris | {“type”:“Polygon”,“coordinates”:[[[2.57564669886321,48.769567764921337],[2.420098611499384,49.05163394896812],[2.1016783119165725,48.80113794475062],[2.57564669886321,48.769567764921337]]]} |

The following example returns both matching and nonmatching rows.

let polygons = datatable(polygon_name:string, polygon:dynamic)

[

"New York", dynamic({"type":"Polygon", "coordinates":[[[-73.97375470114766,40.74300078124614],[-73.98653921014294,40.75486501361894],[-73.99910622331991,40.74112695466084],[-73.97375470114766,40.74300078124614]]]}),

"Paris", dynamic({"type":"Polygon","coordinates":[[[2.57564669886321,48.769567764921334],[2.420098611499384,49.05163394896812],[2.1016783119165723,48.80113794475062],[2.57564669886321,48.769567764921334]]]}),

];

let locations = datatable(location_name:string, longitude:real, latitude:real)

[

"Empire State Building", -73.98567337898565, 40.74842629977377,

"NY National Museum of Mathematics", -73.98778501496217, 40.743565232771545,

"Eiffel Tower", 2.294489426068907, 48.858263476169185,

"London", -0.13245599272019604, 51.49879464208368,

"Statue of Liberty", -74.04462223203123, 40.689195627512674,

];

locations

| evaluate geo_polygon_lookup(polygons, polygon, longitude, latitude, return_unmatched = true, return_lookup_key = true)

Output

| location_name | longitude | latitude | polygon_name | polygon |

|---|---|---|---|---|

| NY National Museum of Mathematics | -73.9877850149622 | 40.7435652327715 | New York | {“type”:“Polygon”,“coordinates”:[[[-73.97375470114766,40.74300078124614],[-73.98653921014294,40.75486501361894],[-73.99910622331992,40.74112695466084],[-73.97375470114766,40.74300078124614]]]} |

| Empire State Building | -73.9856733789857 | 40.7484262997738 | New York | {“type”:“Polygon”,“coordinates”:[[[-73.97375470114766,40.74300078124614],[-73.98653921014294,40.75486501361894],[-73.99910622331992,40.74112695466084],[-73.97375470114766,40.74300078124614]]]} |

| Eiffel Tower | 2.29448942606891 | 48.8582634761692 | Paris | {“type”:“Polygon”,“coordinates”:[[[2.57564669886321,48.769567764921337],[2.420098611499384,49.05163394896812],[2.1016783119165725,48.80113794475062],[2.57564669886321,48.769567764921337]]]} |

| Statue of Liberty | -74.04462223203123 | 40.689195627512674 | | |

| London | -0.13245599272019604 | 51.498794642083681 | | |

The following example returns both matching and nonmatching rows where radius is set to 7 km.

let polygons = datatable(polygon_name:string, polygon:dynamic)

[

"New York", dynamic({"type":"Polygon", "coordinates":[[[-73.97375470114766,40.74300078124614],[-73.98653921014294,40.75486501361894],[-73.99910622331991,40.74112695466084],[-73.97375470114766,40.74300078124614]]]}),

"Paris", dynamic({"type":"Polygon","coordinates":[[[2.57564669886321,48.769567764921334],[2.420098611499384,49.05163394896812],[2.1016783119165723,48.80113794475062],[2.57564669886321,48.769567764921334]]]}),

];

let locations = datatable(location_name:string, longitude:real, latitude:real)

[

"Empire State Building", -73.98567337898565, 40.74842629977377,

"NY National Museum of Mathematics", -73.98778501496217, 40.743565232771545,

"Eiffel Tower", 2.294489426068907, 48.858263476169185,

"London", -0.13245599272019604, 51.49879464208368,

"Statue of Liberty", -74.04462223203123, 40.689195627512674,

];

locations

| evaluate geo_polygon_lookup(polygons, polygon, longitude, latitude, radius = 7000, return_unmatched = true, return_lookup_key = true)

Output

| location_name | longitude | latitude | polygon_name | polygon |

|---|---|---|---|---|

| NY National Museum of Mathematics | -73.9877850149622 | 40.7435652327715 | New York | {“type”:“Polygon”,“coordinates”:[[[-73.97375470114766,40.74300078124614],[-73.98653921014294,40.75486501361894],[-73.99910622331992,40.74112695466084],[-73.97375470114766,40.74300078124614]]]} |

| Empire State Building | -73.9856733789857 | 40.7484262997738 | New York | {“type”:“Polygon”,“coordinates”:[[[-73.97375470114766,40.74300078124614],[-73.98653921014294,40.75486501361894],[-73.99910622331992,40.74112695466084],[-73.97375470114766,40.74300078124614]]]} |

| Eiffel Tower | 2.29448942606891 | 48.8582634761692 | Paris | {“type”:“Polygon”,“coordinates”:[[[2.57564669886321,48.769567764921337],[2.420098611499384,49.05163394896812],[2.1016783119165725,48.80113794475062],[2.57564669886321,48.769567764921337]]]} |

| Statue of Liberty | -74.04462223203123 | 40.689195627512674 | New York | {“type”:“Polygon”,“coordinates”:[[[-73.97375470114766,40.74300078124614],[-73.98653921014294,40.75486501361894],[-73.99910622331992,40.74112695466084],[-73.97375470114766,40.74300078124614]]]} |

| London | -0.13245599272019604 | 51.498794642083681 | | |

The following example counts locations by polygon.

let polygons = datatable(polygon_name:string, polygon:dynamic)

[

"New York", dynamic({"type":"Polygon", "coordinates":[[[-73.97375470114766,40.74300078124614],[-73.98653921014294,40.75486501361894],[-73.99910622331991,40.74112695466084],[-73.97375470114766,40.74300078124614]]]}),

"Paris", dynamic({"type":"Polygon","coordinates":[[[2.57564669886321,48.769567764921334],[2.420098611499384,49.05163394896812],[2.1016783119165723,48.80113794475062],[2.57564669886321,48.769567764921334]]]}),

];

let locations = datatable(location_name:string, longitude:real, latitude:real)

[

"Empire State Building", -73.98567337898565, 40.74842629977377,

"NY National Museum of Mathematics", -73.98778501496217, 40.743565232771545,

"Eiffel Tower", 2.294489426068907, 48.858263476169185,

"London", -0.13245599272019604, 51.49879464208368,

"Statue of Liberty", -74.04462223203123, 40.689195627512674,

];

locations

| evaluate geo_polygon_lookup(polygons, polygon, longitude, latitude)

| summarize count() by polygon_name

Output

| polygon_name | count_ |

|---|---|

| New York | 2 |

| Paris | 1 |

Related content

- Overview of geo_point_in_polygon()

- Overview of geo_distance_point_to_polygon()

- Overview of geo_polygon_to_s2cells()

3 - Data reshaping plugins

3.1 - bag_unpack plugin

The bag_unpack plugin unpacks a single column of type dynamic by treating each top-level slot of the property bag as a column. The plugin is invoked with the evaluate operator.

Syntax

T | evaluate bag_unpack( Column [, OutputColumnPrefix ] [, columnsConflict ] [, ignoredProperties ] ) [: OutputSchema]

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The tabular input whose column Column is to be unpacked. |

| Column | dynamic | ✔️ | The column of T to unpack. |

| OutputColumnPrefix | string | | A common prefix to add to all columns produced by the plugin. |

| columnsConflict | string | | The direction for column conflict resolution. Valid values: error - Query produces an error (default); replace_source - Source column is replaced; keep_source - Source column is kept. |

| ignoredProperties | dynamic | | An optional set of bag properties to be ignored. |

| OutputSchema | | | Specify the column names and types for the bag_unpack plugin output. For syntax information, see Output schema syntax, and to understand the implications, see Performance considerations. |

Output schema syntax

( ColumnName : ColumnType [, …] )

Use a wildcard * as the first parameter to include all columns of the source table in the output, as follows:

( * , ColumnName : ColumnType [, …] )
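To illustrate why a declared OutputSchema helps, the following Python sketch projects each bag onto a fixed list of columns without first scanning the data to discover slots. It models the semantics only. `apply_output_schema` is hypothetical, and Python callables such as `str` and `int` stand in for Kusto types. A failed conversion simply raises here, which may not match the engine's behavior.

```python
def apply_output_schema(rows, column, schema, wildcard=False):
    """Project the dynamic `column` of each row onto `schema`, a list of (name, converter) pairs."""
    result = []
    for row in rows:
        # With the wildcard, keep every source column except the unpacked one.
        out = {k: v for k, v in row.items() if k != column} if wildcard else {}
        bag = row.get(column) or {}
        for name, convert in schema:
            value = bag.get(name)
            out[name] = None if value is None else convert(value)
        result.append(out)
    return result


rows = [
    {"d": {"Name": "John", "Age": 20, "height": 180}, "Description": "Student"},
    {"d": {"Name": "Dave", "Age": 40, "height": 160}, "Description": "Teacher"},
]
print(apply_output_schema(rows, "d", [("Name", str), ("Age", int)], wildcard=True))
# [{'Description': 'Student', 'Name': 'John', 'Age': 20}, {'Description': 'Teacher', 'Name': 'Dave', 'Age': 40}]
```

Slots that aren't in the schema (here, height) are dropped, and the column list is known before any row is read.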

Performance considerations

Using the plugin without an OutputSchema can have severe performance implications in large datasets and should be avoided.

Providing an OutputSchema allows the query engine to optimize query execution, because it can determine the output schema without parsing and analyzing the input data. An OutputSchema is especially beneficial when the input data is large or complex. See Examples with performance implications for a comparison of the plugin with and without a defined OutputSchema.

Returns

The bag_unpack plugin returns a table with as many records as its tabular input (T). The schema of the table is the same as the schema of its tabular input with the following modifications:

- The specified input column (Column) is removed.

- The name of each column corresponds to the name of each slot, optionally prefixed by OutputColumnPrefix.

- The type of each column is either the type of the slot, if all values of the same slot have the same type, or dynamic, if the values differ in type.

- The schema is extended with as many columns as there are distinct slots in the top-level property bag values of T.
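The schema rules above can be modeled in a few lines of Python. This sketch is hypothetical (`bag_unpack_rows` isn't a real API). It uses Python type names in place of Kusto types, skips null values when inferring types, and sorts the new columns only to get a deterministic order. Column name conflicts aren't handled here.

```python
def bag_unpack_rows(rows, column, prefix="", ignored=()):
    """Unpack the dict in `column` into top-level columns; return (schema, rows)."""
    slot_types = {}
    for row in rows:
        for key, value in (row.get(column) or {}).items():
            if key in ignored or value is None:
                continue
            t = type(value).__name__
            # A slot keeps its type only while all non-null values agree; otherwise it's dynamic.
            slot_types[key] = t if slot_types.get(key, t) == t else "dynamic"
    slots = sorted(slot_types)
    result = []
    for row in rows:
        out = {k: v for k, v in row.items() if k != column}  # the unpacked column is removed
        bag = row.get(column) or {}
        for key in slots:
            out[prefix + key] = bag.get(key)
        result.append(out)
    schema = {prefix + key: slot_types[key] for key in slots}
    return schema, result


rows = [
    {"d": {"Name": "John", "Age": 20}},
    {"d": {"Name": "Dave", "Height": 170, "Age": 40}},
    {"d": {"Name": "Jasmine", "Age": 30}},
]
schema, unpacked = bag_unpack_rows(rows, "d")
print(schema)       # {'Age': 'int', 'Height': 'int', 'Name': 'str'}
print(unpacked[0])  # {'Age': 20, 'Height': None, 'Name': 'John'}
```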

Tabular schema rules apply to the input data. In particular:

- An output column name can’t be the same as an existing column in the tabular input T, unless it’s the column to unpack (Column). Otherwise, the output includes two columns with the same name.

- All slot names, when prefixed by OutputColumnPrefix, must be valid entity names and follow the identifier naming rules.

The plugin ignores null values.
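The columnsConflict modes from the parameter table can be sketched in Python as follows. The function `unpack_with_conflicts` is hypothetical. It treats a conflict as a table-level property (a slot name equal to an existing column name), and in replace_source mode, rows whose bag lacks the conflicting slot get None.

```python
def unpack_with_conflicts(rows, column, mode="error"):
    """Unpack `column` and resolve name clashes with source columns per columnsConflict."""
    if mode not in ("error", "replace_source", "keep_source"):
        raise ValueError(f"unknown columnsConflict value: {mode!r}")
    slots = sorted({key for row in rows for key, value in (row.get(column) or {}).items()
                    if value is not None})
    source_columns = [c for c in rows[0] if c != column] if rows else []
    conflicts = set(slots) & set(source_columns)
    if conflicts and mode == "error":
        raise ValueError(f"column name conflict: {sorted(conflicts)}")
    result = []
    for row in rows:
        bag = row.get(column) or {}
        out = {c: row[c] for c in source_columns}
        for key in slots:
            if key in conflicts and mode == "keep_source":
                continue  # keep the source value and drop the bag slot
            out[key] = bag.get(key)  # replace_source overwrites, even with None
        result.append(out)
    return result


rows = [
    {"Name": "James", "d": {"Name": "John", "Age": 20}},
    {"Name": "David", "d": {"Age": 40}},
    {"Name": "Emily", "d": {"Name": "Jasmine", "Age": 30}},
]
print(unpack_with_conflicts(rows, "d", mode="replace_source")[1])  # {'Name': None, 'Age': 40}
print(unpack_with_conflicts(rows, "d", mode="keep_source")[1])     # {'Name': 'David', 'Age': 40}
```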

Examples

The examples in this section show how to use the syntax to help you get started.

Expand a bag:

datatable(d:dynamic)

[

dynamic({"Name": "John", "Age":20}),

dynamic({"Name": "Dave", "Age":40}),

dynamic({"Name": "Jasmine", "Age":30}),

]

| evaluate bag_unpack(d)

Output

| Age | Name |

|---|---|

| 20 | John |

| 40 | Dave |

| 30 | Jasmine |

Expand a bag and use the OutputColumnPrefix option to produce column names with a prefix:

datatable(d:dynamic)

[

dynamic({"Name": "John", "Age":20}),

dynamic({"Name": "Dave", "Age":40}),

dynamic({"Name": "Jasmine", "Age":30}),

]

| evaluate bag_unpack(d, 'Property_')

Output

| Property_Age | Property_Name |

|---|---|

| 20 | John |

| 40 | Dave |

| 30 | Jasmine |

Expand a bag and use the columnsConflict option to resolve a column conflict between the dynamic column and the existing column:

datatable(Name:string, d:dynamic)

[

'James', dynamic({"Name": "John", "Age":20}),

'David', dynamic({ "Age":40}),

'Emily', dynamic({"Name": "Jasmine", "Age":30}),

]

| evaluate bag_unpack(d, columnsConflict='replace_source') // Replace old column Name by new column

Output

| Name | Age |

|---|---|

| John | 20 |

| | 40 |

| Jasmine | 30 |

datatable(Name:string, d:dynamic)

[

'James', dynamic({"Name": "John", "Age":20}),

'David', dynamic({"Name": "Dave", "Age":40}),

'Emily', dynamic({"Name": "Jasmine", "Age":30}),

]

| evaluate bag_unpack(d, columnsConflict='keep_source') // Keep old column Name

Output

| Name | Age |

|---|---|

| James | 20 |

| David | 40 |

| Emily | 30 |

Expand a bag and use the ignoredProperties option to ignore 2 of the properties in the property bag:

datatable(d:dynamic)

[

dynamic({"Name": "John", "Age":20, "Address": "Address-1" }),

dynamic({"Name": "Dave", "Age":40, "Address": "Address-2"}),

dynamic({"Name": "Jasmine", "Age":30, "Address": "Address-3"}),

]

// Ignore 'Age' and 'Address' properties

| evaluate bag_unpack(d, ignoredProperties=dynamic(['Address', 'Age']))

Output

| Name |

|---|

| John |

| Dave |

| Jasmine |

Expand a bag without an OutputSchema, where not every value contains the same slots:

datatable(d:dynamic)

[

dynamic({"Name": "John", "Age":20}),

dynamic({ "Name": "Dave", "Height": 170, "Age":40}),

dynamic({"Name": "Jasmine", "Age":30}),

]

| evaluate bag_unpack(d)

Output

| Age | Height | Name |

|---|---|---|

| 20 | | John |

| 40 | 170 | Dave |

| 30 | | Jasmine |

Expand a bag with an OutputSchema and use the wildcard * option:

This query returns the original column Description and the columns defined in the OutputSchema.

datatable(d:dynamic, Description: string)

[

dynamic({"Name": "John", "Age":20, "height":180}), "Student",

dynamic({"Name": "Dave", "Age":40, "height":160}), "Teacher",

dynamic({"Name": "Jasmine", "Age":30, "height":172}), "Student",

]

| evaluate bag_unpack(d) : (*, Name:string, Age:long)

Output

| Description | Name | Age |

|---|---|---|

| Student | John | 20 |

| Teacher | Dave | 40 |

| Student | Jasmine | 30 |

Examples with performance implications

Expand a bag with and without a defined OutputSchema to compare performance implications:

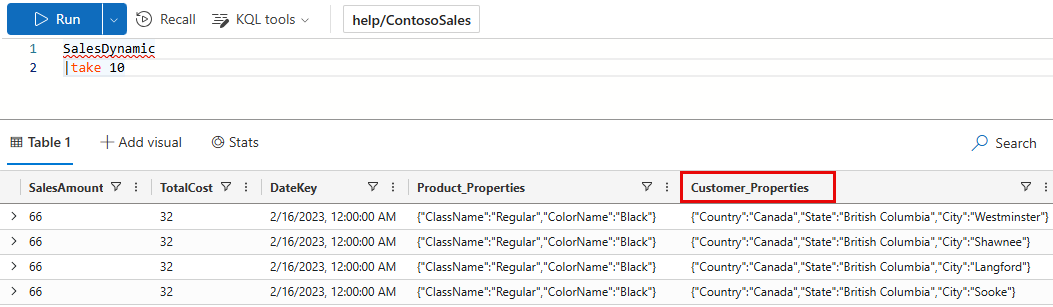

This example uses a publicly available table in the help cluster. In the ContosoSales database, there’s a table called SalesDynamic. The table contains sales data and includes a dynamic column named Customer_Properties.

Example with no output schema: The first query doesn’t define an OutputSchema. The query takes 5.84 seconds of CPU and scans 36.39 MB of data.


SalesDynamic | evaluate bag_unpack(Customer_Properties) | summarize Sales=sum(SalesAmount) by Country, State

Example with output schema: The second query does provide an OutputSchema. The query takes 0.45 seconds of CPU and scans 19.31 MB of data. The query doesn’t have to analyze the input table, saving on processing time.


SalesDynamic | evaluate bag_unpack(Customer_Properties) : (*, Country:string, State:string, City:string) | summarize Sales=sum(SalesAmount) by Country, State

Output

The output is the same for both queries. The first 10 rows of the output are shown below.

| Country | State | Sales |

|---|---|---|

| Canada | British Columbia | 56,101,083 |

| United Kingdom | England | 77,288,747 |

| Australia | Victoria | 31,242,423 |

| Australia | Queensland | 27,617,822 |

| Australia | South Australia | 8,530,537 |

| Australia | New South Wales | 54,765,786 |

| Australia | Tasmania | 3,704,648 |

| Canada | Alberta | 375,061 |

| Canada | Ontario | 38,282 |

| United States | Washington | 80,544,870 |

| … | … | … |
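The performance difference comes from schema discovery. The following plain-Python sketch (not the plugin's implementation) models a row as a dict whose bag column holds another dict. Without a key list it must first scan every row to collect the full set of bag keys; with a key list, which plays the role of an OutputSchema such as (*, Name:string, Age:long), the columns are known up front and unlisted keys are dropped. The function name and parameters are hypothetical, and type conversion to the declared column types is not modeled.

```python
def bag_unpack(rows, bag_column, keys=None):
    """Sketch of bag_unpack semantics over a list of dict rows.

    keys=None mimics calling bag_unpack without an OutputSchema.
    A list of keys mimics an OutputSchema of the form (*, key1, key2, ...).
    """
    if keys is None:
        # Schema discovery: a full pass over the input to find every bag key.
        keys = list(dict.fromkeys(k for row in rows for k in row[bag_column]))
    result = []
    for row in rows:
        # "*" keeps the original columns, except the bag column itself.
        out = {c: v for c, v in row.items() if c != bag_column}
        bag = row[bag_column]
        for k in keys:
            out[k] = bag.get(k)  # missing keys become None (null)
        result.append(out)
    return result
```

Passing ["Name", "Age"] for the example data earlier in this section yields the Description, Name, and Age columns only, matching the height-less output shown for the schema-constrained query.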


3.2 - narrow plugin

The narrow plugin “unpivots” a wide table into a table with three columns:

- Row number

- Column name

- Column value (as string)

The narrow plugin is designed mainly for display purposes, because it lets wide tables be displayed comfortably without horizontal scrolling.

The plugin is invoked with the evaluate operator.

Syntax

T | evaluate narrow()

Examples

The following example shows an easy way to read the output of the Kusto .show diagnostics management command.

.show diagnostics

| evaluate narrow()

The output of .show diagnostics is a table with a single row and 33 columns. The narrow plugin “rotates” this output into something like this:

| Row | Column | Value |

|---|---|---|

| 0 | IsHealthy | True |

| 0 | IsRebalanceRequired | False |

| 0 | IsScaleOutRequired | False |

| 0 | MachinesTotal | 2 |

| 0 | MachinesOffline | 0 |

| 0 | NodeLastRestartedOn | 2017-03-14 10:59:18.9263023 |

| 0 | AdminLastElectedOn | 2017-03-14 10:58:41.6741934 |

| 0 | ClusterWarmDataCapacityFactor | 0.130552847673333 |

| 0 | ExtentsTotal | 136 |

| 0 | DiskColdAllocationPercentage | 5 |

| 0 | InstancesTargetBasedOnDataCapacity | 2 |

| 0 | TotalOriginalDataSize | 5167628070 |

| 0 | TotalExtentSize | 1779165230 |

| 0 | IngestionsLoadFactor | 0 |

| 0 | IngestionsInProgress | 0 |

| 0 | IngestionsSuccessRate | 100 |

| 0 | MergesInProgress | 0 |

| 0 | BuildVersion | 1.0.6281.19882 |

| 0 | BuildTime | 2017-03-13 11:02:44.0000000 |

| 0 | ClusterDataCapacityFactor | 0.130552847673333 |

| 0 | IsDataWarmingRequired | False |

| 0 | RebalanceLastRunOn | 2017-03-21 09:14:53.8523455 |

| 0 | DataWarmingLastRunOn | 2017-03-21 09:19:54.1438800 |

| 0 | MergesSuccessRate | 100 |

| 0 | NotHealthyReason | [null] |

| 0 | IsAttentionRequired | False |

| 0 | AttentionRequiredReason | [null] |

| 0 | ProductVersion | KustoRelease_2017.03.13.2 |

| 0 | FailedIngestOperations | 0 |

| 0 | FailedMergeOperations | 0 |

| 0 | MaxExtentsInSingleTable | 64 |

| 0 | TableWithMaxExtents | KustoMonitoringPersistentDatabase.KustoMonitoringTable |

| 0 | WarmExtentSize | 1779165230 |
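The rotation can be sketched in plain Python, assuming each input row is a dict of column name to value. This is an illustration of the output shape, not the plugin's implementation: every cell becomes one (row number, column name, value as string) triple, with row numbers starting at 0 as in the output above. How the plugin renders nulls is not modeled.

```python
def narrow(rows):
    """Unpivot a list of dict rows into (row_number, column, value_as_string) triples."""
    out = []
    for row_number, row in enumerate(rows):
        for column, value in row.items():
            # Every value is converted to its string form, as in the Value column.
            out.append((row_number, column, str(value)))
    return out
```

For a single-row input with 33 columns, such as the .show diagnostics result, this yields 33 triples that all have row number 0.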

3.3 - pivot plugin

Rotates a table by turning the unique values of one column in the input table into multiple columns in the output table, and aggregates any remaining column values that appear in the final output.

Syntax

T | evaluate pivot(pivotColumn[, aggregationFunction] [,column1 [,column2 … ]]) [: OutputSchema]

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| pivotColumn | string | ✔️ | The column to rotate. Each unique value from this column will be a column in the output table. |

| aggregationFunction | string | | An aggregation function used to aggregate multiple rows in the input table to a single row in the output table. Currently supported functions: min(), max(), take_any(), sum(), dcount(), avg(), stdev(), variance(), make_list(), make_bag(), make_set(), count(). The default is count(). |

| column1, column2, … | string | | A column name or comma-separated list of column names. The output table contains an additional column for each specified column. The default is all columns other than the pivoted column and the aggregation column. |

| OutputSchema | | | The names and types for the expected columns of the pivot plugin output. Syntax: ( ColumnName : ColumnType [, …] ). Specifying the expected schema optimizes query execution by not having to first run the actual query to explore the schema. An error is raised if the run-time schema doesn’t match the OutputSchema schema. |

Returns

Pivot returns the rotated table with the specified columns (column1, column2, …) plus one column for each unique value of the pivot column. Each cell in the pivoted columns contains the result of the aggregation function.
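As a rough model of this behavior, the plain-Python sketch below groups dict rows by the remaining columns, creates one output column per unique pivot value, and applies an aggregation to the values that land in each cell. It is a sketch under stated assumptions, not the plugin's implementation: agg=len stands in for count(), agg=sum for sum(), and the output column order (first-seen) is an assumption. The function and parameter names are hypothetical.

```python
from collections import defaultdict

def pivot(rows, pivot_column, agg=len, value_column=None, by=None):
    """Sketch of pivot semantics over a list of dict rows.

    agg receives the list of values in one output cell and must accept an
    empty list (len, standing in for count(), and sum both do).
    """
    if by is None:
        # Default grouping: every column except the pivot and aggregated columns.
        by = [c for c in rows[0] if c not in (pivot_column, value_column)]
    # One output column per unique pivot value, in first-seen order (assumption).
    pivot_values = list(dict.fromkeys(r[pivot_column] for r in rows))
    groups = {}                # group key -> None, keeps first-seen order
    cells = defaultdict(list)  # (group key, pivot value) -> values to aggregate
    for r in rows:
        key = tuple(r[c] for c in by)
        groups.setdefault(key, None)
        cells[(key, r[pivot_column])].append(r.get(value_column))
    result = []
    for key in groups:
        out = dict(zip(by, key))
        for value in pivot_values:
            out[value] = agg(cells[(key, value)])
        result.append(out)
    return result
```

Calling pivot(rows, "State") corresponds to evaluate pivot(State), and pivot(rows, "State", agg=sum, value_column="DeathsDirect") corresponds to evaluate pivot(State, sum(DeathsDirect)).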

Examples

Pivot by a column

For each EventType containing the term “Wind” and each State starting with ‘AL’, count the number of events of this type in this state.

StormEvents

| project State, EventType

| where State startswith "AL"

| where EventType has "Wind"

| evaluate pivot(State)

Output

| EventType | ALABAMA | ALASKA |

|---|---|---|

| Thunderstorm Wind | 352 | 1 |

| High Wind | 0 | 95 |

| Extreme Cold/Wind Chill | 0 | 10 |

| Strong Wind | 22 | 0 |

Pivot by a column with aggregation function

For each EventType and State starting with ‘AR’, display the total number of direct deaths.

StormEvents

| where State startswith "AR"

| project State, EventType, DeathsDirect

| where DeathsDirect > 0

| evaluate pivot(State, sum(DeathsDirect))

Output

| EventType | ARKANSAS | ARIZONA |

|---|---|---|

| Heavy Rain | 1 | 0 |

| Thunderstorm Wind | 1 | 0 |

| Lightning | 0 | 1 |

| Flash Flood | 0 | 6 |

| Strong Wind | 1 | 0 |

| Heat | 3 | 0 |

Pivot by a column with aggregation function and a single additional column

The result is identical to the previous example.

StormEvents

| where State startswith "AR"

| project State, EventType, DeathsDirect

| where DeathsDirect > 0

| evaluate pivot(State, sum(DeathsDirect), EventType)

Output

| EventType | ARKANSAS | ARIZONA |

|---|---|---|

| Heavy Rain | 1 | 0 |

| Thunderstorm Wind | 1 | 0 |

| Lightning | 0 | 1 |

| Flash Flood | 0 | 6 |

| Strong Wind | 1 | 0 |

| Heat | 3 | 0 |

Specify the pivoted column, aggregation function, and multiple additional columns

For each event type, source, and state, sum the number of direct deaths.

StormEvents

| where State startswith "AR"

| where DeathsDirect > 0

| evaluate pivot(State, sum(DeathsDirect), EventType, Source)

Output

| EventType | Source | ARKANSAS | ARIZONA |

|---|---|---|---|

| Heavy Rain | Emergency Manager | 1 | 0 |

| Thunderstorm Wind | Emergency Manager | 1 | 0 |

| Lightning | Newspaper | 0 | 1 |

| Flash Flood | Trained Spotter | 0 | 2 |

| Flash Flood | Broadcast Media | 0 | 3 |

| Flash Flood | Newspaper | 0 | 1 |

| Strong Wind | Law Enforcement | 1 | 0 |

| Heat | Newspaper | 3 | 0 |

Pivot with a query-defined output schema

The following example selects specific columns in the StormEvents table. It uses an explicit schema definition that allows various optimizations to be evaluated before running the actual query.

StormEvents

| project State, EventType

| where EventType has "Wind"

| evaluate pivot(State): (EventType:string, ALABAMA:long, ALASKA:long)

Output

| EventType | ALABAMA | ALASKA |

|---|---|---|

| Thunderstorm Wind | 352 | 1 |

| High Wind | 0 | 95 |

| Marine Thunderstorm Wind | 0 | 0 |

| Strong Wind | 22 | 0 |

| Extreme Cold/Wind Chill | 0 | 10 |

| Cold/Wind Chill | 0 | 0 |

| Marine Strong Wind | 0 | 0 |

| Marine High Wind | 0 | 0 |

4 - General plugins

4.1 - dcount_intersect plugin

Calculates the intersection between N sets based on hll values (N in the range [2..16]) and returns N dcount values. The plugin is invoked with the evaluate operator.

Syntax

T | evaluate dcount_intersect(hll_1, hll_2 [, hll_3, …])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The input tabular expression. |

| hll_i | | | The values of set Si calculated with the hll() function. |

Returns

Returns a table with N dcount values, one per column, each representing a set intersection.

Column names are s0, s1, …, s(N-1).

Given sets S1, S2, …, Sn, the returned values represent the distinct counts of:

- S1

- S1 ∩ S2

- S1 ∩ S2 ∩ S3

- …

- S1 ∩ S2 ∩ … ∩ Sn
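The cumulative-intersection semantics can be illustrated with exact Python sets in place of hll() sketches. This is a substitution for clarity: the plugin works on HLL sketches, so its results are estimates, while this version is exact. Because no placeholder value is mixed in, the "- 1" correction used in the KQL example is not needed here. The function name is hypothetical.

```python
def dcount_intersect(*sets):
    """Exact-set analogue: s0 = |S1|, s1 = |S1 ∩ S2|, ..., s(N-1) = |S1 ∩ ... ∩ Sn|."""
    if not 2 <= len(sets) <= 16:
        raise ValueError("expected between 2 and 16 sets")
    running = set(sets[0])
    counts = [len(running)]
    for s in sets[1:]:
        running &= set(s)  # narrow the running intersection by the next set
        counts.append(len(running))
    return {f"s{i}": c for i, c in enumerate(counts)}
```

With the numbers 1 to 100 split into multiples of 2, 3, and 5, this reproduces the expected counts from the example below: 50, 16, and 3.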

Examples

// Generate numbers from 1 to 100

range x from 1 to 100 step 1

| extend isEven = (x % 2 == 0), isMod3 = (x % 3 == 0), isMod5 = (x % 5 == 0)

// Calculate conditional HLL values (note that '0' is included in each of them as additional value, so we will subtract it later)

| summarize hll_even = hll(iif(isEven, x, 0), 2),

hll_mod3 = hll(iif(isMod3, x, 0), 2),

hll_mod5 = hll(iif(isMod5, x, 0), 2)

// Invoke the plugin that calculates dcount intersections

| evaluate dcount_intersect(hll_even, hll_mod3, hll_mod5)

| project evenNumbers = s0 - 1, // 100 / 2 = 50

even_and_mod3 = s1 - 1, // lcm(2,3) = 6, therefore: 100 / 6 = 16

even_and_mod3_and_mod5 = s2 - 1 // lcm(2,3,5) = 30, therefore: 100 / 30 = 3

Output

| evenNumbers | even_and_mod3 | even_and_mod3_and_mod5 |

|---|---|---|

| 50 | 16 | 3 |


4.2 - infer_storage_schema plugin

This plugin infers the schema of external data and returns it as a CSL schema string. The string can be used when creating external tables. The plugin is invoked with the evaluate operator.

Authentication and authorization

In the properties of the request, you specify storage connection strings to access. Each storage connection string specifies the authorization method to use for access to the storage. Depending on the authorization method, the principal may need to be granted permissions on the external storage to perform the schema inference.

The following table lists the supported authentication methods and any required permissions by storage type.

| Authentication method | Azure Blob Storage / Data Lake Storage Gen2 | Data Lake Storage Gen1 |

|---|---|---|

| Impersonation | Storage Blob Data Reader | Reader |

| Managed Identity | Storage Blob Data Reader | Reader |

| Shared Access (SAS) token | List + Read | This authentication method isn’t supported in Gen1. |

| Microsoft Entra access token | | |

| Storage account access key | | This authentication method isn’t supported in Gen1. |


Syntax

evaluate infer_storage_schema( Options )

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| Options | dynamic | ✔️ | A property bag specifying the properties of the request. |

Supported properties of the request

| Name | Type | Required | Description |

|---|---|---|---|

| StorageContainers | dynamic | ✔️ | An array of storage connection strings that represent prefix URI for stored data artifacts. |

| DataFormat | string | ✔️ | One of the supported data formats. |

| FileExtension | string | | If specified, the function only scans files ending with this file extension. Specifying the extension may speed up the process or eliminate data reading issues. |

| FileNamePrefix | string | | If specified, the function only scans files starting with this prefix. Specifying the prefix may speed up the process. |

| Mode | string | | The schema inference strategy. One of: any, last, all. The function infers the data schema from the first file found, from the last written file, or from all files, respectively. The default value is last. |

| InferenceOptions | dynamic | | More inference options. Valid options: UseFirstRowAsHeader for delimited file formats. For example, 'InferenceOptions': {'UseFirstRowAsHeader': true}. |

Returns

The infer_storage_schema plugin returns a single result table with a single row and column that contains the CSL schema string.

Example

let options = dynamic({

'StorageContainers': [

h@'https://storageaccount.blob.core.windows.net/MobileEvents;secretKey'

],

'FileExtension': '.parquet',

'FileNamePrefix': 'part-',

'DataFormat': 'parquet'

});

evaluate infer_storage_schema(options)

Output

| CslSchema |

|---|

| app_id:string, user_id:long, event_time:datetime, country:string, city:string, device_type:string, device_vendor:string, ad_network:string, campaign:string, site_id:string, event_type:string, event_name:string, organic:string, days_from_install:int, revenue:real |
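If a client program consumes the CslSchema value rather than pasting it into a command, the string can be split into (name, type) pairs. This is a minimal sketch that assumes plain column names containing no commas or colons. Bracket-quoted names are not handled, and the function name is hypothetical.

```python
def parse_csl_schema(csl_schema):
    """Split a CSL schema string such as "a:string, b:long" into (name, type) pairs."""
    columns = []
    for part in csl_schema.split(","):
        # Each entry has the form name:type; surrounding whitespace is ignored.
        name, _, column_type = part.strip().partition(":")
        columns.append((name, column_type))
    return columns
```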

Use the returned schema in an external table definition:

.create external table MobileEvents(

app_id:string, user_id:long, event_time:datetime, country:string, city:string, device_type:string, device_vendor:string, ad_network:string, campaign:string, site_id:string, event_type:string, event_name:string, organic:string, days_from_install:int, revenue:real

)

kind=blob

partition by (dt:datetime = bin(event_time, 1d), app:string = app_id)

pathformat = ('app=' app '/dt=' datetime_pattern('yyyyMMdd', dt))

dataformat = parquet

(

h@'https://storageaccount.blob.core.windows.net/MobileEvents;secretKey'

)


4.3 - infer_storage_schema_with_suggestions plugin

The infer_storage_schema_with_suggestions plugin infers the schema of external data and returns a JSON object. For each column, the object provides the inferred type, a recommended type, and the recommended mapping transformation. The recommended type and mapping come from suggestion logic that determines the optimal type as follows:

- Identity columns: If the inferred type for a column is long and the column name ends with id, the suggested type is string, since it provides optimized indexing for identity columns where equality filters are common.

- Unix datetime columns: If the inferred type for a column is long and one of the unix-time to datetime mapping transformations produces a valid datetime value, the suggested type is datetime and the suggested ApplicableTransformationMapping mapping is the one that produced a valid datetime value.
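The two rules above can be sketched in Python. The transformation names match the mapping values that appear in the output below, such as DateTimeFromUnixMilliseconds, but what counts as a "valid datetime value" is not specified here. This sketch assumes a hypothetical plausibility window of 2000-01-01 to 2100-01-01, tries the transformations in order, and checks the identity rule first. It illustrates the documented rules and is not the plugin's actual logic.

```python
# Hypothetical plausibility window for "valid" datetimes, in Unix seconds (assumption).
MIN_EPOCH_S = 946_684_800    # 2000-01-01T00:00:00Z
MAX_EPOCH_S = 4_102_444_800  # 2100-01-01T00:00:00Z

# Unix-time mapping transformations and the units per second for each.
UNIX_TRANSFORMS = [
    ("DateTimeFromUnixSeconds", 1),
    ("DateTimeFromUnixMilliseconds", 1_000),
    ("DateTimeFromUnixMicroseconds", 1_000_000),
    ("DateTimeFromUnixNanoseconds", 1_000_000_000),
]

def suggest(name, inferred_type, sample_value):
    """Return (recommended_type, ApplicableTransformationMapping) for one column."""
    if inferred_type == "long" and name.lower().endswith("id"):
        return "string", "None"  # identity-column rule
    if inferred_type == "long":
        for transform, per_second in UNIX_TRANSFORMS:
            # Convert to seconds and check against the assumed window.
            if MIN_EPOCH_S <= sample_value / per_second < MAX_EPOCH_S:
                return "datetime", transform  # unix-datetime rule
    return inferred_type, "None"
```

Applied to the example input data below, this sketch gives the same recommendations as the example output: author_id becomes string, and time_millisec becomes datetime with DateTimeFromUnixMilliseconds.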

The plugin is invoked with the evaluate operator. To obtain the inferred schema without suggestions, for use with Create and alter Azure Storage external tables, use the infer_storage_schema plugin.

Authentication and authorization

In the properties of the request, you specify storage connection strings to access. Each storage connection string specifies the authorization method to use for access to the storage. Depending on the authorization method, the principal may need to be granted permissions on the external storage to perform the schema inference.

The following table lists the supported authentication methods and any required permissions by storage type.

| Authentication method | Azure Blob Storage / Data Lake Storage Gen2 | Data Lake Storage Gen1 |

|---|---|---|

| Impersonation | Storage Blob Data Reader | Reader |

| Shared Access (SAS) token | List + Read | This authentication method isn’t supported in Gen1. |

| Microsoft Entra access token | | |

| Storage account access key | | This authentication method isn’t supported in Gen1. |

Syntax

evaluate infer_storage_schema_with_suggestions( Options )

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| Options | dynamic | ✔️ | A property bag specifying the properties of the request. |

Supported properties of the request

| Name | Type | Required | Description |

|---|---|---|---|

| StorageContainers | dynamic | ✔️ | An array of storage connection strings that represent prefix URI for stored data artifacts. |

| DataFormat | string | ✔️ | One of the data formats supported for ingestion. |

| FileExtension | string | | If specified, the function only scans files ending with this file extension. Specifying the extension may speed up the process or eliminate data reading issues. |

| FileNamePrefix | string | | If specified, the function only scans files starting with this prefix. Specifying the prefix may speed up the process. |

| Mode | string | | The schema inference strategy. One of: any, last, all. The function infers the data schema from the first file found, from the last written file, or from all files, respectively. The default value is last. |

| InferenceOptions | dynamic | | More inference options. Valid options: UseFirstRowAsHeader for delimited file formats. For example, 'InferenceOptions': {'UseFirstRowAsHeader': true}. |

Returns

The infer_storage_schema_with_suggestions plugin returns a single result table with a single row and column that contains a JSON string.

Example

let options = dynamic({

'StorageContainers': [

h@'https://storageaccount.blob.core.windows.net/MobileEvents;secretKey'

],

'FileExtension': '.json',

'FileNamePrefix': 'js-',

'DataFormat': 'json'

});

evaluate infer_storage_schema_with_suggestions(options)

Example input data

{

"source": "DataExplorer",

"created_at": "2022-04-10 15:47:57",

"author_id": 739144091473215488,

"time_millisec":1547083647000

}

Output

{

"Columns": [

{

"OriginalColumn": {

"Name": "source",

"CslType": {

"type": "string",

"IsNumeric": false,

"IsSummable": false

}

},

"RecommendedColumn": {

"Name": "source",

"CslType": {

"type": "string",

"IsNumeric": false,

"IsSummable": false

}

},

"ApplicableTransformationMapping": "None"

},

{

"OriginalColumn": {

"Name": "created_at",

"CslType": {

"type": "datetime",

"IsNumeric": false,

"IsSummable": true

}

},

"RecommendedColumn": {

"Name": "created_at",

"CslType": {

"type": "datetime",

"IsNumeric": false,

"IsSummable": true

}

},

"ApplicableTransformationMapping": "None"

},

{

"OriginalColumn": {

"Name": "author_id",

"CslType": {

"type": "long",

"IsNumeric": true,

"IsSummable": true

}

},

"RecommendedColumn": {

"Name": "author_id",

"CslType": {

"type": "string",

"IsNumeric": false,

"IsSummable": false

}

},

"ApplicableTransformationMapping": "None"

},

{

"OriginalColumn": {

"Name": "time_millisec",

"CslType": {

"type": "long",

"IsNumeric": true,

"IsSummable": true

}

},

"RecommendedColumn": {

"Name": "time_millisec",

"CslType": {

"type": "datetime",

"IsNumeric": false,

"IsSummable": true

}

},

"ApplicableTransformationMapping": "DateTimeFromUnixMilliseconds"

}

]

}


4.4 - ipv4_lookup plugin

The ipv4_lookup plugin looks up an IPv4 value in a lookup table and returns rows with matched values. The plugin is invoked with the evaluate operator.

Syntax

T | evaluate ipv4_lookup( LookupTable , SourceIPv4Key , IPv4LookupKey [, ExtraKey1 [.. , ExtraKeyN [, return_unmatched ]]] )

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The tabular input whose column SourceIPv4Key is used for IPv4 matching. |

| LookupTable | string | ✔️ | Table or tabular expression with IPv4 lookup data, whose column IPv4LookupKey is used for IPv4 matching. IPv4 values can be masked using IP-prefix notation. |

| SourceIPv4Key | string | ✔️ | The column of T with the IPv4 string to be looked up in LookupTable. IPv4 values can be masked using IP-prefix notation. |

| IPv4LookupKey | string | ✔️ | The column of LookupTable with the IPv4 string that is matched against each SourceIPv4Key value. |

| ExtraKey1 .. ExtraKeyN | string | | Additional column references that are used for lookup matches. Similar to the join operation: records with equal values are considered matching. Column name references must exist in both the source table T and LookupTable. |

| return_unmatched | bool | | A boolean flag that defines whether the result includes all rows or only matching rows (default: false, only matching rows are returned). |

Returns

The ipv4_lookup plugin returns a result of join (lookup) based on IPv4 key. The schema of the table is the union of the source table and the lookup table, similar to the result of the lookup operator.

If the return_unmatched argument is set to true, the resulting table includes both matched and unmatched rows (filled with nulls).

If the return_unmatched argument is set to false, or omitted (the default value of false is used), the resulting table has as many records as matching results. This variant of lookup has better performance compared to return_unmatched=true execution.
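The matching rule can be sketched with Python's standard ipaddress module. This is a minimal model, not the plugin's implementation. It makes three assumptions: every lookup prefix that contains the source address yields an output row, the lookup table is scanned linearly, and source values are plain addresses rather than masked prefixes. The function and parameter names are hypothetical.

```python
import ipaddress

def ipv4_lookup(source_rows, lookup_rows, source_key, lookup_key, return_unmatched=False):
    """Prefix-based lookup over lists of dict rows (sketch)."""
    # Parse every lookup prefix once; strict=False tolerates host bits being set.
    networks = [(ipaddress.IPv4Network(r[lookup_key], strict=False), r) for r in lookup_rows]
    lookup_columns = list(lookup_rows[0]) if lookup_rows else []
    result = []
    for src in source_rows:
        address = ipaddress.IPv4Address(src[source_key])
        matches = [r for network, r in networks if address in network]
        for r in matches:
            result.append({**src, **r})  # union of source and lookup columns
        if not matches and return_unmatched:
            # Unmatched rows keep the source columns; lookup columns are null.
            result.append({**src, **dict.fromkeys(lookup_columns)})
    return result
```

With return_unmatched=False, unmatched sources are simply skipped, which corresponds to the "matching rows only" variant.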

Examples

IPv4 lookup - matching rows only

// IP lookup table: IP_Data

// Partial data from: https://raw.githubusercontent.com/datasets/geoip2-ipv4/master/data/geoip2-ipv4.csv

let IP_Data = datatable(network:string, continent_code:string ,continent_name:string, country_iso_code:string, country_name:string)

[

"111.68.128.0/17","AS","Asia","JP","Japan",

"5.8.0.0/19","EU","Europe","RU","Russia",

"223.255.254.0/24","AS","Asia","SG","Singapore",

"46.36.200.51/32","OC","Oceania","CK","Cook Islands",

"2.20.183.0/24","EU","Europe","GB","United Kingdom",

];

let IPs = datatable(ip:string)

[

'2.20.183.12', // United Kingdom

'5.8.1.2', // Russia

'192.165.12.17', // Unknown

];

IPs

| evaluate ipv4_lookup(IP_Data, ip, network)

Output

| ip | network | continent_code | continent_name | country_iso_code | country_name |

|---|---|---|---|---|---|

| 2.20.183.12 | 2.20.183.0/24 | EU | Europe | GB | United Kingdom |

| 5.8.1.2 | 5.8.0.0/19 | EU | Europe | RU | Russia |

IPv4 lookup - return both matching and nonmatching rows

// IP lookup table: IP_Data

// Partial data from:

// https://raw.githubusercontent.com/datasets/geoip2-ipv4/master/data/geoip2-ipv4.csv

let IP_Data = datatable(network:string,continent_code:string ,continent_name:string ,country_iso_code:string ,country_name:string )

[

"111.68.128.0/17","AS","Asia","JP","Japan",

"5.8.0.0/19","EU","Europe","RU","Russia",

"223.255.254.0/24","AS","Asia","SG","Singapore",

"46.36.200.51/32","OC","Oceania","CK","Cook Islands",

"2.20.183.0/24","EU","Europe","GB","United Kingdom",

];

let IPs = datatable(ip:string)

[

'2.20.183.12', // United Kingdom

'5.8.1.2', // Russia

'192.165.12.17', // Unknown

];

IPs

| evaluate ipv4_lookup(IP_Data, ip, network, return_unmatched = true)

Output

| ip | network | continent_code | continent_name | country_iso_code | country_name |

|---|---|---|---|---|---|

| 2.20.183.12 | 2.20.183.0/24 | EU | Europe | GB | United Kingdom |

| 5.8.1.2 | 5.8.0.0/19 | EU | Europe | RU | Russia |

| 192.165.12.17 | | | | | |

IPv4 lookup - using source in external_data()

let IP_Data = external_data(network:string,geoname_id:long,continent_code:string,continent_name:string ,country_iso_code:string,country_name:string,is_anonymous_proxy:bool,is_satellite_provider:bool)

['https://raw.githubusercontent.com/datasets/geoip2-ipv4/master/data/geoip2-ipv4.csv'];

let IPs = datatable(ip:string)

[

'2.20.183.12', // United Kingdom

'5.8.1.2', // Russia

'192.165.12.17', // Sweden

];

IPs

| evaluate ipv4_lookup(IP_Data, ip, network, return_unmatched = true)

Output

| ip | network | geoname_id | continent_code | continent_name | country_iso_code | country_name | is_anonymous_proxy | is_satellite_provider |

|---|---|---|---|---|---|---|---|---|

| 2.20.183.12 | 2.20.183.0/24 | 2635167 | EU | Europe | GB | United Kingdom | 0 | 0 |

| 5.8.1.2 | 5.8.0.0/19 | 2017370 | EU | Europe | RU | Russia | 0 | 0 |

| 192.165.12.17 | 192.165.8.0/21 | 2661886 | EU | Europe | SE | Sweden | 0 | 0 |

IPv4 lookup - using extra columns for matching

let IP_Data = external_data(network:string,geoname_id:long,continent_code:string,continent_name:string ,country_iso_code:string,country_name:string,is_anonymous_proxy:bool,is_satellite_provider:bool)

['https://raw.githubusercontent.com/datasets/geoip2-ipv4/master/data/geoip2-ipv4.csv'];

let IPs = datatable(ip:string, continent_name:string, country_iso_code:string)

[

'2.20.183.12', 'Europe', 'GB', // United Kingdom

'5.8.1.2', 'Europe', 'RU', // Russia

'192.165.12.17', 'Europe', '', // Sweden is 'SE' - so it won't be matched

];

IPs

| evaluate ipv4_lookup(IP_Data, ip, network, continent_name, country_iso_code)

Output

| ip | continent_name | country_iso_code | network | geoname_id | continent_code | country_name | is_anonymous_proxy | is_satellite_provider |

|---|---|---|---|---|---|---|---|---|

| 2.20.183.12 | Europe | GB | 2.20.183.0/24 | 2635167 | EU | United Kingdom | 0 | 0 |

| 5.8.1.2 | Europe | RU | 5.8.0.0/19 | 2017370 | EU | Russia | 0 | 0 |

Related content

- Overview of IPv4/IPv6 functions

- Overview of IPv4 text match functions

4.5 - ipv6_lookup plugin

The ipv6_lookup plugin looks up an IPv6 value in a lookup table and returns rows with matched values. The plugin is invoked with the evaluate operator.

Syntax

T | evaluate ipv6_lookup( LookupTable , SourceIPv6Key , IPv6LookupKey [, return_unmatched ] )

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The tabular input whose column SourceIPv6Key is used for IPv6 matching. |

| LookupTable | string | ✔️ | Table or tabular expression with IPv6 lookup data, whose column IPv6LookupKey is used for IPv6 matching. IPv6 values can be masked using IP-prefix notation. |

| SourceIPv6Key | string | ✔️ | The column of T with the IPv6 string to be looked up in LookupTable. IPv6 values can be masked using IP-prefix notation. |

| IPv6LookupKey | string | ✔️ | The column of LookupTable with the IPv6 string that is matched against each SourceIPv6Key value. |

| return_unmatched | bool | | A boolean flag that defines whether the result includes all rows or only matching rows (default: false, only matching rows are returned). |

Returns

The ipv6_lookup plugin returns a result of join (lookup) based on IPv6 key. The schema of the table is the union of the source table and the lookup table, similar to the result of the lookup operator.

If the return_unmatched argument is set to true, the resulting table includes both matched and unmatched rows (filled with nulls).

If the return_unmatched argument is set to false, or omitted (the default value of false is used), the resulting table has as many records as matching results. This variant of lookup has better performance compared to return_unmatched=true execution.
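IPv6 addresses have several textual forms, for example zero-padded groups or "::" compression. A prefix check must therefore compare parsed addresses, not strings. The Python sketch below uses the standard ipaddress module to show the containment test on values from the examples below. It illustrates prefix matching and is not the plugin's implementation. The helper name is hypothetical.

```python
import ipaddress

def ipv6_matches(address, prefix):
    """True if the IPv6 address falls inside the prefix, e.g. "2001:0db8:85a3::/48"."""
    # Parsing normalizes notation, so "2001:0db8:85a3:0000:..." and the
    # compressed "2001:db8:85a3::..." refer to the same address.
    return ipaddress.IPv6Address(address) in ipaddress.IPv6Network(prefix, strict=False)
```

For example, both the padded and the compressed spelling of the United States address match the 2001:0db8:85a3::/48 row, while the a5e:… addresses match none of the lookup prefixes.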

Examples

IPv6 lookup - matching rows only

// IP lookup table: IP_Data (the data is generated by ChatGPT).

let IP_Data = datatable(network:string, continent_code:string ,continent_name:string, country_iso_code:string, country_name:string)

[

"2001:0db8:85a3::/48","NA","North America","US","United States",

"2404:6800:4001::/48","AS","Asia","JP","Japan",

"2a00:1450:4001::/48","EU","Europe","DE","Germany",

"2800:3f0:4001::/48","SA","South America","BR","Brazil",

"2c0f:fb50:4001::/48","AF","Africa","ZA","South Africa",

"2607:f8b0:4001::/48","NA","North America","CA","Canada",

"2a02:26f0:4001::/48","EU","Europe","FR","France",

"2400:cb00:4001::/48","AS","Asia","IN","India",

"2801:0db8:85a3::/48","SA","South America","AR","Argentina",

"2a03:2880:4001::/48","EU","Europe","GB","United Kingdom"

];

let IPs = datatable(ip:string)

[

"2001:0db8:85a3:0000:0000:8a2e:0370:7334", // United States

"2404:6800:4001:0001:0000:8a2e:0370:7334", // Japan

"2a02:26f0:4001:0006:0000:8a2e:0370:7334", // France

"a5e:f127:8a9d:146d:e102:b5d3:c755:abcd", // N/A

"a5e:f127:8a9d:146d:e102:b5d3:c755:abce" // N/A

];

IPs

| evaluate ipv6_lookup(IP_Data, ip, network)

Output

| network | continent_code | continent_name | country_iso_code | country_name | ip |

|---|---|---|---|---|---|

| 2001:0db8:85a3::/48 | NA | North America | US | United States | 2001:0db8:85a3:0000:0000:8a2e:0370:7334 |

| 2404:6800:4001::/48 | AS | Asia | JP | Japan | 2404:6800:4001:0001:0000:8a2e:0370:7334 |

| 2a02:26f0:4001::/48 | EU | Europe | FR | France | 2a02:26f0:4001:0006:0000:8a2e:0370:7334 |

IPv6 lookup - return both matching and nonmatching rows

// IP lookup table: IP_Data (the data is generated by ChatGPT).

let IP_Data = datatable(network:string, continent_code:string ,continent_name:string, country_iso_code:string, country_name:string)

[

"2001:0db8:85a3::/48","NA","North America","US","United States",

"2404:6800:4001::/48","AS","Asia","JP","Japan",

"2a00:1450:4001::/48","EU","Europe","DE","Germany",

"2800:3f0:4001::/48","SA","South America","BR","Brazil",

"2c0f:fb50:4001::/48","AF","Africa","ZA","South Africa",

"2607:f8b0:4001::/48","NA","North America","CA","Canada",

"2a02:26f0:4001::/48","EU","Europe","FR","France",

"2400:cb00:4001::/48","AS","Asia","IN","India",

"2801:0db8:85a3::/48","SA","South America","AR","Argentina",

"2a03:2880:4001::/48","EU","Europe","GB","United Kingdom"

];

let IPs = datatable(ip:string)

[

"2001:0db8:85a3:0000:0000:8a2e:0370:7334", // United States

"2404:6800:4001:0001:0000:8a2e:0370:7334", // Japan

"2a02:26f0:4001:0006:0000:8a2e:0370:7334", // France

"a5e:f127:8a9d:146d:e102:b5d3:c755:abcd", // N/A

"a5e:f127:8a9d:146d:e102:b5d3:c755:abce" // N/A

];

IPs

| evaluate ipv6_lookup(IP_Data, ip, network, true)

Output

| network | continent_code | continent_name | country_iso_code | country_name | ip |

|---|---|---|---|---|---|

| 2001:0db8:85a3::/48 | NA | North America | US | United States | 2001:0db8:85a3:0000:0000:8a2e:0370:7334 |

| 2404:6800:4001::/48 | AS | Asia | JP | Japan | 2404:6800:4001:0001:0000:8a2e:0370:7334 |

| 2a02:26f0:4001::/48 | EU | Europe | FR | France | 2a02:26f0:4001:0006:0000:8a2e:0370:7334 |

| | | | | | a5e:f127:8a9d:146d:e102:b5d3:c755:abcd |

| | | | | | a5e:f127:8a9d:146d:e102:b5d3:c755:abce |

Related content

- Overview of IPv4/IPv6 functions

4.6 - preview plugin

Returns a table with up to the specified number of rows from the input record set, and the total number of records in the input record set.

Syntax

T | evaluate preview(NumberOfRows)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The table to preview. |

| NumberOfRows | int | ✔️ | The number of rows to preview from the table. |

Returns

The preview plugin returns two result tables:

- A table with up to the specified number of rows. For example, T | evaluate preview(50) returns the same rows as running T | take 50.

- A table with a single row and column, holding the number of records in the input record set. For example, T | evaluate preview(50) returns the same count as running T | count.
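The two results can be modeled in Python as a single pass that keeps the first N records and keeps counting after that. This is a sketch of the take/count equivalence described above, not the plugin's implementation. Note that in Kusto, take returns arbitrary rows rather than a guaranteed "first" N. The function name is hypothetical.

```python
from itertools import islice

def preview(records, number_of_rows):
    """Return (up to number_of_rows records, total record count) from one pass."""
    it = iter(records)
    head = list(islice(it, number_of_rows))  # analogue of T | take N
    total = len(head) + sum(1 for _ in it)   # analogue of T | count
    return head, total
```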

Example

StormEvents | evaluate preview(5)

Table1

The following output table only includes the first 6 columns. To see the full result, run the query.

| StartTime | EndTime | EpisodeId | EventId | State | EventType | … |

|---|---|---|---|---|---|---|

| 2007-12-30T16:00:00Z | 2007-12-30T16:05:00Z | 11749 | 64588 | GEORGIA | Thunderstorm Wind | … |

| 2007-12-20T07:50:00Z | 2007-12-20T07:53:00Z | 12554 | 68796 | MISSISSIPPI | Thunderstorm Wind | … |

| 2007-09-29T08:11:00Z | 2007-09-29T08:11:00Z | 11091 | 61032 | ATLANTIC SOUTH | Waterspout | … |

| 2007-09-20T21:57:00Z | 2007-09-20T22:05:00Z | 11078 | 60913 | FLORIDA | Tornado | … |

| 2007-09-18T20:00:00Z | 2007-09-19T18:00:00Z | 11074 | 60904 | FLORIDA | Heavy Rain | … |

Table2

| Count |

|---|

| 59066 |

4.7 - schema_merge plugin

Merges tabular schema definitions into a unified schema.

Schema definitions are expected to be in the format produced by the getschema operator.

The schema merge operation joins columns in input schemas and tries to reduce data types to common ones. If data types can’t be reduced, an error is displayed on the problematic column.

The plugin is invoked with the evaluate operator.

Syntax

T | evaluate schema_merge(PreserveOrder)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| PreserveOrder | bool | | When set to true, directs the plugin to validate the column order as defined by the first tabular schema that is kept. If the same column appears in several schemas, its column ordinal must match the ordinal it had in the first schema it appeared in. The default value is true. |
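The ordinal rule described for PreserveOrder can be sketched in Python: remember the ordinal at which each column first appears, then flag any later schema that places it elsewhere. This sketch covers only that check. It does not model type reduction or how the merged output is ordered. Schemas are represented as plain lists of column names, and the function name is hypothetical.

```python
def out_of_order_columns(schemas):
    """Return names of columns whose ordinal differs from the ordinal they had
    in the first schema that contained them (the PreserveOrder=true check)."""
    first_ordinal = {}
    violations = set()
    for schema in schemas:
        for ordinal, name in enumerate(schema):
            expected = first_ordinal.setdefault(name, ordinal)
            if ordinal != expected:
                violations.add(name)
    return violations
```

For the examples below, appending Referrer at the end produces no violations, while inserting Referrer before HttpStatus flags HttpStatus. That is the column reported as out of order when PreserveOrder is true.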

Returns

The schema_merge plugin returns output similar to what the getschema operator returns.

Examples

Merge with a schema that has a new column appended.

let schema1 = datatable(Uri:string, HttpStatus:int)[] | getschema;

let schema2 = datatable(Uri:string, HttpStatus:int, Referrer:string)[] | getschema;

union schema1, schema2 | evaluate schema_merge()

Output

| ColumnName | ColumnOrdinal | DataType | ColumnType |

|---|---|---|---|

| Uri | 0 | System.String | string |

| HttpStatus | 1 | System.Int32 | int |

| Referrer | 2 | System.String | string |

Merge with a schema that has different column ordering (HttpStatus ordinal changes from 1 to 2 in the new variant).

let schema1 = datatable(Uri:string, HttpStatus:int)[] | getschema;

let schema2 = datatable(Uri:string, Referrer:string, HttpStatus:int)[] | getschema;

union schema1, schema2 | evaluate schema_merge()

Output

| ColumnName | ColumnOrdinal | DataType | ColumnType |

|---|---|---|---|

| Uri | 0 | System.String | string |

| Referrer | 1 | System.String | string |

| HttpStatus | -1 | ERROR(unknown CSL type:ERROR(columns are out of order)) | ERROR(columns are out of order) |

Merge with a schema that has different column ordering, but with PreserveOrder set to false.

let schema1 = datatable(Uri:string, HttpStatus:int)[] | getschema;

let schema2 = datatable(Uri:string, Referrer:string, HttpStatus:int)[] | getschema;

union schema1, schema2 | evaluate schema_merge(PreserveOrder = false)

Output

| ColumnName | ColumnOrdinal | DataType | ColumnType |

|---|---|---|---|

| Uri | 0 | System.String | string |

| Referrer | 1 | System.String | string |

| HttpStatus | 2 | System.Int32 | int |

5 - Language plugins

5.1 - Python plugin

5.2 - Python plugin packages

This article lists the available Python packages in the Python plugin. For more information, see Python plugin.
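To confirm which version of a listed package a script actually sees at run time, you can query the installed distribution metadata with the standard library's `importlib.metadata`. The following is a minimal sketch that uses only the standard library. The helper name `installed_versions` is hypothetical, and the names passed in are the distribution names from the Package column.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, Iterable, Optional


def installed_versions(names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if absent."""
    found: Dict[str, Optional[str]] = {}
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            # The distribution isn't installed in this Python image.
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions(["numpy", "pandas", "scikit-learn"]).items():
        print(f"{name}: {ver}")
```

Inside the Python plugin, the script's output must be assigned to `result` as a pandas DataFrame. Assuming pandas is available as `pd`, you could wrap the dictionary with `pd.DataFrame(list(found.items()), columns=["Package", "Version"])`.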

3.11.7 (preview)

Python engine 3.11.7 + common data science and ML packages

| Package | Version |

|---|---|

| annotated-types | 0.6.0 |

| anytree | 2.12.1 |

| arrow | 1.3.0 |

| attrs | 23.2.0 |

| blinker | 1.7.0 |

| blis | 0.7.11 |

| Bottleneck | 1.3.8 |

| Brotli | 1.1.0 |

| brotlipy | 0.7.0 |

| catalogue | 2.0.10 |

| certifi | 2024.2.2 |

| cffi | 1.16.0 |

| chardet | 5.2.0 |

| charset-normalizer | 3.3.2 |

| click | 8.1.7 |

| cloudpathlib | 0.16.0 |

| cloudpickle | 3.0.0 |

| colorama | 0.4.6 |

| coloredlogs | 15.0.1 |

| confection | 0.1.4 |

| contourpy | 1.2.1 |

| cycler | 0.12.1 |

| cymem | 2.0.8 |

| Cython | 3.0.10 |

| daal | 2024.3.0 |

| daal4py | 2024.3.0 |

| dask | 2024.4.2 |

| diff-match-patch | 20230430 |

| dill | 0.3.8 |

| distributed | 2024.4.2 |

| filelock | 3.13.4 |

| flashtext | 2.7 |

| Flask | 3.0.3 |

| Flask-Compress | 1.15 |

| flatbuffers | 24.3.25 |

| fonttools | 4.51.0 |

| fsspec | 2024.3.1 |

| gensim | 4.3.2 |

| humanfriendly | 10.0 |

| idna | 3.7 |

| importlib_metadata | 7.1.0 |

| intervaltree | 3.1.0 |

| itsdangerous | 2.2.0 |

| jellyfish | 1.0.3 |

| Jinja2 | 3.1.3 |

| jmespath | 1.0.1 |

| joblib | 1.4.0 |

| json5 | 0.9.25 |

| jsonschema | 4.21.1 |

| jsonschema-specifications | 2023.12.1 |

| kiwisolver | 1.4.5 |

| langcodes | 3.4.0 |

| language_data | 1.2.0 |

| locket | 1.0.0 |

| lxml | 5.2.1 |

| marisa-trie | 1.1.0 |

| MarkupSafe | 2.1.5 |

| mlxtend | 0.23.1 |

| mpmath | 1.3.0 |

| msgpack | 1.0.8 |

| murmurhash | 1.0.10 |

| networkx | 3.3 |

| nltk | 3.8.1 |

| numpy | 1.26.4 |

| onnxruntime | 1.17.3 |

| packaging | 24.0 |

| pandas | 2.2.2 |

| partd | 1.4.1 |

| patsy | 0.5.6 |

| pillow | 10.3.0 |

| platformdirs | 4.2.1 |

| plotly | 5.21.0 |

| preshed | 3.0.9 |

| protobuf | 5.26.1 |

| psutil | 5.9.8 |

| pycparser | 2.22 |

| pydantic | 2.7.1 |

| pydantic_core | 2.18.2 |

| pyfpgrowth | 1.0 |

| pyparsing | 3.1.2 |

| pyreadline3 | 3.4.1 |

| python-dateutil | 2.9.0.post0 |

| pytz | 2024.1 |

| PyWavelets | 1.6.0 |

| PyYAML | 6.0.1 |

| queuelib | 1.6.2 |

| referencing | 0.35.0 |

| regex | 2024.4.16 |

| requests | 2.31.0 |

| requests-file | 2.0.0 |

| rpds-py | 0.18.0 |

| scikit-learn | 1.4.2 |

| scipy | 1.13.0 |

| sip | 6.8.3 |

| six | 1.16.0 |

| smart-open | 6.4.0 |

| snowballstemmer | 2.2.0 |

| sortedcollections | 2.1.0 |

| sortedcontainers | 2.4.0 |

| spacy | 3.7.4 |

| spacy-legacy | 3.0.12 |

| spacy-loggers | 1.0.5 |

| srsly | 2.4.8 |

| statsmodels | 0.14.2 |

| sympy | 1.12 |

| tbb | 2021.12.0 |

| tblib | 3.0.0 |

| tenacity | 8.2.3 |

| textdistance | 4.6.2 |

| thinc | 8.2.3 |

| threadpoolctl | 3.4.0 |

| three-merge | 0.1.1 |

| tldextract | 5.1.2 |

| toolz | 0.12.1 |

| tornado | 6.4 |

| tqdm | 4.66.2 |

| typer | 0.9.4 |

| types-python-dateutil | 2.9.0.20240316 |

| typing_extensions | 4.11.0 |

| tzdata | 2024.1 |

| ujson | 5.9.0 |

| Unidecode | 1.3.8 |

| urllib3 | 2.2.1 |

| wasabi | 1.1.2 |

| weasel | 0.3.4 |

| Werkzeug | 3.0.2 |

| xarray | 2024.3.0 |

| zict | 3.0.0 |

| zipp | 3.18.1 |

| zstandard | 0.22.0 |

3.11.7 DL (preview)

Python engine 3.11.7 + common data science and ML packages + deep learning packages (tensorflow & torch)
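Because this image adds the deep learning packages tensorflow and torch, a script that depends on them can check for them before importing. The following is a minimal sketch using the standard library's `importlib.util.find_spec`. The helper name `has_modules` is hypothetical, and `torch` and `tensorflow` are assumed to be the top-level module names of the packages listed below.

```python
import importlib.util
from typing import Iterable


def has_modules(modules: Iterable[str]) -> bool:
    """Return True only if every named top-level module can be found.

    find_spec() locates a top-level module without importing it, so the
    check stays cheap even for large packages such as torch or tensorflow.
    """
    return all(importlib.util.find_spec(name) is not None for name in modules)


if __name__ == "__main__":
    if has_modules(["torch", "tensorflow"]):
        print("Deep learning packages are available.")
    else:
        print("Deep learning packages are missing; use the DL image.")
```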

| Package | Version |

|---|---|

| absl-py | 2.1.0 |

| alembic | 1.13.1 |

| aniso8601 | 9.0.1 |

| annotated-types | 0.6.0 |

| anytree | 2.12.1 |

| arch | 7.0.0 |

| arrow | 1.3.0 |

| astunparse | 1.6.3 |

| attrs | 23.2.0 |

| blinker | 1.7.0 |

| blis | 0.7.11 |

| Bottleneck | 1.3.8 |

| Brotli | 1.1.0 |

| brotlipy | 0.7.0 |

| cachetools | 5.3.3 |

| catalogue | 2.0.10 |

| certifi | 2024.2.2 |

| cffi | 1.16.0 |

| chardet | 5.2.0 |

| charset-normalizer | 3.3.2 |

| click | 8.1.7 |

| cloudpathlib | 0.16.0 |

| cloudpickle | 3.0.0 |

| colorama | 0.4.6 |

| coloredlogs | 15.0.1 |

| confection | 0.1.4 |

| contourpy | 1.2.1 |

| cycler | 0.12.1 |

| cymem | 2.0.8 |

| Cython | 3.0.10 |

| daal | 2024.3.0 |

| daal4py | 2024.3.0 |

| dask | 2024.4.2 |

| Deprecated | 1.2.14 |

| diff-match-patch | 20230430 |

| dill | 0.3.8 |

| distributed | 2024.4.2 |

| docker | 7.1.0 |

| entrypoints | 0.4 |

| filelock | 3.13.4 |

| flashtext | 2.7 |

| Flask | 3.0.3 |

| Flask-Compress | 1.15 |

| flatbuffers | 24.3.25 |

| fonttools | 4.51.0 |

| fsspec | 2024.3.1 |

| gast | 0.5.4 |

| gensim | 4.3.2 |

| gitdb | 4.0.11 |

| GitPython | 3.1.43 |

| google-pasta | 0.2.0 |

| graphene | 3.3 |

| graphql-core | 3.2.3 |

| graphql-relay | 3.2.0 |

| greenlet | 3.0.3 |

| grpcio | 1.64.0 |

| h5py | 3.11.0 |

| humanfriendly | 10.0 |

| idna | 3.7 |

| importlib-metadata | 7.0.0 |

| iniconfig | 2.0.0 |

| intervaltree | 3.1.0 |

| itsdangerous | 2.2.0 |

| jellyfish | 1.0.3 |

| Jinja2 | 3.1.3 |

| jmespath | 1.0.1 |

| joblib | 1.4.0 |

| json5 | 0.9.25 |

| jsonschema | 4.21.1 |

| jsonschema-specifications | 2023.12.1 |

| keras | 3.3.3 |

| kiwisolver | 1.4.5 |

| langcodes | 3.4.0 |

| language_data | 1.2.0 |

| libclang | 18.1.1 |

| locket | 1.0.0 |

| lxml | 5.2.1 |

| Mako | 1.3.5 |

| marisa-trie | 1.1.0 |

| Markdown | 3.6 |

| markdown-it-py | 3.0.0 |

| MarkupSafe | 2.1.5 |

| mdurl | 0.1.2 |

| ml-dtypes | 0.3.2 |

| mlflow | 2.13.0 |

| mlxtend | 0.23.1 |

| mpmath | 1.3.0 |

| msgpack | 1.0.8 |

| murmurhash | 1.0.10 |

| namex | 0.0.8 |

| networkx | 3.3 |

| nltk | 3.8.1 |

| numpy | 1.26.4 |

| onnxruntime | 1.17.3 |

| opentelemetry-api | 1.24.0 |

| opentelemetry-sdk | 1.24.0 |

| opentelemetry-semantic-conventions | 0.45b0 |

| opt-einsum | 3.3.0 |

| optree | 0.11.0 |

| packaging | 24.0 |

| pandas | 2.2.2 |

| partd | 1.4.1 |

| patsy | 0.5.6 |

| pillow | 10.3.0 |

| platformdirs | 4.2.1 |

| plotly | 5.21.0 |

| pluggy | 1.5.0 |

| preshed | 3.0.9 |

| protobuf | 4.25.3 |

| psutil | 5.9.8 |

| pyarrow | 15.0.2 |

| pycparser | 2.22 |

| pydantic | 2.7.1 |

| pydantic_core | 2.18.2 |

| pyfpgrowth | 1.0 |

| Pygments | 2.18.0 |

| pyparsing | 3.1.2 |

| pyreadline3 | 3.4.1 |

| pytest | 8.2.1 |

| python-dateutil | 2.9.0.post0 |

| pytz | 2024.1 |

| PyWavelets | 1.6.0 |

| pywin32 | 306 |

| PyYAML | 6.0.1 |

| querystring-parser | 1.2.4 |

| queuelib | 1.6.2 |

| referencing | 0.35.0 |

| regex | 2024.4.16 |

| requests | 2.31.0 |

| requests-file | 2.0.0 |

| rich | 13.7.1 |

| rpds-py | 0.18.0 |

| rstl | 0.1.3 |

| scikit-learn | 1.4.2 |

| scipy | 1.13.0 |

| seasonal | 0.3.1 |

| sip | 6.8.3 |

| six | 1.16.0 |

| smart-open | 6.4.0 |

| smmap | 5.0.1 |

| snowballstemmer | 2.2.0 |

| sortedcollections | 2.1.0 |

| sortedcontainers | 2.4.0 |

| spacy | 3.7.4 |

| spacy-legacy | 3.0.12 |

| spacy-loggers | 1.0.5 |

| SQLAlchemy | 2.0.30 |

| sqlparse | 0.5.0 |

| srsly | 2.4.8 |

| statsmodels | 0.14.2 |

| sympy | 1.12 |

| tbb | 2021.12.0 |

| tblib | 3.0.0 |

| tenacity | 8.2.3 |

| tensorboard | 2.16.2 |

| tensorboard-data-server | 0.7.2 |

| tensorflow | 2.16.1 |

| tensorflow-intel | 2.16.1 |

| tensorflow-io-gcs-filesystem | 0.31.0 |

| termcolor | 2.4.0 |

| textdistance | 4.6.2 |

| thinc | 8.2.3 |

| threadpoolctl | 3.4.0 |

| three-merge | 0.1.1 |

| time-series-anomaly-detector | 0.2.7 |

| tldextract | 5.1.2 |

| toolz | 0.12.1 |

| torch | 2.2.2 |

| torchaudio | 2.2.2 |

| torchvision | 0.17.2 |

| tornado | 6.4 |

| tqdm | 4.66.2 |

| typer | 0.9.4 |

| types-python-dateutil | 2.9.0.20240316 |

| typing_extensions | 4.11.0 |

| tzdata | 2024.1 |

| ujson | 5.9.0 |

| Unidecode | 1.3.8 |

| urllib3 | 2.2.1 |

| waitress | 3.0.0 |

| wasabi | 1.1.2 |

| weasel | 0.3.4 |

| Werkzeug | 3.0.2 |

| wrapt | 1.16.0 |

| xarray | 2024.3.0 |

| zict | 3.0.0 |

| zipp | 3.18.1 |

| zstandard | 0.22.0 |

3.10.8

Python engine 3.10.8 + common data science and ML packages

| Package | Version |

|---|---|

| alembic | 1.11.1 |