This is the multi-page printable view of this section. Click here to print.

User and sequence analytics plugins

- 1: active_users_count plugin

- 2: activity_counts_metrics plugin

- 3: activity_engagement plugin

- 4: activity_metrics plugin

- 5: funnel_sequence plugin

- 6: funnel_sequence_completion plugin

- 7: new_activity_metrics plugin

- 8: rolling_percentile plugin

- 9: rows_near plugin

- 10: sequence_detect plugin

- 11: session_count plugin

- 12: sliding_window_counts plugin

- 13: User Analytics

1 - active_users_count plugin

Calculates distinct count of values, where each value has appeared in at least a minimum number of periods in a lookback period.

Useful for calculating distinct counts of “fans” only, while not including appearances of “non-fans”. A user is counted as a “fan” only if it was active during the lookback period. The lookback period is only used to determine whether a user is considered active (“fan”) or not. The aggregation itself doesn’t include users from the lookback window. In comparison, the sliding_window_counts aggregation is performed over a sliding window of the lookback period.

Syntax

T | evaluate active_users_count(IdColumn, TimelineColumn, Start, End, LookbackWindow, Period, ActivePeriodsCount, Bin , [dim1, dim2, …])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The tabular input used to count active users. |

| IdColumn | string | ✔️ | The name of the column with ID values that represent user activity. |

| TimelineColumn | string | ✔️ | The name of the column that represents timeline. |

| Start | datetime | ✔️ | The analysis start period. |

| End | datetime | ✔️ | The analysis end period. |

| LookbackWindow | timespan | ✔️ | The time window defining a period where user appearance is checked. The lookback period starts at ([current appearance] - [lookback window]) and ends on ([current appearance]). |

| Period | timespan | ✔️ | A constant to count as single appearance (a user will be counted as active if it appears in at least distinct ActivePeriodsCount of this timespan. |

| ActivePeriodsCount | decimal | ✔️ | The minimal number of distinct active periods to decide if user is active. Active users are those users who appeared in at least (equal or greater than) active periods count. |

| Bin | decimal, datetime, or timespan | ✔️ | A constant value of the analysis step period. May also be a string of week, month, or year. All periods will be the corresponding startofweek, startofmonth, orstartofyear functions. |

| dim1, dim2, … | dynamic | An array of the dimensions columns that slice the activity metrics calculation. |

Returns

Returns a table that has distinct count values for IDs that have appeared in ActivePeriodCounts in the following periods: the lookback period, each timeline period, and each existing dimensions combination.

Output table schema is:

| TimelineColumn | dim1 | .. | dim_n | dcount_values |

|---|---|---|---|---|

| type: as of TimelineColumn | .. | .. | .. | long |

Examples

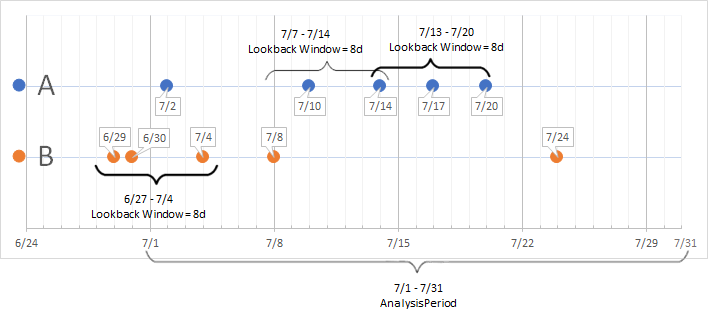

Calculate weekly number of distinct users that appeared in at least three different days over a period of prior eight days. Period of analysis: July 2018.

let Start = datetime(2018-07-01);

let End = datetime(2018-07-31);

let LookbackWindow = 8d;

let Period = 1d;

let ActivePeriods = 3;

let Bin = 7d;

let T = datatable(User:string, Timestamp:datetime)

[

"B", datetime(2018-06-29),

"B", datetime(2018-06-30),

"A", datetime(2018-07-02),

"B", datetime(2018-07-04),

"B", datetime(2018-07-08),

"A", datetime(2018-07-10),

"A", datetime(2018-07-14),

"A", datetime(2018-07-17),

"A", datetime(2018-07-20),

"B", datetime(2018-07-24)

];

T | evaluate active_users_count(User, Timestamp, Start, End, LookbackWindow, Period, ActivePeriods, Bin)

Output

| Timestamp | dcount |

|---|---|

| 2018-07-01 00:00:00.0000000 | 1 |

| 2018-07-15 00:00:00.0000000 | 1 |

A user is considered active if it fulfills both of the following criteria:

- The user was seen in at least three distinct days (Period = 1d, ActivePeriods=3).

- The user was seen in a lookback window of 8d before and including their current appearance.

In the illustration below, the only appearances that are active by this criteria are the following instances: User A on 7/20 and User B on 7/4 (see plugin results above). The appearances of User B are included for the lookback window on 7/4, but not for the Start-End time range of 6/29-30.

2 - activity_counts_metrics plugin

Calculates useful activity metrics for each time window compared/aggregated to all previous time windows. Metrics include: total count values, distinct count values, distinct count of new values, and aggregated distinct count. Compare this plugin to activity_metrics plugin, in which every time window is compared to its previous time window only.

Syntax

T | evaluate activity_counts_metrics(IdColumn, TimelineColumn, Start, End, Step [, Dimensions])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The tabular input used to count activities. |

| IdColumn | string | ✔️ | The name of the column with ID values that represent user activity. |

| TimelineColumn | string | ✔️ | The name of the column that represents the timeline. |

| Start | datetime | ✔️ | The analysis start period. |

| End | datetime | ✔️ | The analysis end period. |

| Step | decimal, datetime, or timespan | ✔️ | The analysis window period. The value may also be a string of week, month, or year, in which case all periods would be startofweek, startofmonth, or startofyear. |

| Dimensions | string | Zero or more comma-separated dimensions columns that slice the activity metrics calculation. |

Returns

Returns a table that has the total count values, distinct count values, distinct count of new values, and aggregated distinct count for each time window. If Dimensions are provided, then there’s another column for each dimension in the output table.

The following table describes the output table schema.

| Column name | Type | Description |

|---|---|---|

Timestamp | Same as the provided TimelineColumn argument | The time window start time. |

count | long | The total records count in the time window and dim(s) |

dcount | long | The distinct ID values count in the time window and dim(s) |

new_dcount | long | The distinct ID values in the time window and dim(s) compared to all previous time windows. |

aggregated_dcount | long | The total aggregated distinct ID values of dim(s) from first-time window to current (inclusive). |

Examples

The next query calculates daily activity counts for the provided input table.

let start=datetime(2017-08-01);

let end=datetime(2017-08-04);

let window=1d;

let T = datatable(UserId:string, Timestamp:datetime)

[

'A', datetime(2017-08-01),

'D', datetime(2017-08-01),

'J', datetime(2017-08-01),

'B', datetime(2017-08-01),

'C', datetime(2017-08-02),

'T', datetime(2017-08-02),

'J', datetime(2017-08-02),

'H', datetime(2017-08-03),

'T', datetime(2017-08-03),

'T', datetime(2017-08-03),

'J', datetime(2017-08-03),

'B', datetime(2017-08-03),

'S', datetime(2017-08-03),

'S', datetime(2017-08-04),

];

T

| evaluate activity_counts_metrics(UserId, Timestamp, start, end, window)

Output

Timestamp | count | dcount | new_dcount | aggregated_dcount |

|---|---|---|---|---|

| 2017-08-01 00:00:00.0000000 | 4 | 4 | 4 | 4 |

| 2017-08-02 00:00:00.0000000 | 3 | 3 | 2 | 6 |

| 2017-08-03 00:00:00.0000000 | 6 | 5 | 2 | 8 |

| 2017-08-04 00:00:00.0000000 | 1 | 1 | 0 | 8 |

3 - activity_engagement plugin

Calculates activity engagement ratio based on ID column over a sliding timeline window.

The activity_engagement plugin can be used for calculating DAU/WAU/MAU (daily/weekly/monthly activities).

Syntax

T | evaluate activity_engagement(IdColumn, TimelineColumn, [Start, End,] InnerActivityWindow, OuterActivityWindow [, dim1, dim2, …])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The tabular input used to calculate engagement. |

| IdCoumn | string | ✔️ | The name of the column with ID values that represent user activity. |

| TimelineColumn | string | ✔️ | The name of the column that represents timeline. |

| Start | datetime | The analysis start period. | |

| End | datetime | The analysis end period. | |

| InnerActivityWindow | timespan | ✔️ | The inner-scope analysis window period. |

| OuterActivityWindow | timespan | ✔️ | The outer-scope analysis window period. |

| dim1, dim2, … | dynamic | An array of the dimensions columns that slice the activity metrics calculation. |

Returns

Returns a table that has a distinct count of ID values inside an inner-scope window, inside an outer-scope window, and the activity ratio for each inner-scope window period for each existing dimensions combination.

Output table schema is:

| TimelineColumn | dcount_activities_inner | dcount_activities_outer | activity_ratio | dim1 | .. | dim_n | |||

|---|---|---|---|---|---|---|---|---|---|

| type: as of TimelineColumn | long | long | double | .. | .. | .. |

Examples

The following example calculates DAU/WAU (Daily Active Users / Weekly Active Users ratio) over a randomly generated data.

// Generate random data of user activities

let _start = datetime(2017-01-01);

let _end = datetime(2017-01-31);

range _day from _start to _end step 1d

| extend d = tolong((_day - _start)/1d)

| extend r = rand()+1

| extend _users=range(tolong(d*50*r), tolong(d*50*r+100*r-1), 1)

| mv-expand id=_users to typeof(long) limit 1000000

// Calculate DAU/WAU ratio

| evaluate activity_engagement(['id'], _day, _start, _end, 1d, 7d)

| project _day, Dau_Wau=activity_ratio*100

| render timechart

:::image type=“content” source=“media/activity-engagement-plugin/activity-engagement-dau-wau.png” border=“false” alt-text=“Graph displaying the ratio of daily active users to weekly active users as specified in the query.”:::

The following example calculates DAU/WAU (Daily Active Users / Weekly Active Users ratio) over a randomly generated data.

// Generate random data of user activities

let _start = datetime(2017-01-01);

let _end = datetime(2017-05-31);

range _day from _start to _end step 1d

| extend d = tolong((_day - _start)/1d)

| extend r = rand()+1

| extend _users=range(tolong(d*50*r), tolong(d*50*r+100*r-1), 1)

| mv-expand id=_users to typeof(long) limit 1000000

// Calculate DAU/MAU ratio

| evaluate activity_engagement(['id'], _day, _start, _end, 1d, 30d)

| project _day, Dau_Mau=activity_ratio*100

| render timechart

:::image type=“content” source=“media/activity-engagement-plugin/activity-engagement-dau-mau.png” border=“false” alt-text=“Graph displaying the ratio of daily active users to monthly active users as specified in the query.”:::

The following example calculates DAU/WAU (Daily Active Users / Weekly Active Users ratio) over a randomly generated data with additional dimension (mod3).

// Generate random data of user activities

let _start = datetime(2017-01-01);

let _end = datetime(2017-05-31);

range _day from _start to _end step 1d

| extend d = tolong((_day - _start)/1d)

| extend r = rand()+1

| extend _users=range(tolong(d*50*r), tolong(d*50*r+100*r-1), 1)

| mv-expand id=_users to typeof(long) limit 1000000

| extend mod3 = strcat("mod3=", id % 3)

// Calculate DAU/MAU ratio

| evaluate activity_engagement(['id'], _day, _start, _end, 1d, 30d, mod3)

| project _day, Dau_Mau=activity_ratio*100, mod3

| render timechart

:::image type=“content” source=“media/activity-engagement-plugin/activity-engagement-dau-mau-mod3.png” border=“false” alt-text=“Graph displaying the ratio of daily active users to monthly active users with modulo 3 as specified in the query.”:::

4 - activity_metrics plugin

Calculates useful metrics that include distinct count values, distinct count of new values, retention rate, and churn rate. This plugin is different from activity_counts_metrics plugin in which every time window is compared to all previous time windows.

Syntax

T | evaluate activity_metrics(IdColumn, TimelineColumn, [Start, End,] Window [, dim1, dim2, …])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The input used to calculate activity metrics. |

| IdCoumn | string | ✔️ | The name of the column with ID values that represent user activity. |

| TimelineColumn | string | ✔️ | The name of the column that represents timeline. |

| Start | datetime | ✔️ | The analysis start period. |

| End | datetime | ✔️ | The analysis end period. |

| Step | decimal, datetime, or timespan | ✔️ | The analysis window period. This value may also be a string of week, month, or year, in which case all periods will be startofweek, startofmonth, or startofyear respectively. |

| dim1, dim2, … | dynamic | An array of the dimensions columns that slice the activity metrics calculation. |

Returns

The plugin returns a table with the distinct count values, distinct count of new values, retention rate, and churn rate for each timeline period for each existing dimensions combination.

Output table schema is:

| TimelineColumn | dcount_values | dcount_newvalues | retention_rate | churn_rate | dim1 | .. | dim_n | |||

|---|---|---|---|---|---|---|---|---|---|---|

| type: as of TimelineColumn | long | long | double | double | .. | .. | .. |

Notes

Retention Rate Definition

Retention Rate over a period is calculated as:

where the # of customers returned during the period is defined as:

Retention Rate can vary from 0.0 to 1.0

A higher score means a larger number of returning users.

Churn Rate Definition

Churn Rate over a period is calculated as:

where the # of customer lost in the period is defined as:

Churn Rate can vary from 0.0 to 1.0

The higher score means the larger number of users are NOT returning to the service.

Churn vs. Retention Rate

The churn vs. retention Rate is derived from the definition of Churn Rate and Retention Rate. The following calculation is always true:

Examples

The following example calculates retention and churn rate for week-over-week window.

// Generate random data of user activities

let _start = datetime(2017-01-02);

let _end = datetime(2017-05-31);

range _day from _start to _end step 1d

| extend d = tolong((_day - _start)/1d)

| extend r = rand()+1

| extend _users=range(tolong(d*50*r), tolong(d*50*r+200*r-1), 1)

| mv-expand id=_users to typeof(long) limit 1000000

//

| evaluate activity_metrics(['id'], _day, _start, _end, 7d)

| project _day, retention_rate, churn_rate

| render timechart

Output

| _day | retention_rate | churn_rate |

|---|---|---|

| 2017-01-02 00:00:00.0000000 | NaN | NaN |

| 2017-01-09 00:00:00.0000000 | 0.179910044977511 | 0.820089955022489 |

| 2017-01-16 00:00:00.0000000 | 0.744374437443744 | 0.255625562556256 |

| 2017-01-23 00:00:00.0000000 | 0.612096774193548 | 0.387903225806452 |

| 2017-01-30 00:00:00.0000000 | 0.681141439205955 | 0.318858560794045 |

| 2017-02-06 00:00:00.0000000 | 0.278145695364238 | 0.721854304635762 |

| 2017-02-13 00:00:00.0000000 | 0.223172628304821 | 0.776827371695179 |

| 2017-02-20 00:00:00.0000000 | 0.38 | 0.62 |

| 2017-02-27 00:00:00.0000000 | 0.295519001701645 | 0.704480998298355 |

| 2017-03-06 00:00:00.0000000 | 0.280387770320656 | 0.719612229679344 |

| 2017-03-13 00:00:00.0000000 | 0.360628154795289 | 0.639371845204711 |

| 2017-03-20 00:00:00.0000000 | 0.288008028098344 | 0.711991971901656 |

| 2017-03-27 00:00:00.0000000 | 0.306134969325153 | 0.693865030674847 |

| 2017-04-03 00:00:00.0000000 | 0.356866537717602 | 0.643133462282398 |

| 2017-04-10 00:00:00.0000000 | 0.495098039215686 | 0.504901960784314 |

| 2017-04-17 00:00:00.0000000 | 0.198296836982968 | 0.801703163017032 |

| 2017-04-24 00:00:00.0000000 | 0.0618811881188119 | 0.938118811881188 |

| 2017-05-01 00:00:00.0000000 | 0.204657727593507 | 0.795342272406493 |

| 2017-05-08 00:00:00.0000000 | 0.517391304347826 | 0.482608695652174 |

| 2017-05-15 00:00:00.0000000 | 0.143667296786389 | 0.856332703213611 |

| 2017-05-22 00:00:00.0000000 | 0.199122325836533 | 0.800877674163467 |

| 2017-05-29 00:00:00.0000000 | 0.063468992248062 | 0.936531007751938 |

:::image type=“content” source=“media/activity-metrics-plugin/activity-metrics-churn-and-retention.png” border=“false” alt-text=“Table showing the calculated retention and churn rates per seven days as specified in the query.”:::

The following example calculates distinct values and ’new’ values (IDs that didn’t appear in previous time window) for week-over-week window.

// Generate random data of user activities

let _start = datetime(2017-01-02);

let _end = datetime(2017-05-31);

range _day from _start to _end step 1d

| extend d = tolong((_day - _start)/1d)

| extend r = rand()+1

| extend _users=range(tolong(d*50*r), tolong(d*50*r+200*r-1), 1)

| mv-expand id=_users to typeof(long) limit 1000000

//

| evaluate activity_metrics(['id'], _day, _start, _end, 7d)

| project _day, dcount_values, dcount_newvalues

| render timechart

Output

| _day | dcount_values | dcount_newvalues |

|---|---|---|

| 2017-01-02 00:00:00.0000000 | 630 | 630 |

| 2017-01-09 00:00:00.0000000 | 738 | 575 |

| 2017-01-16 00:00:00.0000000 | 1187 | 841 |

| 2017-01-23 00:00:00.0000000 | 1092 | 465 |

| 2017-01-30 00:00:00.0000000 | 1261 | 647 |

| 2017-02-06 00:00:00.0000000 | 1744 | 1043 |

| 2017-02-13 00:00:00.0000000 | 1563 | 432 |

| 2017-02-20 00:00:00.0000000 | 1406 | 818 |

| 2017-02-27 00:00:00.0000000 | 1956 | 1429 |

| 2017-03-06 00:00:00.0000000 | 1593 | 848 |

| 2017-03-13 00:00:00.0000000 | 1801 | 1423 |

| 2017-03-20 00:00:00.0000000 | 1710 | 1017 |

| 2017-03-27 00:00:00.0000000 | 1796 | 1516 |

| 2017-04-03 00:00:00.0000000 | 1381 | 1008 |

| 2017-04-10 00:00:00.0000000 | 1756 | 1162 |

| 2017-04-17 00:00:00.0000000 | 1831 | 1409 |

| 2017-04-24 00:00:00.0000000 | 1823 | 1164 |

| 2017-05-01 00:00:00.0000000 | 1811 | 1353 |

| 2017-05-08 00:00:00.0000000 | 1691 | 1246 |

| 2017-05-15 00:00:00.0000000 | 1812 | 1608 |

| 2017-05-22 00:00:00.0000000 | 1740 | 1017 |

| 2017-05-29 00:00:00.0000000 | 960 | 756 |

:::image type=“content” source=“media/activity-metrics-plugin/activity-metrics-dcount-and-dcount-newvalues.png” border=“false” alt-text=“Table showing the count of distinct values (dcount_values) and of new distinct values (dcount_newvalues) that didn’t appear in previous time window as specified in the query.”:::

5 - funnel_sequence plugin

Calculates distinct count of users who have taken a sequence of states, and the distribution of previous/next states that have led to/were followed by the sequence. The plugin is invoked with the evaluate operator.

Syntax

T | evaluate funnel_sequence(IdColumn, TimelineColumn, Start, End, MaxSequenceStepWindow, Step, StateColumn, Sequence)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The input tabular expression. |

| IdColum | string | ✔️ | The column reference representing the ID. This column must be present in T. |

| TimelineColumn | string | ✔️ | The column reference representing the timeline. This column must be present in T. |

| Start | datetime, timespan, or long | ✔️ | The analysis start period. |

| End | datetime, timespan, or long | ✔️ | The analysis end period. |

| MaxSequenceStepWindow | datetime, timespan, or long | ✔️ | The value of the max allowed timespan between two sequential steps in the sequence. |

| Step | datetime, timespan, or long | ✔️ | The analysis step period, or bin. |

| StateColumn | string | ✔️ | The column reference representing the state. This column must be present in T. |

| Sequence | dynamic | ✔️ | An array with the sequence values that are looked up in StateColumn. |

Returns

Returns three output tables, which are useful for constructing sankey diagrams for the analyzed sequence:

Table #1 - prev-sequence-next

dcount- TimelineColumn: the analyzed time window

- prev: the prev state (may be empty if there were any users that only had events for the searched sequence, but not any events prior to it).

- next: the next state (may be empty if there were any users that only had events for the searched sequence, but not any events that followed it).

dcount: distinct count ofIdColumnin time window that transitionedprev–>Sequence–>next.- samples: an array of IDs (from

IdColumn) corresponding to the row’s sequence (a maximum of 128 IDs are returned).

Table #2 - prev-sequence

dcount- TimelineColumn: the analyzed time window

- prev: the prev state (may be empty if there were any users that only had events for the searched sequence, but not any events prior to it).

dcount: distinct count ofIdColumnin time window that transitionedprev–>Sequence–>next.- samples: an array of IDs (from

IdColumn) corresponding to the row’s sequence (a maximum of 128 IDs are returned).

Table #3 - sequence-next

dcount- TimelineColumn: the analyzed time window

- next: the next state (may be empty if there were any users that only had events for the searched sequence, but not any events that followed it).

dcount: distinct count ofIdColumnin time window that transitionedprev–>Sequence–>next.- samples: an array of IDs (from

IdColumn) corresponding to the row’s sequence (a maximum of 128 IDs are returned).

Examples

The following examples show how to use the funnel_sequence plugin to analyze storm events.

The query looks at the table StormEvents (weather statistics for 2007) and shows which events happened before/after all Tornado events occurred in 2007.

// Looking on StormEvents statistics:

// Q1: What happens before Tornado event?

// Q2: What happens after Tornado event?

StormEvents

| evaluate funnel_sequence(EpisodeId, StartTime, datetime(2007-01-01), datetime(2008-01-01), 1d,365d, EventType, dynamic(['Tornado']))

Result includes three tables:

- Table #1: All possible variants of what happened before and after the sequence. For example, the second line means that there were 87 different events that had following sequence:

Hail->Tornado->Hail

StartTime | prev | next | dcount |

|---|---|---|---|

| 2007-01-01 00:00:00.0000000 | 293 | ||

| 2007-01-01 00:00:00.0000000 | Hail | Hail | 87 |

| 2007-01-01 00:00:00.0000000 | Thunderstorm Wind | Thunderstorm Wind | 77 |

| 2007-01-01 00:00:00.0000000 | Hail | Thunderstorm Wind | 28 |

| 2007-01-01 00:00:00.0000000 | Hail | 28 | |

| 2007-01-01 00:00:00.0000000 | Hail | 27 | |

| 2007-01-01 00:00:00.0000000 | Thunderstorm Wind | 25 | |

| 2007-01-01 00:00:00.0000000 | Thunderstorm Wind | Hail | 24 |

| 2007-01-01 00:00:00.0000000 | Thunderstorm Wind | 24 | |

| 2007-01-01 00:00:00.0000000 | Flash Flood | Flash Flood | 12 |

| 2007-01-01 00:00:00.0000000 | Thunderstorm Wind | Flash Flood | 8 |

| 2007-01-01 00:00:00.0000000 | Flash Flood | 8 | |

| 2007-01-01 00:00:00.0000000 | Funnel Cloud | Thunderstorm Wind | 6 |

| 2007-01-01 00:00:00.0000000 | Funnel Cloud | 6 | |

| 2007-01-01 00:00:00.0000000 | Flash Flood | 6 | |

| 2007-01-01 00:00:00.0000000 | Funnel Cloud | Funnel Cloud | 6 |

| 2007-01-01 00:00:00.0000000 | Hail | Flash Flood | 4 |

| 2007-01-01 00:00:00.0000000 | Flash Flood | Thunderstorm Wind | 4 |

| 2007-01-01 00:00:00.0000000 | Hail | Funnel Cloud | 4 |

| 2007-01-01 00:00:00.0000000 | Funnel Cloud | Hail | 4 |

| 2007-01-01 00:00:00.0000000 | Funnel Cloud | 4 | |

| 2007-01-01 00:00:00.0000000 | Thunderstorm Wind | Funnel Cloud | 3 |

| 2007-01-01 00:00:00.0000000 | Heavy Rain | Thunderstorm Wind | 2 |

| 2007-01-01 00:00:00.0000000 | Flash Flood | Funnel Cloud | 2 |

| 2007-01-01 00:00:00.0000000 | Flash Flood | Hail | 2 |

| 2007-01-01 00:00:00.0000000 | Strong Wind | Thunderstorm Wind | 1 |

| 2007-01-01 00:00:00.0000000 | Heavy Rain | Flash Flood | 1 |

| 2007-01-01 00:00:00.0000000 | Heavy Rain | Hail | 1 |

| 2007-01-01 00:00:00.0000000 | Hail | Flood | 1 |

| 2007-01-01 00:00:00.0000000 | Lightning | Hail | 1 |

| 2007-01-01 00:00:00.0000000 | Heavy Rain | Lightning | 1 |

| 2007-01-01 00:00:00.0000000 | Funnel Cloud | Heavy Rain | 1 |

| 2007-01-01 00:00:00.0000000 | Flash Flood | Flood | 1 |

| 2007-01-01 00:00:00.0000000 | Flood | Flash Flood | 1 |

| 2007-01-01 00:00:00.0000000 | Heavy Rain | 1 | |

| 2007-01-01 00:00:00.0000000 | Funnel Cloud | Lightning | 1 |

| 2007-01-01 00:00:00.0000000 | Lightning | Thunderstorm Wind | 1 |

| 2007-01-01 00:00:00.0000000 | Flood | Thunderstorm Wind | 1 |

| 2007-01-01 00:00:00.0000000 | Hail | Lightning | 1 |

| 2007-01-01 00:00:00.0000000 | Lightning | 1 | |

| 2007-01-01 00:00:00.0000000 | Tropical Storm | Hurricane (Typhoon) | 1 |

| 2007-01-01 00:00:00.0000000 | Coastal Flood | 1 | |

| 2007-01-01 00:00:00.0000000 | Rip Current | 1 | |

| 2007-01-01 00:00:00.0000000 | Heavy Snow | 1 | |

| 2007-01-01 00:00:00.0000000 | Strong Wind | 1 |

- Table #2: shows all distinct events grouped by the previous event. For example, the second line shows that there were a total of 150 events of

Hailthat happened just beforeTornado.

StartTime | prev | dcount |

|---|---|---|

| 2007-01-01 00:00:00.0000000 | 331 | |

| 2007-01-01 00:00:00.0000000 | Hail | 150 |

| 2007-01-01 00:00:00.0000000 | Thunderstorm Wind | 135 |

| 2007-01-01 00:00:00.0000000 | Flash Flood | 28 |

| 2007-01-01 00:00:00.0000000 | Funnel Cloud | 22 |

| 2007-01-01 00:00:00.0000000 | Heavy Rain | 5 |

| 2007-01-01 00:00:00.0000000 | Flood | 2 |

| 2007-01-01 00:00:00.0000000 | Lightning | 2 |

| 2007-01-01 00:00:00.0000000 | Strong Wind | 2 |

| 2007-01-01 00:00:00.0000000 | Heavy Snow | 1 |

| 2007-01-01 00:00:00.0000000 | Rip Current | 1 |

| 2007-01-01 00:00:00.0000000 | Coastal Flood | 1 |

| 2007-01-01 00:00:00.0000000 | Tropical Storm | 1 |

- Table #3: shows all distinct events grouped by next event. For example, the second line shows that there were a total of 143 events of

Hailthat happened afterTornado.

StartTime | next | dcount |

|---|---|---|

| 2007-01-01 00:00:00.0000000 | 332 | |

| 2007-01-01 00:00:00.0000000 | Hail | 145 |

| 2007-01-01 00:00:00.0000000 | Thunderstorm Wind | 143 |

| 2007-01-01 00:00:00.0000000 | Flash Flood | 32 |

| 2007-01-01 00:00:00.0000000 | Funnel Cloud | 21 |

| 2007-01-01 00:00:00.0000000 | Lightning | 4 |

| 2007-01-01 00:00:00.0000000 | Heavy Rain | 2 |

| 2007-01-01 00:00:00.0000000 | Flood | 2 |

| 2007-01-01 00:00:00.0000000 | Hurricane (Typhoon) | 1 |

Now, let’s try to find out how the following sequence continues:Hail -> Tornado -> Thunderstorm Wind

StormEvents

| evaluate funnel_sequence(

EpisodeId,

StartTime,

datetime(2007-01-01),

datetime(2008-01-01),

1d,

365d,

EventType,

dynamic(['Hail', 'Tornado', 'Thunderstorm Wind'])

)

Skipping Table #1 and Table #2, and looking at Table #3, we can conclude that sequence Hail -> Tornado -> Thunderstorm Wind in 92 events ended with this sequence, continued as Hail in 41 events, and turned back to Tornado in 14.

StartTime | next | dcount |

|---|---|---|

| 2007-01-01 00:00:00.0000000 | 92 | |

| 2007-01-01 00:00:00.0000000 | Hail | 41 |

| 2007-01-01 00:00:00.0000000 | Tornado | 14 |

| 2007-01-01 00:00:00.0000000 | Flash Flood | 11 |

| 2007-01-01 00:00:00.0000000 | Lightning | 2 |

| 2007-01-01 00:00:00.0000000 | Heavy Rain | 1 |

| 2007-01-01 00:00:00.0000000 | Flood | 1 |

6 - funnel_sequence_completion plugin

Calculates a funnel of completed sequence steps while comparing different time periods. The plugin is invoked with the evaluate operator.

Syntax

T | evaluate funnel_sequence_completion(IdColumn, TimelineColumn, Start, End, BinSize, StateColumn, Sequence, MaxSequenceStepWindows)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The input tabular expression. |

| IdColum | string | ✔️ | The column reference representing the ID. The column must be present in T. |

| TimelineColumn | string | ✔️ | The column reference representing the timeline. The column must be present in T. |

| Start | datetime, timespan, or long | ✔️ | The analysis start period. |

| End | datetime, timespan, or long | ✔️ | The analysis end period. |

| BinSize | datetime, timespan, or long | ✔️ | The analysis window size. Each window is analyzed separately. |

| StateColumn | string | ✔️ | The column reference representing the state. The column must be present in T. |

| Sequence | dynamic | ✔️ | An array with the sequence values that are looked up in StateColumn. |

| MaxSequenceStepPeriods | dynamic | ✔️ | An array with the values of the max allowed timespan between the first and last sequential steps in the sequence. Each period in the array generates a funnel analysis result. |

Returns

Returns a single table useful for constructing a funnel diagram for the analyzed sequence:

TimelineColumn: the analyzed time window (bin), each bin in the analysis timeframe (Start to End) generates a funnel analysis separately.StateColumn: the state of the sequence.Period: the maximal period allowed for completing steps in the funnel sequence measured from the first step in the sequence. Each value in MaxSequenceStepPeriods generates a funnel analysis with a separate period.dcount: distinct count ofIdColumnin time window that transitioned from first sequence state to the value ofStateColumn.

Examples

The following examples show how to use the funnel_sequence_completion plugin to analyze storm events.

The query checks the completion funnel of the sequence: Hail -> Tornado -> Thunderstorm Wind

in “overall” time of 1hour, 4hours, 1day.

let _start = datetime(2007-01-01);

let _end = datetime(2008-01-01);

let _windowSize = 365d;

let _sequence = dynamic(['Hail', 'Tornado', 'Thunderstorm Wind']);

let _periods = dynamic([1h, 4h, 1d]);

StormEvents

| evaluate funnel_sequence_completion(EpisodeId, StartTime, _start, _end, _windowSize, EventType, _sequence, _periods)

Output

StartTime | EventType | Period | dcount |

|---|---|---|---|

| 2007-01-01 00:00:00.0000000 | Hail | 01:00:00 | 2877 |

| 2007-01-01 00:00:00.0000000 | Tornado | 01:00:00 | 208 |

| 2007-01-01 00:00:00.0000000 | Thunderstorm Wind | 01:00:00 | 87 |

| 2007-01-01 00:00:00.0000000 | Hail | 04:00:00 | 2877 |

| 2007-01-01 00:00:00.0000000 | Tornado | 04:00:00 | 231 |

| 2007-01-01 00:00:00.0000000 | Thunderstorm Wind | 04:00:00 | 141 |

| 2007-01-01 00:00:00.0000000 | Hail | 1.00:00:00 | 2877 |

| 2007-01-01 00:00:00.0000000 | Tornado | 1.00:00:00 | 244 |

| 2007-01-01 00:00:00.0000000 | Thunderstorm Wind | 1.00:00:00 | 155 |

Understanding the results:

The outcome is three funnels (for periods: One hour, 4 hours, and one day). For each funnel step, a number of distinct counts of are shown. You can see that the more time is given to complete the whole sequence of Hail -> Tornado -> Thunderstorm Wind, the higher dcount value is obtained. In other words, there were more occurrences of the sequence reaching the funnel step.

Related content

7 - new_activity_metrics plugin

Calculates useful activity metrics (distinct count values, distinct count of new values, retention rate, and churn rate) for the cohort of New Users. Each cohort of New Users (all users, which were first seen in time window) is compared to all prior cohorts.

Comparison takes into account all previous time windows. For example, for records from T2 to T3, the distinct count of users will be all users in T3 who weren’t seen in both T1 and T2.

The plugin is invoked with the evaluate operator.

Syntax

TabularExpression | evaluate new_activity_metrics(IdColumn, TimelineColumn, Start, End, Window [, Cohort] [, dim1, dim2, …] [, Lookback] )

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| TabularExpression | string | ✔️ | The tabular expression for which to calculate activity metrics. |

| IdColumn | string | ✔️ | The name of the column with ID values that represent user activity. |

| TimelineColumn | string | ✔️ | The name of the column that represents the timeline. |

| Start | scalar | ✔️ | The value of the analysis start period. |

| End | scalar | ✔️ | The value of the analysis end period. |

| Window | scalar | ✔️ | The value of the analysis window period. Can be a numeric, datetime, or timespan value, or a string that is one of week, month or year, in which case all periods will be startofweek/startofmonth/startofyear accordingly. When using startofweek, make sure start time is a Sunday, otherwise first cohort will be empty (since startofweek is considered to be a Sunday). |

| Cohort | scalar | Indicates a specific cohort. If not provided, all cohorts corresponding to the analysis time window are calculated and returned. | |

| dim1, dim2, … | dynamic | An array of the dimensions columns that slice the activity metrics calculation. | |

| Lookback | string | A tabular expression with a set of IDs that belong to the ’look back’ period. |

Returns

Returns a table that contains the following for each combination of ‘from’ and ’to’ timeline periods and for each existing column (dimensions) combination:

- distinct count values

- distinct count of new values

- retention rate

- churn rate

Output table schema is:

| from_TimelineColumn | to_TimelineColumn | dcount_new_values | dcount_retained_values | dcount_churn_values | retention_rate | churn_rate | dim1 | .. | dim_n |

|---|---|---|---|---|---|---|---|---|---|

| type: as of TimelineColumn | same | long | long | double | double | double | .. | .. | .. |

from_TimelineColumn- the cohort of new users. Metrics in this record refer to all users who were first seen in this period. The decision on first seen takes into account all previous periods in the analysis period.to_TimelineColumn- the period being compared to.dcount_new_values- the number of distinct users into_TimelineColumnthat weren’t seen in all periods prior to and includingfrom_TimelineColumn.dcount_retained_values- out of all new users, first seen infrom_TimelineColumn, the number of distinct users that were seen into_TimelineCoumn.dcount_churn_values- out of all new users, first seen infrom_TimelineColumn, the number of distinct users that weren’t seen into_TimelineCoumn.retention_rate- the percent ofdcount_retained_valuesout of the cohort (users first seen infrom_TimelineColumn).churn_rate- the percent ofdcount_churn_valuesout of the cohort (users first seen infrom_TimelineColumn).

Examples

The following sample dataset shows which users seen on which days. The table was generated based on a source Users table, as follows:

Users | summarize tostring(make_set(user)) by bin(Timestamp, 1d) | order by Timestamp asc;

Output

| Timestamp | set_user |

|---|---|

| 2019-11-01 00:00:00.0000000 | [0,2,3,4] |

| 2019-11-02 00:00:00.0000000 | [0,1,3,4,5] |

| 2019-11-03 00:00:00.0000000 | [0,2,4,5] |

| 2019-11-04 00:00:00.0000000 | [0,1,2,3] |

| 2019-11-05 00:00:00.0000000 | [0,1,2,3,4] |

The output of the plugin for the original table is the following:

let StartDate = datetime(2019-11-01 00:00:00);

let EndDate = datetime(2019-11-07 00:00:00);

Users

| evaluate new_activity_metrics(user, Timestamp, StartDate, EndDate-1tick, 1d)

| where from_Timestamp < datetime(2019-11-03 00:00:00.0000000)

Output

| R | from_Timestamp | to_Timestamp | dcount_new_values | dcount_retained_values | dcount_churn_values | retention_rate | churn_rate |

|---|---|---|---|---|---|---|---|

| 1 | 2019-11-01 00:00:00.0000000 | 2019-11-01 00:00:00.0000000 | 4 | 4 | 0 | 1 | 0 |

| 2 | 2019-11-01 00:00:00.0000000 | 2019-11-02 00:00:00.0000000 | 2 | 3 | 1 | 0.75 | 0.25 |

| 3 | 2019-11-01 00:00:00.0000000 | 2019-11-03 00:00:00.0000000 | 1 | 3 | 1 | 0.75 | 0.25 |

| 4 | 2019-11-01 00:00:00.0000000 | 2019-11-04 00:00:00.0000000 | 1 | 3 | 1 | 0.75 | 0.25 |

| 5 | 2019-11-01 00:00:00.0000000 | 2019-11-05 00:00:00.0000000 | 1 | 4 | 0 | 1 | 0 |

| 6 | 2019-11-01 00:00:00.0000000 | 2019-11-06 00:00:00.0000000 | 0 | 0 | 4 | 0 | 1 |

| 7 | 2019-11-02 00:00:00.0000000 | 2019-11-02 00:00:00.0000000 | 2 | 2 | 0 | 1 | 0 |

| 8 | 2019-11-02 00:00:00.0000000 | 2019-11-03 00:00:00.0000000 | 0 | 1 | 1 | 0.5 | 0.5 |

| 9 | 2019-11-02 00:00:00.0000000 | 2019-11-04 00:00:00.0000000 | 0 | 1 | 1 | 0.5 | 0.5 |

| 10 | 2019-11-02 00:00:00.0000000 | 2019-11-05 00:00:00.0000000 | 0 | 1 | 1 | 0.5 | 0.5 |

| 11 | 2019-11-02 00:00:00.0000000 | 2019-11-06 00:00:00.0000000 | 0 | 0 | 2 | 0 | 1 |

Following is an analysis of a few records from the output:

Record

R=3,from_TimelineColumn=2019-11-01,to_TimelineColumn=2019-11-03:- The users considered for this record are all new users seen on 11/1. Since this is the first period, these are all users in that bin – [0,2,3,4]

dcount_new_values– the number of users on 11/3 who weren’t seen on 11/1. This includes a single user –5.dcount_retained_values– out of all new users on 11/1, how many were retained until 11/3? There are three values ([0,2,4]), whilecount_churn_valuesis one (user=3).retention_rate= 0.75 – the three retained users out of the four new users who were first seen in 11/1.

Record

R=9,from_TimelineColumn=2019-11-02,to_TimelineColumn=2019-11-04:- This record focuses on the new users who were first seen on 11/2 – users

1and5. dcount_new_values– the number of users on 11/4 who weren’t seen through all periodsT0 .. from_Timestamp. Meaning, users who are seen on 11/4 but who weren’t seen on either 11/1 or 11/2 – there are no such users.dcount_retained_values– out of all new users on 11/2 ([1,5]), how many were retained until 11/4? There’s one such user ([1]),while count_churn_valuesis one (user5).retention_rateis 0.5 – the single user that was retained on 11/4 out of the two new ones on 11/2.

- This record focuses on the new users who were first seen on 11/2 – users

Weekly retention rate, and churn rate (single week)

The next query calculates a retention and churn rate for week-over-week window for New Users cohort (users that arrived on the first week).

// Generate random data of user activities

let _start = datetime(2017-05-01);

let _end = datetime(2017-05-31);

range Day from _start to _end step 1d

| extend d = tolong((Day - _start) / 1d)

| extend r = rand() + 1

| extend _users=range(tolong(d * 50 * r), tolong(d * 50 * r + 200 * r - 1), 1)

| mv-expand id=_users to typeof(long) limit 1000000

// Take only the first week cohort (last parameter)

| evaluate new_activity_metrics(['id'], Day, _start, _end, 7d, _start)

| project from_Day, to_Day, retention_rate, churn_rate

Output

| from_Day | to_Day | retention_rate | churn_rate |

|---|---|---|---|

| 2017-05-01 00:00:00.0000000 | 2017-05-01 00:00:00.0000000 | 1 | 0 |

| 2017-05-01 00:00:00.0000000 | 2017-05-08 00:00:00.0000000 | 0.544632768361582 | 0.455367231638418 |

| 2017-05-01 00:00:00.0000000 | 2017-05-15 00:00:00.0000000 | 0.031638418079096 | 0.968361581920904 |

| 2017-05-01 00:00:00.0000000 | 2017-05-22 00:00:00.0000000 | 0 | 1 |

| 2017-05-01 00:00:00.0000000 | 2017-05-29 00:00:00.0000000 | 0 | 1 |

Weekly retention rate, and churn rate (complete matrix)

The next query calculates retention and churn rate for week-over-week window for New Users cohort. If the previous example calculated the statistics for a single week - the following query produces an NxN table for each from/to combination.

// Generate random data of user activities

let _start = datetime(2017-05-01);

let _end = datetime(2017-05-31);

range Day from _start to _end step 1d

| extend d = tolong((Day - _start) / 1d)

| extend r = rand() + 1

| extend _users=range(tolong(d * 50 * r), tolong(d * 50 * r + 200 * r - 1), 1)

| mv-expand id=_users to typeof(long) limit 1000000

// Last parameter is omitted -

| evaluate new_activity_metrics(['id'], Day, _start, _end, 7d)

| project from_Day, to_Day, retention_rate, churn_rate

Output

| from_Day | to_Day | retention_rate | churn_rate |

|---|---|---|---|

| 2017-05-01 00:00:00.0000000 | 2017-05-01 00:00:00.0000000 | 1 | 0 |

| 2017-05-01 00:00:00.0000000 | 2017-05-08 00:00:00.0000000 | 0.190397350993377 | 0.809602649006622 |

| 2017-05-01 00:00:00.0000000 | 2017-05-15 00:00:00.0000000 | 0 | 1 |

| 2017-05-01 00:00:00.0000000 | 2017-05-22 00:00:00.0000000 | 0 | 1 |

| 2017-05-01 00:00:00.0000000 | 2017-05-29 00:00:00.0000000 | 0 | 1 |

| 2017-05-08 00:00:00.0000000 | 2017-05-08 00:00:00.0000000 | 1 | 0 |

| 2017-05-08 00:00:00.0000000 | 2017-05-15 00:00:00.0000000 | 0.405263157894737 | 0.594736842105263 |

| 2017-05-08 00:00:00.0000000 | 2017-05-22 00:00:00.0000000 | 0.227631578947368 | 0.772368421052632 |

| 2017-05-08 00:00:00.0000000 | 2017-05-29 00:00:00.0000000 | 0 | 1 |

| 2017-05-15 00:00:00.0000000 | 2017-05-15 00:00:00.0000000 | 1 | 0 |

| 2017-05-15 00:00:00.0000000 | 2017-05-22 00:00:00.0000000 | 0.785488958990536 | 0.214511041009464 |

| 2017-05-15 00:00:00.0000000 | 2017-05-29 00:00:00.0000000 | 0.237644584647739 | 0.762355415352261 |

| 2017-05-22 00:00:00.0000000 | 2017-05-22 00:00:00.0000000 | 1 | 0 |

| 2017-05-22 00:00:00.0000000 | 2017-05-29 00:00:00.0000000 | 0.621835443037975 | 0.378164556962025 |

| 2017-05-29 00:00:00.0000000 | 2017-05-29 00:00:00.0000000 | 1 | 0 |

Weekly retention rate with lookback period

The following query calculates the retention rate of New Users cohort when taking into

consideration lookback period: a tabular query with set of Ids that are used to define

the New Users cohort (all IDs that don’t appear in this set are New Users). The

query examines the retention behavior of the New Users during the analysis period.

// Generate random data of user activities

let _lookback = datetime(2017-02-01);

let _start = datetime(2017-05-01);

let _end = datetime(2017-05-31);

let _data = range Day from _lookback to _end step 1d

| extend d = tolong((Day - _lookback) / 1d)

| extend r = rand() + 1

| extend _users=range(tolong(d * 50 * r), tolong(d * 50 * r + 200 * r - 1), 1)

| mv-expand id=_users to typeof(long) limit 1000000;

//

let lookback_data = _data | where Day < _start | project Day, id;

_data

| evaluate new_activity_metrics(id, Day, _start, _end, 7d, _start, lookback_data)

| project from_Day, to_Day, retention_rate

Output

| from_Day | to_Day | retention_rate |

|---|---|---|

| 2017-05-01 00:00:00.0000000 | 2017-05-01 00:00:00.0000000 | 1 |

| 2017-05-01 00:00:00.0000000 | 2017-05-08 00:00:00.0000000 | 0.404081632653061 |

| 2017-05-01 00:00:00.0000000 | 2017-05-15 00:00:00.0000000 | 0.257142857142857 |

| 2017-05-01 00:00:00.0000000 | 2017-05-22 00:00:00.0000000 | 0.296326530612245 |

| 2017-05-01 00:00:00.0000000 | 2017-05-29 00:00:00.0000000 | 0.0587755102040816 |

8 - rolling_percentile plugin

Returns an estimate for the specified percentile of the ValueColumn population in a rolling (sliding) BinsPerWindow size window per BinSize.

The plugin is invoked with the evaluate operator.

Syntax

T | evaluate rolling_percentile(ValueColumn, Percentile, IndexColumn, BinSize, BinsPerWindow [, dim1, dim2, …] )

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The input tabular expression. |

| ValueColumn | string | ✔️ | The name of the column used to calculate the percentiles. |

| Percentile | int, long, or real | ✔️ | Scalar with the percentile to calculate. |

| IndexColumn | string | ✔️ | The name of the column over which to run the rolling window. |

| BinSize | int, long, real, datetime, or timespan | ✔️ | Scalar with size of the bins to apply over the IndexColumn. |

| BinsPerWindow | int | ✔️ | The number of bins included in each window. |

| dim1, dim2, … | string | A list of the dimensions columns to slice by. |

Returns

Returns a table with a row per each bin (and combination of dimensions if specified) that has the rolling percentile of values in the window ending at the bin (inclusive). Output table schema is:

| IndexColumn | dim1 | … | dim_n | rolling_BinsPerWindow_percentile_ValueColumn_Pct |

|---|

Examples

Rolling 3-day median value per day

The next query calculates a 3-day median value in daily granularity. Each row in the output represents the median value for the last 3 bins (days), including the bin itself.

let T =

range idx from 0 to 24 * 10 - 1 step 1

| project Timestamp = datetime(2018-01-01) + 1h * idx, val=idx + 1

| extend EvenOrOdd = iff(val % 2 == 0, "Even", "Odd");

T

| evaluate rolling_percentile(val, 50, Timestamp, 1d, 3)

Output

| Timestamp | rolling_3_percentile_val_50 |

|---|---|

| 2018-01-01 00:00:00.0000000 | 12 |

| 2018-01-02 00:00:00.0000000 | 24 |

| 2018-01-03 00:00:00.0000000 | 36 |

| 2018-01-04 00:00:00.0000000 | 60 |

| 2018-01-05 00:00:00.0000000 | 84 |

| 2018-01-06 00:00:00.0000000 | 108 |

| 2018-01-07 00:00:00.0000000 | 132 |

| 2018-01-08 00:00:00.0000000 | 156 |

| 2018-01-09 00:00:00.0000000 | 180 |

| 2018-01-10 00:00:00.0000000 | 204 |

Rolling 3-day median value per day by dimension

Same example from above, but now also calculates the rolling window partitioned for each value of the dimension.

let T =

range idx from 0 to 24 * 10 - 1 step 1

| project Timestamp = datetime(2018-01-01) + 1h * idx, val=idx + 1

| extend EvenOrOdd = iff(val % 2 == 0, "Even", "Odd");

T

| evaluate rolling_percentile(val, 50, Timestamp, 1d, 3, EvenOrOdd)

Output

| Timestamp | EvenOrOdd | rolling_3_percentile_val_50 |

|---|---|---|

| 2018-01-01 00:00:00.0000000 | Even | 12 |

| 2018-01-02 00:00:00.0000000 | Even | 24 |

| 2018-01-03 00:00:00.0000000 | Even | 36 |

| 2018-01-04 00:00:00.0000000 | Even | 60 |

| 2018-01-05 00:00:00.0000000 | Even | 84 |

| 2018-01-06 00:00:00.0000000 | Even | 108 |

| 2018-01-07 00:00:00.0000000 | Even | 132 |

| 2018-01-08 00:00:00.0000000 | Even | 156 |

| 2018-01-09 00:00:00.0000000 | Even | 180 |

| 2018-01-10 00:00:00.0000000 | Even | 204 |

| 2018-01-01 00:00:00.0000000 | Odd | 11 |

| 2018-01-02 00:00:00.0000000 | Odd | 23 |

| 2018-01-03 00:00:00.0000000 | Odd | 35 |

| 2018-01-04 00:00:00.0000000 | Odd | 59 |

| 2018-01-05 00:00:00.0000000 | Odd | 83 |

| 2018-01-06 00:00:00.0000000 | Odd | 107 |

| 2018-01-07 00:00:00.0000000 | Odd | 131 |

| 2018-01-08 00:00:00.0000000 | Odd | 155 |

| 2018-01-09 00:00:00.0000000 | Odd | 179 |

| 2018-01-10 00:00:00.0000000 | Odd | 203 |

9 - rows_near plugin

Finds rows near a specified condition.

The plugin is invoked with the evaluate operator.

Syntax

T | evaluate rows_near(Condition, NumRows, [, RowsAfter ])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The input tabular expression. |

| Condition | bool | ✔️ | Represents the condition to find rows around. |

| NumRows | int | ✔️ | The number of rows to find before and after the condition. |

| RowsAfter | int | When specified, overrides the number of rows to find after the condition. |

Returns

Every row from the input that is within NumRows from a true Condition,

When RowsAfter is specified, returns every row from the input that is NumRows before or RowsAfter after a true Condition.

Example

Find rows with an "Error" State, and returns 2 rows before and after the "Error" record.

datatable (Timestamp:datetime, Value:long, State:string )

[

datetime(2021-06-01), 1, "Success",

datetime(2021-06-02), 4, "Success",

datetime(2021-06-03), 3, "Success",

datetime(2021-06-04), 11, "Success",

datetime(2021-06-05), 15, "Success",

datetime(2021-06-06), 2, "Success",

datetime(2021-06-07), 19, "Error",

datetime(2021-06-08), 12, "Success",

datetime(2021-06-09), 7, "Success",

datetime(2021-06-10), 9, "Success",

datetime(2021-06-11), 4, "Success",

datetime(2021-06-12), 1, "Success",

]

| sort by Timestamp asc

| evaluate rows_near(State == "Error", 2)

Output

| Timestamp | Value | State |

|---|---|---|

| 2021-06-05 00:00:00.0000000 | 15 | Success |

| 2021-06-06 00:00:00.0000000 | 2 | Success |

| 2021-06-07 00:00:00.0000000 | 19 | Error |

| 2021-06-08 00:00:00.0000000 | 12 | Success |

| 2021-06-09 00:00:00.0000000 | 7 | Success |

10 - sequence_detect plugin

Detects sequence occurrences based on provided predicates. The plugin is invoked with the evaluate operator.

Syntax

T | evaluate sequence_detect (TimelineColumn, MaxSequenceStepWindow, MaxSequenceSpan, Expr1, Expr2, …, Dim1, Dim2, …)

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The input tabular expression. |

| TimelineColumn | string | ✔️ | The column reference representing timeline, must be present in the source expression. |

| MaxSequenceStepWindow | timespan | ✔️ | The value of the max allowed timespan between 2 sequential steps in the sequence. |

| MaxSequenceSpan | timespan | ✔️ | The max timespan for the sequence to complete all steps. |

| Expr1, Expr2, … | string | ✔️ | The boolean predicate expressions defining sequence steps. |

| Dim1, Dim2, … | string | ✔️ | The dimension expressions that are used to correlate sequences. |

Returns

Returns a single table where each row in the table represents a single sequence occurrence:

- Dim1, Dim2, …: dimension columns that were used to correlate sequences.

- Expr1TimelineColumn, Expr2TimelineColumn, …: Columns with time values, representing the timeline of each sequence step.

- Duration: the overall sequence time window

Examples

The following query looks at the table T to search for relevant data from a specified time period.

T | evaluate sequence_detect(datetime_column, 10m, 1h, e1 = (Col1 == 'Val'), e2 = (Col2 == 'Val2'), Dim1, Dim2)

Exploring Storm Events

The following query looks on the table StormEvents (weather statistics for 2007) and shows cases where sequence of ‘Excessive Heat’ was followed by ‘Wildfire’ within 5 days.

StormEvents

| evaluate sequence_detect(

StartTime,

5d, // step max-time

5d, // sequence max-time

heat=(EventType == "Excessive Heat"),

wildfire=(EventType == 'Wildfire'),

State

)

Output

| State | heat_StartTime | wildfire_StartTime | Duration |

|---|---|---|---|

| CALIFORNIA | 2007-05-08 00:00:00.0000000 | 2007-05-08 16:02:00.0000000 | 16:02:00 |

| CALIFORNIA | 2007-05-08 00:00:00.0000000 | 2007-05-10 11:30:00.0000000 | 2.11:30:00 |

| CALIFORNIA | 2007-07-04 09:00:00.0000000 | 2007-07-05 23:01:00.0000000 | 1.14:01:00 |

| SOUTH DAKOTA | 2007-07-23 12:00:00.0000000 | 2007-07-27 09:00:00.0000000 | 3.21:00:00 |

| TEXAS | 2007-08-10 08:00:00.0000000 | 2007-08-11 13:56:00.0000000 | 1.05:56:00 |

| CALIFORNIA | 2007-08-31 08:00:00.0000000 | 2007-09-01 11:28:00.0000000 | 1.03:28:00 |

| CALIFORNIA | 2007-08-31 08:00:00.0000000 | 2007-09-02 13:30:00.0000000 | 2.05:30:00 |

| CALIFORNIA | 2007-09-02 12:00:00.0000000 | 2007-09-02 13:30:00.0000000 | 01:30:00 |

11 - session_count plugin

Calculates the session count based on the ID column over a timeline. The plugin is invoked with the evaluate operator.

Syntax

TabularExpression | evaluate session_count(IdColumn, TimelineColumn, Start, End, Bin, LookBackWindow [, dim1, dim2, …])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| TabularExpression | string | ✔️ | The tabular expression that serves as input. |

| IdColumn | string | ✔️ | The name of the column with ID values that represents user activity. |

| TimelineColumn | string | ✔️ | The name of the column that represents the timeline. |

| Start | scalar | ✔️ | The start of the analysis period. |

| End | scalar | ✔️ | The end of the analysis period. |

| Bin | scalar | ✔️ | The session’s analysis step period. |

| LookBackWindow | scalar | ✔️ | The session lookback period. If the ID from IdColumn appears in a time window within LookBackWindow, the session is considered to be an existing one. If the ID doesn’t appear, then the session is considered to be new. |

| dim1, dim2, … | string | A list of the dimensions columns that slice the session count calculation. |

Returns

Returns a table that has the session count values for each timeline period and for each existing dimensions combination.

Output table schema is:

| TimelineColumn | dim1 | .. | dim_n | count_sessions | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| type: as of TimelineColumn | .. | .. | .. | long |

Examples

For this example, the data is deterministic, and we use a table with two columns:

Timeline: a running number from 1 to 10,000Id: ID of the user from 1 to 50

Id appears at the specific Timeline slot if it’s a divider of Timeline (Timeline % Id == 0).

An event with Id==1 will appear at any Timeline slot, an event with Id==2 at every second Timeline slot, and so on.

Here are 20 lines of the data:

let _data = range Timeline from 1 to 10000 step 1

| extend __key = 1

| join kind=inner (range Id from 1 to 50 step 1 | extend __key=1) on __key

| where Timeline % Id == 0

| project Timeline, Id;

// Look on few lines of the data

_data

| order by Timeline asc, Id asc

| take 20

Output

| Timeline | Id |

|---|---|

| 1 | 1 |

| 2 | 1 |

| 2 | 2 |

| 3 | 1 |

| 3 | 3 |

| 4 | 1 |

| 4 | 2 |

| 4 | 4 |

| 5 | 1 |

| 5 | 5 |

| 6 | 1 |

| 6 | 2 |

| 6 | 3 |

| 6 | 6 |

| 7 | 1 |

| 7 | 7 |

| 8 | 1 |

| 8 | 2 |

| 8 | 4 |

| 8 | 8 |

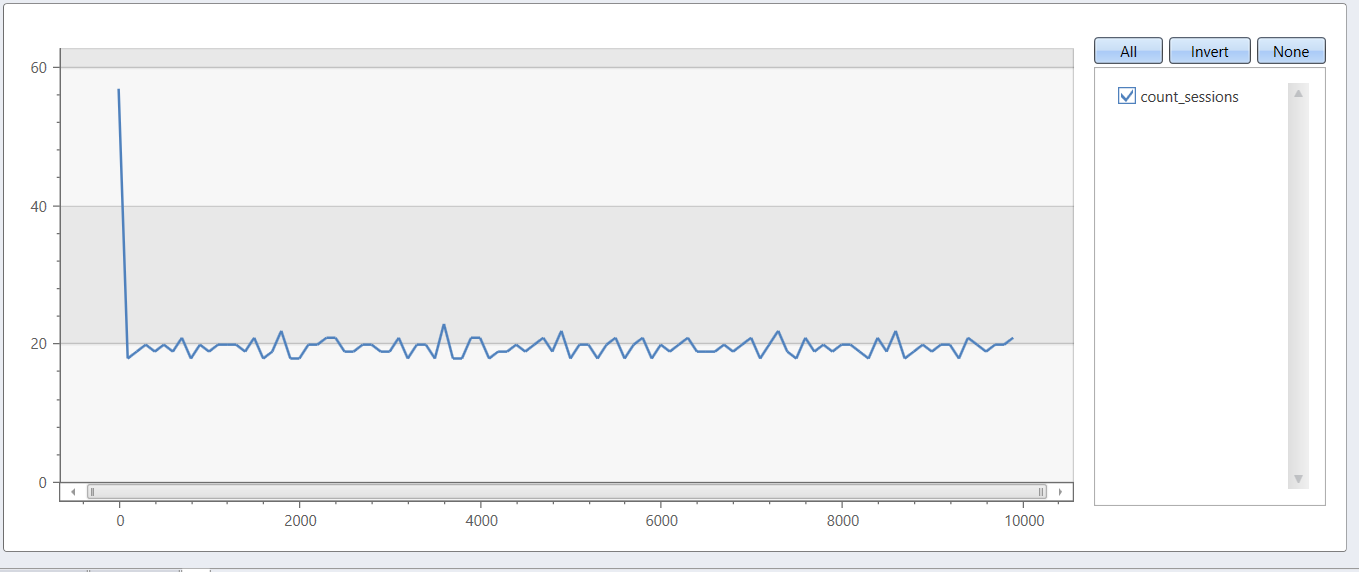

Let’s define a session in next terms: session considered to be active as long as user (Id) appears at least once at a timeframe of 100 time slots, while session look-back window is 41 time slots.

The next query shows the count of active sessions according to the above definition.

let _data = range Timeline from 1 to 9999 step 1

| extend __key = 1

| join kind=inner (range Id from 1 to 50 step 1 | extend __key=1) on __key

| where Timeline % Id == 0

| project Timeline, Id;

// End of data definition

_data

| evaluate session_count(Id, Timeline, 1, 10000, 100, 41)

| render linechart

12 - sliding_window_counts plugin

Calculates counts and distinct count of values in a sliding window over a lookback period, using the technique described in the Perform aggregations over a sliding window example. The plugin is invoked with the evaluate operator.

Syntax

T | evaluate sliding_window_counts(IdColumn, TimelineColumn, Start, End, LookbackWindow, Bin , [dim1, dim2, …])

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | The input tabular expression. |

| IdColumn | string | ✔️ | The name of the column with ID values that represent user activity. |

| TimelineColumn | string | ✔️ | The name of the column representing the timeline. |

| Start | int, long, real, datetime, or timespan | ✔️ | The analysis start period. |

| End | int, long, real, datetime, or timespan | ✔️ | The analysis end period. |

| LookbackWindow | int, long, real, datetime, or timespan | ✔️ | The lookback period. This value should be a multiple of the Bin value, otherwise the LookbackWindow will be rounded down to a multiple of the Bin value. For example, for dcount users in past 7d: LookbackWindow = 7d. |

| Bin | int, long, real, datetime, timespan, or string | ✔️ | The analysis step period. The possible string values are week, month, and year for which all periods will be startofweek, startofmonth, startofyear respectively. |

| dim1, dim2, … | string | A list of the dimensions columns that slice the activity metrics calculation. |

Returns

Returns a table that has the count and distinct count values of Ids in the lookback period, for each timeline period (by bin) and for each existing dimensions combination.

Output table schema is:

| TimelineColumn | dim1 | .. | dim_n | count | dcount |

|---|---|---|---|---|---|

| type: as of TimelineColumn | .. | .. | .. | long | long |

Example

Calculate counts and dcounts for users in past week, for each day in the analysis period.

let start = datetime(2017 - 08 - 01);

let end = datetime(2017 - 08 - 07);

let lookbackWindow = 3d;

let bin = 1d;

let T = datatable(UserId: string, Timestamp: datetime)

[

'Bob', datetime(2017 - 08 - 01),

'David', datetime(2017 - 08 - 01),

'David', datetime(2017 - 08 - 01),

'John', datetime(2017 - 08 - 01),

'Bob', datetime(2017 - 08 - 01),

'Ananda', datetime(2017 - 08 - 02),

'Atul', datetime(2017 - 08 - 02),

'John', datetime(2017 - 08 - 02),

'Ananda', datetime(2017 - 08 - 03),

'Atul', datetime(2017 - 08 - 03),

'Atul', datetime(2017 - 08 - 03),

'John', datetime(2017 - 08 - 03),

'Bob', datetime(2017 - 08 - 03),

'Betsy', datetime(2017 - 08 - 04),

'Bob', datetime(2017 - 08 - 05),

];

T

| evaluate sliding_window_counts(UserId, Timestamp, start, end, lookbackWindow, bin)

Output

| Timestamp | Count | dcount |

|---|---|---|

| 2017-08-01 00:00:00.0000000 | 5 | 3 |

| 2017-08-02 00:00:00.0000000 | 8 | 5 |

| 2017-08-03 00:00:00.0000000 | 13 | 5 |

| 2017-08-04 00:00:00.0000000 | 9 | 5 |

| 2017-08-05 00:00:00.0000000 | 7 | 5 |

| 2017-08-06 00:00:00.0000000 | 2 | 2 |

| 2017-08-07 00:00:00.0000000 | 1 | 1 |

13 - User Analytics

This section describes Kusto extensions (plugins) for user analytics scenarios.

| Scenario | Plugin | Details | User Experience |

|---|---|---|---|

| Counting new users over time | activity_counts_metrics | Returns counts/dcounts/new counts for each time window. Each time window is compared to all previous time windows | Kusto.Explorer: Report Gallery |

| Period-over-period: retention/churn rate and new users | activity_metrics | Returns dcount, retention/churn rate for each time window. Each time window is compared to previous time window | Kusto.Explorer: Report Gallery |

Users count and dcount over sliding window | sliding_window_counts | For each time window, returns count and dcount over a lookback period, in a sliding window manner | |

| New-users cohort: retention/churn rate and new users | new_activity_metrics | Compares between cohorts of new users (all users that were first seen in time window). Each cohort is compared to all prior cohorts. Comparison takes into account all previous time windows | Kusto.Explorer: Report Gallery |

| Active Users: distinct counts | active_users_count | Returns distinct users for each time window. A user is only considered if it appears in at least X distinct periods in a specified lookback period. | |

| User Engagement: DAU/WAU/MAU | activity_engagement | Compares between an inner time window (for example, daily) and an outer (for example, weekly) for computing engagement (for example, DAU/WAU) | Kusto.Explorer: Report Gallery |

| Sessions: count active sessions | session_count | Counts sessions, where a session is defined by a time period - a user record is considered a new session, if it hasn’t been seen in the lookback period from current record | |

| Funnels: previous and next state sequence analysis | funnel_sequence | Counts distinct users who have taken a sequence of events, and the previous or next events that led or were followed by the sequence. Useful for constructing sankey diagrams | |

| Funnels: sequence completion analysis | funnel_sequence_completion | Computes the distinct count of users that have completed a specified sequence in each time window | |

||||