Tabular operators

- 1: Join operator

- 1.1: join flavors

- 1.1.1: fullouter join

- 1.1.2: inner join

- 1.1.3: innerunique join

- 1.1.4: leftanti join

- 1.1.5: leftouter join

- 1.1.6: leftsemi join

- 1.1.7: rightanti join

- 1.1.8: rightouter join

- 1.1.9: rightsemi join

- 1.2: Broadcast join

- 1.3: Cross-cluster join

- 1.4: join operator

- 1.5: Joining within time window

- 2: Render operator

- 2.1: visualizations

- 2.1.1: Anomaly chart visualization

- 2.1.2: Area chart visualization

- 2.1.3: Bar chart visualization

- 2.1.4: Card visualization

- 2.1.5: Column chart visualization

- 2.1.6: Ladder chart visualization

- 2.1.7: Line chart visualization

- 2.1.8: Pie chart visualization

- 2.1.9: Pivot chart visualization

- 2.1.10: Plotly visualization

- 2.1.11: Scatter chart visualization

- 2.1.12: Stacked area chart visualization

- 2.1.13: Table visualization

- 2.1.14: Time chart visualization

- 2.1.15: Time pivot visualization

- 2.1.16: Treemap visualization

- 2.2: render operator

- 3: Summarize operator

- 4: macro-expand operator

- 5: project-by-names operator

- 6: as operator

- 7: consume operator

- 8: count operator

- 9: datatable operator

- 10: distinct operator

- 11: evaluate plugin operator

- 12: extend operator

- 13: externaldata operator

- 14: facet operator

- 15: find operator

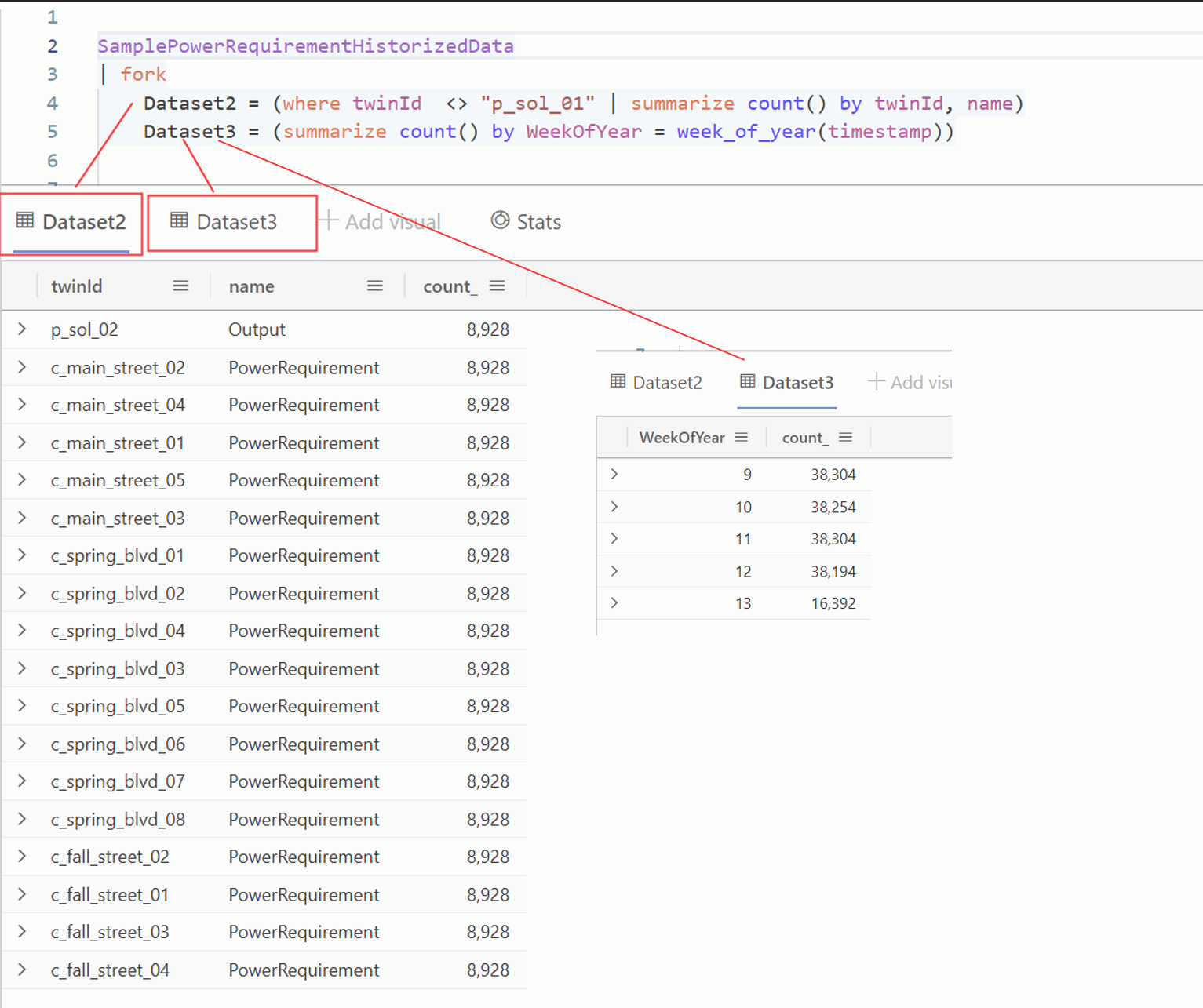

- 16: fork operator

- 17: getschema operator

- 18: invoke operator

- 19: lookup operator

- 20: mv-apply operator

- 21: mv-expand operator

- 22: parse operator

- 23: parse-kv operator

- 24: parse-where operator

- 25: partition operator

- 26: print operator

- 27: Project operator

- 28: project-away operator

- 29: project-keep operator

- 30: project-rename operator

- 31: project-reorder operator

- 32: Queries

- 33: range operator

- 34: reduce operator

- 35: sample operator

- 36: sample-distinct operator

- 37: scan operator

- 38: search operator

- 39: serialize operator

- 40: Shuffle query

- 41: sort operator

- 42: take operator

- 43: top operator

- 44: top-hitters operator

- 45: top-nested operator

- 46: union operator

- 47: where operator

1 - Join operator

1.1 - join flavors

1.1.1 - fullouter join

A fullouter join combines the effect of applying both left and right outer-joins. For columns of the table that lack a matching row, the result set contains null values. For those records that do match, a single row is produced in the result set containing fields populated from both tables.

Syntax

LeftTable | join kind=fullouter [ Hints ] RightTable on Conditions

Returns

Schema: All columns from both tables, including the matching keys.

Rows: All records from both tables with unmatched cells populated with null.

Example

This example query combines rows from both tables X and Y, filling in missing values with null where there's no match in the other table. The result lets you see every key that appears in either table.

let X = datatable(Key:string, Value1:long)

[

'a',1,

'b',2,

'b',3,

'c',4

];

let Y = datatable(Key:string, Value2:long)

[

'b',10,

'c',20,

'c',30,

'd',40

];

X | join kind=fullouter Y on Key

Output

| Key | Value1 | Key1 | Value2 |

|---|---|---|---|

| b | 3 | b | 10 |

| b | 2 | b | 10 |

| c | 4 | c | 20 |

| c | 4 | c | 30 |

| | | d | 40 |

| a | 1 | | |
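Because a fullouter join leaves one of the two key columns empty for unmatched rows, it's often convenient to merge them into a single column. The following is a minimal sketch that reuses the X and Y tables from the example above and relies on coalesce() returning the first non-empty string; the merged output column layout is an illustrative choice, not part of the join itself.

```kusto
let X = datatable(Key:string, Value1:long)
[
    'a',1,
    'b',2,
    'b',3,
    'c',4
];
let Y = datatable(Key:string, Value2:long)
[
    'b',10,
    'c',20,
    'c',30,
    'd',40
];
X
| join kind=fullouter Y on Key
// Key is empty for rows that exist only in Y; Key1 is empty for rows that exist only in X.
| project Key = coalesce(Key, Key1), Value1, Value2
```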

Related content

- Learn about other join flavors

1.1.2 - inner join

The inner join flavor is like the standard inner join from the SQL world. An output record is produced whenever a record on the left side has the same join key as the record on the right side.

Syntax

LeftTable | join kind=inner [ Hints ] RightTable on Conditions

Returns

Schema: All columns from both tables, including the matching keys.

Rows: Only matching rows from both tables.

Example

The example query combines rows from tables X and Y where the keys match, showing only the rows that exist in both tables.

let X = datatable(Key:string, Value1:long)

[

'a',1,

'b',2,

'b',3,

'k',5,

'c',4

];

let Y = datatable(Key:string, Value2:long)

[

'b',10,

'c',20,

'c',30,

'd',40,

'k',50

];

X | join kind=inner Y on Key

Output

| Key | Value1 | Key1 | Value2 |

|---|---|---|---|

| b | 3 | b | 10 |

| b | 2 | b | 10 |

| c | 4 | c | 20 |

| c | 4 | c | 30 |

| k | 5 | k | 50 |

Related content

- Learn about other join flavors

1.1.3 - innerunique join

The innerunique join flavor removes duplicate keys from the left side. This behavior ensures that the output contains a row for every combination of unique left and right keys.

By default, the innerunique join flavor is used if the kind parameter isn’t specified. This default implementation is useful in log/trace analysis scenarios, where you aim to correlate two events based on a shared correlation ID. It allows you to retrieve all instances of the phenomenon while disregarding duplicate trace records that contribute to the correlation.

Syntax

LeftTable | join kind=innerunique [ Hints ] RightTable on Conditions

Returns

Schema: All columns from both tables, including the matching keys.

Rows: All deduplicated rows from the left table that match rows from the right table.

Examples

Review the examples and run them in your Data Explorer query page.

Use the default innerunique join

The example query combines rows from tables X and Y where the keys match, after deduplicating the left side on the join key.

let X = datatable(Key:string, Value1:long)

[

'a',1,

'b',2,

'b',3,

'c',4

];

let Y = datatable(Key:string, Value2:long)

[

'b',10,

'c',20,

'c',30,

'd',40

];

X | join Y on Key

Output

| Key | Value1 | Key1 | Value2 |

|---|---|---|---|

| b | 2 | b | 10 |

| c | 4 | c | 20 |

| c | 4 | c | 30 |

The query executed the default join, which is an inner join after deduplicating the left side based on the join key. The deduplication keeps only the first record. The resulting left side of the join after deduplication is:

| Key | Value1 |

|---|---|

| a | 1 |

| b | 2 |

| c | 4 |
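The deduplication step described above can be approximated explicitly. The following sketch, which reuses the X and Y tables from the example, keeps one arbitrary row per key with take_any() and then runs a regular inner join. It illustrates the semantics only and isn't a description of how the engine implements innerunique.

```kusto
let X = datatable(Key:string, Value1:long)
[
    'a',1,
    'b',2,
    'b',3,
    'c',4
];
let Y = datatable(Key:string, Value2:long)
[
    'b',10,
    'c',20,
    'c',30,
    'd',40
];
X
| summarize Value1 = take_any(Value1) by Key   // keep one arbitrary left-side row per key
| join kind=inner Y on Key
```

As with innerunique itself, which of the two 'b' rows survives isn't guaranteed.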

Two possible outputs from innerunique join

let t1 = datatable(key: long, value: string)

[

1, "val1.1",

1, "val1.2"

];

let t2 = datatable(key: long, value: string)

[

1, "val1.3",

1, "val1.4"

];

t1

| join kind = innerunique

t2

on key

Output

| key | value | key1 | value1 |

|---|---|---|---|

| 1 | val1.1 | 1 | val1.3 |

| 1 | val1.1 | 1 | val1.4 |

The same query can also produce the following output, depending on which of the duplicate left-side rows is kept:

Output

| key | value | key1 | value1 |

|---|---|---|---|

| 1 | val1.2 | 1 | val1.3 |

| 1 | val1.2 | 1 | val1.4 |

- Kusto is optimized to push filters that come after the join towards the appropriate join side, left or right, when possible.

- Sometimes, the flavor used is innerunique and the filter is propagated to the left side of the join. The flavor is automatically propagated and the keys that apply to that filter appear in the output.

- Use the previous example and add the filter where value == "val1.2". The query then always gives the second result and never the first result for these datasets:

let t1 = datatable(key: long, value: string)

[

1, "val1.1",

1, "val1.2"

];

let t2 = datatable(key: long, value: string)

[

1, "val1.3",

1, "val1.4"

];

t1

| join kind = innerunique

t2

on key

| where value == "val1.2"

Output

| key | value | key1 | value1 |

|---|---|---|---|

| 1 | val1.2 | 1 | val1.3 |

| 1 | val1.2 | 1 | val1.4 |
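If you need the same left-side row to be kept on every run, one option is to deduplicate explicitly before joining. The following sketch assumes a hypothetical seq column (not part of the original example) that orders the left-side rows, keeps the row with the lowest seq for each key by using arg_min(), and then uses a regular inner join.

```kusto
let t1 = datatable(key: long, value: string, seq: long)   // seq is a hypothetical ordering column
[
    1, "val1.1", 1,
    1, "val1.2", 2
];
let t2 = datatable(key: long, value: string)
[
    1, "val1.3",
    1, "val1.4"
];
t1
| summarize arg_min(seq, *) by key   // keep the row with the lowest seq for each key
| project-away seq
| join kind=inner t2 on key
```

With this data, the val1.1 row is the one that takes part in the join.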

Get extended sign-in activities

Get extended activities from a sign-in log in which some entries mark the start and end of an activity.

let Events = MyLogTable | where type=="Event" ;

Events

| where Name == "Start"

| project Name, City, ActivityId, StartTime=timestamp

| join (Events

| where Name == "Stop"

| project StopTime=timestamp, ActivityId)

on ActivityId

| project City, ActivityId, StartTime, StopTime, Duration = StopTime - StartTime

The following variant renames the join key on each side and matches the two columns with an explicit $left and $right condition:

let Events = MyLogTable | where type=="Event" ;

Events

| where Name == "Start"

| project Name, City, ActivityIdLeft = ActivityId, StartTime=timestamp

| join (Events

| where Name == "Stop"

| project StopTime=timestamp, ActivityIdRight = ActivityId)

on $left.ActivityIdLeft == $right.ActivityIdRight

| project City, ActivityId = ActivityIdLeft, StartTime, StopTime, Duration = StopTime - StartTime

Related content

- Learn about other join flavors

1.1.4 - leftanti join

The leftanti join flavor returns all records from the left side that don’t match any record from the right side. The anti join models the “NOT IN” query.

Syntax

LeftTable | join kind=leftanti [ Hints ] RightTable on Conditions

Returns

Schema: All columns from the left table.

Rows: All records from the left table that don’t match records from the right table.

Example

The example query returns the rows from table X that have no matching key in table Y, effectively filtering out any rows in X that have corresponding rows in Y.

let X = datatable(Key:string, Value1:long)

[

'a',1,

'b',2,

'b',3,

'c',4

];

let Y = datatable(Key:string, Value2:long)

[

'b',10,

'c',20,

'c',30,

'd',40

];

X | join kind=leftanti Y on Key

Output

| Key | Value1 |

|---|---|

| a | 1 |
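To make the "NOT IN" analogy concrete, the following sketch expresses the same filter with the !in operator and a tabular expression instead of a join. It reuses the X and Y tables from the example and is an illustration of the equivalence for this data, not a recommendation of one form over the other.

```kusto
let X = datatable(Key:string, Value1:long)
[
    'a',1,
    'b',2,
    'b',3,
    'c',4
];
let Y = datatable(Key:string, Value2:long)
[
    'b',10,
    'c',20,
    'c',30,
    'd',40
];
X
| where Key !in ((Y | project Key))   // keep only rows whose Key doesn't appear in Y
```

For these tables, the query returns the single row with key a, matching the leftanti output.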

Related content

- Learn about other join flavors

1.1.5 - leftouter join

The leftouter join flavor returns all the records from the left side table and only matching records from the right side table.

Syntax

LeftTable | join kind=leftouter [ Hints ] RightTable on Conditions

Returns

Schema: All columns from both tables, including the matching keys.

Rows: All records from the left table and only matching rows from the right table.

Example

The result of a left outer join for tables X and Y always contains all records of the left table (X), even if the join condition doesn’t find any matching record in the right table (Y).

let X = datatable(Key:string, Value1:long)

[

'a',1,

'b',2,

'b',3,

'c',4

];

let Y = datatable(Key:string, Value2:long)

[

'b',10,

'c',20,

'c',30,

'd',40

];

X | join kind=leftouter Y on Key

Output

| Key | Value1 | Key1 | Value2 |

|---|---|---|---|

| a | 1 | | |

| b | 2 | b | 10 |

| b | 3 | b | 10 |

| c | 4 | c | 20 |

| c | 4 | c | 30 |

Related content

- Learn about other join flavors

1.1.6 - leftsemi join

The leftsemi join flavor returns all records from the left side that match a record from the right side. Only columns from the left side are returned.

Syntax

LeftTable | join kind=leftsemi [ Hints ] RightTable on Conditions

Returns

Schema: All columns from the left table.

Rows: All records from the left table that match records from the right table.

Example

This query filters and returns only those rows from table X that have a matching key in table Y.

let X = datatable(Key:string, Value1:long)

[

'a',1,

'b',2,

'b',3,

'c',4

];

let Y = datatable(Key:string, Value2:long)

[

'b',10,

'c',20,

'c',30,

'd',40

];

X | join kind=leftsemi Y on Key

Output

| Key | Value1 |

|---|---|

| b | 2 |

| b | 3 |

| c | 4 |

Related content

- Learn about other join flavors

1.1.7 - rightanti join

The rightanti join flavor returns all records from the right side that don’t match any record from the left side. The anti join models the “NOT IN” query.

Syntax

LeftTable | join kind=rightanti [ Hints ] RightTable on Conditions

Returns

Schema: All columns from the right table.

Rows: All records from the right table that don’t match records from the left table.

Example

This query filters and returns only those rows from table Y that do not have a matching key in table X.

let X = datatable(Key:string, Value1:long)

[

'a',1,

'b',2,

'b',3,

'c',4

];

let Y = datatable(Key:string, Value2:long)

[

'b',10,

'c',20,

'c',30,

'd',40

];

X | join kind=rightanti Y on Key

Output

| Key | Value2 |

|---|---|

| d | 40 |

Related content

- Learn about other join flavors

1.1.8 - rightouter join

The rightouter join flavor returns all the records from the right side and only matching records from the left side. This join flavor resembles the leftouter join flavor, but the treatment of the tables is reversed.

Syntax

LeftTable | join kind=rightouter [ Hints ] RightTable on Conditions

Returns

Schema: All columns from both tables, including the matching keys.

Rows: All records from the right table and only matching rows from the left table.

Example

This query returns all rows from table Y and any matching rows from table X, filling in NULL values where there is no match from X.

let X = datatable(Key:string, Value1:long)

[

'a',1,

'b',2,

'b',3,

'c',4

];

let Y = datatable(Key:string, Value2:long)

[

'b',10,

'c',20,

'c',30,

'd',40

];

X | join kind=rightouter Y on Key

Output

| Key | Value1 | Key1 | Value2 |

|---|---|---|---|

| b | 2 | b | 10 |

| b | 3 | b | 10 |

| c | 4 | c | 20 |

| c | 4 | c | 30 |

| | | d | 40 |

Related content

- Learn about other join flavors

1.1.9 - rightsemi join

The rightsemi join flavor returns all records from the right side that match a record from the left side. Only columns from the right side are returned.

Syntax

LeftTable | join kind=rightsemi [ Hints ] RightTable on Conditions

Returns

Schema: All columns from the right table.

Rows: All records from the right table that match records from the left table.

Example

This query filters and returns only those rows from table Y that have a matching key in table X.

let X = datatable(Key:string, Value1:long)

[

'a',1,

'b',2,

'b',3,

'c',4

];

let Y = datatable(Key:string, Value2:long)

[

'b',10,

'c',20,

'c',30,

'd',40

];

X | join kind=rightsemi Y on Key

Output

| Key | Value2 |

|---|---|

| b | 10 |

| c | 20 |

| c | 30 |

Related content

- Learn about other join flavors

1.2 - Broadcast join

Today, regular joins are executed on a single cluster node (or, in an Eventhouse, on a single Eventhouse node). Broadcast join is a join execution strategy that distributes the join over the cluster or Eventhouse nodes. This strategy is useful when the left side of the join is small (up to several tens of MBs). In this case, a broadcast join is more performant than a regular join.

Use the lookup operator if the right side is smaller than the left side. The lookup operator runs in broadcast strategy by default when the right side is smaller than the left.
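As a minimal sketch of that recommendation, the following query enriches a larger left table with a small right table by using lookup. The FactTable and DimTable names and their contents are hypothetical and only serve to make the query self-contained.

```kusto
// Hypothetical data: a larger fact table and a small dimension table.
let FactTable = datatable(Key:string, Messages:string)
[
    'a', 'm1',
    'a', 'm2',
    'b', 'm3',
    'c', 'm4'
];
let DimTable = datatable(Key:string, Region:string)
[
    'a', 'East',
    'b', 'West'
];
FactTable
| lookup kind=leftouter (DimTable) on Key   // the small table goes on the right side
```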

If the left side of the join is a small dataset, you can run the join in broadcast mode by using the following syntax (hint.strategy = broadcast):

leftSide

| join hint.strategy = broadcast (factTable) on key

The performance improvement is more noticeable in scenarios where the join is followed by other operators such as summarize. See the following query for example:

leftSide

| join hint.strategy = broadcast (factTable) on Key

| summarize dcount(Messages) by Timestamp, Key

1.3 - Cross-cluster join

A cross-cluster join involves joining data from datasets that reside in different clusters.

In a cross-cluster join, the query can be executed in three possible locations, each with a specific designation for reference throughout this document:

- Local cluster: The cluster to which the request is sent, which is also known as the cluster hosting the database in context.

- Left cluster: The cluster hosting the data on the left side of the join operation.

- Right cluster: The cluster hosting the data on the right side of the join operation.

The cluster that runs the query fetches the data from the other cluster.

Syntax

[ cluster(ClusterName).database(DatabaseName).]LeftTable | …| join [ hint.remote=Strategy ] (

[ cluster(ClusterName).database(DatabaseName).]RightTable | …

) on Conditions

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| LeftTable | string | ✔️ | The left table or tabular expression whose rows are to be merged. Denoted as $left. |

| Strategy | string | | Determines the cluster on which to execute the join. Supported values are: left, right, local, and auto. For more information, see Strategies. |

| ClusterName | string | | If the data for the join resides outside of the local cluster, use the cluster() function to specify the cluster. |

| DatabaseName | string | | If the data for the join resides outside of the local database context, use the database() function to specify the database. |

| RightTable | string | ✔️ | The right table or tabular expression whose rows are to be merged. Denoted as $right. |

| Conditions | string | ✔️ | Determines how rows from LeftTable are matched with rows from RightTable. If the columns you want to match have the same name in both tables, use the syntax ON ColumnName. Otherwise, use the syntax ON $left.LeftColumn == $right.RightColumn. To specify multiple conditions, you can either use the “and” keyword or separate them with commas. If you use commas, the conditions are evaluated using the “and” logical operator. |

Strategies

The following list explains the supported values for the Strategy parameter:

- left: Execute the join on the cluster of the left table, or left cluster.

- right: Execute the join on the cluster of the right table, or right cluster.

- local: Execute the join on the current cluster, or local cluster.

- auto: (Default) Kusto makes the remoting decision.

How the auto strategy works

By default, the auto strategy determines where the cross-cluster join is executed based on the following rules:

- If one of the tables is hosted in the local cluster, then the join is performed on the local cluster. For example, with the auto strategy, this query is executed on the local cluster:

T | ... | join (cluster("B").database("DB").T2 | ...) on Col1

- If both tables are hosted outside of the local cluster, then the join is performed on the right cluster. For example, assuming neither cluster is the local cluster, the join would be executed on the right cluster:

cluster("B").database("DB").T | ... | join (cluster("C").database("DB2").T2 | ...) on Col1

Performance considerations

For optimal performance, we recommend running the query on the cluster that contains the largest table.

In the following example, if the dataset produced by T | ... is smaller than the one produced by cluster("B").database("DB").T2 | ..., then it would be more efficient to execute the join operation on cluster B (in this case, the right cluster) instead of on the local cluster.

T | ... | join (cluster("B").database("DB").T2 | ...) on Col1

You can rewrite the query to use hint.remote=right to optimize the performance. In this way, the join operation is performed on the right cluster, even if the left table is in the local cluster.

T | ... | join hint.remote=right (cluster("B").database("DB").T2 | ...) on Col1

1.4 - join operator

Merge the rows of two tables to form a new table by matching values of the specified columns from each table.

Kusto Query Language (KQL) offers many kinds of joins that each affect the schema and rows in the resultant table in different ways. For example, if you use an inner join, the table has the same columns as the left table, plus the columns from the right table. For best performance, if one table is always smaller than the other, use it as the left side of the join operator.

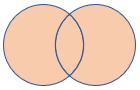

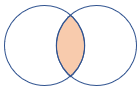

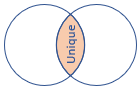

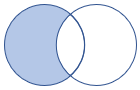

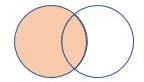

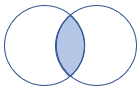

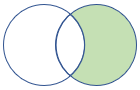

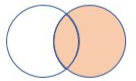

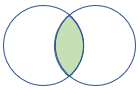

The following image provides a visual representation of the operation performed by each join. The color of the shading represents the columns returned, and the areas shaded represent the rows returned.

Syntax

LeftTable | join [ kind = JoinFlavor ] [ Hints ] (RightTable) on Conditions

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| LeftTable | string | ✔️ | The left table or tabular expression, sometimes called the outer table, whose rows are to be merged. Denoted as $left. |

| JoinFlavor | string | | The type of join to perform: innerunique, inner, leftouter, rightouter, fullouter, leftanti, rightanti, leftsemi, rightsemi. The default is innerunique. For more information about join flavors, see Returns. |

| Hints | string | | Zero or more space-separated join hints in the form of Name = Value that control the behavior of the row-match operation and execution plan. For more information, see Hints. |

| RightTable | string | ✔️ | The right table or tabular expression, sometimes called the inner table, whose rows are to be merged. Denoted as $right. |

| Conditions | string | ✔️ | Determines how rows from LeftTable are matched with rows from RightTable. If the columns you want to match have the same name in both tables, use the syntax ON ColumnName. Otherwise, use the syntax ON $left.LeftColumn == $right.RightColumn. To specify multiple conditions, you can either use the “and” keyword or separate them with commas. If you use commas, the conditions are evaluated using the “and” logical operator. |
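To illustrate the Conditions parameter, the following sketch joins two tables whose key columns have different names, using two comma-separated $left and $right conditions. The Orders and Customers tables and their columns are hypothetical.

```kusto
// Hypothetical tables whose key columns are named differently on each side.
let Orders = datatable(CustomerId:long, Region:string, Amount:real)
[
    1, "EU", 10.0,
    2, "US", 20.0
];
let Customers = datatable(Id:long, Area:string, Name:string)
[
    1, "EU", "Contoso",
    2, "EU", "Fabrikam"
];
Orders
| join kind=inner (Customers)
    on $left.CustomerId == $right.Id, $left.Region == $right.Area   // both conditions must hold
```

Only the first order matches, because the second order agrees with a customer on the ID but not on the region.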

Hints

| Hint key | Values | Description |

|---|---|---|

| hint.remote | auto, left, local, right | Determines the cluster on which a cross-cluster join is executed. See Cross-cluster join. |

| hint.strategy | broadcast | Specifies the way to share the query load on cluster nodes. See Broadcast join. |

| hint.shufflekey | <key> | The shufflekey query shares the query load on cluster nodes, using a key to partition data. See Shuffle query. |

| hint.strategy | shuffle | The shuffle strategy query shares the query load on cluster nodes, where each node processes one partition of the data. See Shuffle query. |
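As a short sketch of where a hint goes in the join syntax, the following query places hint.shufflekey between the join kind and the right table. The tables are the small X and Y datatables used in the join flavor examples; on data this small the hint isn't expected to change anything observable, so treat this as a syntax illustration only.

```kusto
let X = datatable(Key:string, Value1:long)
[
    'a',1,
    'b',2,
    'c',4
];
let Y = datatable(Key:string, Value2:long)
[
    'b',10,
    'c',20,
    'd',40
];
X
| join kind=inner hint.shufflekey = Key (Y) on Key
// Alternatively, hint.strategy = shuffle can be used in the same position.
```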

Returns

The return schema and rows depend on the join flavor. The join flavor is specified with the kind keyword. The following table shows the supported join flavors. To see examples for a specific join flavor, select the link in the Join flavor column.

| Join flavor | Returns | Illustration |

|---|---|---|

| innerunique (default) | Inner join with left side deduplication. Schema: All columns from both tables, including the matching keys. Rows: All deduplicated rows from the left table that match rows from the right table. | :::image type="icon" source="media/joinoperator/join-innerunique.png" border="false"::: |

| inner | Standard inner join. Schema: All columns from both tables, including the matching keys. Rows: Only matching rows from both tables. | :::image type="icon" source="media/joinoperator/join-inner.png" border="false"::: |

| leftouter | Left outer join. Schema: All columns from both tables, including the matching keys. Rows: All records from the left table and only matching rows from the right table. | :::image type="icon" source="media/joinoperator/join-leftouter.png" border="false"::: |

| rightouter | Right outer join. Schema: All columns from both tables, including the matching keys. Rows: All records from the right table and only matching rows from the left table. | :::image type="icon" source="media/joinoperator/join-rightouter.png" border="false"::: |

| fullouter | Full outer join. Schema: All columns from both tables, including the matching keys. Rows: All records from both tables with unmatched cells populated with null. | :::image type="icon" source="media/joinoperator/join-fullouter.png" border="false"::: |

| leftsemi | Left semi join. Schema: All columns from the left table. Rows: All records from the left table that match records from the right table. | :::image type="icon" source="media/joinoperator/join-leftsemi.png" border="false"::: |

| leftanti, anti, leftantisemi | Left anti join and semi variant. Schema: All columns from the left table. Rows: All records from the left table that don't match records from the right table. | :::image type="icon" source="media/joinoperator/join-leftanti.png" border="false"::: |

| rightsemi | Right semi join. Schema: All columns from the right table. Rows: All records from the right table that match records from the left table. | :::image type="icon" source="media/joinoperator/join-rightsemi.png" border="false"::: |

| rightanti, rightantisemi | Right anti join and semi variant. Schema: All columns from the right table. Rows: All records from the right table that don't match records from the left table. | :::image type="icon" source="media/joinoperator/join-rightanti.png" border="false"::: |

Cross-join

KQL doesn’t provide a cross-join flavor. However, you can achieve a cross-join effect by using a placeholder key approach.

In the following example, a placeholder key is added to both tables and then used for the inner join operation, effectively achieving a cross-join-like behavior:

X | extend placeholder=1 | join kind=inner (Y | extend placeholder=1) on placeholder
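A self-contained version of the placeholder approach might look like the following sketch. The Colors and Sizes tables are hypothetical, and the final project-away step, which removes the two placeholder columns from the result, is an optional addition.

```kusto
// Hypothetical tables with no shared key.
let Colors = datatable(Color:string) ['red', 'blue'];
let Sizes = datatable(Size:string) ['S', 'M', 'L'];
Colors
| extend placeholder = 1
| join kind=inner (Sizes | extend placeholder = 1) on placeholder
| project-away placeholder, placeholder1   // the right-side key column is renamed placeholder1
```

The result contains every combination of the two colors and three sizes, six rows in total.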

1.5 - Joining within time window

It’s often useful to join between two large datasets on some high-cardinality key, such as an operation ID or a session ID, and further limit the right-hand-side ($right) records that need to match up with each left-hand-side ($left) record by adding a restriction on the “time-distance” between datetime columns on the left and on the right.

This operation differs from the usual join operation: in addition to the equi-join on the high-cardinality key between the left and right datasets, the system can apply a distance function to the datetime columns and use it to considerably speed up the join.

Example to identify event sequences without time window

To identify event sequences within a relatively small time window, this example uses a table T with the following schema:

- SessionId: A column of type string with correlation IDs.

- EventType: A column of type string that identifies the event type of the record.

- Timestamp: A column of type datetime that indicates when the event described by the record happened.

| SessionId | EventType | Timestamp |

|---|---|---|

| 0 | A | 2017-10-01T00:00:00Z |

| 0 | B | 2017-10-01T00:01:00Z |

| 1 | B | 2017-10-01T00:02:00Z |

| 1 | A | 2017-10-01T00:03:00Z |

| 3 | A | 2017-10-01T00:04:00Z |

| 3 | B | 2017-10-01T00:10:00Z |

The following query creates the dataset and then identifies all the session IDs in which event type A was followed by an event type B within a 1min time window.

let T = datatable(SessionId:string, EventType:string, Timestamp:datetime)

[

'0', 'A', datetime(2017-10-01 00:00:00),

'0', 'B', datetime(2017-10-01 00:01:00),

'1', 'B', datetime(2017-10-01 00:02:00),

'1', 'A', datetime(2017-10-01 00:03:00),

'3', 'A', datetime(2017-10-01 00:04:00),

'3', 'B', datetime(2017-10-01 00:10:00),

];

T

| where EventType == 'A'

| project SessionId, Start=Timestamp

| join kind=inner

(

T

| where EventType == 'B'

| project SessionId, End=Timestamp

) on SessionId

| where (End - Start) between (0min .. 1min)

| project SessionId, Start, End

Output

| SessionId | Start | End |

|---|---|---|

| 0 | 2017-10-01 00:00:00.0000000 | 2017-10-01 00:01:00.0000000 |

Example optimized with time window

To optimize this query, we can rewrite it to account for the time window. The time window is expressed as a join key. Rewrite the query so that the datetime values are "discretized" into buckets whose size is half the size of the time window. Use equi-join to compare the bucket IDs.

The query finds pairs of events within the same session (SessionId) where an ‘A’ event is followed by a ‘B’ event within 1 minute. It projects the session ID, the start time of the ‘A’ event, and the end time of the ‘B’ event.

let T = datatable(SessionId:string, EventType:string, Timestamp:datetime)

[

'0', 'A', datetime(2017-10-01 00:00:00),

'0', 'B', datetime(2017-10-01 00:01:00),

'1', 'B', datetime(2017-10-01 00:02:00),

'1', 'A', datetime(2017-10-01 00:03:00),

'3', 'A', datetime(2017-10-01 00:04:00),

'3', 'B', datetime(2017-10-01 00:10:00),

];

let lookupWindow = 1min;

let lookupBin = lookupWindow / 2.0;

T

| where EventType == 'A'

| project SessionId, Start=Timestamp, TimeKey = bin(Timestamp, lookupBin)

| join kind=inner

(

T

| where EventType == 'B'

| project SessionId, End=Timestamp,

TimeKey = range(bin(Timestamp-lookupWindow, lookupBin),

bin(Timestamp, lookupBin),

lookupBin)

| mv-expand TimeKey to typeof(datetime)

) on SessionId, TimeKey

| where (End - Start) between (0min .. lookupWindow)

| project SessionId, Start, End

Output

| SessionId | Start | End |

|---|---|---|

| 0 | 2017-10-01 00:00:00.0000000 | 2017-10-01 00:01:00.0000000 |
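To see which bucket keys the right side of the query above generates, the following sketch runs the same range() and mv-expand steps for a single B event. It uses the End value from session 0 and the same lookupWindow and lookupBin definitions; nothing else is assumed.

```kusto
let lookupWindow = 1min;
let lookupBin = lookupWindow / 2.0;   // 30 seconds
print End = datetime(2017-10-01 00:01:00)
| extend TimeKey = range(bin(End - lookupWindow, lookupBin),
                         bin(End, lookupBin),
                         lookupBin)
| mv-expand TimeKey to typeof(datetime)   // one row per candidate bucket
```

The event expands into three rows with TimeKey values 00:00:00, 00:00:30, and 00:01:00. The matching A event at 00:00:00 falls into the first of these buckets, which is why the equi-join on SessionId and TimeKey finds the pair.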

5 million data query

The next query emulates an extensive dataset of 5M records and approximately 1M Session IDs and runs the query with the time window technique.

let T = range x from 1 to 5000000 step 1

| extend SessionId = rand(1000000), EventType = rand(3), Time=datetime(2017-01-01)+(x * 10ms)

| extend EventType = case(EventType < 1, "A",

EventType < 2, "B",

"C");

let lookupWindow = 1min;

let lookupBin = lookupWindow / 2.0;

T

| where EventType == 'A'

| project SessionId, Start=Time, TimeKey = bin(Time, lookupBin)

| join kind=inner

(

T

| where EventType == 'B'

| project SessionId, End=Time,

TimeKey = range(bin(Time-lookupWindow, lookupBin),

bin(Time, lookupBin),

lookupBin)

| mv-expand TimeKey to typeof(datetime)

) on SessionId, TimeKey

| where (End - Start) between (0min .. lookupWindow)

| project SessionId, Start, End

| count

Output

| Count |

|---|

| 3344 |

2 - Render operator

2.1 - visualizations

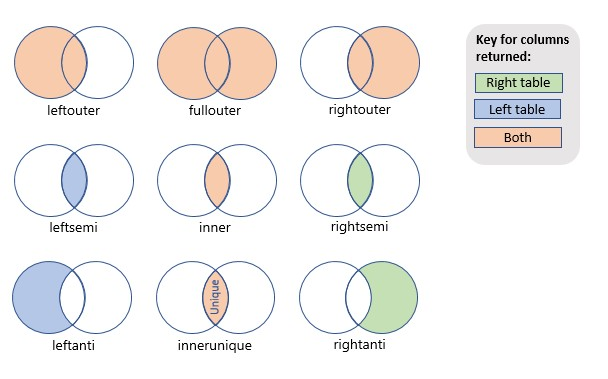

2.1.1 - Anomaly chart visualization

The anomaly chart visualization is similar to a timechart, but highlights anomalies using the series_decompose_anomalies function.

Syntax

T | render anomalychart [with ( propertyName = propertyValue [, …])]

Parameters

| Name | Type | Required | Description |

|---|---|---|---|

| T | string | ✔️ | Input table name. |

| propertyName, propertyValue | string | | A comma-separated list of key-value property pairs. See supported properties. |

Supported properties

All properties are optional.

| PropertyName | PropertyValue |

|---|---|

| accumulate | Whether the value of each measure gets added to all its predecessors (true or false). |

| legend | Whether to display a legend or not (visible or hidden). |

| series | Comma-delimited list of columns whose combined per-record values define the series that record belongs to. |

| ymin | The minimum value to be displayed on Y-axis. |

| ymax | The maximum value to be displayed on Y-axis. |

| title | The title of the visualization (of type string). |

| xaxis | How to scale the x-axis (linear or log). |

| xcolumn | Which column in the result is used for the x-axis. |

| xtitle | The title of the x-axis (of type string). |

| yaxis | How to scale the y-axis (linear or log). |

| ycolumns | Comma-delimited list of columns that consist of the values provided per value of the x column. |

| ysplit | How to split the visualization into multiple y-axis values. For more information, see Multiple y-axes. |

| ytitle | The title of the y-axis (of type string). |

| anomalycolumns | Comma-delimited list of columns that are considered anomaly series and displayed as points on the chart. |

ysplit property

This visualization supports splitting into multiple y-axis values. The supported values of this property are:

| ysplit | Description |

|---|---|

| none | A single y-axis is displayed for all series data. (Default) |

| axes | A single chart is displayed with multiple y-axes (one per series). |

| panels | One chart is rendered for each ycolumn value. Maximum five panels. |

Example

The example in this section shows how to use the syntax to help you get started.

let min_t = datetime(2017-01-05);

let max_t = datetime(2017-02-03 22:00);

let dt = 2h;

demo_make_series2

| make-series num=avg(num) on TimeStamp from min_t to max_t step dt by sid

| where sid == 'TS1' // select a single time series for a cleaner visualization

| extend (anomalies, score, baseline) = series_decompose_anomalies(num, 1.5, -1, 'linefit')

| render anomalychart with (anomalycolumns=anomalies, title='Web app. traffic of a month, anomalies') // anomalycolumns=anomalies renders the anomalies as bold points on the series chart.

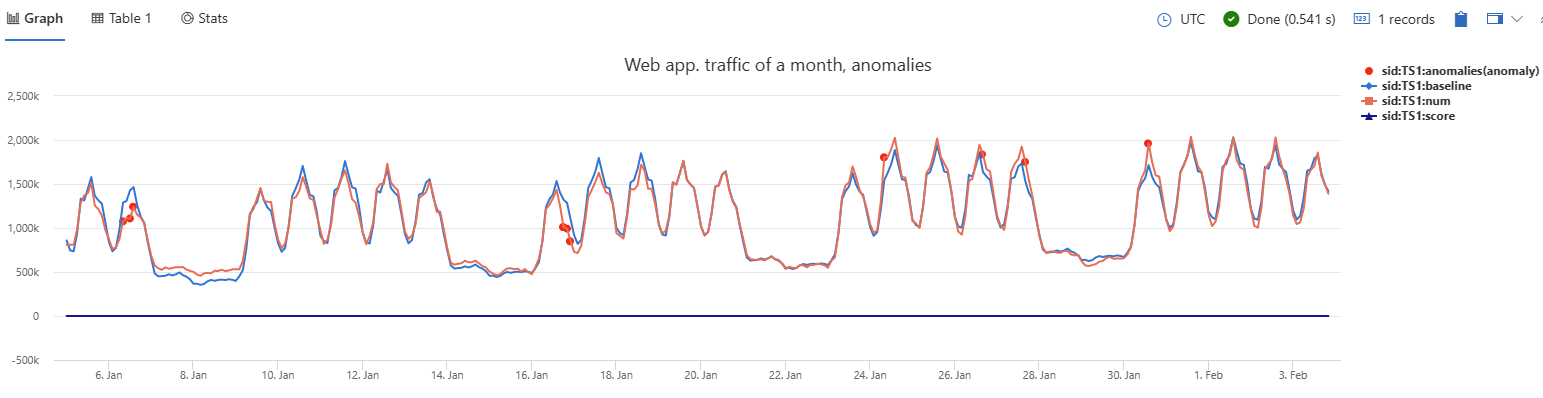

2.1.2 - Area chart visualization

The area chart visual shows a time-series relationship. The first column of the query should be numeric and is used as the x-axis. Other numeric columns are the y-axes. Unlike line charts, area charts also visually represent volume. Area charts are ideal for indicating the change among different datasets.

Syntax

T | render areachart [with (propertyName = propertyValue [, …])]

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| T | string | ✔️ | Input table name. |
| propertyName, propertyValue | string | | A comma-separated list of key-value property pairs. See supported properties. |

Supported properties

All properties are optional.

| PropertyName | PropertyValue |
|---|---|
| accumulate | Whether the value of each measure gets added to all its predecessors. (true or false) |
| kind | Further elaboration of the visualization kind. For more information, see kind property. |
| legend | Whether to display a legend or not (visible or hidden). |
| series | Comma-delimited list of columns whose combined per-record values define the series that record belongs to. |
| ymin | The minimum value to be displayed on Y-axis. |
| ymax | The maximum value to be displayed on Y-axis. |
| title | The title of the visualization (of type string). |
| xaxis | How to scale the x-axis (linear or log). |
| xcolumn | Which column in the result is used for the x-axis. |
| xtitle | The title of the x-axis (of type string). |
| yaxis | How to scale the y-axis (linear or log). |
| ycolumns | Comma-delimited list of columns that consist of the values provided per value of the x column. |
| ysplit | How to split the y-axis values for multiple visualizations. |
| ytitle | The title of the y-axis (of type string). |

ysplit property

This visualization supports splitting into multiple y-axis values:

| ysplit | Description |
|---|---|
| none | A single y-axis is displayed for all series data. (Default) |
| axes | A single chart is displayed with multiple y-axes (one per series). |
| panels | One chart is rendered for each ycolumn value. Maximum five panels. |

kind property

This visualization can be further elaborated by providing the kind property.

The supported values of this property are:

| kind value | Description |
|---|---|
| default | Each “area” stands on its own. |
| unstacked | Same as default. |
| stacked | Stack “areas” one atop the other. |
| stacked100 | Stack “areas” one atop the other and stretch each stack to the same height as the others. |

Examples

The examples in this section show how to use the syntax to help you get started.

Simple area chart

The following example shows a basic area chart visualization.

demo_series3

| render areachart

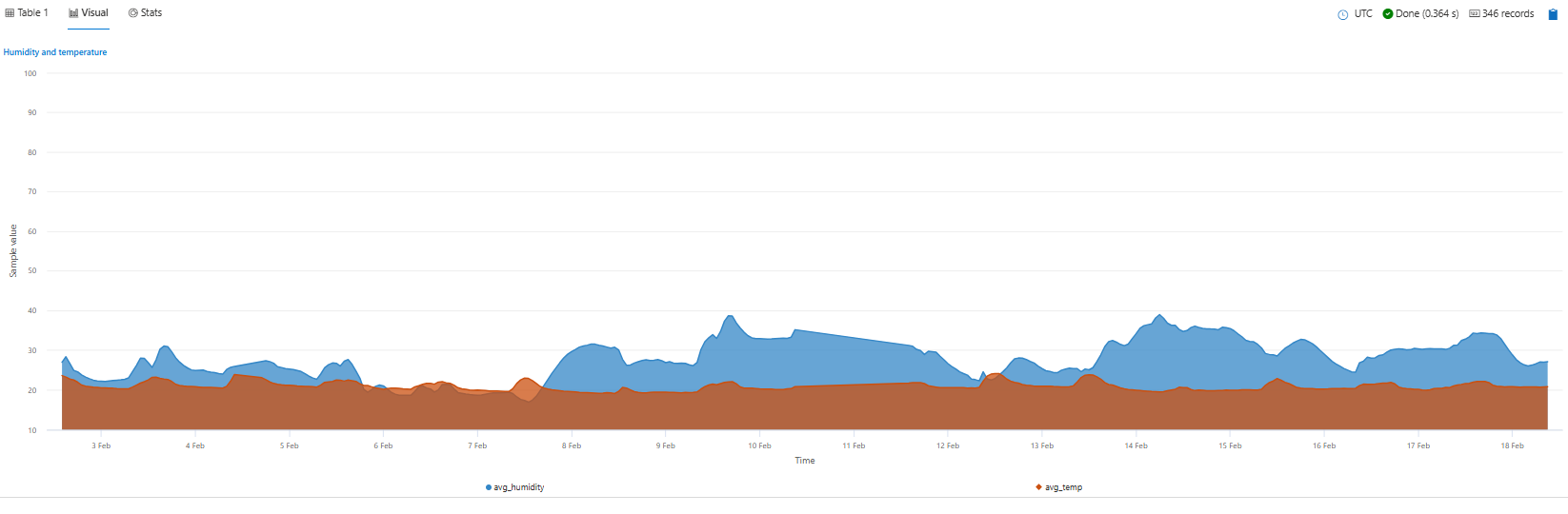

Area chart using properties

The following example shows an area chart using multiple property settings.

OccupancyDetection

| summarize avg_temp= avg(Temperature), avg_humidity= avg(Humidity) by bin(Timestamp, 1h)

| render areachart

with (

kind = unstacked,

legend = visible,

ytitle ="Sample value",

ymin = 10,

ymax =100,

xtitle = "Time",

title ="Humidity and temperature"

)

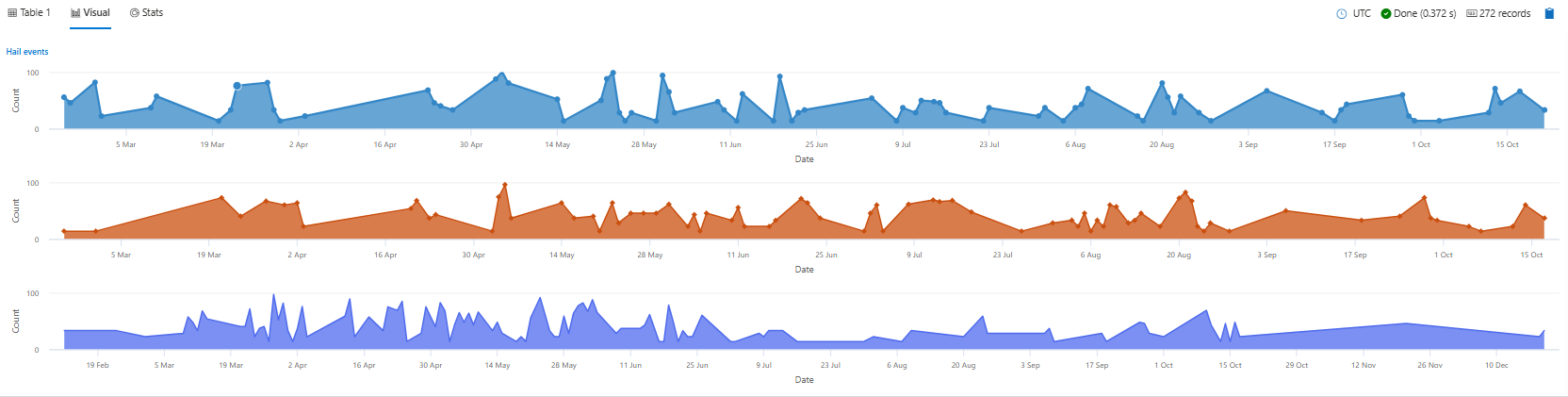

Area chart using split panels

The following example shows an area chart using split panels. In this example, the ysplit property is set to panels.

StormEvents

| where State in ("TEXAS", "NEBRASKA", "KANSAS") and EventType == "Hail"

| summarize count=count() by State, bin(StartTime, 1d)

| render areachart

with (

ysplit= panels,

legend = visible,

ycolumns=count,

yaxis =log,

ytitle ="Count",

ymin = 0,

ymax =100,

xaxis = linear,

xcolumn = StartTime,

xtitle = "Date",

title ="Hail events"

)
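The kind property described earlier can be tried without any sample database. The following is a minimal sketch, assuming a client that supports render areachart; the inline table and its column names (Hour, Reads, Writes) are hypothetical and exist only for illustration.

```kusto
// Hypothetical inline data: the first column is numeric and becomes the x-axis.
datatable(Hour:long, Reads:long, Writes:long)
[
    0, 10, 4,
    1, 12, 6,
    2, 9, 7,
    3, 15, 5
]
// kind=stacked asks the client to stack the Reads and Writes areas.
| render areachart with (kind=stacked, title="Reads and writes per hour")
```

Changing kind to unstacked or stacked100 in the same query is a quick way to compare the three layouts on identical data.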

2.1.3 - Bar chart visualization

The bar chart visual needs a minimum of two columns in the query result. By default, the first column is used as the y-axis. This column can contain text, datetime, or numeric data types. The other columns are used as the x-axis and contain numeric data types to be displayed as horizontal lines. Bar charts are used mainly for comparing numeric and nominal discrete values, where the length of each line represents its value.

Syntax

T | render barchart [with (propertyName = propertyValue [, …])]

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| T | string | ✔️ | Input table name. |
| propertyName, propertyValue | string | | A comma-separated list of key-value property pairs. See supported properties. |

Supported properties

All properties are optional.

| PropertyName | PropertyValue |
|---|---|
| accumulate | Whether the value of each measure gets added to all its predecessors (true or false). |
| kind | Further elaboration of the visualization kind. For more information, see kind property. |
| legend | Whether to display a legend or not (visible or hidden). |
| series | Comma-delimited list of columns whose combined per-record values define the series that record belongs to. |
| ymin | The minimum value to be displayed on Y-axis. |
| ymax | The maximum value to be displayed on Y-axis. |
| title | The title of the visualization (of type string). |
| xaxis | How to scale the x-axis (linear or log). |
| xcolumn | Which column in the result is used for the x-axis. |
| xtitle | The title of the x-axis (of type string). |
| yaxis | How to scale the y-axis (linear or log). |
| ycolumns | Comma-delimited list of columns that consist of the values provided per value of the x column. |
| ytitle | The title of the y-axis (of type string). |
| ysplit | How to split the visualization into multiple y-axis values. For more information, see ysplit property. |

ysplit property

This visualization supports splitting into multiple y-axis values:

| ysplit | Description |
|---|---|
| none | A single y-axis is displayed for all series data. This is the default. |
| axes | A single chart is displayed with multiple y-axes (one per series). |
| panels | One chart is rendered for each ycolumn value. Maximum five panels. |

kind property

This visualization can be further elaborated by providing the kind property.

The supported values of this property are:

| kind value | Description |
|---|---|
| default | Each “bar” stands on its own. |
| unstacked | Same as default. |
| stacked | Stack “bars”. |
| stacked100 | Stack “bars” and stretch each one to the same width as the others. |

Examples

The examples in this section show how to use the syntax to help you get started.

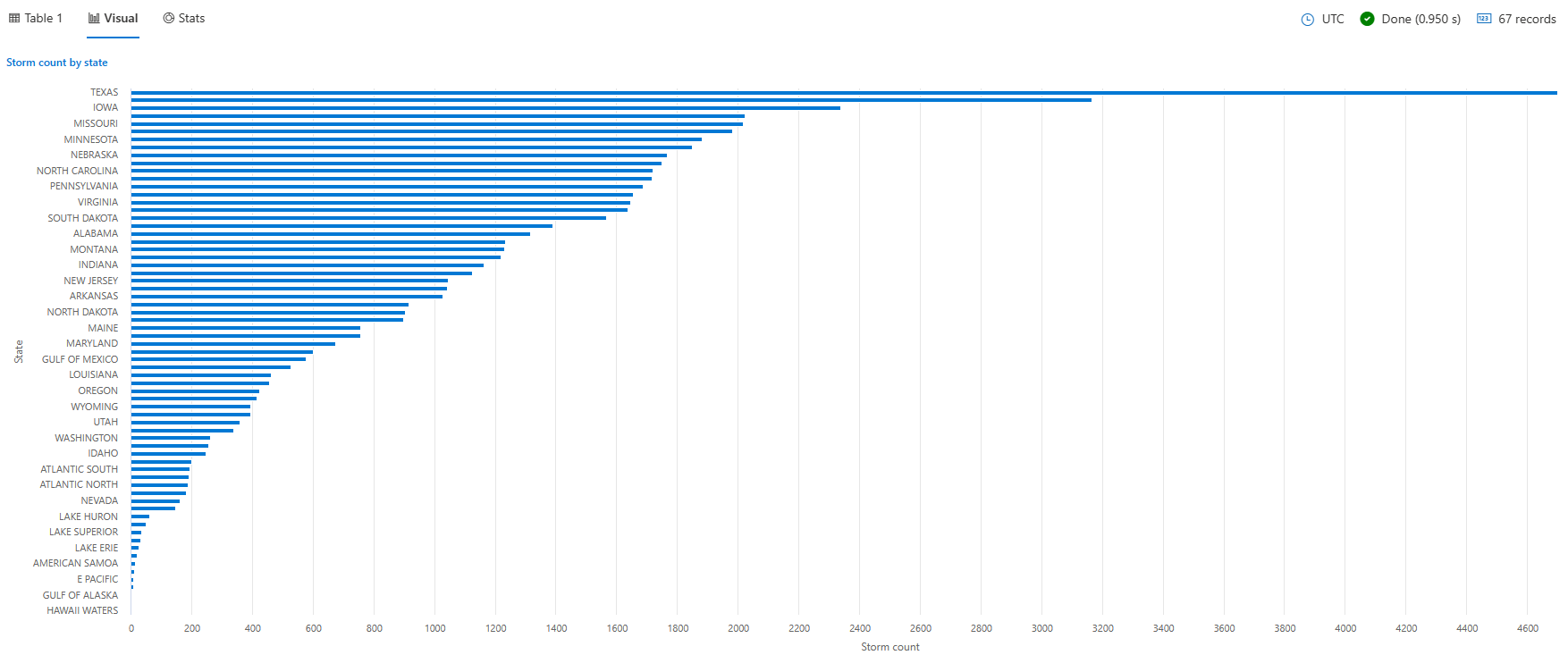

Render a bar chart

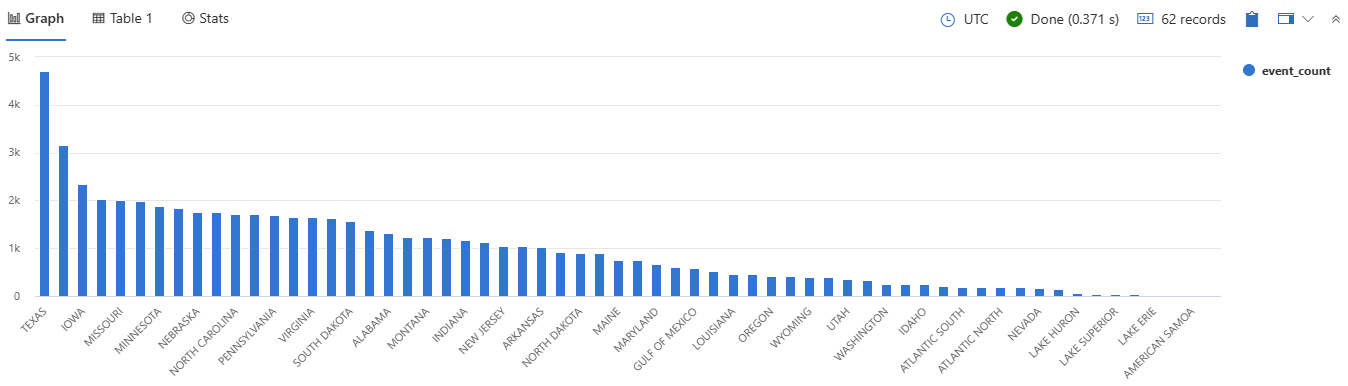

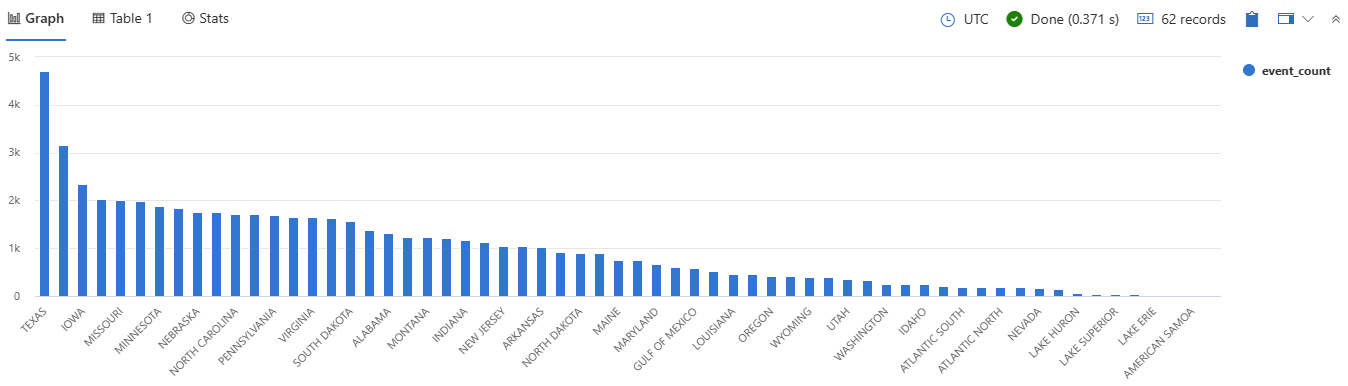

The following query creates a bar chart displaying the number of storm events for each state. The chart provides a visual representation of the event distribution across different states.

StormEvents

| summarize event_count=count() by State

| project State, event_count

| render barchart

with (

title="Storm count by state",

ytitle="Storm count",

xtitle="State",

legend=hidden

)

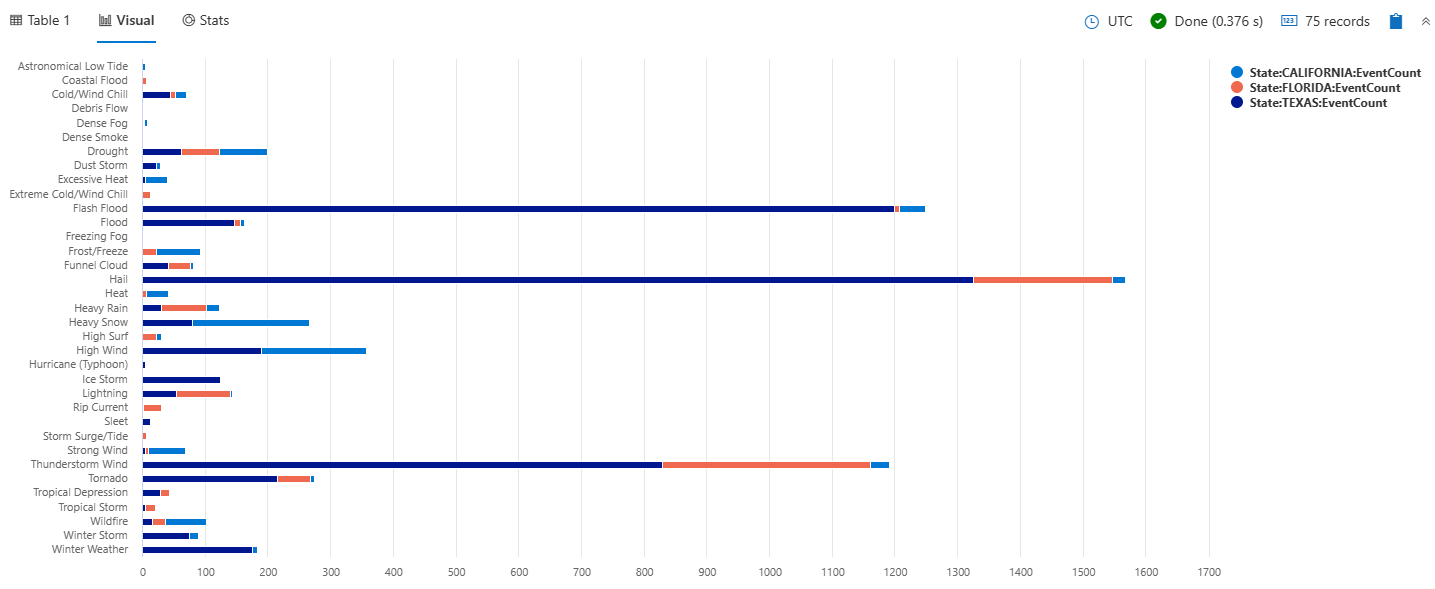

Render a stacked bar chart

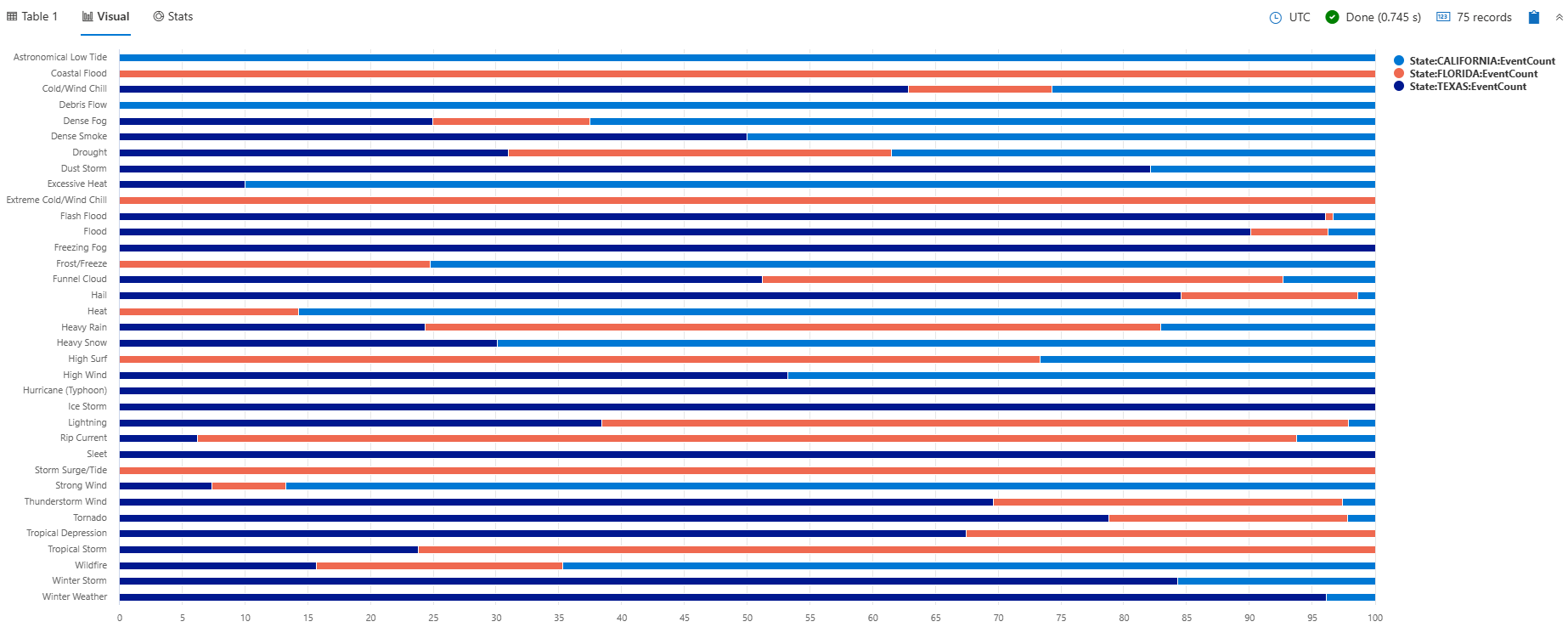

The following query creates a stacked bar chart that shows the total count of storm events by their type for selected states of Texas, California, and Florida. Each bar represents a storm event type, and the stacked bars show the breakdown of storm events by state within each type.

StormEvents

| where State in ("TEXAS", "CALIFORNIA", "FLORIDA")

| summarize EventCount = count() by EventType, State

| order by EventType asc, State desc

| render barchart with (kind=stacked)

Render a stacked100 bar chart

The following query creates a stacked100 bar chart that shows the total count of storm events by their type for selected states of Texas, California, and Florida. The chart shows the distribution of storm events across states within each type. Although the stacks visually sum up to 100, the values actually represent the number of events, not percentages. This visualization is helpful for understanding both the percentages and the actual event counts.

StormEvents

| where State in ("TEXAS", "CALIFORNIA", "FLORIDA")

| summarize EventCount = count() by EventType, State

| order by EventType asc, State desc

| render barchart with (kind=stacked100)

Use the ysplit property

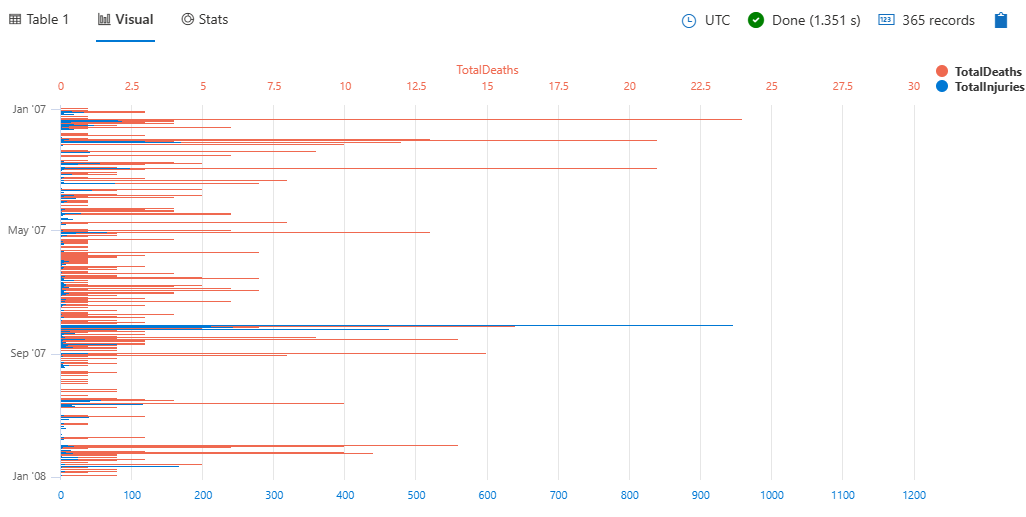

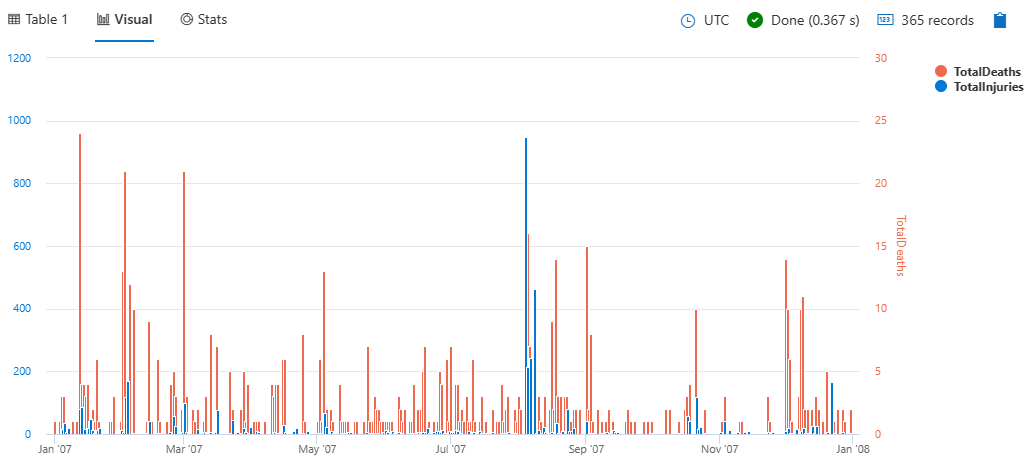

The following query provides a daily summary of storm-related injuries and deaths, visualized as a bar chart with split axes/panels for better comparison.

StormEvents

| summarize

TotalInjuries = sum(InjuriesDirect) + sum(InjuriesIndirect),

TotalDeaths = sum(DeathsDirect) + sum(DeathsIndirect)

by bin(StartTime, 1d)

| project StartTime, TotalInjuries, TotalDeaths

| render barchart with (ysplit=axes)

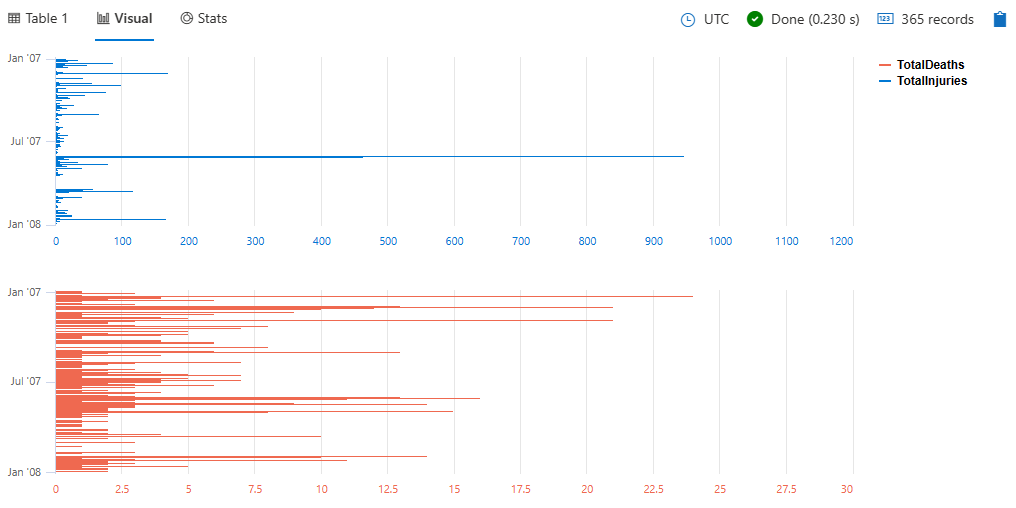

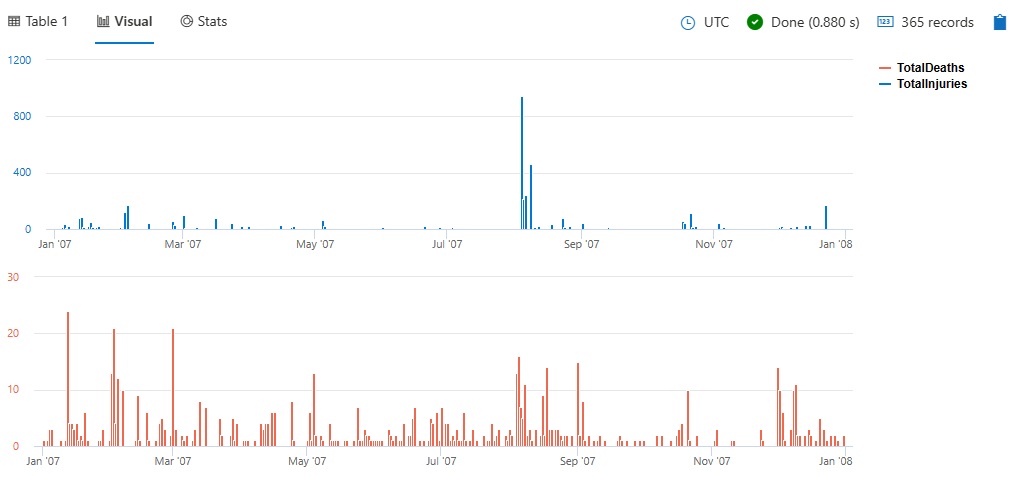

To split the view into separate panels, specify panels instead of axes:

StormEvents

| summarize

TotalInjuries = sum(InjuriesDirect) + sum(InjuriesIndirect),

TotalDeaths = sum(DeathsDirect) + sum(DeathsIndirect)

by bin(StartTime, 1d)

| project StartTime, TotalInjuries, TotalDeaths

| render barchart with (ysplit=panels)

2.1.4 - Card visualization

The card visual only shows one element. If there are multiple columns and rows in the output, the first result record is treated as a set of scalar values and shows as a card.

Syntax

T | render card [with (propertyName = propertyValue [, …])]

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| T | string | ✔️ | Input table name. |
| propertyName, propertyValue | string | | A comma-separated list of key-value property pairs. See supported properties. |

Supported properties

All properties are optional.

| PropertyName | PropertyValue |
|---|---|
| title | The title of the visualization (of type string). |

Example

This query provides a count of flood events in Virginia and displays the result in a card format.

StormEvents

| where State=="VIRGINIA" and EventType=="Flood"

| count

| render card with (title="Floods in Virginia")
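The multi-column case mentioned at the start of this section can be sketched as follows. This is an illustrative query rather than a documented sample: it assumes the same StormEvents table, the column names Floods and AllEvents are made up for the example, and how a client lays out several scalar values on a card may vary.

```kusto
StormEvents
| where State == "VIRGINIA"
// Produce a single record with two scalar values.
| summarize Floods = countif(EventType == "Flood"), AllEvents = count()
// The first (and only) result record supplies the values shown on the card.
| render card with (title="Virginia storm events")
```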

2.1.5 - Column chart visualization

The column chart visual needs a minimum of two columns in the query result. By default, the first column is used as the x-axis. This column can contain text, datetime, or numeric data types. The other columns are used as the y-axis and contain numeric data types to be displayed as vertical lines. Column charts are used for comparing specific subcategory items in a main category range, where the length of each line represents its value.

Syntax

T | render columnchart [with (propertyName = propertyValue [, …])]

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| T | string | ✔️ | Input table name. |
| propertyName, propertyValue | string | | A comma-separated list of key-value property pairs. See supported properties. |

Supported properties

All properties are optional.

| PropertyName | PropertyValue |
|---|---|
| accumulate | Whether the value of each measure gets added to all its predecessors. (true or false) |
| kind | Further elaboration of the visualization kind. For more information, see kind property. |
| legend | Whether to display a legend or not (visible or hidden). |
| series | Comma-delimited list of columns whose combined per-record values define the series that record belongs to. |
| ymin | The minimum value to be displayed on Y-axis. |
| ymax | The maximum value to be displayed on Y-axis. |
| title | The title of the visualization (of type string). |
| xaxis | How to scale the x-axis (linear or log). |
| xcolumn | Which column in the result is used for the x-axis. |
| xtitle | The title of the x-axis (of type string). |
| yaxis | How to scale the y-axis (linear or log). |
| ycolumns | Comma-delimited list of columns that consist of the values provided per value of the x column. |
| ytitle | The title of the y-axis (of type string). |
| ysplit | How to split the visualization into multiple y-axis values. For more information, see ysplit property. |

ysplit property

This visualization supports splitting into multiple y-axis values:

| ysplit | Description |
|---|---|
| none | A single y-axis is displayed for all series data. This is the default. |
| axes | A single chart is displayed with multiple y-axes (one per series). |
| panels | One chart is rendered for each ycolumn value. Maximum five panels. |

kind property

This visualization can be further elaborated by providing the kind property.

The supported values of this property are:

| kind value | Definition |
|---|---|
| default | Each “column” stands on its own. |
| unstacked | Same as default. |
| stacked | Stack “columns” one atop the other. |
| stacked100 | Stack “columns” and stretch each one to the same height as the others. |

Examples

The examples in this section show how to use the syntax to help you get started.

Render a column chart

This query provides a visual representation of states with a high frequency of storm events, specifically those with more than 10 events, using a column chart.

StormEvents

| summarize event_count=count() by State

| where event_count > 10

| project State, event_count

| render columnchart

Use the ysplit property

This query provides a daily summary of storm-related injuries and deaths, visualized as a column chart with split axes/panels for better comparison.

StormEvents

| summarize

TotalInjuries = sum(InjuriesDirect) + sum(InjuriesIndirect),

TotalDeaths = sum(DeathsDirect) + sum(DeathsIndirect)

by bin(StartTime, 1d)

| project StartTime, TotalInjuries, TotalDeaths

| render columnchart with (ysplit=axes)

To split the view into separate panels, specify panels instead of axes:

StormEvents

| summarize

TotalInjuries = sum(InjuriesDirect) + sum(InjuriesIndirect),

TotalDeaths = sum(DeathsDirect) + sum(DeathsIndirect)

by bin(StartTime, 1d)

| project StartTime, TotalInjuries, TotalDeaths

| render columnchart with (ysplit=panels)
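The kind property listed above has no column chart example of its own, so here is a small sketch that mirrors the stacked bar chart query from the bar chart section. It assumes the same StormEvents sample table; the title text is illustrative.

```kusto
StormEvents
| where State in ("TEXAS", "CALIFORNIA", "FLORIDA")
// One row per (EventType, State) pair; State becomes the stacked series.
| summarize EventCount = count() by EventType, State
| order by EventType asc, State desc
// kind=stacked places the per-state columns one atop the other for each event type.
| render columnchart with (kind=stacked, title="Storm events by type and state")
```

Replacing stacked with stacked100 stretches every column to the same height, which makes proportions easier to compare than absolute counts.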

2.1.6 - Ladder chart visualization

The last two columns are the x-axis, and the other columns are the y-axis.

Syntax

T | render ladderchart [with (propertyName = propertyValue [, …])]

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| T | string | ✔️ | Input table name. |
| propertyName, propertyValue | string | | A comma-separated list of key-value property pairs. See supported properties. |

Supported properties

All properties are optional.

| PropertyName | PropertyValue |
|---|---|
| accumulate | Whether the value of each measure gets added to all its predecessors. (true or false) |
| legend | Whether to display a legend or not (visible or hidden). |
| series | Comma-delimited list of columns whose combined per-record values define the series that record belongs to. |
| ymin | The minimum value to be displayed on Y-axis. |
| ymax | The maximum value to be displayed on Y-axis. |
| title | The title of the visualization (of type string). |
| xaxis | How to scale the x-axis (linear or log). |
| xcolumn | Which column in the result is used for the x-axis. |
| xtitle | The title of the x-axis (of type string). |
| yaxis | How to scale the y-axis (linear or log). |
| ycolumns | Comma-delimited list of columns that consist of the values provided per value of the x column. |
| ytitle | The title of the y-axis (of type string). |

Examples

The examples in this section show how to use the syntax to help you get started.

The examples in this article use publicly available tables in the help cluster, such as the StormEvents table in the Samples database.

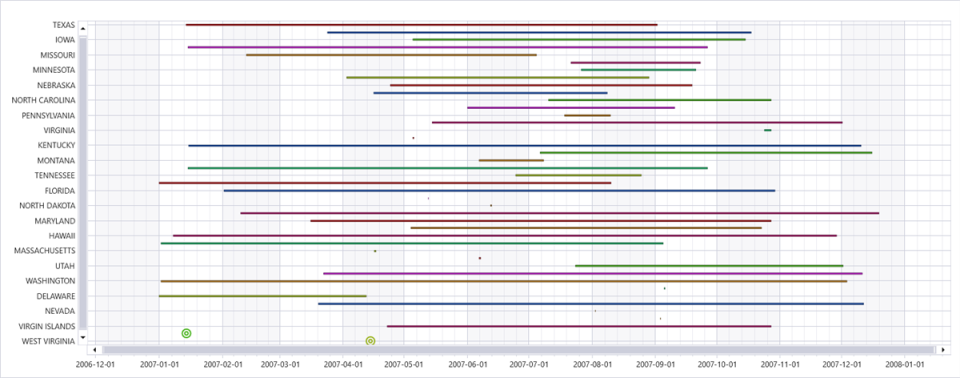

Dates of storms by state

This query outputs a state-wise visualization of the duration of rain-related storm events, displayed as a ladder chart to help you analyze the temporal distribution of these events.

StormEvents

| where EventType has "rain"

| summarize min(StartTime), max(EndTime) by State

| render ladderchart

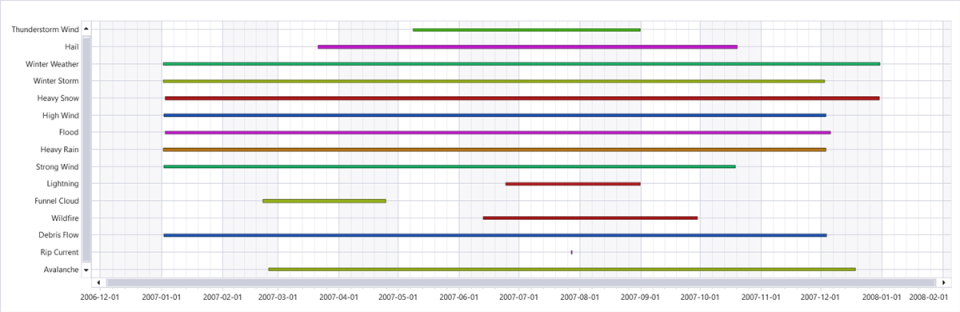

Dates of storms by event type

This query outputs a visualization of the duration of various storm events in Washington, displayed as a ladder chart to help you analyze the temporal distribution of these events by type.

StormEvents

| where State == "WASHINGTON"

| summarize min(StartTime), max(EndTime) by EventType

| render ladderchart

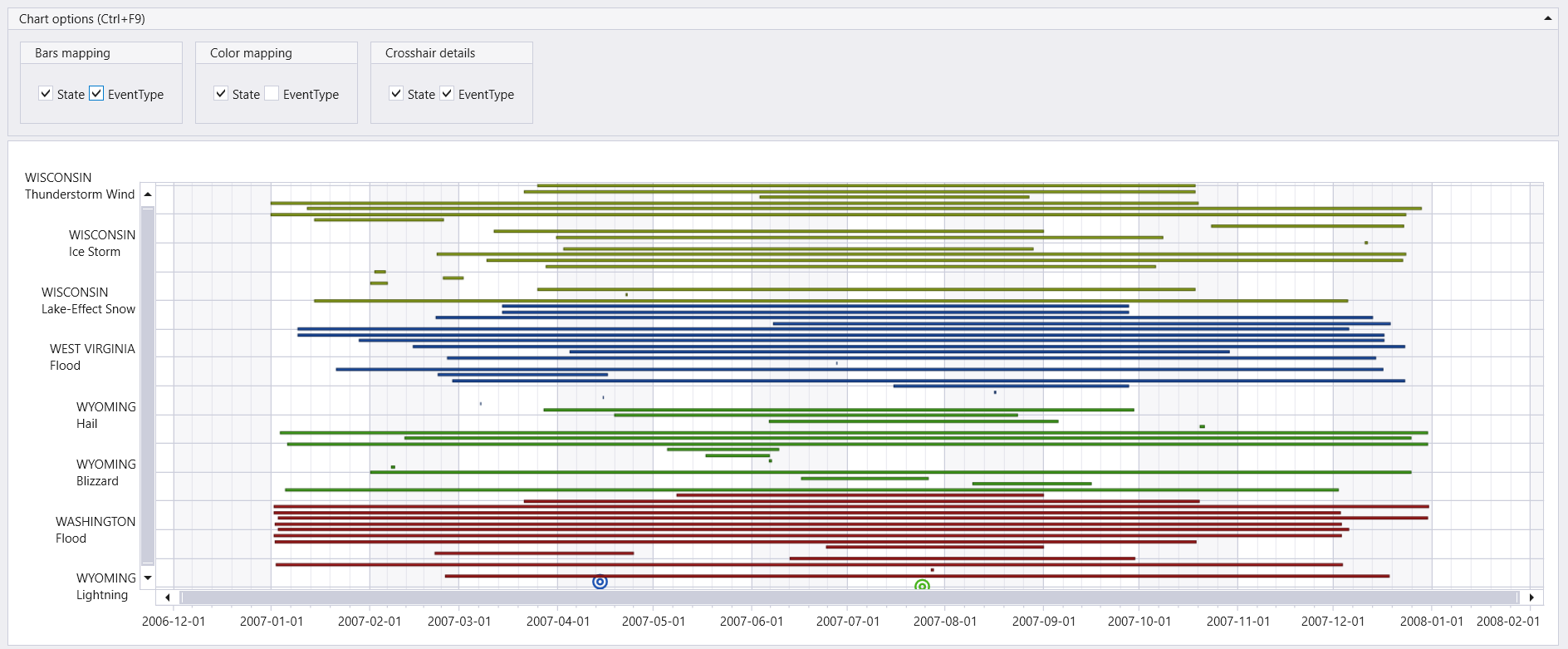

Dates of storms by state and event type

This query outputs a visualization of the duration of various storm events in states starting with “W”, displayed as a ladder chart to help you analyze the temporal distribution of these events by state and event type.

StormEvents

| where State startswith "W"

| summarize min(StartTime), max(EndTime) by State, EventType

| render ladderchart with (series=State, EventType)

2.1.7 - Line chart visualization

The line chart visual is the most basic type of chart. The first column of the query should be numeric and is used as the x-axis. Other numeric columns are the y-axes. Line charts track changes over short and long periods of time. When smaller changes exist, line graphs are more useful than bar graphs.

Syntax

T | render linechart [with ( propertyName = propertyValue [, …] )]

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| T | string | ✔️ | Input table name. |
| propertyName, propertyValue | string | | A comma-separated list of key-value property pairs. See supported properties. |

Supported properties

All properties are optional.

| PropertyName | PropertyValue |
|---|---|
| accumulate | Whether the value of each measure gets added to all its predecessors (true or false). |
| legend | Whether to display a legend or not (visible or hidden). |
| series | Comma-delimited list of columns whose combined per-record values define the series that record belongs to. |
| ymin | The minimum value to be displayed on Y-axis. |
| ymax | The maximum value to be displayed on Y-axis. |
| title | The title of the visualization (of type string). |
| xaxis | How to scale the x-axis (linear or log). |
| xcolumn | Which column in the result is used for the x-axis. |
| xtitle | The title of the x-axis (of type string). |
| yaxis | How to scale the y-axis (linear or log). |
| ycolumns | Comma-delimited list of columns that consist of the values provided per value of the x column. |
| ysplit | How to split the visualization into multiple y-axis values. For more information, see ysplit property. |
| ytitle | The title of the y-axis (of type string). |

ysplit property

This visualization supports splitting into multiple y-axis values:

| ysplit | Description |
|---|---|
| none | A single y-axis is displayed for all series data. (Default) |
| axes | A single chart is displayed with multiple y-axes (one per series). |
| panels | One chart is rendered for each ycolumn value. Maximum five panels. |

Examples

The examples in this section show how to use the syntax to help you get started.

Render a line chart

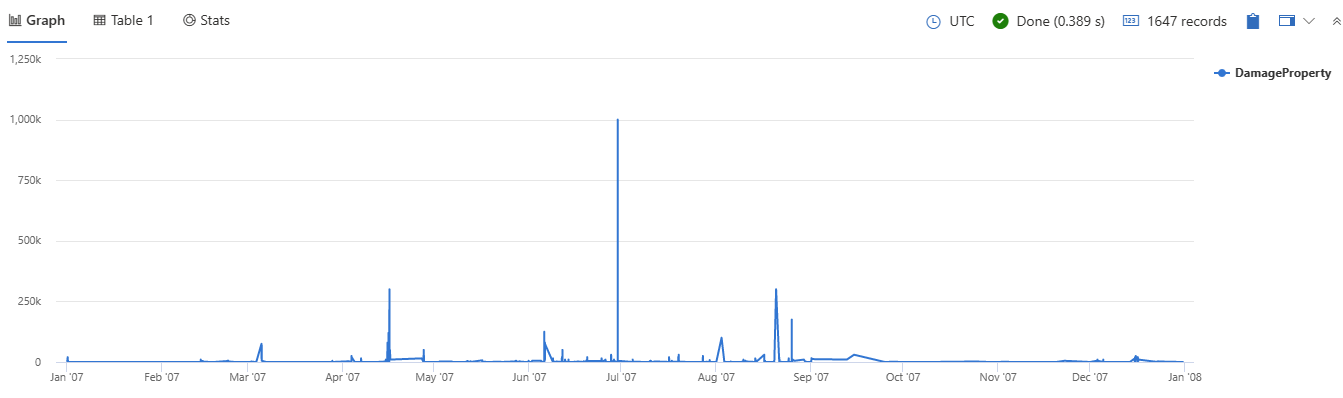

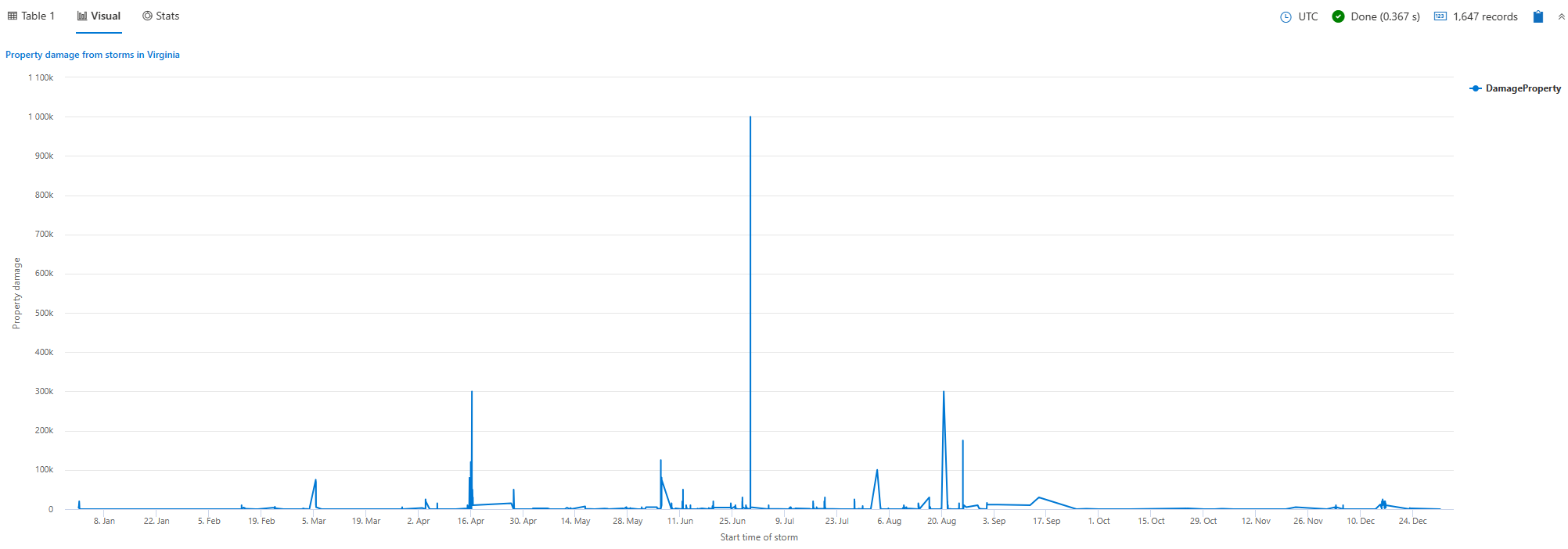

This query retrieves storm events in Virginia, focusing on the start time and property damage, and then displays this information in a line chart.

StormEvents

| where State=="VIRGINIA"

| project StartTime, DamageProperty

| render linechart

Label a line chart

This query retrieves storm events in Virginia, focusing on the start time and property damage, and then displays this information in a line chart with specified titles for better clarity and presentation.

StormEvents

| where State=="VIRGINIA"

| project StartTime, DamageProperty

| render linechart

with (

title="Property damage from storms in Virginia",

xtitle="Start time of storm",

ytitle="Property damage"

)

Limit values displayed on the y-axis

This query retrieves storm events in Virginia, focusing on the start time and property damage, and then displays this information in a line chart with specified y-axis limits for better visualization of the data.

StormEvents

| where State=="VIRGINIA"

| project StartTime, DamageProperty

| render linechart with (ymin=7000, ymax=300000)

View multiple y-axes

This query retrieves hail events in Texas, Nebraska, and Kansas. It counts the number of hail events per day for each state, and then displays this information in a line chart with separate panels for each state.

StormEvents

| where State in ("TEXAS", "NEBRASKA", "KANSAS") and EventType == "Hail"

| summarize count() by State, bin(StartTime, 1d)

| render linechart with (ysplit=panels)
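The accumulate property in the table above is described as adding each measure to all its predecessors, which is a running total. As a sketch of that idea, the following query computes the running total explicitly in the query itself by using row_cumsum(), so the result doesn't depend on client support for accumulate. It assumes the same StormEvents table; the column names EventCount and RunningTotal are illustrative.

```kusto
StormEvents
| where State == "VIRGINIA"
| summarize EventCount = count() by bin(StartTime, 1d)
// row_cumsum() needs a serialized (ordered) row set; order by provides one.
| order by StartTime asc
| extend RunningTotal = row_cumsum(EventCount)
| project StartTime, RunningTotal
| render linechart with (title="Cumulative storm events in Virginia")
```

Where the client honors it, `render linechart with (accumulate=true)` on the unaccumulated EventCount column is intended to give a similar picture with less query text.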

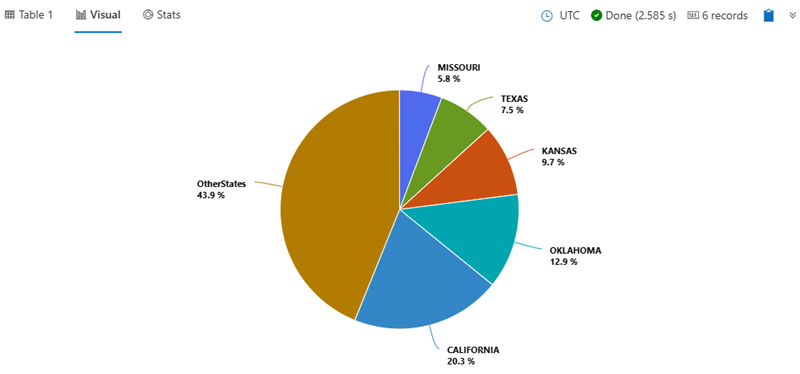

2.1.8 - Pie chart visualization

The pie chart visual needs a minimum of two columns in the query result. By default, the first column is used as the color axis. This column can contain text, datetime, or numeric data types. Other columns will be used to determine the size of each slice and contain numeric data types. Pie charts are used for presenting a composition of categories and their proportions out of a total.

The pie chart visual can also be used in the context of Geospatial visualizations.

Syntax

T | render piechart [with (propertyName = propertyValue [, …])]

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| T | string | ✔️ | Input table name. |
| propertyName, propertyValue | string | | A comma-separated list of key-value property pairs. See supported properties. |

Supported properties

All properties are optional.

| PropertyName | PropertyValue |
|---|---|
| accumulate | Whether the value of each measure gets added to all its predecessors. (true or false) |
| kind | Further elaboration of the visualization kind. For more information, see kind property. |
| legend | Whether to display a legend or not (visible or hidden). |
| series | Comma-delimited list of columns whose combined per-record values define the series that record belongs to. |
| title | The title of the visualization (of type string). |
| xaxis | How to scale the x-axis (linear or log). |
| xcolumn | Which column in the result is used for the x-axis. |
| xtitle | The title of the x-axis (of type string). |
| yaxis | How to scale the y-axis (linear or log). |
| ycolumns | Comma-delimited list of columns that consist of the values provided per value of the x column. |
| ytitle | The title of the y-axis (of type string). |

kind property

This visualization can be further elaborated by providing the kind property.

The supported values of this property are:

| kind value | Description |
|---|---|
| map | Expected columns are [Longitude, Latitude] or GeoJSON point, color-axis and numeric. Supported in Kusto Explorer desktop. For more information, see Geospatial visualizations. |

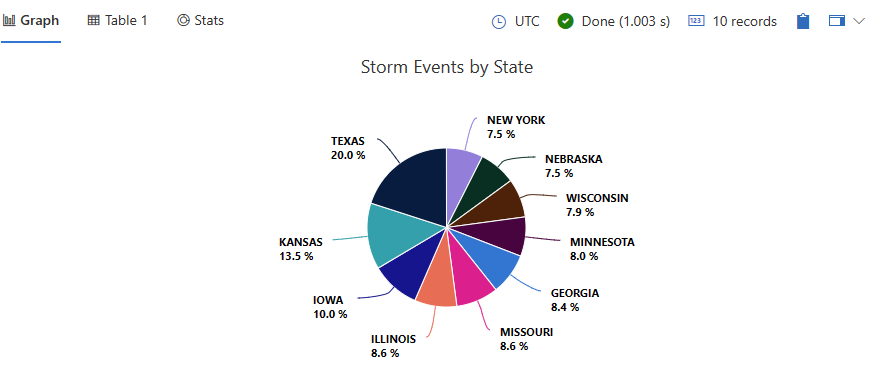

Example

This query provides a visual representation of the top 10 states with the highest number of storm events, displayed as a pie chart.

StormEvents

| summarize statecount=count() by State

| sort by statecount

| limit 10

| render piechart with(title="Storm Events by State")

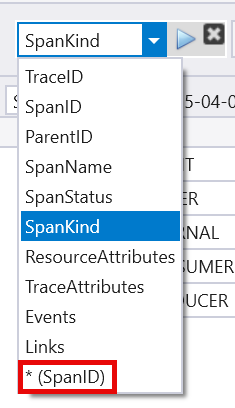

2.1.9 - Pivot chart visualization

Displays a pivot table and chart. You can interactively select data, columns, rows, and various chart types.

Syntax

T | render pivotchart

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| T | string | ✔️ | Input table name. |

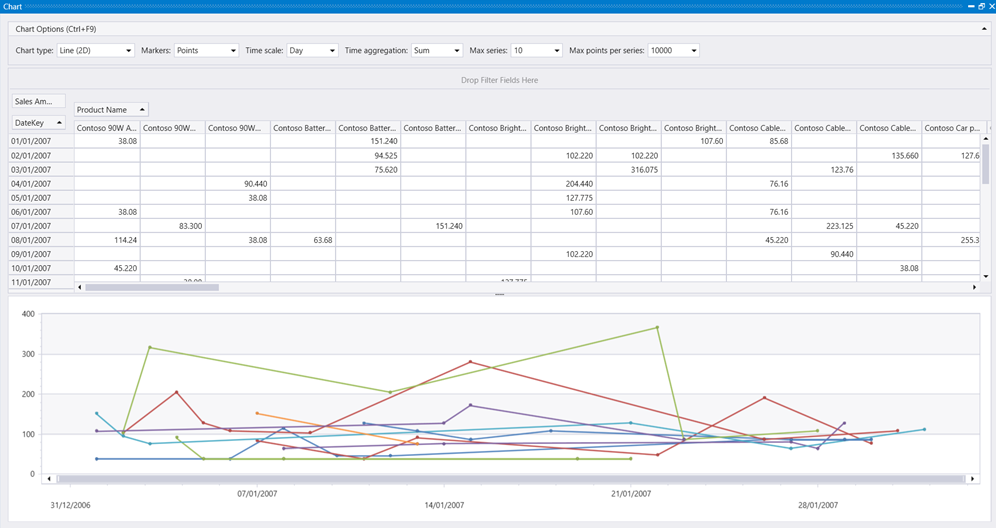

Example

This query provides a detailed analysis of sales for Contoso computer products within the specified date range, visualized as a pivot chart.

SalesFact

| join kind= inner Products on ProductKey

| where ProductCategoryName has "Computers" and ProductName has "Contoso"

| where DateKey between (datetime(2006-12-31) .. datetime(2007-02-01))

| project SalesAmount, ProductName, DateKey

| render pivotchart

2.1.10 - Plotly visualization

The Plotly graphics library supports ~80 chart types that are useful for advanced charting including geographic, scientific, machine learning, 3d, animation, and many other chart types. For more information, see Plotly.

To render a Plotly visual in Kusto Query Language, the query must generate a table with a single string cell containing Plotly JSON. This Plotly JSON string can be generated by one of the following two methods:

Write your own Plotly visualization in Python

In this method, you dynamically create the Plotly JSON string in Python using the Plotly package. This process requires use of the python() plugin. The Python script is run on the existing nodes using the inline python() plugin. It generates a Plotly JSON that is rendered by the client application.

All types of Plotly visualizations are supported.

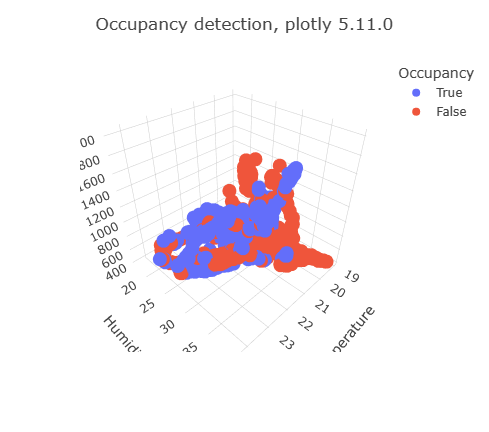

Example

The following query uses inline Python to create a 3D scatter chart:

OccupancyDetection

| project Temperature, Humidity, CO2, Occupancy

| where rand() < 0.1

| evaluate python(typeof(plotly:string),

```if 1:

    import plotly.express as px

    fig = px.scatter_3d(df, x='Temperature', y='Humidity', z='CO2', color='Occupancy')

    fig.update_layout(title=dict(text="Occupancy detection, plotly 5.11.0"))

    plotly_obj = fig.to_json()

    result = pd.DataFrame(data = [plotly_obj], columns = ["plotly"])

```)

| render plotly

In environments where the python() plugin isn’t available, create the Plotly JSON using a preprepared template instead.

Use a preprepared Plotly template

In this method, a preprepared Plotly JSON for specific visualization can be reused by replacing the data objects with the required data to be rendered. The templates can be stored in a standard table, and the data replacement logic can be packed in a stored function.

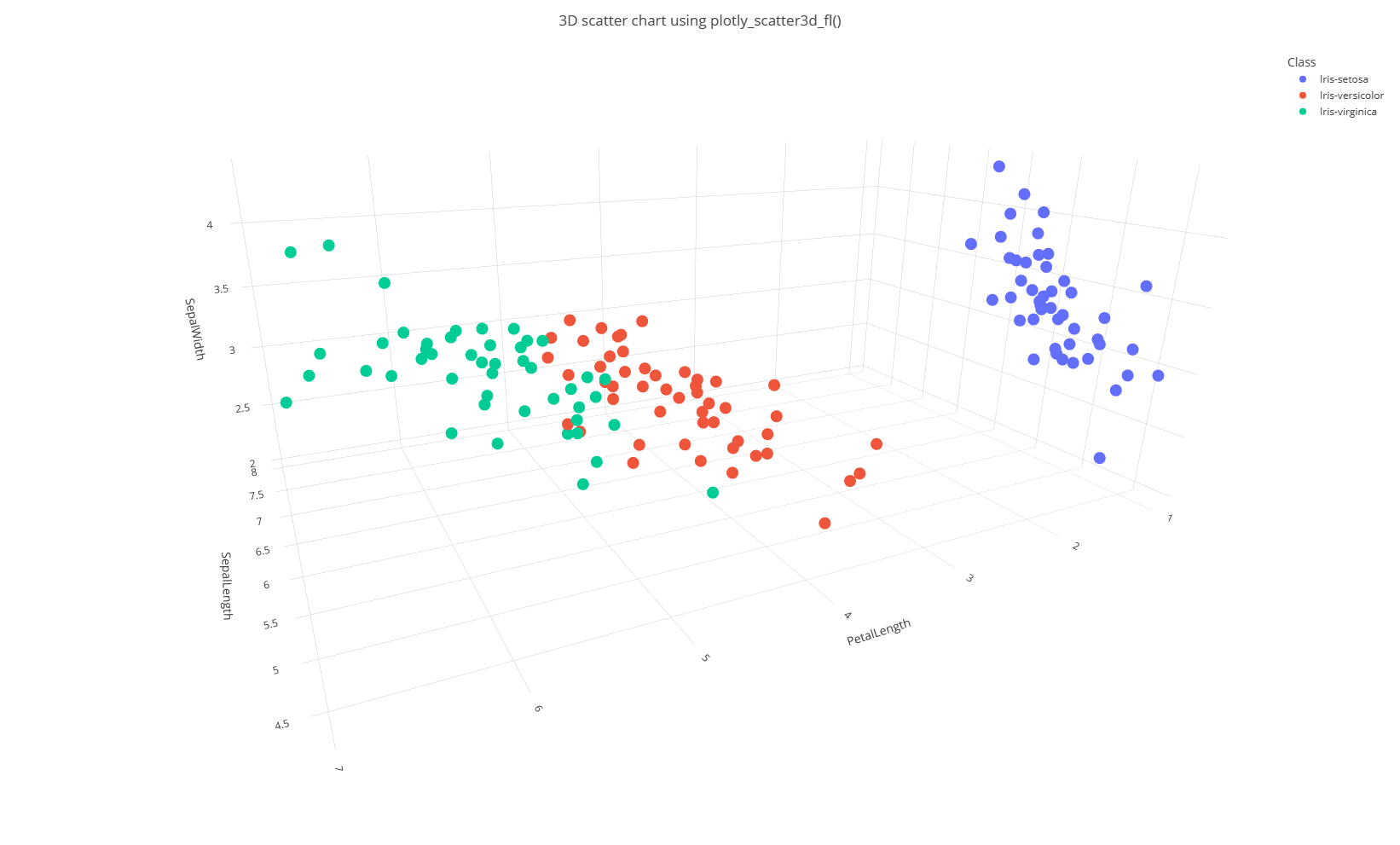

Currently, the supported templates are: plotly_anomaly_fl() and plotly_scatter3d_fl(). Refer to these documents for syntax and usage.

Example

let plotly_scatter3d_fl=(tbl:(*), x_col:string, y_col:string, z_col:string, aggr_col:string='', chart_title:string='3D Scatter chart')

{

let scatter3d_chart = toscalar(PlotlyTemplate | where name == "scatter3d" | project plotly);

let tbl_ex = tbl | extend _x = column_ifexists(x_col, 0.0), _y = column_ifexists(y_col, 0.0), _z = column_ifexists(z_col, 0.0), _aggr = column_ifexists(aggr_col, 'ALL');

tbl_ex

| serialize

| summarize _x=pack_array(make_list(_x)), _y=pack_array(make_list(_y)), _z=pack_array(make_list(_z)) by _aggr

| summarize _aggr=make_list(_aggr), _x=make_list(_x), _y=make_list(_y), _z=make_list(_z)

| extend plotly = scatter3d_chart

| extend plotly=replace_string(plotly, '$CLASS1$', tostring(_aggr[0]))

| extend plotly=replace_string(plotly, '$CLASS2$', tostring(_aggr[1]))

| extend plotly=replace_string(plotly, '$CLASS3$', tostring(_aggr[2]))

| extend plotly=replace_string(plotly, '$X_NAME$', x_col)

| extend plotly=replace_string(plotly, '$Y_NAME$', y_col)

| extend plotly=replace_string(plotly, '$Z_NAME$', z_col)

| extend plotly=replace_string(plotly, '$CLASS1_X$', tostring(_x[0]))

| extend plotly=replace_string(plotly, '$CLASS1_Y$', tostring(_y[0]))

| extend plotly=replace_string(plotly, '$CLASS1_Z$', tostring(_z[0]))

| extend plotly=replace_string(plotly, '$CLASS2_X$', tostring(_x[1]))

| extend plotly=replace_string(plotly, '$CLASS2_Y$', tostring(_y[1]))

| extend plotly=replace_string(plotly, '$CLASS2_Z$', tostring(_z[1]))

| extend plotly=replace_string(plotly, '$CLASS3_X$', tostring(_x[2]))

| extend plotly=replace_string(plotly, '$CLASS3_Y$', tostring(_y[2]))

| extend plotly=replace_string(plotly, '$CLASS3_Z$', tostring(_z[2]))

| extend plotly=replace_string(plotly, '$TITLE$', chart_title)

| project plotly

};

Iris

| invoke plotly_scatter3d_fl(x_col='SepalLength', y_col='PetalLength', z_col='SepalWidth', aggr_col='Class', chart_title='3D scatter chart using plotly_scatter3d_fl()')

| render plotly
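The requirement stated at the start of this section, a table with a single string cell containing Plotly JSON, can also be seen in isolation. The following is a minimal sketch, assuming a client that supports render plotly; the JSON is a hand-written, hypothetical bar chart definition and isn't taken from any stored template.

```kusto
// print produces a one-row, one-column table; the cell holds the Plotly JSON as a string.
print plotly = '{"data":[{"type":"bar","x":["A","B","C"],"y":[3,1,2]}],"layout":{"title":{"text":"Minimal Plotly chart"}}}'
| render plotly
```

Both methods described above ultimately produce a result of this shape. They differ only in how the JSON string is built.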

2.1.11 - Scatter chart visualization

In a scatter chart visual, the first column is the x-axis and should be a numeric column. Other numeric columns are y-axes. Scatter plots are used to observe relationships between variables. The scatter chart visual can also be used in the context of Geospatial visualizations.

Syntax

T | render scatterchart [with (propertyName = propertyValue [, …])]

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| T | string | ✔️ | Input table name. |
| propertyName, propertyValue | string | | A comma-separated list of key-value property pairs. See supported properties. |

Supported properties

All properties are optional.

| PropertyName | PropertyValue |
|---|---|
| accumulate | Whether the value of each measure gets added to all its predecessors. (true or false) |
| kind | Further elaboration of the visualization kind. For more information, see kind property. |
| legend | Whether to display a legend or not (visible or hidden). |
| series | Comma-delimited list of columns whose combined per-record values define the series that record belongs to. |
| ymin | The minimum value to be displayed on Y-axis. |
| ymax | The maximum value to be displayed on Y-axis. |
| title | The title of the visualization (of type string). |
| xaxis | How to scale the x-axis (linear or log). |
| xcolumn | Which column in the result is used for the x-axis. |
| xtitle | The title of the x-axis (of type string). |
| yaxis | How to scale the y-axis (linear or log). |
| ycolumns | Comma-delimited list of columns that consist of the values provided per value of the x column. |
| ytitle | The title of the y-axis (of type string). |

kind property

This visualization can be further elaborated by providing the kind property.

The supported values of this property are:

| kind value | Description |
|---|---|
| map | Expected columns are [Longitude, Latitude] or GeoJSON point. Series column is optional. For more information, see Geospatial visualizations. |

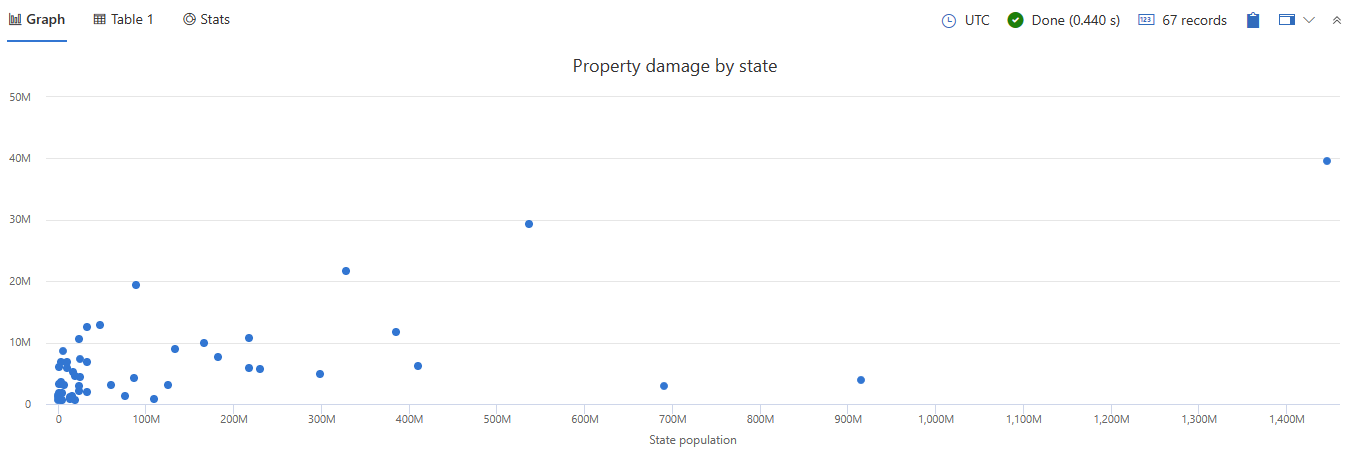

Example

This query provides a scatter chart that helps you analyze the correlation between state populations and the total property damage caused by storm events.

StormEvents

| summarize sum(DamageProperty) by State

| lookup PopulationData on State

| project-away State

| render scatterchart with (xtitle="State population", title="Property damage by state", legend=hidden)
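The map value of the kind property can be sketched in the same way. This is a minimal illustration rather than a definitive query: it assumes the StormEvents sample table exposes BeginLon and BeginLat columns, projected in [Longitude, Latitude] order as the kind property table requires. Substitute the coordinate columns from your own data.

```kusto
StormEvents
| take 100                      // keep the number of plotted points small
| project BeginLon, BeginLat    // assumed column names; order is [Longitude, Latitude]
| render scatterchart with (kind=map)
```

Because the series column is optional for the map kind, adding one string column to the projection should group the points by that column's values.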

2.1.12 - Stacked area chart visualization

The stacked area chart visual shows a continuous relationship. This visual is similar to the Area chart, but shows the area under each element of a series. The first column of the query should be numeric and is used as the x-axis. Other numeric columns are the y-axes. Unlike line charts, area charts also visually represent volume. Area charts are ideal for indicating the change among different datasets.

Syntax

T | render stackedareachart [with (propertyName = propertyValue [, …])]

Supported parameters

| Name | Type | Required | Description |
|---|---|---|---|
| T | string | ✔️ | Input table name. |
| propertyName, propertyValue | string | | A comma-separated list of key-value property pairs. See supported properties. |

Supported properties

All properties are optional.

| PropertyName | PropertyValue |
|---|---|
| accumulate | Whether the value of each measure gets added to all its predecessors. (true or false) |
| legend | Whether to display a legend or not (visible or hidden). |
| series | Comma-delimited list of columns whose combined per-record values define the series that record belongs to. |
| ymin | The minimum value to be displayed on Y-axis. |
| ymax | The maximum value to be displayed on Y-axis. |
| title | The title of the visualization (of type string). |
| xaxis | How to scale the x-axis (linear or log). |
| xcolumn | Which column in the result is used for the x-axis. |
| xtitle | The title of the x-axis (of type string). |
| yaxis | How to scale the y-axis (linear or log). |
| ycolumns | Comma-delimited list of columns that consist of the values provided per value of the x column. |
| ytitle | The title of the y-axis (of type string). |
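Several of these properties can be combined in a single with clause. The following is a minimal sketch, assuming the nyc_taxi sample table with its passenger_count and pickup_datetime columns; the title strings are illustrative only.

```kusto
nyc_taxi
| summarize count() by passenger_count, bin(pickup_datetime, 2d)
| render stackedareachart with (
    xcolumn=pickup_datetime,                 // column used for the x-axis
    series=passenger_count,                  // one stacked area per passenger count
    title="Taxi trips by passenger count",   // illustrative title
    xtitle="Pickup time",
    ytitle="Trips",
    legend=visible)
```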

Example

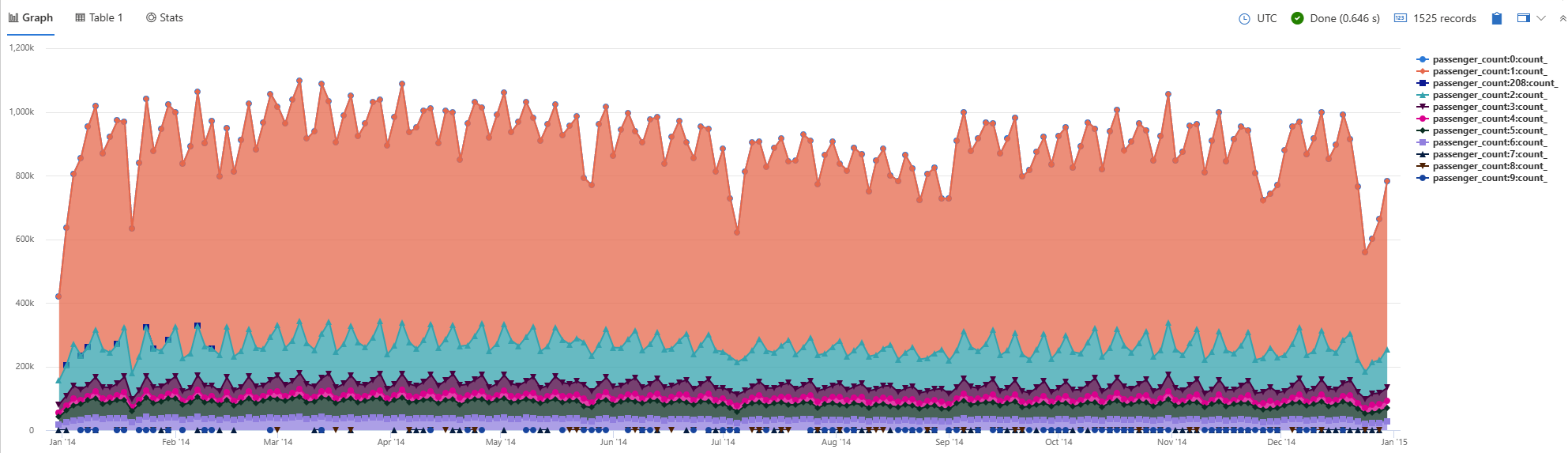

The following query summarizes data from the nyc_taxi table by number of passengers and visualizes the data in a stacked area chart. The x-axis shows the pickup time in two-day intervals, and the stacked areas represent different passenger counts.

nyc_taxi

| summarize count() by passenger_count, bin(pickup_datetime, 2d)

| render stackedareachart with (xcolumn=pickup_datetime, series=passenger_count)


2.1.13 - Table visualization

The table visual is the default visualization: results are shown as a table.

Syntax

T | render table [with (propertyName = propertyValue [, …])]

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| T | string | ✔️ | Input table name. |
| propertyName, propertyValue | string | | A comma-separated list of key-value property pairs. See supported properties. |

Supported properties

All properties are optional.

| PropertyName | PropertyValue |
|---|---|
| accumulate | Whether the value of each measure gets added to all its predecessors. (true or false) |
| legend | Whether to display a legend or not (visible or hidden). |
| series | Comma-delimited list of columns whose combined per-record values define the series that record belongs to. |
| ymin | The minimum value to be displayed on Y-axis. |
| ymax | The maximum value to be displayed on Y-axis. |
| title | The title of the visualization (of type string). |
| xaxis | How to scale the x-axis (linear or log). |
| xcolumn | Which column in the result is used for the x-axis. |
| xtitle | The title of the x-axis (of type string). |
| yaxis | How to scale the y-axis (linear or log). |
| ycolumns | Comma-delimited list of columns that consist of the values provided per value of the x column. |
| ytitle | The title of the y-axis (of type string). |


Example

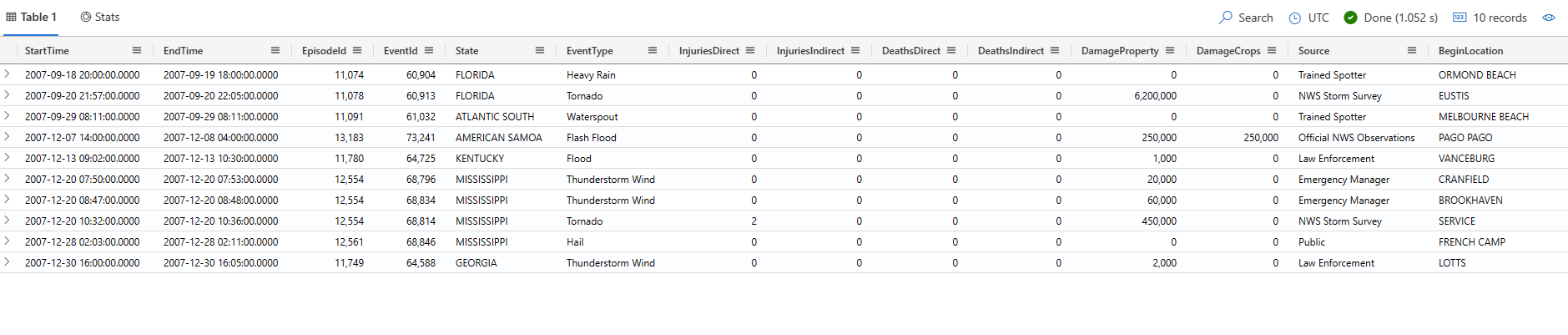

This query outputs a snapshot of the first 10 storm event records, displayed in a table format.

StormEvents

| take 10

| render table

2.1.14 - Time chart visualization

A time chart visual is a type of line graph. The first column of the query is the x-axis and should be a datetime. Other numeric columns are y-axes. The values of one string column are used to group the numeric columns and create different lines in the chart. Other string columns are ignored. The time chart visual is like a line chart except that the x-axis is always time.

Syntax

T | render timechart [with (propertyName = propertyValue [, …])]

Parameters

| Name | Type | Required | Description |
|---|---|---|---|
| T | string | ✔️ | Input table name. |
| propertyName, propertyValue | string | | A comma-separated list of key-value property pairs. See supported properties. |

Supported properties

All properties are optional.

| PropertyName | PropertyValue |
|---|---|
| accumulate | Whether the value of each measure gets added to all its predecessors (true or false). |
| legend | Whether to display a legend or not (visible or hidden). |
| series | Comma-delimited list of columns whose combined per-record values define the series that record belongs to. |
| ymin | The minimum value to be displayed on Y-axis. |
| ymax | The maximum value to be displayed on Y-axis. |
| title | The title of the visualization (of type string). |
| xaxis | How to scale the x-axis (linear or log). |
| xcolumn | Which column in the result is used for the x-axis. |
| xtitle | The title of the x-axis (of type string). |
| yaxis | How to scale the y-axis (linear or log). |
| ycolumns | Comma-delimited list of columns that consist of the values provided per value of the x column. |
| ysplit | How to split the visualization into multiple y-axis values. For more information, see ysplit property. |
| ytitle | The title of the y-axis (of type string). |

ysplit property

This visualization supports splitting into multiple y-axis values:

| ysplit | Description |
|---|---|
| none | A single y-axis is displayed for all series data. (Default) |
| axes | A single chart is displayed with multiple y-axes (one per series). |
| panels | One chart is rendered for each ycolumn value. Maximum five panels. |

Examples

The examples in this section show how to use the syntax to help you get started.

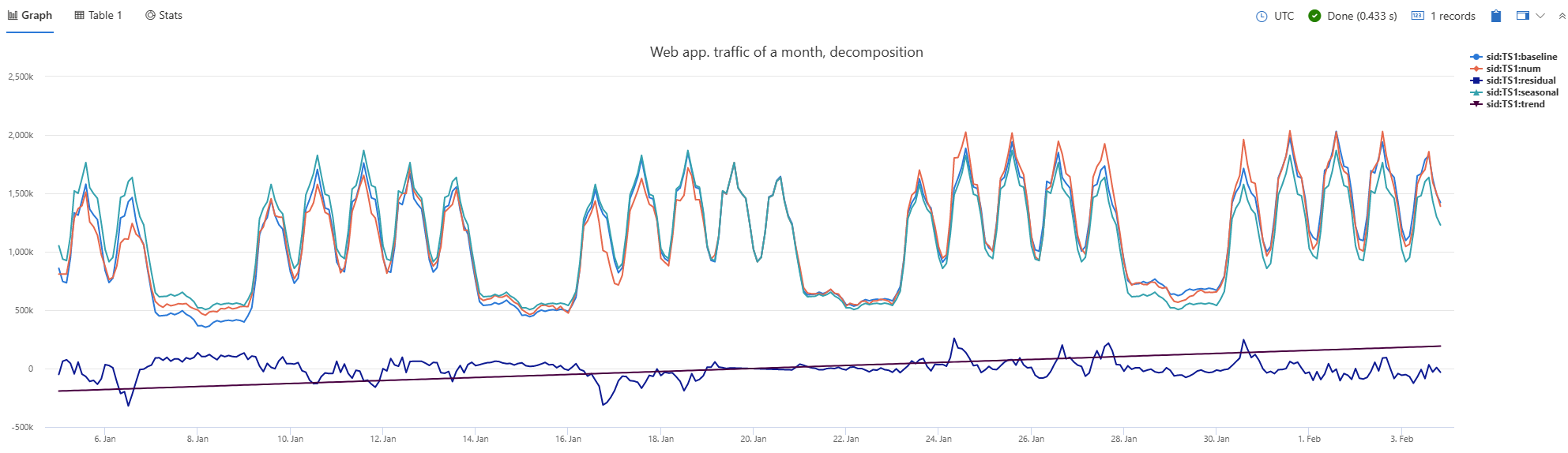

Render a timechart

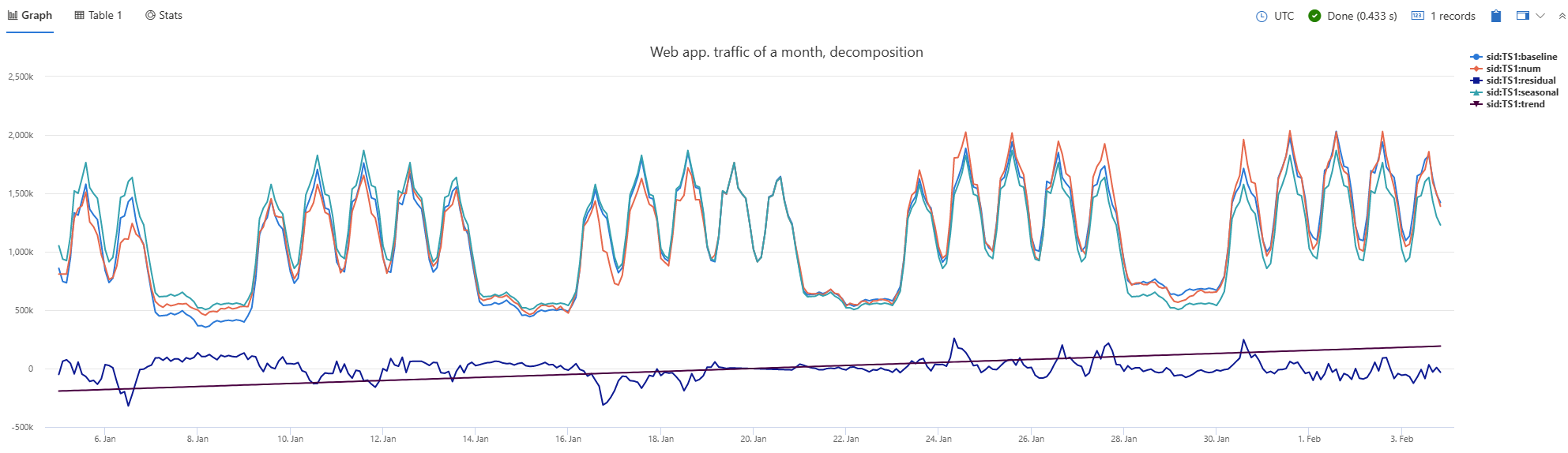

The following example renders a timechart with the title “Web app. traffic over a month, decomposition” that decomposes the data into baseline, seasonal, trend, and residual components.

let min_t = datetime(2017-01-05);

let max_t = datetime(2017-02-03 22:00);

let dt = 2h;

demo_make_series2

| make-series num=avg(num) on TimeStamp from min_t to max_t step dt by sid

| where sid == 'TS1' // select a single time series for a cleaner visualization

| extend (baseline, seasonal, trend, residual) = series_decompose(num, -1, 'linefit') // decomposition of a set of time series to seasonal, trend, residual, and baseline (seasonal+trend)

| render timechart with(title='Web app. traffic over a month, decomposition')

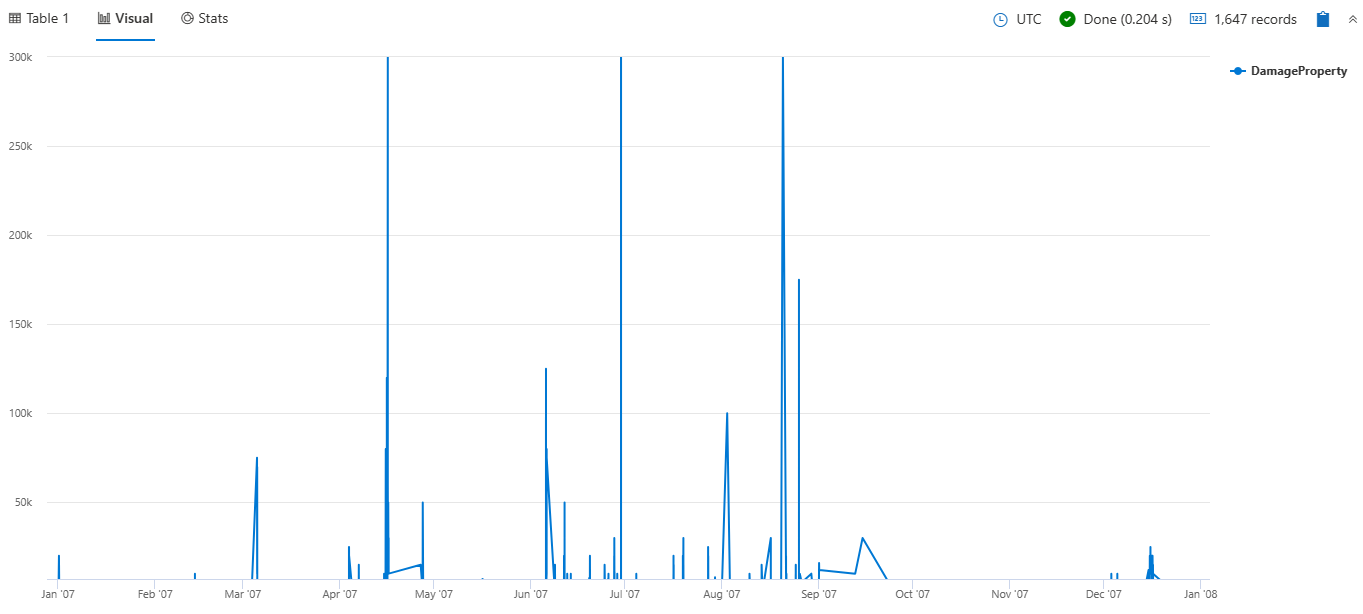

Label a timechart

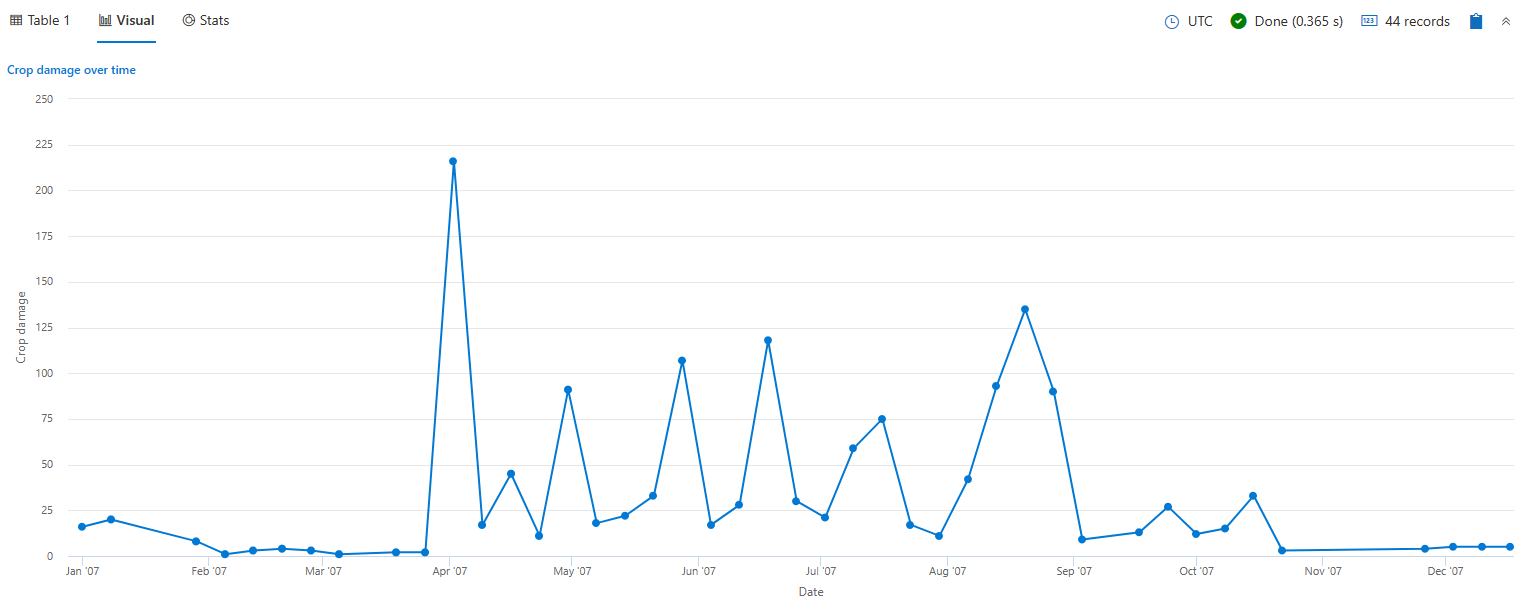

The following example renders a timechart of the weekly count of storm events in 2007 that caused crop damage. The x-axis is labeled “Date” and the y-axis is labeled “Crop damage”.

StormEvents

| where StartTime between (datetime(2007-01-01) .. datetime(2007-12-31))

and DamageCrops > 0

| summarize EventCount = count() by bin(StartTime, 7d)

| render timechart

with (

title="Crop damage over time",

xtitle="Date",

ytitle="Crop damage",

legend=hidden

)

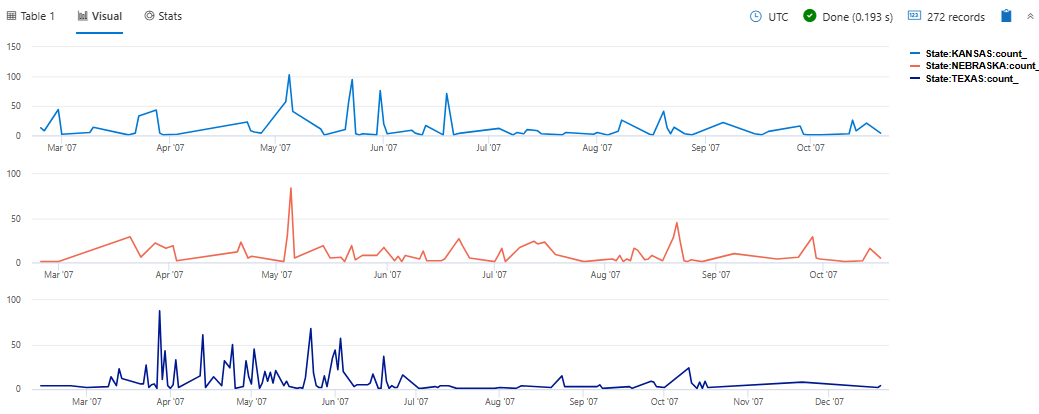

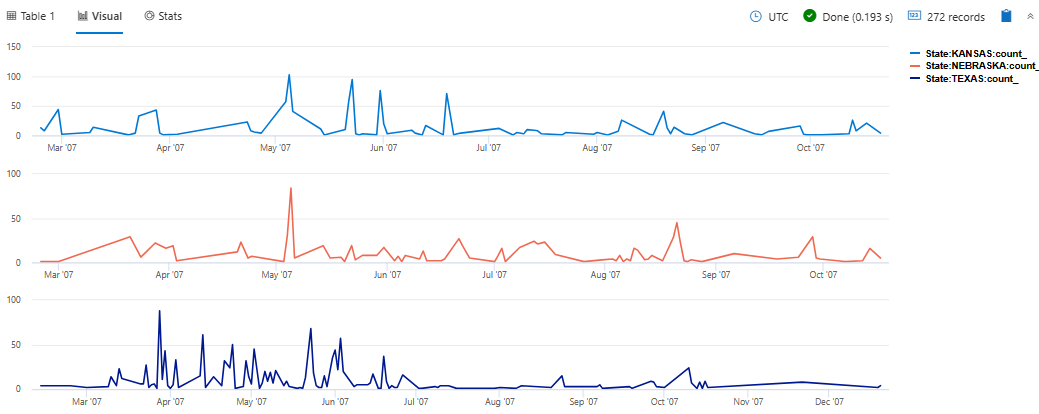

View multiple y-axes

The following example renders daily hail events in the states of Texas, Nebraska, and Kansas. The visualization uses the ysplit property to render each state’s events in separate panels for comparison.

StormEvents

| where State in ("TEXAS", "NEBRASKA", "KANSAS") and EventType == "Hail"

| summarize count() by State, bin(StartTime, 1d)

| render timechart with (ysplit=panels)
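As a hedged variant of the query above, the same data can be drawn in a single chart with one y-axis per series by using the axes value from the ysplit property table. Only the ysplit value changes; the table and column names are those of the StormEvents sample used in this section.

```kusto
StormEvents
| where State in ("TEXAS", "NEBRASKA", "KANSAS") and EventType == "Hail"
| summarize count() by State, bin(StartTime, 1d)
| render timechart with (ysplit=axes)   // one chart, a separate y-axis for each state
```

Separate axes can make series with very different magnitudes easier to compare, at the cost of a busier chart than the panels layout.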


Example

The following example renders a timechart with a title “Web app. traffic over a month, decomposing” that decomposes the data into baseline, seasonal, trend, and residual components.

let min_t = datetime(2017-01-05);

let max_t = datetime(2017-02-03 22:00);

let dt = 2h;

demo_make_series2

| make-series num=avg(num) on TimeStamp from min_t to max_t step dt by sid

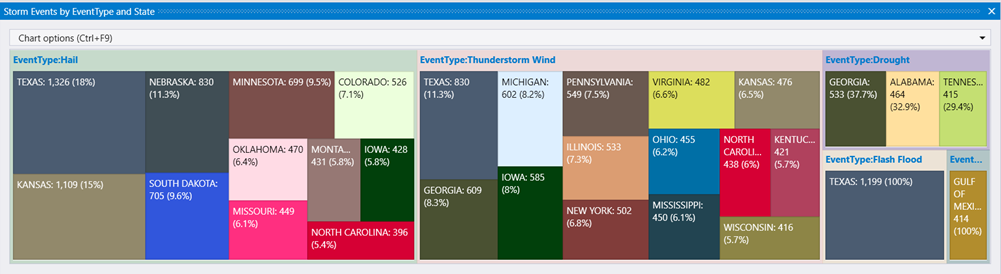

| where sid == 'TS1' // select a single time series for a cleaner visualization